When thinking about potential future technology, one way to divide it is into two categories: probable and speculative. Probable future technology involves extrapolating existing technology into the future, such as imagining what advanced computers might be like. This category also includes technology that we know is possible but have not yet mastered, like fusion power. For these technologies the question is more “when” than “if.”


Speculative technology, however, may or may not even be possible within the laws of physics. Such technology is usually highly disruptive and seems magical in nature, but it would be incredibly useful if it existed. Common technologies in this group include faster-than-light travel or communication, time travel, zero-point energy, cold fusion, anti-gravity, and propellantless thrust. I tend to think of these as science fiction technologies, not just speculative ones. The big question for these phenomena is how confident we are that they are impossible within the laws of physics. They would all be awesome if they existed (well, maybe not time travel; that one is tricky), but I am not holding my breath for any of them. If I had to bet, I would say none of these exist.

That last one, propellantless thrust, does not usually get as much attention as the other items on the list. Science fiction rarely discusses the technology explicitly, but it is often portrayed and simply taken for granted. Star Trek’s “impulse drive,” for example, seems to lack any propellant. Any ship that zips into orbit like the Millennium Falcon is likely also using some combination of anti-gravity and propellantless thrust. It certainly doesn’t have large fuel tanks or display any exhaust similar to a modern rocket.

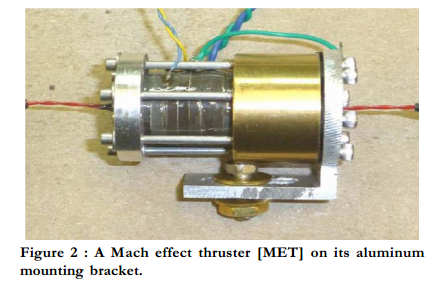

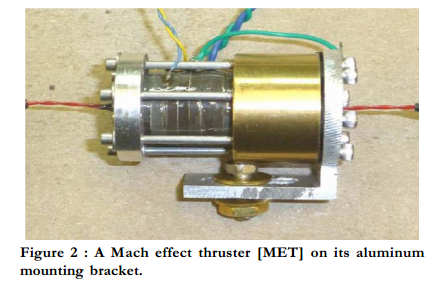

In recent years NASA has tested two speculative technologies that claim to produce thrust without propellant: the EM drive and the Mach Effect thruster (MET). For some reason the EM drive received more media attention (including from me), but the MET was actually the more interesting claim. All existing forms of internal thrust involve throwing something out the back end of the ship. Conservation of momentum means that there will be an equal and opposite reaction, and the ship will be pushed in the opposite direction. This is your basic rocket. We can become more efficient by accelerating the propellant to higher and higher velocity, so that we get maximal thrust from each atom of propellant the ship carries, but there is no escape from the basic physics. Ion drives are perhaps the most efficient thrusters we have, because they accelerate charged particles to very high speeds (tens of kilometers per second), but they produce very little thrust. So they are good for moving ships around in space but cannot get a ship off the surface of the Earth.
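The rocket principle in the paragraph above can be written as two standard textbook relations, offered here as a worked illustration rather than anything from the original post. The symbols are introduced just for this sketch: $F$ is thrust, $\dot{m}$ is the mass of propellant expelled per second, $v_e$ is the exhaust speed, $m_0$ and $m_f$ are the ship's mass before and after the burn, and $\Delta v$ is the resulting change in the ship's velocity.

```latex
\begin{aligned}
F &= \dot{m}\, v_e \\
\Delta v &= v_e \ln\frac{m_0}{m_f}
\end{aligned}
```

The first line ignores the small pressure term at the nozzle; the second is the Tsiolkovsky rocket equation. Each kilogram of propellant delivers momentum $v_e$, which is why faster exhaust gets more out of every atom carried. An ion drive has a very large $v_e$ but a tiny $\dot{m}$, so its thrust $F$ stays small.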


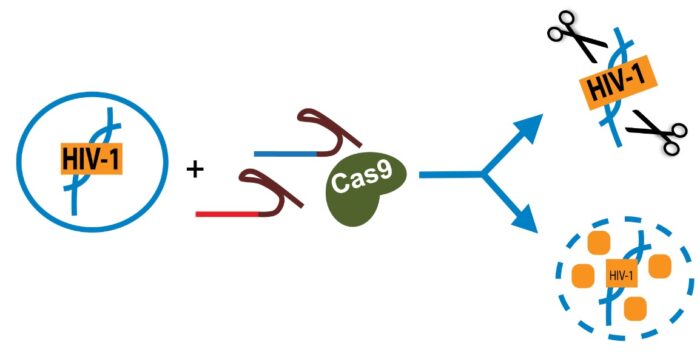

CRISPR has been big scientific news since it was introduced in 2012. The science actually goes back to 1987, but the CRISPR/Cas9 system was patented in 2012, and its developers won the Nobel Prize in Chemistry in 2020. The system gives researchers the ability to quickly and cheaply make changes to DNA by seeking out and matching a desired sequence and then making a cut in the DNA at that location. This can be done to inactivate a specific gene or, using the cell’s own repair machinery, to insert a gene at that location. This is a massive boon to genetics research, but it is also a powerful tool of genetic engineering.
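To make the “seek out, match, and cut” idea concrete, here is a toy Python sketch. It is a simplification, not how real gene-editing software works, and it rests on assumptions not stated in the post: it models the commonly used SpCas9 enzyme, which only cuts where the matched 20-letter target is immediately followed by an “NGG” motif (the PAM), and it places the cut 3 bases upstream of that motif. The function names `find_cut_sites` and `cut`, and the example guide sequence, are hypothetical and made up for this illustration.

```python
from typing import List, Tuple

# Toy model of CRISPR/Cas9 targeting (illustrative only).
# Assumptions: SpCas9-style rules, a guide that must match the DNA exactly,
# an "NGG" PAM right after the match, and a cut 3 bases upstream of the PAM.
# Only the given strand is searched.


def find_cut_sites(dna: str, guide: str) -> List[int]:
    """Return 0-based positions (between bases) where the toy Cas9 would cut."""
    dna, guide = dna.upper(), guide.upper()
    n = len(guide)
    sites = []
    # Each candidate needs room for the full guide match plus a 3-base PAM.
    for start in range(len(dna) - n - 2):
        target = dna[start:start + n]
        pam = dna[start + n:start + n + 3]
        if target == guide and pam[1:] == "GG":  # the "N" in NGG can be any base
            sites.append(start + n - 3)          # cut 3 bases before the PAM
    return sites


def cut(dna: str, site: int) -> Tuple[str, str]:
    """Split the DNA into two pieces at a cut site, like a double-strand break."""
    return dna[:site], dna[site:]


if __name__ == "__main__":
    guide = "GACGTTACCGGATCAAGCTT"          # hypothetical 20-letter guide
    dna = "AAAA" + guide + "TGG" + "CCCC"   # target followed by a TGG PAM
    for site in find_cut_sites(dna, guide):
        print(site, cut(dna, site))
```

In a real cell, targets can sit on either DNA strand, and Cas9 can tolerate a few mismatches (one source of off-target cuts); this sketch ignores both.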


For the last two decades, electricity demand in the US has been fairly flat. While it has increased overall, the growth has been very slow. This has been largely attributed to the fact that as the use of electrical devices has increased, the efficiency of those devices has also increased. LED bulbs, better building insulation, and more energy-efficient appliances have largely offset the increased demand. However,

Ah, the categorization question again. This is an endless, but much needed, endeavor within human intellectual activity. We have the need to categorize things, if for no other reason than we need to communicate with each other about them. Often skeptics, like myself, talk about conspiracy theories or grand conspiracies. We also often define exactly what we mean by such terms, although not always exhaustively or definitively. It is too cumbersome to do so every single time we refer to such conspiracy theories. To some extent there is a cumulative aspect to discussions about such topics, either here or, for example,

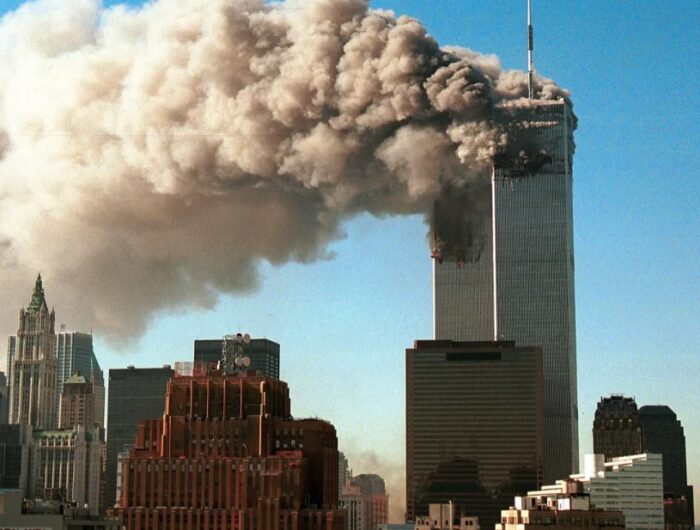

In response to a recent surge in interest in alien phenomena and claims that the US government is hiding what it knows about extraterrestrials, the Pentagon established an office to investigate the question: the All-Domain Anomaly Resolution Office (AARO). It has recently released


Like it or not, we are living in the age of artificial intelligence (AI). Recent advances in large language models, like ChatGPT, have helped put advanced AI in the hands of the average person, who now has a much better sense of how powerful these AI applications can be (and perhaps also their limitations). Even though they are narrow AI, not sentient in a human way, they can be highly disruptive. We are about to go through the first US presidential election where AI may play a significant role. AI has revolutionized research in many areas, performing months or even years of research in mere days.

I love to follow kerfuffles between different experts and deep thinkers. It’s great for revealing the subtleties of logic, science, and evidence. Recently there has been an interesting online exchange between a physicist science communicator (

When I use my virtual reality gear I do practically zero virtual walking, meaning that I don’t have my avatar walk while I am not walking. I generally play standing up, which means I can move around the space in my office mapped by my VR software, so I am physically walking to move in the game. If I need to move beyond the limits of my physical space, I teleport: I point to where I want to go and instantly move there. The reason for this is that virtual walking gives me severe motion sickness, especially if there is even the slightest up-and-down movement.

Amid much controversy, the Alabama State Supreme Court ruled that

On December 11, 1972, Apollo 17 soft-landed on the lunar surface, carrying astronauts Gene Cernan and Harrison Schmitt. This was the last time anything American soft-landed on the moon, over 50 years ago. It seems amazing that it’s been that long. On February 22, 2024, the
