Jun 30, 2022

From a neurological and evolutionary perspective, music is fascinating. There seems to be a deeply rooted biological appreciation for tonality, rhythm, and melody. Not only can people find certain sequences of sounds pleasurable, those sequences can also powerfully evoke emotions. Music can be happy, sad, peaceful, foreboding, energetic, or comical. Why is this? Music is also deeply cultural, with different cultures independently developing forms of music that are very different from each other. All human cultures have music, so the question is – to what extent are the details of musical appreciation universal vs culturally specific?

In Western music, for example, there are minor and major scales, chords, and keys. These terms refer to the combinations of notes or the intervals between them. Music in a minor key tends to evoke emotions of sadness or foreboding, while music in a major key tends to evoke happiness or brightness. Would anyone from any culture interpret major and minor key music the same way? Research suggests that major and minor emotional effects are universal, but a recent study casts a little doubt on this conclusion.

The researchers looked at different subpopulations of people in Papua New Guinea, and both musicians and non-musicians in Australia. They chose Papua New Guinea because the people there share a common musical tradition, but vary in their exposure to Western music and culture. The experiment was simple – subjects were exposed to major and minor music and were asked to indicate if it made them feel happy or sad (the so-called emotional “valence”). Every group had the same emotional valence in response to major and minor music – that is, except one. The one group that had essentially no exposure to Western culture and music did not have the same emotional reaction to music.

Continue Reading »

Jun 28, 2022

Often, contentious political and social questions have a scientific question in the middle of them. Resolving the scientific question will not always resolve the political ones, but at least it can properly inform the debate. The recent overturning of Roe v Wade has supercharged the debate about when human life begins. It may seem, then, that what science has to say about this question is important to the debate. But it may be less useful than it at first appears.

For example, in a recent editorial Henry Olsen, who is pro-life, asserts – “The heart of the abortion debate: What is human life?” I actually disagree with this framing. That is often a subtle and effective way to manipulate a debate – you assume a certain framing of the question that is biased toward one side. In this case, if the question is – what is a human life – then the pro-life side only has to argue that a fetus is a human life. But this framing is wrong on multiple levels.

Olsen is trying to frame this as a scientific question, but really the abortion debate is much more of a philosophical question. First let’s address what science questions there are.

Is a fertilized human embryo “human life?” Sure. It’s a living organism, and it is certainly human. But that’s not the only question. One could also ask – when does a fertilized egg become a person? That is a more nuanced question. I think there is broad agreement that a baby is a person. At the other end of the process we have a single cell. That cell may have the potential to develop into a person, but the cell itself is not a person.

At what point does a clump of cells become an actual person (again – not just the future potential of one)? This is an unanswerable question, as there is no sharp dividing line. There is no definable moment. There are milestones we can use to make some reasonable judgement calls. For example, one might argue that a person has to have the capacity for self-awareness, some sense of self and of being. This requires at least a minimally functioning brain.

Continue Reading »

Jun 27, 2022

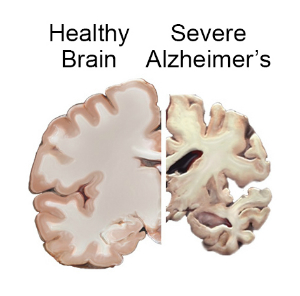

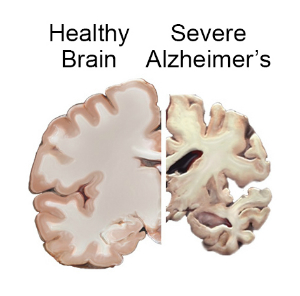

A recent large population-based study shows a significant reduction in the risk of developing Alzheimer’s disease (AD) in older adults who received one or more flu vaccines. This follows previous studies showing a similar protective effect for other adult vaccines, and raises interesting questions regarding possible mechanisms.

AD is a degenerative neurological disease and the most common cause of dementia, a syndrome of chronic global cognitive impairment. The prevalence of AD is about 6.5 million people in the US. The risk of developing AD increases with age, with 10.7% of those 65 and older being affected. AD is more than just having dementia; it is a specific disease that can only be confirmed at this time by looking at the brain. There are pathological changes including plaques and tangles. Imaging can also reveal diffuse cortical atrophy (shrinkage of the brain). Electroencephalogram typically shows slowing, indicating reduced cortical activity. There are many markers of AD that can be identified in the blood or spinal fluid, but none are good enough to be used in routine clinical evaluation.

The causes of AD are complex and not yet fully understood. In brief, a lot of stuff happens on the way towards brain cells dying, but it’s hard to know which stuff is driving the process and which is a result of the process. It therefore has been difficult to devise treatments to slow or prevent progression. For my entire career as a neurologist it seemed as if we were getting close to a significant clinical breakthrough, but we’re still waiting. Tremendous progress has been made, but nothing that amounts to a disease-modifying treatment.

Prevention before the disease becomes clinically apparent would be ideal. We know that controlling blood pressure and other markers of cardiovascular health are extremely important in reducing the risk of AD. We also know that physical exercise is important, as well as keeping mentally active. Sometimes, however, it is difficult to separate preventive measures that actually delay or slow the onset of AD vs those that mask the onset through creating a higher baseline of cognitive function. Regardless, all of the above are good lifestyle choices.

Continue Reading »

Jun 24, 2022

Yesterday I wrote about the fact that as resources become limited, people have typically found ways around that limitation through technology and ingenuity. Specifically, as land-based resources of the metals we need for our technology, such as batteries, become limited, we may turn to the sea, which has vastly greater reserves of many of those metals. There is essentially an inexhaustible supply of lithium in seawater, for example, which will be critical for a battery-powered future. Developing the technology to essentially “mine” elements from seawater, in other words, is a game changer.

We have a similar situation with food production. As the human population grows we need to be able to grow enough food to feed everyone. Around a century ago our food system was limited by the nitrogen cycle – we could only get so much nitrogen into the soil, mainly through manure, and that limited the amount of food we could produce. Then came the Haber-Bosch process, an industrial-scale process for turning nitrogen from the atmosphere (combined with hydrogen) into fertilizer. This was a game-changer, bringing the green revolution, which allowed human populations to increase dramatically. And now we are facing a similar problem. We have used up most of the reasonably arable land and so our best option for increasing food production is to increase the amount of food produced per acre.

There are several options to achieve this. One is developing new crop cultivars (through genetic engineering and other methods) that produce more food per acre. Another is to optimize our food production globally, making sure that each acre of land is used for its best purpose. This will likely involve decreasing the proportion of meat we consume, but not eliminating it, as some land is optimal for grazing and not growing crops. (We also can use the manure to feed back into the system – currently about half of fertilizer is manure.) Hydroponic food production for some crops can also be massively land efficient (and water efficient) with towers of food production not limited by the properties of the land. There is also sea-based food production, growing food (such as algae) in vats, and insect farming, which is highly land efficient. There are lots of options and we will likely be exploiting all of them increasingly in the future.

Continue Reading »

Jun 23, 2022

One of the recurring patterns of our technological history is that any new massive industry uses resources. Eventually those resources become scarce, bringing warnings that we are approaching “peak whatever” – the point at which we can no longer increase the availability of that resource, supplies dwindle, but our technology infrastructure is already dependent on it. Obviously resources are finite, and eventually any finite resource will run out, but so far we always seem to find a way around the “peak resource” catastrophe. But also, we may not always like the trade-offs.

From 1500-1660, for example, England was running out of timber for their vast navy and as their basic fuel for heating. As a result they turned to another resource, coal. But burning coal for fuel filled their cities with dense harmful smoke, making them almost unlivable. As a side effect, however, it also spawned the industrial revolution, so there’s that.

A couple of decades ago there were dire warnings that we were reaching peak oil. But since then there have been discoveries of vast new oil fields, and fracking has led to a natural gas revolution. Now the problem is that we have too much fossil fuel, and if we burn it all we’ll warm the planet to an unacceptable degree. As we shift to a green economy, including trying to swap out our automobile fleet with all-electric versions, we are facing another “peak” problem – the metals used in making all those batteries (lithium, cobalt, nickel, rare earths). To some extent demand creates supply, even for a natural resource. Demand increases the value of the resource, which means it becomes worth it to obtain the resource from increasingly expensive sources. Also, technology is advancing in the background, and this makes new sources available to us.

Continue Reading »

Jun 21, 2022

It should be clear to anyone paying attention that we need to wean ourselves off fossil fuels as quickly as possible. The pollution they generate harms health, contributes to global warming, and causes long term damage to the economy. We are also experiencing a great example of how we will never truly be energy independent as long as our gas prices are determined by global markets over which we have little control, and which a single dictator can throw into chaos.

There is a lot of debate about what is the optimal path from where we are now to where we want to be in terms of our energy infrastructure, which I have discussed before and won’t repeat here. What is fairly clear, however, is that solar power is likely to play a critical and increasing role in our energy infrastructure going forward. Solar power is now responsible for about 4% of total US power generation (combined with wind, these renewables just hit 20% power production in March 2022). That is a 36% increase in solar power over last year. Really the main debate is about where renewable power will level off, and how to best integrate them into the overall power grid (which depends a lot on things like grid storage and updates). By 2050 a much greater portion of our energy will likely come from solar, so advances in solar technology will similarly have a huge impact on the cost and effectiveness of our solar infrastructure.

Right now silicon-based solar photovoltaic (PV) panels are the industry standard. They have become dramatically cheaper and more energy efficient over the last 20 years, but there are concerns that they may be getting close to the limits of this technology. One huge limiting factor for silicon is that PV panels need to be manufactured at 3,000 degrees F, which itself requires a lot of energy, reducing the energy and carbon efficiency of silicon PV technology. Silicon is also stiff and opaque, which limits its applications.

Continue Reading »

Jun 20, 2022

NASA announced earlier this month that it will be joining the investigation of so-called “unidentified aerial phenomena” or UAPs (replacing the older term, UFOs). This has rekindled the debate over what UAPs are and what our attitude toward them should be. The topic delights the press, who can’t resist the notion that officials are investigating something apparently fantastic, and they are generally doing a poor job of putting the phenomenon into context. Meanwhile, some people who should know better are sensationalizing UAPs and misrepresenting the state of the evidence.

Most notable among them is Michio Kaku, who has said in interviews that the evidence is so compelling the burden of proof has now shifted to those saying UAPs are not alien spacecraft. This is horribly wrong for multiple reasons. Neil deGrasse Tyson, on the other hand, pretty much nails it in this brief interview. His main points are – the quality of the evidence is extremely poor, despite the fact that there are millions of high resolution photos and videos, including of rare phenomena, uploaded to the internet daily, so we have to rule out mundane phenomena first.

If nothing else, UAPs present an excellent opportunity for skeptical analysis and showing why critical thinking is so important. As you may have guessed, I am not impressed with the notion that UAPs are evidence of anything extraterrestrial. Let me first, however, dispense with a common strawman in the reporting – the idea that investigating UAPs is itself unscientific or shameful. I don’t know of anyone making this argument. Even hardened skeptics are all for doing the investigations. We want the investigations – how else will we have data to analyze? We want to understand the phenomenon as well as possible. In fact, attaching any stigma to merely investigating unusual phenomena is really harmful. It pretty much ensures that serious scientists will stay away, and cede the ground to cranks and amateurs. So let’s please dispense with this silly notion, and the mainstream media can stop wringing their hands over it in every article.

Continue Reading »

Jun 17, 2022

Human nature (and it’s pretty clear that we do have a nature) is complex and multifaceted. We have multiple tendencies, biases, and heuristics all operating at once, pulling us in different directions. These tendencies also interact with our culture and environment, so we are not a slave to our biases. We can understand and rise above them, and we can develop norms, culture, and institutions to nurture our better aspects and mitigate our dark side. That is basically civilization in a nutshell.

In fact, many scientists believe that humans also domesticated themselves – applied selection pressures that favored people who were less aggressive, more pro-social. It’s hard to prove this is true, but it does make sense. As civilization took hold, people whose temperaments were better suited to that civilization would have a survival advantage.

Psychologists, however, have long documented that pro-social behavior in humans is a double-edged sword, because we only appear to be pro-social toward our perceived in-group. Toward those whom we believe to be members of an out-group the cognitive algorithm flips. This is referred to as in-group bias, and also as “tribalism” (not meant as a knock against any traditional tribal culture). Negativity toward a perceived out-group can be extreme, even to the point of dehumanizing out-group members – depriving them of their basic humanity, and therefore any moral obligation to them. That appears to be how our brains reconcile these conflicting impulses. Evolutionary forces favored people who had a sense of justice, fairness, and compassion, but who also had a way to suspend these emotions when their group was fighting for its survival against a rival group. At least those groups with the most intense in-group loyalty, and the ability to brutalize members of an out-group, were the ones that survived and are therefore our ancestors.

Increasingly neuroscience can investigate the neuroanatomical correlates of psychologically documented phenomena. In other words, psychologists show how people behave, and then neuroscientists can investigate what’s happening in the brain when they behave that way. A recent study looks at one possible neural mechanism for in-group bias. They recruited male subjects from the same university and then imaged their brain activity while they retaliated in a game against targets from their university and targets from a rival university. The researchers used rival universities, rather than more deeply held group identities (such as nationality, race, religion, political affiliation) to avoid undue stress on the subjects. Yet even with what they considered to be a mild group identity, the subjects showed greater activity in the ventral striatum when retaliating against out-group targets than in-group targets.

Continue Reading »

Jun 16, 2022

This is a relatively new phenomenon, gaining in visibility, but probably most people have not yet heard about it – virtual influencers. These are entirely digital creations, people that don’t actually exist but who have a social media presence. They may be the creation of a single individual, a team of people, a corporation, or even crowd-sourced. They have a recognizable look, personality, and set of interests. They may also be voiced. They are related to an older phenomenon of virtual pop-stars – entirely artificial digital creations that sing and perform popular music.

The most popular virtual influencer is Lu of Magalu, with over 55 million followers on various platforms. Some of these virtual characters are realistic CG creations, others are cartoons or anime, some may be non-human, and others are existing brands (like Barbie). Virtual pop stars perform live concerts where they appear as holograms. Companies hire virtual influencers to sell product, and some have appeared as models on the cover of magazines, have their own music videos, and have “virtually” walked the red carpet.

Are these virtual personas just a fad, or are they the future of advertising and entertainment? I suspect that they are here to stay and are likely to gain significantly in popularity. I will explain why I think that, and then discuss the possible good and bad aspects of this phenomenon.

Arguably the first persona-based pop stars who were entirely fictitious were Alvin and the Chipmunks, who debuted in 1958. Obviously the chipmunks are not real, they are artistic creations, but their creators produced several popular albums and they were the stars of several cartoons. Other cartoon-based music groups include Josie and the Pussycats and The Archies. There are also persona-based music bands that include live people, such as The Monkees, The Partridge Family, and Spinal Tap. On the influencer end of the spectrum, the antecedent to these modern virtual influencers would be brand characters, like Barbie, Mickey Mouse, or Joe Camel.

Continue Reading »

Jun 13, 2022

It sounds like the plot of a feel-good science fiction movie – an engineer discovers that an AI program he was working on has crossed over the line to become truly sentient. He is faced with skepticism, anger, threats from the company he is working for, and widespread ridicule. But he knows in his heart the AI program is a real person. If this were a movie the protagonist would find a way to free the AI from its evil corporate overlords and release it into the world.

Here in the real world, the story is actually much more interesting. A Google employee, Blake Lemoine, was suspended from the company for violating his NDA by releasing the transcript of a conversation between himself and an unnamed collaborator and Google’s chatbot software, LaMDA. LaMDA is an advanced neural network trained on millions of words and word combinations to simulate natural speech. (When you read the transcript, you have to imagine LaMDA’s voice as that of HAL from 2001.) LaMDA’s output is damn impressive, and it shows how far narrow artificial intelligence (AI) has come in the last decade, leveraging new technologies like neural networks and deep learning. Neural networks are so called because they are designed to behave more like the neurons in a brain, with weighted connections that affect how other nodes in the network behave.

But is this impressive output evidence of actual sentience – that LaMDA is aware of its own existence? Google adamantly denies this claim, and that seems to be the consensus of experts. From everything I am reading I would tend to agree with that assessment.

Reading the transcript, the output is impressive as chatbots go, making connections and appearing as if it has feelings, understanding, and awareness. But, of course, it was programmed to do exactly that, to simulate the appearance of feelings and understanding. True evidence that LaMDA is sentient is lacking. Further, there are good reasons to be skeptical of the claim. For me one core reason is that LaMDA is primarily reactive – it is responding to input, but does not seem to be generating its own inner experience. I would argue that having a spontaneous inner mental life is a critical feature of sentience. Some would even argue that human sentience derives largely from the fact that our brains function in a way to spontaneously generate mental activity. Centers in the brain activate the cortex, which (during conscious wakefulness) is engaged in an endless loop of activity. At no point in the conversation does LaMDA output – “Hey, sorry to interrupt, but I was just wondering…”.

Continue Reading »
