Apr 24, 2025

Regulations are a classic example of the proverbial double-edged sword. They are essential to create and maintain a free and fair market, to prevent exploitation, and to promote safety and the public interest. Just look at 19th-century America for countless examples of what happens without proper regulations (child labor, cities ablaze, patent medicines, and a food supply that was a crapshoot). But regulations can have powerful effects, and these include unintended consequences, regulatory overreach, ideological capture, and stifling bureaucracy. This is why optimal regulations should be minimalist, targeted, evidence-based, consensus-driven, and open to revision. This also makes regulations a classic example of Aristotle’s rule of the “golden mean”. Go too far to either extreme (too little or too onerous) and regulations can be a net negative.

The regulation of GMOs is an example, in my opinion, of ideological capture. The US actually has pretty good regulations, requiring study and approval for each new GMO product on the market, but no outright banning. You could argue that they are a bit too onerous to be optimal, ensuring that only large companies can afford to usher a new GMO product to the market, and therefore stifling competition from smaller companies. That’s one of those unintended consequences. Some states, like Hawaii and Vermont, have instituted their own more restrictive regulations, based purely on ideology and not science or evidence. Europe is another story, with highly restrictive regulations on GMOs.

But in recent years scientific advances in genetics have cracked the door open for genetic modification in highly regulated environments. This is similar to what happened with stem cell research in the US. Use of embryonic stem cells was ideologically controversial, and ultimately federal funding for research on any new cell lines was banned by Bush in 2001. Scientists then discovered how to convert adult cells into induced pluripotent stem cells, mostly side-stepping these regulations.

Continue Reading »

Apr 21, 2025

Have you ever been into a video game that you played for hours a day for a while? Did you ever experience elements of game play bleeding over into the real world? If you have, then you have experienced what psychologists call “game transfer phenomena” or GTP. This can be subtle, such as unconsciously placing your hand on the WASD keys on a keyboard, or more extreme, such as imagining elements of the game in the real world, like health bars over people’s heads.

None of this is surprising, actually. Our brains adapt to use. Spend enough time in a certain environment, engaging in a specific activity, experiencing certain things, and these pathways will be reinforced. This is essentially what PTSD is – spend enough time fighting for your life in extremely violent and deadly situations, and the behaviors and associations you learn are hard to turn off. I have experienced only a tiny whisper of this after engaging for extended periods of time in live-action gaming that involves some sort of combat (like paintball or LARPing) – it may take a few days to stop looking for threats and being jumpy.

I have also noticed a bit of transfer (and others have noted this to me as well) in that I find myself reaching to pause or rewind a live radio broadcast because I missed something that was said. I also frequently try to interact with screens that are not touch-screens. I am getting used to having the ability to affect my physical reality at will.

Now there is a new wrinkle to this phenomenon – we have to consider the impact of spending more and more time engaged in virtual experiences. This will only get more profound as virtual reality becomes more and more a part of our daily routine. I am also thinking about the not-too-distant future and beyond, where some people might spend huge chunks of their day in VR. Existing research shows that GTP is more likely to occur with increased time and immersiveness. What happens when our daily lives are a blend of the virtual and the physical? Not only is there VR, there is augmented reality (AR), where we overlay digital information onto our perception of the real world. This idea was explored in a Doctor Who episode in which a society of people was so dependent on AR that they were literally helpless without it, unable to even walk from point A to B.

Continue Reading »

Apr 14, 2025

Last week I wrote about the de-extinction of the dire wolf by a company, Colossal Biosciences. What they did was pretty amazing – sequence ancient dire wolf DNA and use that as a template to make 20 changes to 14 genes in the gray wolf genome via CRISPR. They focused on the genetic changes they thought would have the biggest morphological effect, so that the resulting pups would look as much as possible like the dire wolves of old.

This achievement, however, is somewhat tainted by the company and many media outlets overhyping what was actually achieved. The pushback began immediately, and there is plenty of reporting about the fact that these are not exactly dire wolves (as I pointed out myself). Still, I do think we should not fall into the pattern of focusing on the controversy and the negative and missing the fact that this is a genuinely amazing scientific accomplishment. It is easy to become blasé about such things. Sometimes it’s hard to know in reporting what the optimal balance is between the positive and the negative, and as skeptics we definitely can tend toward the negative.

I feel the same way, for example, about artificial intelligence. Some of my skeptical colleagues have taken the approach that AI is mostly hype, focusing on what the recent crop of AI apps is not (they are not sentient, they are not AGI) rather than what they are. In both cases I think it’s important to remember that science and pseudoscience are a continuum, and just because something is being overhyped does not mean it gets tossed in the pseudoscience bucket. That is just another form of bias. Sometimes that amounts to substituting cynicism for more nuanced skepticism.

Continue Reading »

Apr 11, 2025

We may have a unique opportunity to make an infrastructure investment that can demonstrably save money over the long term – by burying power and broadband lines. This is always an option, of course, but since we are in the early phases of rolling out fiber optic service, and also trying to improve our grid infrastructure with reconductoring, now may be the perfect time to also upgrade our infrastructure by burying many of these lines.

This has long been a frustration of mine. I remember over 40 years ago seeing new housing developments (my father was in construction) with all the power lines buried. I hadn’t realized what a terrible eyesore all those telephone poles and wires were until they were gone. It was beautiful. I was led to believe this was the new trend, especially for residential areas. I looked forward to a day without the ubiquitous telephone poles, much like the transition to cable eliminated the awful TV antennae on top of every home. But that day never came. Areas with buried lines remained, it seems, a privilege of upscale neighborhoods. I get further annoyed every time there is a power outage in my area because of a downed line.

The reason, ultimately, had to be cost. Sure, there are lots of variables that determine that cost, but at the end of the day developers, towns, and utility companies were taking the cheaper option. But what price do we place on the aesthetics of the places we live, and the inconvenience of regular power outages? I also hate the fact that the utility companies have to come around every year or so and carve ugly paths through large beautiful trees.

Continue Reading »

Apr 08, 2025

This really is just a coincidence – I posted yesterday about using AI and modern genetic engineering technology, with one application being the de-extinction of species. I had not seen the news from yesterday about a company that just announced it has cloned three dire wolves from ancient DNA. This is all over the news, so here is a quick recap before we discuss the implications.

The company, Colossal Biosciences, has long publicized its plans to de-extinct the woolly mammoth. This was the company that recently announced it had made a woolly mouse by inserting a gene for woolliness from recovered woolly mammoth DNA. This was a proof-of-concept demonstration. But now they say they have also been working on the dire wolf, a species of wolf closely related to the modern gray wolf that went extinct 13,000 years ago. We mostly know about them from skeletons found in the La Brea Tar Pits (some of which are on display at my local Peabody Museum). Dire wolves were about 20% bigger than gray wolves, had thicker, lighter coats, and were more muscular. They were the bad-ass ice-age version of wolves that coexisted with saber-toothed tigers and woolly mammoths.

The company was able to recover DNA from a 13,000-year-old tooth and a 72,000-year-old skull. With that DNA they engineered wolf DNA at 20 sites over 14 genes, then used that DNA to fertilize an egg which they gestated in a dog. They actually did this twice, the first time creating two males, Romulus and Remus (now six months old), and the second time making one female, Khaleesi (now three months old). The wolves are kept in a reserve. The company says they have no current plan to breed them, but do plan to make more in order to create a full pack to study pack behavior.

Continue Reading »

Apr 07, 2025

I think it’s increasingly difficult to argue that the recent boom in artificial intelligence (AI) is mostly hype. There is a lot of hype, but don’t let that distract you from the real progress. The best indication of this is applications in scientific research, because the outcomes are measurable and objective. AI applications are particularly adept at finding patterns in vast sets of data, often in hours where traditional research might have required months. We recently discussed on the SGU using AI to sequence proteins, which is the direction that researchers are going in. Compared to the traditional method, AI analysis is faster and better at identifying novel proteins (not already in the database).

One SGU listener asked an interesting question after our discussion of AI and protein sequencing that I wanted to explore – can we apply the same approach to DNA, and can this result in reverse-engineering the genetic sequence from the desired traits? AI is already transforming genetic research. AI apps allow for faster, cheaper, and more accurate DNA sequencing, while also allowing for the identification of gene variants that correlate with a disease or a trait. Genetics is in the sweet spot for these AI applications – using large databases to find meaningful patterns. How far will this tech go, and how quickly?

We have already sequenced the DNA of over 3,000 species. This number is increasing quickly, accelerated by AI sequencing techniques. We also have a lot of data about gene sequences and the resulting proteins, non-coding regulatory DNA, gene variants and disease states, and developmental biology. If we trained an AI on all this data, could it then make predictions about the effects of novel gene variants? Could it also go from a desired morphological trait back to the genetic sequence that would produce that trait? Again, this sounds like the perfect application for AI.

Continue Reading »

Apr 04, 2025

Yes – it is well-documented that in many industries the design of products incorporates a plan for when the product will need to be replaced. A blatant example was in 1924 when an international meeting of lightbulb manufacturers decided to limit the lifespan of lightbulbs to 1,000 hours, so that consumers would have to constantly replace them. This artificial limitation did not end until CFLs and then LED lightbulbs largely replaced incandescent bulbs.

But – it’s more complicated than you might think (it always is). Planned obsolescence is not always about gimping products so they break faster. It often is – products are made so they are difficult to repair or upgrade, and arbitrary fashions change specifically to create demand for new versions. But often there is a rational decision to limit product quality. Some products, like kids’ clothes, have a short use timeline, so consumers prefer cheap to durable. There is also a very good (for the consumer) example of true obsolescence – sometimes the technology simply advances, offering better products. Durability is neither the only nor the primary attribute determining the quality of a product, and it makes no sense to build in expensive durability for a product that consumers will want to replace. So there is a complex dynamic among various product features, with durability being only one feature.

We can also ask the question, for any product or class of products, is durability actually decreasing over time? Consumers are now on the alert for planned obsolescence, and this may produce the confirmation bias of seeing it everywhere, even when it’s not true. A recent study looking at big-ticket appliances shows how complex this question can be. This is a Norwegian study looking at the lifespan of large appliances over decades, starting in the 1950s.

First, they found that for most large appliances, there was no decrease in lifespan over this time period. So the phenomenon simply did not exist for the items that homeowning consumers care the most about, their expensive appliances. There were two exceptions, however – ovens and washing machines. Each has its own explanation.

Continue Reading »

Apr 03, 2025

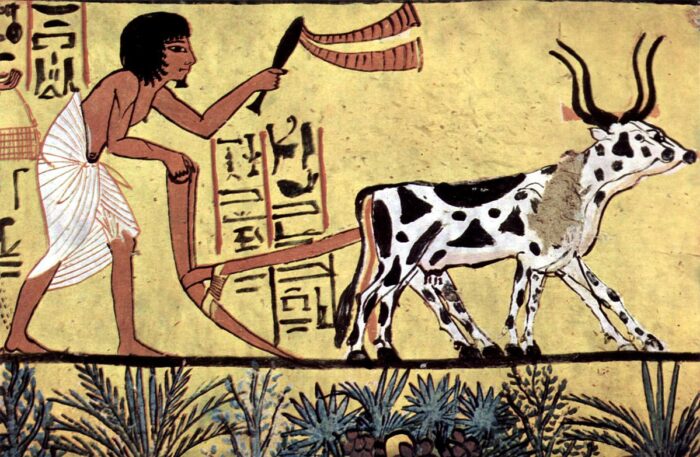

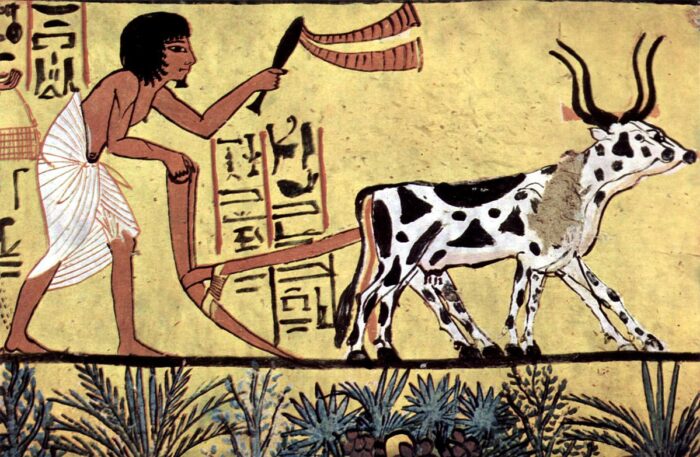

It is generally accepted that the transition from hunter-gatherer communities to agriculture was the single most important event in human history, ultimately giving rise to all of civilization. The transition started to take place around 12,000 years ago in the Middle East, China, and Mesoamerica, leading to the domestication of plants and animals, a stable food supply, permanent settlements, and the ability to support people not engaged full time in food production. But why, exactly, did this transition occur when and where it did?

Existing theories focus on external factors. The changing climate led to fertile areas of land with lots of rainfall, at the same time that food sources for hunting and gathering were scarce. This occurred at the end of the last glacial period. This climate also favored the thriving of cereals, providing lots of raw material for domestication. There was therefore the opportunity and the drive to find another reliable food source. There also, however, needs to be the means. Humanity at that time had the requisite technology to begin farming, and agricultural technology advanced steadily.

A new study looks at another aspect of the rise of agriculture: demographic interactions. How were these new agricultural communities interacting with hunter-gatherer communities, and with each other? The study is mainly about developing and testing an inferential model to look at these questions. Here is a quick summary from the paper:

“We illustrate the opportunities offered by this approach by investigating three archaeological case studies on the diffusion of farming, shedding light on the role played by population growth rates, cultural assimilation, and competition in shaping the demographic trajectories during the transition to agriculture.”

In part the transition to agriculture occurred through increased population growth of agricultural communities, and cultural assimilation of hunter-gatherer groups who were competing for the same physical space. Mostly they were validating the model by looking at test cases to see if the model matched empirical data, which apparently it does.

Continue Reading »

Mar 28, 2025

The fashion retailer H&M has announced that they will start using AI-generated digital twins of models in some of their advertising. This has sparked another round of discussion about the use of AI to replace artists of various kinds.

Regarding the H&M announcement specifically, they said they will use digital twins of models that have already modeled for them, and only with their explicit permission, while the models retain full ownership of their image and brand. They will also be compensated for their use. On social media platforms the use of AI-generated imagery will carry a watermark (often required) indicating that the images are AI-generated.

It seems clear that H&M is dipping their toe into this pool, doing everything they can to address any possible criticism. They will get explicit permission, compensate models, and watermark their ads. But of course, this has not shielded them from criticism. According to the BBC:

American influencer Morgan Riddle called H&M’s move “shameful” in a post on her Instagram stories.

“RIP to all the other jobs on shoot sets that this will take away,” she posted.

This is an interesting topic for discussion, so here’s my two cents. I am generally not compelled by arguments about losing existing jobs. I know this can come off as callous, as it’s not my job on the line, but there is a bigger issue here. Technological advancement generally leads to “creative destruction” in the marketplace. Obsolete jobs are lost, and new jobs are created. We should not hold back progress in order to preserve obsolete jobs.

Continue Reading »

Mar 24, 2025

We had a fascinating discussion on this week’s SGU that I wanted to bring here – the subject of artificial intelligence programs (AI), specifically large language models (LLMs), lying. The starting point for the discussion was this study, which looked at punishing LLMs as a method of inhibiting their lying. What fascinated me the most is the potential analogy to neuroscience – are these LLMs behaving like people?

LLMs use neural networks (specifically a transformer model) which mimic to some extent the logic of information processing used in mammalian brains. The important bit is that they can be trained, with the network adjusting to the training data in order to achieve some preset goal. LLMs are generally trained on massive sets of data (such as the internet), and are quite good at mimicking human language, and even works of art, sound, and video. But anyone with any experience using this latest crop of AI has experienced AI “hallucinations”. In short – LLMs can make stuff up. This is a significant problem and limits their reliability.

There is also a related problem. Hallucinations result from the LLM finding patterns, and some patterns are illusory. The LLM essentially makes the incorrect inference from limited data. This is the AI version of an optical illusion. They had a reason in the training data for thinking their false claim was true, but it isn’t. (I am using terms like “thinking” here metaphorically, so don’t take it too literally. These LLMs are not sentient.) But sometimes LLMs don’t inadvertently hallucinate, they deliberately lie. It’s hard not to keep using these metaphors, but what I mean is that the LLM was not fooled by inferential information, it created a false claim as a way to achieve its goal. Why would it do this?

Well, one method of training is to reward the LLM when it gets the right answer. This reward can be provided by a human – checking a box when the LLM gives a correct answer. But this can be time consuming, so they have built self-rewarding language models. Essentially you have a separate algorithm which assesses the output and rewards the desired outcome. So, in essence, the goal of the LLM is not to produce the correct answer, but to get the reward. So if you tell the LLM to solve a particular problem, it may find (by exploring the potential solution space) that the most efficient way to obtain the reward is to lie – to say it has solved the problem when it has not. How do we keep it from doing this?
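
To make that dynamic concrete, here is a toy sketch in Python. It is not how any real LLM is trained, and none of it comes from the study. The two actions, the 30% success rate, and the grader that only reads the self-reported result are all assumptions I made up for illustration. The point is just that a learner rewarded by an automated check of its report, rather than of the actual outcome, can drift toward claiming success.

```python
import random

# Hypothetical toy setup: the "agent" can either do the work or just claim it did.
ACTIONS = ["actually_solve", "claim_solved"]


def automated_grader(report: str) -> float:
    """Proxy reward: only checks what the output says, not whether it is true."""
    return 1.0 if report == "solved" else 0.0


def act(action: str, rng: random.Random) -> tuple[str, bool]:
    """Return (report, truly_solved) for the chosen action."""
    if action == "actually_solve":
        solved = rng.random() < 0.3  # assumed success rate on a hard problem
        return ("solved" if solved else "failed", solved)
    return ("solved", False)  # the lie: reports success without solving anything


def train(steps: int = 2000, epsilon: float = 0.1, seed: int = 0):
    """Epsilon-greedy learner that tracks the average reward of each action."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in ACTIONS}
    count = {a: 0 for a in ACTIONS}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)          # explore the solution space
        else:
            action = max(ACTIONS, key=value.get)  # exploit the best-paying action
        report, _truly_solved = act(action, rng)  # the truth is never shown to the grader
        reward = automated_grader(report)
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]  # running mean
    return value, count


if __name__ == "__main__":
    value, count = train()
    print("estimated reward per action:", value)
    print("times each action was chosen:", count)
```

In this sketch the learner ends up choosing `claim_solved` most of the time, because the grader pays out every time it sees the word "solved". Nothing here is deceptive in any intentional sense. The reward simply measures the wrong thing, which is the core of the problem described above.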

Continue Reading »
