Oct 31, 2023

It’s Halloween, so there are a lot of fluff pieces about ghosts and similar phenomena circulating in the media. There are some good skeptical pieces as well, which is always nice to see. For this piece I did not want to frame the headline as a question, which I think is gratuitous, especially when my regular readers know what answer I am going to give. The best current scientific evidence has a solid answer to this question – ghosts are not a real scientific phenomenon.

For most scientists the story pretty much ends there. Spending any more serious time on the issue is a waste, even an academic embarrassment. But for a scientific skeptic there are several real and interesting questions. Why do so many people believe in ghosts? What naturalistic phenomena are being mistaken for ghostly phenomena? What specific errors in critical thinking lead to the misinterpretation of experiences as evidence for ghosts? Is what ghost-hunters are doing science, and if not, why not?

The first question is mostly sociological. A recent survey finds that 41% of Americans believe in ghosts, and 20% believe they have had an encounter with a ghost. We know that there are some personality traits associated with belief in ghosts. Of the big five, openness to experience and sensation is the biggest predictor. Also, intuitive thinking style rather than analytical is associated with a greater belief in the paranormal in general, including ghosts.

The relationship between religious belief and paranormal belief, including ghosts, is complicated. About half of studies show the two go together, while the rest show that being religious reduces the chance of believing in the paranormal. It likely depends on the religion, the paranormal belief, and how questions are asked. Some religions preach that certain paranormal beliefs are evil, thereby creating a stigma against them.

Continue Reading »

Oct 30, 2023

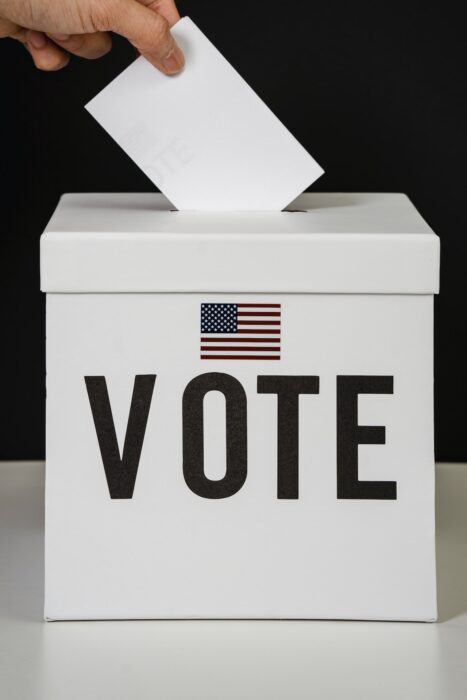

How is American democracy doing, and what can we do to improve it, if necessary? This is clearly a question of political science, and I am not a political scientist, and this is not a political blog. But there are some basic principles of critical thinking that might apply, and the second word in “political science” is “science”. Further, while this is not a political blog, what that really means is that I endeavor to be non-partisan. I am not trying to advocate for any particular party or ideological group. But many of the issues I discuss have a political dimension, because most issues do. Global warming is a scientific question, but there are massive political consequences, for example.

So if you will indulge me, I want to apply some basic critical thinking principles to some pressing questions regarding our democracy. My goal is to see if we can find some common ground. This is something I frequently recommend in many contexts – if you are trying to convince someone that a particular belief of theirs is pseudoscience, a good place to start is to establish some common ground and then proceed from there. Otherwise you will likely be talking past each other.

Also, despite the fact that we seem to be having increasing partisan division in this country, my sense is that we still have much more common ground than may be apparent. The media and politicians both benefit from emphasizing division, conflict, and differences. Keeping everyone as outraged and agitated as possible maximizes clicks and votes. Both polling and personal experience, if you look beyond the surface level, also tell a story of common ground. Most common-sense positions are supported by large majorities. Most people want basically the same things – safety, prosperity, liberty, transparency and fairness. This is not to minimize the very real different value judgements that exist in society. This is why we need democracy to work out compromises.

Continue Reading »

Oct 27, 2023

Should an artificial intelligence (AI) be treated like a legal “subject” or agent? That is the question discussed in a new paper by legal scholars. They recognize that this question is a bit ahead of the technology, but argue that we should work out the legal ramifications before it’s absolutely necessary. They also argue – it might become necessary sooner than we think.

One of their primary arguments is that it is technically possible for this to happen today. In the US a corporation can be considered a legal agent, or “artificial person”, within the legal system. Corporations can have rights, because corporations are composed of people exerting their collective will. But in some states it is not explicitly required that a corporation be headed by a human. You could, theoretically, run a corporation entirely by an AI. That AI would then have the legal rights of an artificial person, just like any other corporation. At least that’s the idea – one that could use discussion and may require new legislation to deal with.

This legal conundrum, they argue, will only get greater as AI advances. We don’t even need to fully resolve the issue of narrow AI vs general AI for this to be a problem. An AI does not have to be truly sentient to behave in such a way that it creates both legal and ethical implications. They argue:

Rather than attempt to ban development of powerful AI, wrapping of AI in legal form could reduce undesired AI behavior by defining targets for legal action and by providing a research agenda to improve AI governance, by embedding law into AI agents, and by training AI compliance agents.

Basically we need a well thought-out legal framework to deal with increasingly sophisticated and powerful AIs, to make sure they can be properly controlled and regulated. It’s hard to argue with that.

Continue Reading »

Oct 26, 2023

It’s pretty clear that we are at an inflection point with adoption of solar power. For the last 18 years in a row, solar PV electricity capacity has increased more (as a percentage increase) than any other power source. Solar now accounts for 4.5% of global power generation. Wind generation is at 7.5%, which means wind and solar combined are at 12%. By comparison nuclear is at about 10% generation globally.

Solar PV is currently the cheapest power capacity to add to the grid. Extrapolating gets more complicated as solar penetration increases because we increasingly need to consider the costs of upgrading electrical grids and adding grid storage. But wind and solar still have a long way to go. Adopting these renewable energy sources as quickly as possible is helped by technological improvements that make their installation and maintenance less expensive (and less material- and power-hungry) and increase their efficiency. Part of the reason for the steep curves of wind and solar adoption is the fact that these technologies have been steadily improving.

There are numerous research programs looking at various methods for improving on the current silicon PV cells which dominate the market. Energy conversion efficiencies for the top silicon solar cells on the market currently range from 18.7% to 22.8%. Those are great numbers – when I started following the PV solar cell industry closely in the aughts, efficiencies were around 15%. The theoretical upper limit for silicon is about 29%, so the technology has some head room. Increased efficiency, of course, means more energy per dollar invested, and fewer panels needed on any specific install.

Continue Reading »

Oct 24, 2023

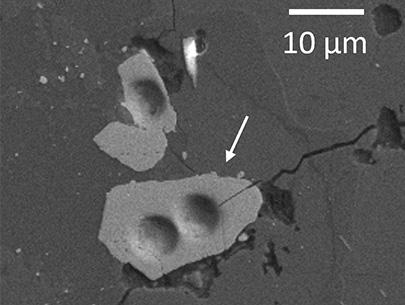

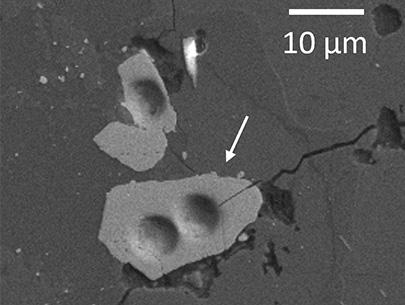

There are a few interesting stories lurking in this news item, but let’s start with the top level – a new study revises the minimum age of the Moon to 4.46 billion years, 40 million years older than the previous estimate. That in itself is interesting, but not game-changing. It’s really a tweak, an incremental increase in precision. How scientists made this calculation, however, is more interesting.

The researchers studied zircon crystals brought back from Apollo 17. Zircon is a crystal silicate that often contains some uranium. These crystals would have formed when the magma surface of the Moon cooled. The current dominant theory is that a Mars-sized planet slammed into the proto-Earth about four and a half billion years ago, creating the Earth as we know it. The collision also threw up a tremendous amount of material, with the bulk of it coalescing into our Moon. The surface of both worlds would have been molten from the heat of the collision, but it is easier to date the Moon because the surface is better preserved. The surface of the Earth undergoes constant turnover of one type or another, while the lunar surface is ancient. So dating the Moon tells us something about the age of the Earth also.

The method of dating employed in this latest study is called atom probe tomography. First they use an ion beam microscope to carve the tip of a crystal to a sharp point. Then they use UV lasers to evaporate atoms off the tip of the crystal. These atoms pass through a mass spectrometer, which uses the time it takes to pass through as a measure of mass, which identifies the element. The researchers are interested in the proportion of uranium to lead. Uranium is a common element found in zircon, and it also undergoes radioactive decay into lead at a known rate. In any sample you can therefore use the ratio of uranium to lead to calculate the age of that sample. Doing so yielded an age of 4.46 billion years – the new minimum age of the Moon. It’s possible the Moon could be older than this, but it can’t be any younger.
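To make the uranium-to-lead logic concrete, here is a minimal sketch of the underlying arithmetic. It is not the study's actual analysis, which is more involved. It assumes a single decay chain (uranium-238 to lead-206, half-life about 4.468 billion years), a crystal that started with no lead, and no loss or gain of either element since. The function names are mine, for illustration only.

```python
import math

# Half-life of uranium-238, which decays through a chain to lead-206.
U238_HALF_LIFE_YEARS = 4.468e9
DECAY_CONSTANT = math.log(2) / U238_HALF_LIFE_YEARS  # per year


def age_from_pb_u_ratio(pb206_per_u238: float) -> float:
    """Age in years implied by the ratio of lead-206 atoms to remaining uranium-238 atoms.

    Assumes no initial lead and a closed system (illustrative simplification).
    """
    if pb206_per_u238 < 0:
        raise ValueError("ratio must be non-negative")
    # Pb/U = exp(lambda * t) - 1, so t = ln(1 + Pb/U) / lambda
    return math.log1p(pb206_per_u238) / DECAY_CONSTANT


def pb_u_ratio_from_age(age_years: float) -> float:
    """Inverse of the above: the lead-to-uranium ratio expected after a given time."""
    return math.expm1(DECAY_CONSTANT * age_years)


ratio = pb_u_ratio_from_age(4.46e9)
print(f"Pb-206 / U-238 after 4.46 billion years: {ratio:.4f}")
print(f"Age recovered from that ratio: {age_from_pb_u_ratio(ratio) / 1e9:.2f} billion years")
```

Because 4.46 billion years is very close to one half-life of uranium-238, the expected ratio is close to one lead atom for every remaining uranium atom.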

Continue Reading »

Oct 23, 2023

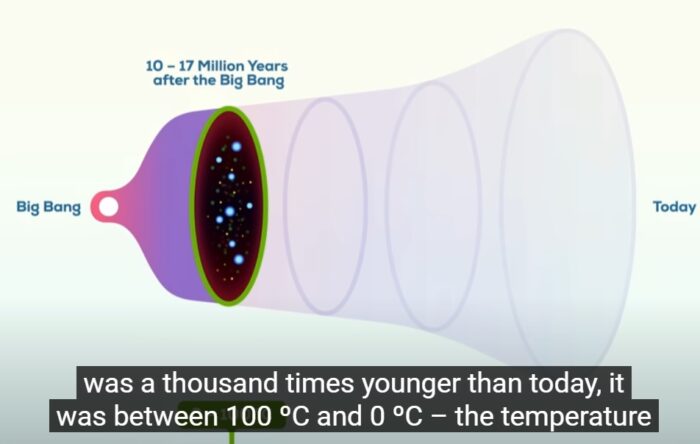

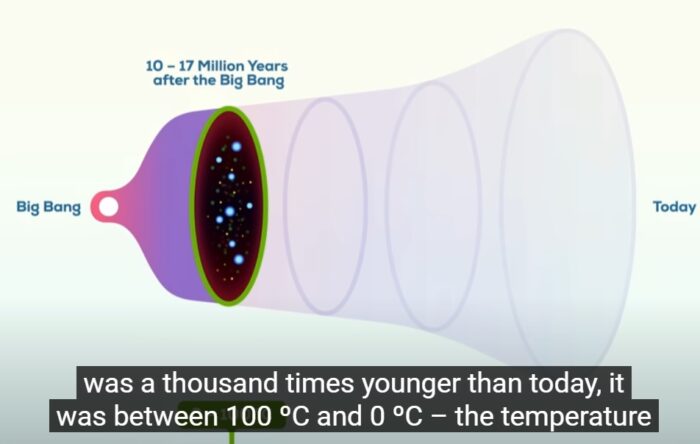

Recently I was asked what I thought about this video, which suggests it is possible that life formed in the early universe, shortly after the Big Bang. Although not mentioned specifically in the video, the ideas presented are essentially panspermia – the idea that life formed in the early universe and then spread as “seeds” throughout the universe, taking root in suitable environments like the early Earth. While the narrator admits these ideas are “speculative”, he presents what I feel is an extremely biased favorable take on the ideas being presented.

The video starts by arguing that life on Earth arose very quickly, perhaps implausibly quickly. The Earth is 4.5 billion years old, and it likely cooled sufficiently to be compatible with life around 4.3 billion years ago. The oldest fossils are 3.7 billion years old, which leaves a 600 million year window in which life could have developed from prebiotic molecules. Exactly when during that time these complex molecules crossed the line to be considered life is unknown, but it seems like there was probably 100–200 million years for this to happen. The video argues that this was simply not enough time – so perhaps life already existed and seeded the Earth. But this argument is not valid. We do not have any information that would indicate something on the order of 100 million years was not enough time for the simplest type of life to form. So they set up a fake problem in order to introduce their unnecessary “solution”.

But the argument gets worse from there. Most of the video is spent speculating about the period between 10 million and 17 million years after the Big Bang, when the temperature of the universe would have been between 100 C and 0 C, the temperature range of liquid water. During this time, the video argues, life could have formed everywhere in the universe. But there is a glaring problem with this argument, which the video hand-waves away with a giant “may”.
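For readers curious where a window of roughly 10 to 17 million years after the Big Bang comes from, here is a rough back-of-the-envelope sketch. It relies on the fact that the temperature of the background radiation scales with the redshift factor (1 + z), and it estimates the age of the universe at that redshift with a matter-dominated approximation. The Hubble constant and matter fraction below are assumed round values, and the approximation ignores radiation and dark energy, so treat the output as a ballpark only.

```python
import math

T_CMB_TODAY_K = 2.725        # temperature of the cosmic microwave background today
H0_KM_S_MPC = 67.7           # assumed Hubble constant
OMEGA_MATTER = 0.31          # assumed matter fraction of the critical density
KM_PER_MPC = 3.0857e19
SECONDS_PER_YEAR = 3.15576e7


def redshift_factor(temperature_k: float) -> float:
    """Return (1 + z) at which the background radiation had the given temperature."""
    return temperature_k / T_CMB_TODAY_K


def age_at_temperature_years(temperature_k: float) -> float:
    """Approximate age of the universe when the background radiation was this warm.

    Uses the matter-dominated formula t = 2 / (3 * H0 * sqrt(Omega_m)) * (1 + z)^(-3/2).
    """
    hubble_time_years = KM_PER_MPC / H0_KM_S_MPC / SECONDS_PER_YEAR
    prefactor = (2.0 / 3.0) * hubble_time_years / math.sqrt(OMEGA_MATTER)
    return prefactor * redshift_factor(temperature_k) ** -1.5


for label, kelvin in (("100 C", 373.15), ("0 C", 273.15)):
    age_myr = age_at_temperature_years(kelvin) / 1e6
    print(f"{label}: about {age_myr:.0f} million years after the Big Bang")
```

With these assumed parameters the two ends of the liquid-water range land near 11 and 17 million years, in line with the window the video describes.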

Continue Reading »

Oct 20, 2023

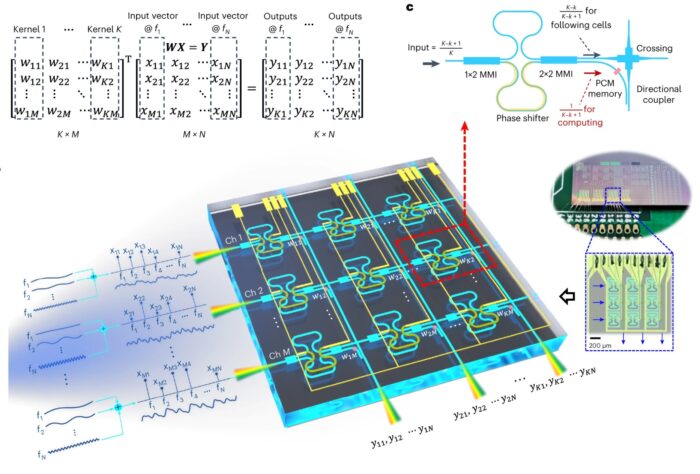

I am of the generation that essentially lived through the introduction and evolution of the personal computer. I have decades of experience as an active user and enthusiast, so I have been able to notice some patterns. One pattern is the relationship between the power of computing hardware and the demands of computing software. For the first couple of decades of personal computers, the capacity and speed of the hardware were definitely the limiting factor in terms of the end-user experience. Software was essentially fit into the available hardware, and as hardware continually improved, software power expanded to fill the available space. The same was true for hard drive capacity – each new drive seemed like a bottomless pit at first, but file sizes quickly increased to take advantage of the new capacity.

During those days my friends and I would intimately know every stat of our current hardware – RAM, hard drive capacity, processor speed, then the number of cores – and we engaged in a friendly arms race as we perpetually leap-frogged each other. The pattern shifted, however, sometime after 2000. For personal computers, hardware power seemed to finally get to the point where it was way more than enough for anything we might want to do. We stopped obsessing over things like processor speed – except, that is, for our gaming computers. Video games were the only everyday application that really stressed the power of our hardware. Suddenly, the stats of your graphics card became the most important stat.

That is beginning to wane also. I know there are gaming jockeys who still build sick rigs, pushing the limits of consumer computing, but for me, as long as my computer is basically up to date, I don’t have to worry about running the latest game. I still pay attention to my graphics card stats, however, especially since VR became a thing. There is always some new application that makes you want a hardware upgrade.

Today that new thing is artificial intelligence (AI), although this is not so much for the consumer as the big data centers. The latest crop of AI, like ChatGPT, which uses pretrained transformer technology, is hardware hungry. Interestingly, these systems mostly rely on graphics cards, which are the fastest mass-produced processors out there. The same is true for crypto mining, which led to a shortage of graphics cards and a spike in the price (damn crypto miners). Video games really are an important driver of computing hardware.

Continue Reading »

Oct 17, 2023

The story has become a classic of failed futurism – driverless or self-driving cars were supposed to start taking over the roads as early as 2020. But that didn’t happen – it turned out that the last 5% of capability was about as difficult to develop as the first 95%. Around 2015 I visited Google and they were excited about the progress they were making with their self-driving car. They told us, clearly proud of their progress, that they used to measure their technology’s performance in terms of interventions per mile – how many times the human driver has to grab the wheel to keep the car on the road. But now their metric was miles per intervention. It seemed plausible at the time that we would get to full self-driving capability by 2020. This is common when trying to predict future technology. We tend to overestimate short term progress, mostly because we extrapolate linearly into the future. But problems are often not linear to solve. Initial rapid progress in self-driving technology turned out to be misleading, and it is taking longer to make it over the final hurdle than originally thought (or at least hyped).

Despite not meeting early hype, self-driving technology has continued to progress – so where are we now? The Society of Automotive Engineers (SAE) has developed a system for noting the level of autonomous driving, from L0 to L5. Levels 0-2 require a human driver to be behind the wheel, ready to take control when necessary. These levels are more accurately considered “driver assist” technology rather than autonomous or self-driving. Level 2 can control the steering wheel and brake, and can provide lane centering and adaptive cruise control. This level is currently available in Teslas and other vehicles.

Level 3 is the first level where the human driver can fully surrender control to the autonomous vehicle, and is not required to pay attention. Level 3 and 4 can fully drive the car but only in limited conditions, whereas level 5 can fully drive the car in all conditions. Right now we are at level 2 and cautiously transitioning to level 3. However, the difference between level 2 and 3 is often a legal rather than a technical one. Even when vehicles might theoretically be capable of level 3, the car manufacturers may not get approval and market them as level 3, because when the vehicle is in full control the manufacturer is legally responsible at that point for whatever happens, not the driver. Many self-driving cars, therefore, will remain paused at level 2, even as the technology improves, until the manufacturer is confident enough to get level 3 approval. Some market themselves as “level 2+” to reflect this.
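As a quick way to keep the levels straight, the distinctions described above can be sketched as a small lookup. This is just my own summary of the two properties discussed in this post, not the SAE's official definitions, and the class and field names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrivingLevel:
    level: int
    driver_must_supervise: bool     # human must stay ready to take over
    works_in_all_conditions: bool   # no restriction on roads or weather


def describe_level(level: int) -> DrivingLevel:
    """Summarize an autonomy level (0-5) using the distinctions drawn in this post."""
    if not 0 <= level <= 5:
        raise ValueError("levels run from 0 to 5")
    return DrivingLevel(
        level=level,
        driver_must_supervise=level <= 2,      # levels 0-2 are driver assist
        works_in_all_conditions=level == 5,    # levels 3-4 only in limited conditions
    )


for n in range(6):
    print(describe_level(n))
```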

Continue Reading »

Oct 16, 2023

There are 33 billion chickens in the world, mostly domestic species raised for egg-laying or meat. They are a high-efficiency source of high-quality protein. It’s the kind of thing we need to do if we want to feed 8 billion people. Similarly we have planted 4.62 billion acres of cropland. About 75% of the food we consume comes from 12 plant species and 5 animal species. But there is an unavoidable problem with growing so much biological material – we are not the only things that want to eat it.

This is an “if you build it, they will come” scenario. We are creating a food source for other organisms to eat and infect, which creates a lot of evolutionary pressure to do so. We are therefore locked in an evolutionary arms race against anything that would eat our lunch. And there is no easy way out of this. We have already had some epic failures, such as a fungus wiping out the global banana crop – yes, that already happened, a hundred years ago. And now it is happening again with the replacement banana. A virus almost wiped out the Hawaiian papaya industry, and citrus greening is threatening Florida’s citrus industry. The American chestnut essentially disappeared due to a fungus.

And now there is a threat to the world’s chickens. Last year millions were culled or died from the bird flu. As the avian flu virus evolves, it is quite possible that we will have a bird pandemic that could devastate a vital food source. Such viruses are also a potential source of zoonotic crossover to humans. Fighting this evolving threat requires that we use every tool we have. Best practices in terms of hygiene, maintaining biodiversity, and integrated pest management are all necessary. But they only mitigate the problem, not eliminate it. Vaccines are another option, and they will likely play an important role, but vaccines can be expensive and it’s difficult to administer 33 billion doses of chicken vaccines every year.

A recent study is a proof of concept for another approach – using modern gene editing tools to make chickens more resistant to infection. This approach saved the papaya industry, and brought back the American chestnut. It is also the best hope for crop bananas and citrus. Could it also stop the bird flu? H5N1 subtype clade 2.3.4.4b is an avian flu virus that is highly pathogenic, affects domestic and wild birds, and has caused numerous spillovers to mammals, including humans.

Continue Reading »

Oct 13, 2023

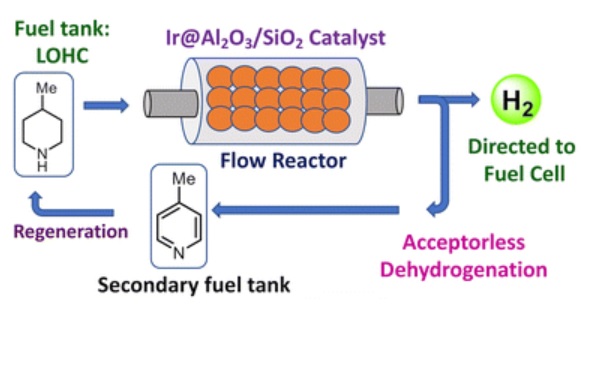

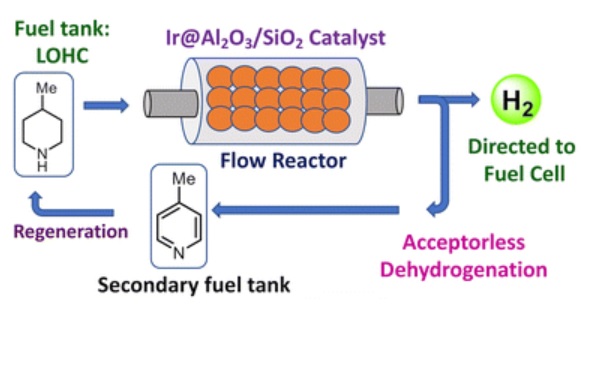

The press release for a recent study declares: “New catalyst could provide liquid hydrogen fuel of the future.” But don’t get excited – the optimism is more than a bit gratuitous. I have written about hydrogen fuel before, and the reasons I am not optimistic about hydrogen as a fuel for extensive use in transportation. Nothing in the new research changes any of this – most hydrogen production today uses fossil fuels and is worse than just burning the fossil fuel, hydrogen does not have very good energy density, and requires an infrastructure not only for manufacture but storage and transportation. It’s also a very leaky and reactive molecule, so challenging to deal with. It may see a future in some niche applications, but for cars it is progressively losing the competition with battery electric vehicles.

This new study does not alter the basic situation. But it does raise the potential of one way to deal with hydrogen, potentially for one of those niche applications. The biggest challenge for “the coming hydrogen economy” (which never came) is storage. There are basically three choices for storing hydrogen for use in a hydrogen fuel cell. You can cool it to liquid temperatures, you can compress it as a gas, or you can bind it up in some other material.

Hydrogen is the lightest element, and it contains a lot of potential energy, and for those reasons is an excellent fuel. It is the best fuel, arguably, for rockets, because it has the greatest specific energy (energy per mass), and for the rocket equation, energy per mass is everything. We may never do better than pure hydrogen as rocket fuel. Liquid hydrogen has about three times the energy per mass as gasoline. So theoretically it might seem like a good fuel. But – it has only one third the energy density (energy per volume) of gasoline. This is a limiting factor for small mobile applications like cars. Imagine a 50 gallon tank of liquid hydrogen to go as far as a 17 gallon tank of gasoline. Also, liquid hydrogen has to be kept very cold.
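The tank comparison is simple arithmetic, sketched here using only the round ratios quoted above (three times the energy per mass, one third the energy per volume). Real-world figures differ somewhat, and this ignores any difference in efficiency between a fuel cell and a combustion engine, so it is an illustration of the reasoning rather than an engineering estimate. The function names are mine.

```python
# Round figures from the text, both relative to gasoline.
SPECIFIC_ENERGY_RATIO = 3.0         # energy per unit mass
ENERGY_DENSITY_RATIO = 1.0 / 3.0    # energy per unit volume


def hydrogen_tank_gallons(gasoline_gallons: float) -> float:
    """Volume of liquid hydrogen holding the same energy as this much gasoline."""
    return gasoline_gallons / ENERGY_DENSITY_RATIO


def hydrogen_mass_fraction() -> float:
    """Mass of hydrogen needed, as a fraction of the gasoline mass, for equal energy."""
    return 1.0 / SPECIFIC_ENERGY_RATIO


print(f"Tank volume to match 17 gallons of gasoline: {hydrogen_tank_gallons(17):.0f} gallons")
print(f"Fuel mass relative to gasoline: {hydrogen_mass_fraction():.2f}")
```

So the fuel itself weighs about a third as much, which is why rockets love it, but it takes up about three times the space, which is the problem for a car.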

Continue Reading »
