Apr 30, 2021

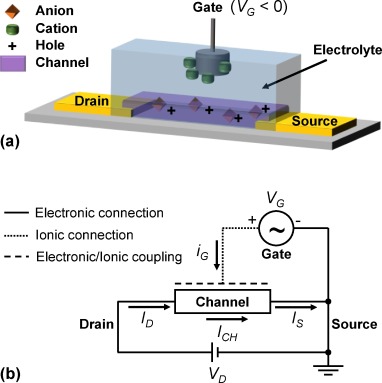

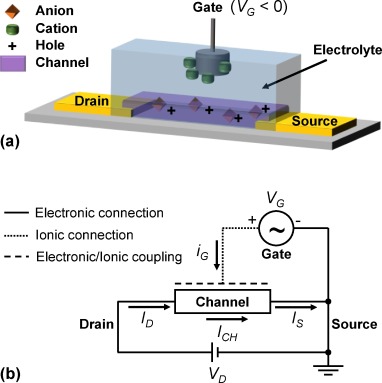

The title of this post should be provocative, if you think about it for a minute. For “organic” read flexible, soft, and biocompatible. An electrochemical synapse is essentially how mammalian brains work. So far we can be talking about a biological brain, but the last word, “transistor”, implies we are talking about a computer. This technology may represent the next step in artificial intelligence, developing a transistor that more closely resembles the functioning of the brain.

Let’s step back and talk about how brains and traditional computers work. A typical computer, such as the device you are likely using to read this post, has separate memory and logic. This means that there are components specifically for storing information, such as RAM (random-access memory), cache memory (fast memory that acts as a buffer between the processor and RAM), and, for long-term storage, hard drives and solid-state drives. There are also separate components that perform logic functions to process information, such as the CPU (central processing unit), graphics card, and other specialized processors.

The strength of computers is that they can perform some types of processing extremely fast, such as calculating with very large numbers. Memory is also very stable. You can store a billion-word document and years later it will be unchanged. Try memorizing a billion words. The weakness of this architecture is that it is very energy intensive, largely because of the inefficiency of constantly having to transfer information from the memory components to the processing components. Processors are also very linear – they do one thing at a time. This is why more modern computers use multi-core processors, so they can have some limited multi-tasking.

Continue Reading »

Apr 29, 2021

When studying the history of life, evolutionary biologists and paleontologists have no choice but to look where the light is good. There are fossil windows into specific times and places in the past, and through these we glimpse a moment in biological history. We string these moments together to map out the past, but we know there are a lot of missing pieces.

One relatively dark passage in the history of life is the evolution of multicellularity. Beginning about 541 million years ago (mya) we can see the start of the Cambrian explosion – the appearance of a vast diversity of multicellular life. But this “explosion” was only partly due to rapid adaptive radiation; it is also an artifact of the evolution of hard parts that can fossilize. That development turned on the lights. The earliest known single-celled organisms are 3.77 billion years old, so we have a 3-billion-year time span during which a lot must have happened. We know from changes in the atmosphere that cells evolved that could use sunlight to produce oxygen, and other critters evolved to eat them.

Continue Reading »

Apr 27, 2021

Battery technology has been consistently improving for decades, making possible the shift to electric vehicles (EVs). Tesla’s North American Model S Long Range Plus now has an official range of 402 miles. At the low end, EVs have ranges of more than 250 miles. And every year they get a little better. At some point improving battery capacity will translate into smaller and cheaper batteries rather than just increased range. I wonder what that point will be. In other words, if you could have a car with a range anywhere from 500 miles to 5,000 miles, what would you pay for? Where is the optimal cost vs benefit?

Batteries are also increasingly a good idea for grid storage, with a lot of features that make them desirable at grid scale. They have good energy and power density, and can provide as-needed (dispatchable) energy almost instantly. You don’t need to ramp up a turbine – the energy is just there. They have great round-trip efficiency (rivaled only by pumped hydro), meaning little energy is lost in the storage and supply of energy. They hold their charge for a long enough time (they have slow self-discharge). They also have decent lifespans, as measured in charge-discharge cycles.

There are already battery grid storage facilities with 100-300 megawatt capacity, with more in the works, including a 409 megawatt system in South Florida. It is now cheaper for some utility companies to build a battery storage facility to add dispatchable capacity than to build a natural-gas-powered plant. There are other methods of grid storage, but batteries have had an increasing share of storage capacity over the last decade. But anytime one technology scales up by orders of magnitude, we may run into resource and infrastructure limitations. At some point we are going to stress our supplies of lithium, for example. As we radically change our energy infrastructure, therefore, we need to plan for a sustainable system. That means we need to think about what happens to batteries throughout their lifespan.

Continue Reading »

Apr 26, 2021

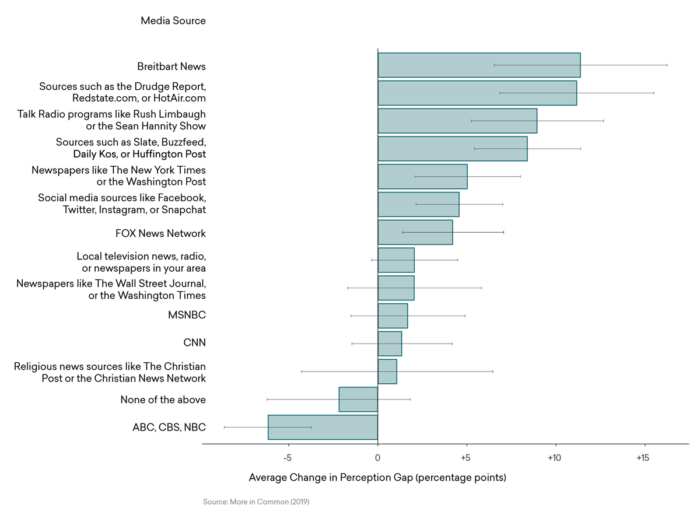

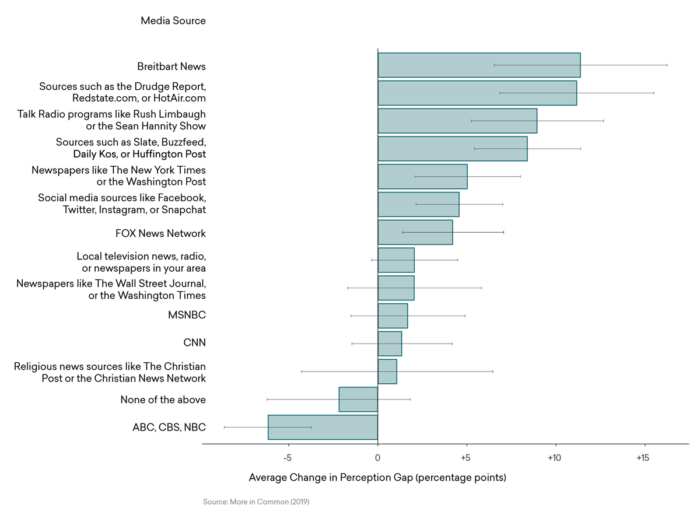

Humans are tribal by nature. We tend to sort ourselves into in-groups and out-groups, and then we engage in confirmation bias and motivated reasoning to believe mostly positive things about our in-group, and mostly negative things about the out-group. This is particularly dangerous in a species with advanced weaponry. But even short of mutual annihilation, these tendencies can make for a lot of problems, and frustrate our attempts at running a stable democracy.

Part of this psychological tribalism is that we tend to exaggerate what we assume are the negative feelings of the other group toward our own. Prior research has shown this, and a new study also demonstrates this exaggerated polarization and negativity. There are a few reasons for this. One is basic tribal psychology as outlined above. Other cognitive biases, like oversimplification and a desire for moral clarity, motivate us to craft cardboard strawmen out of our political opponents. We come to assume that their position is in bad faith and/or is simplistic nonsense. We tend to ignore all nuance in our opponent’s position, and fail to consider the justifiable reasons they may have for their position and the commonality of our goals. Ironically, this view is itself simplistic and may motivate us to act in bad faith, which fuels these same beliefs about us by the other side, creating a cycle of radicalization.

This process is helped along by the media, both traditional and social. Social media tends to form echo chambers where our radicalized, simplistic view of the “other side” can become more extreme. Also, impersonal online interactions (just read the comments here) may allow us to engage with the cardboard fiction in our minds rather than the real person at the other end.

Traditional media contributes to this phenomenon by focusing on issues in conflict at the expense of issues where there is more consensus and commonality. The media likes conflict, and this gives everyone a distorted view of how much polarization there actually is.

Continue Reading »

Apr 23, 2021

After four years of backsliding on tackling climate change, it is good to see the US once again taking it seriously and trying to lead the world on climate action. Good intentions are necessary but insufficient, however. The Biden Administration pledges a 50-52% decrease in CO2 emissions from 2005 levels by 2030. That sounds ambitious, and it is, but it is also not enough. It helps clarify how big the task is we have before us, but also how high the stakes are. Some recent studies also help clarify the picture.

First, a recent study yet again dispenses with the false dichotomy that dealing with climate change is about the environment vs the economy. Wrong. Climate change hurts the environment and the economy – so both of these concerns are in alignment. This study was done by a large insurance company (who are used to estimating risk and cost) and they concluded that climate change will cost the world economy $23 trillion in lost productivity by 2050 (compared to where we would be without climate change). Failing to tackle climate change is the costly option. Further, these costs will disproportionately affect poorer countries, increasing the wealth gap between rich and poor nations and likely causing political instability (not to mention a climate refugee crisis).

This does not even account for health care costs and lost productivity due to poor health from pollution. These costs are estimated to be hundreds of millions of dollars per year for the US and several billion worldwide.

Even if we just look at climate change through an economic lens, investing in clean energy is a no-brainer. Green technologies are the technologies of the future, and so it also makes sense for any country to invest in this industry to be competitive. Investing in these technologies would be a massive boost to our economy, with each dollar spent being returned many times over. Failure to do so is economic malpractice. Clinging to dirty 17th century technology is a loser’s strategy.

Continue Reading »

Apr 22, 2021

European Union (EU) agricultural scientists are in a bit of a pickle. I’m not sure to what extent it is one of their own making or how much it was imposed upon them by politics and public opinion, but they are now confronting a dilemma they at least ignored if not helped to create. The question is – how best to achieve sustainable agriculture in a world with a growing population? This problem is made more difficult by the fact that we have already tapped the most efficient arable land, so any extension of agricultural land will necessarily push into less and less efficient land with greater displacements of populations and natural ecosystems.

The dilemma stems from the EU’s regulatory support for organic farming. The core problem is actually the very concept of organic farming itself, which is rooted historically and ideologically in pseudoscience. Organic farming is philosophy-based rather than science-based farming – it is a manifestation of the appeal to nature fallacy. The result is a set of specific rules for qualifying as “organic” that mostly represent a rejection of modern agricultural technology. There are some good things in there as well. Sometimes low-tech methods are best. But organic farming does not use the best, most sustainable methods. It uses the most “natural” methods, by some vague, arbitrary, gut-feeling criteria. So, for example, you can use pesticides, but only if they are derived from natural sources, even if they are less effective and more toxic. You also can’t irradiate food, because irradiation seems scary (even though it safely reduces food spoilage, thereby reducing waste and foodborne disease).

And of course the organic farming industry is driving the biggest controversy in agriculture – the use of genetically modified organisms (GMOs). This is the focus of a new paper by EU agricultural scientists who now have to confront the organic farming hobgoblin which is getting in the way of sustainable farming. Here are the highlights: They open by dispensing with the most common argument used to dismiss the need for GMOs and justify organic farming inefficiency –

Sustainable food systems will require profound changes in people’s consumption patterns and lifestyles, which is true regardless of the farming methods used and does not change the fact that organic farming often requires more land than conventional farming for the same quantity of food output.

Continue Reading »

Apr 20, 2021

One major factor in the progress of our understanding of how brains function is the ability to image the anatomy and function of the brain in greater detail. At first our examination of the brain was at the gross anatomy level – looking at structures with the naked eye. With this approach we were able to divide the brain into different areas that were involved with different tasks. But it soon became clear that the organization and function of the brain were far more complex than gross examination could reveal. The advent of microscopes and staining techniques allowed us to examine the microscopic anatomy of the brain, and see the different cell types, their organization into layers, and how they network together. This gave us a much more detailed map of the anatomy of the brain, and from examining diseased or damaged brains we could infer what most of the identifiable structures in the brain did.

But still, we were a couple of layers removed from the true level of complexity of brain functioning. Electroencephalography (EEG) gave us the ability to look not at brain anatomy but at function – we could detect the electrical activity of the brain with a series of electrodes in real time. This gave us good temporal resolution of function, and a good window into overall brain function (is the brain awake, asleep, or damaged?) but very poor spatial resolution. This has improved in recent decades thanks to computer analysis of EEG signals, which can map brain function in higher detail, but it is still very limited.

CT scans and later MRI scans allow us to image brain anatomy, even deep anatomy, in living creatures. In addition we can see some pathological details like edema, bleeding, scar tissue, iron deposition, or inflammation. With detailed imaging we could see the lesion while still being able to examine a living patient (rather than having to wait until autopsy to see the lesion). As MRI scans advanced we could also correlate non-pathological anatomical features with neurological function (such as skills or personality), giving us yet another window into brain function.

Continue Reading »

Apr 19, 2021

I’ve been watching For All Mankind – a very interesting series that imagines an alternate history in which the Soviets beat the US to landing on the Moon, triggering an extended space race that puts us decades ahead of where we are now. By the 1980s we had a permanent lunar base and a reusable lunar lander, not to mention spacecraft with nuclear engines. Meanwhile, back in reality, we are approaching 50 years since any human has set foot on the Moon.

But NASA does plan on returning to the Moon and staying there this time, with their Artemis mission. (In Greek mythology Artemis was the twin sister of Apollo.) Originally they planned to return to the Moon by 2028, then Trump asked them to move up the timeline to 2024. NASA dutifully complied, but this was never realistic and anyone who has been following Artemis knew this was not going to happen. And now NASA is admitting they will not be ready by 2024. But sometime likely in the latter half of this decade we will return to the Moon.

One of the last pieces to put into place is a lunar lander, something to get people from lunar orbit down to the surface of the Moon. NASA has finally awarded the contract to build this lander – to SpaceX. They are making no secret of the reason. SpaceX gave the lowest bid, by far. This is partly because the entire mission of SpaceX is to make space travel cheaper, mainly by using as many reusable parts as possible. Toward this end they perfected the technology for landing rockets vertically. The videos of Falcon rockets landing after launching satellites are still stunning. SpaceX also achieved a rating for their Crew Dragon capsule to actually carry people into space, and they have delivered astronauts to the ISS. Finally, SpaceX has already been developing their Starship design, which will be the basis of the new lander, which NASA is calling the Human Landing System (HLS).

Interestingly, a recent independent analysis found that the most efficient (only looking at efficiency) landing system using non-reusable parts was the Apollo system – a two-stage approach with a landing module and ascent module. However, if you use a reusable lander, then the one-stage approach makes the most sense. That is in keeping with SpaceX’s philosophy, so it’s not surprising that they are taking that approach. I do wonder if they are going to use an actual Starship just outfitted for lunar landing, or are they going to make a new and smaller version? If the former, then it seems a bit odd that the HLS part of the system is a ship capable (theoretically) of doing the entire mission, from Earth’s surface to the lunar surface. That is Musk’s vision: single stage to destination for maximal reusability.

Continue Reading »

Apr 16, 2021

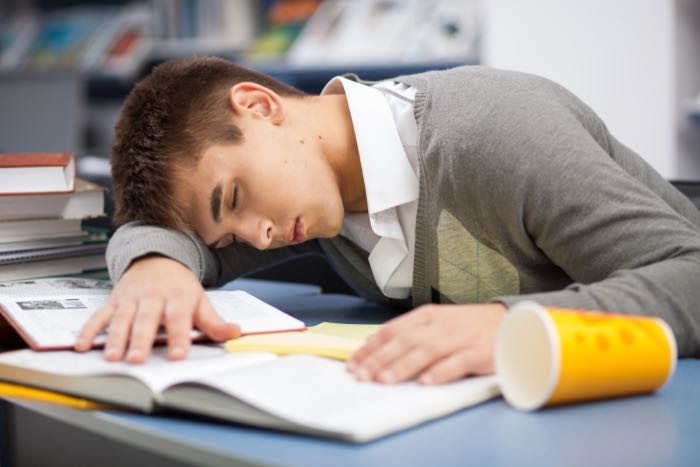

Yet another study shows the benefits of delaying the start time for high school students. This study also looked at middle school and elementary school students, had a two-year follow-up, and included both parent and student feedback. In this study: “Participating elementary schools started 60 minutes earlier, middle, 40-60 minutes later, and high school started 70 minutes later,” and found

Researchers found that the greatest improvements in these measures occurred for high school students, who obtained an extra 3.8 hours of sleep per week after the later start time was implemented. More than one in ten high school students reported improved sleep quality and one in five reported less daytime sleepiness. The average “weekend oversleep,” or additional sleep on weekends, amongst high schoolers dropped from just over two hours to 1.2 hours, suggesting that with enough weekday sleep, students are no longer clinically sleep deprived and no longer feel compelled to “catch up” on weekends. Likewise, middle school students obtained 2.4 additional hours of sleep per week with a later school start time. Researchers saw a 12% decrease in middle schoolers reporting daytime sleepiness. The percent of elementary school students reporting sufficient sleep duration, poor sleep quality, or daytime sleepiness did not change over the course of the study.

This adds to prior research which shows similar results, and also shows that student academic performance and school attendance improve. For teens, mood improves, physical health improves, and the rate of car crashes decreases. So it seems like an absolute no-brainer that the typical school start time should be adjusted to optimize these outcomes. Why isn’t it happening? Getting in the way are purely logistical problems – synchronizing school start times with parents who need to go to work, sharing buses among elementary, middle, and high schools, and leaving enough time at the end of the day for extracurricular activities. But these are entirely solvable logistical hurdles.

Continue Reading »

Apr 15, 2021

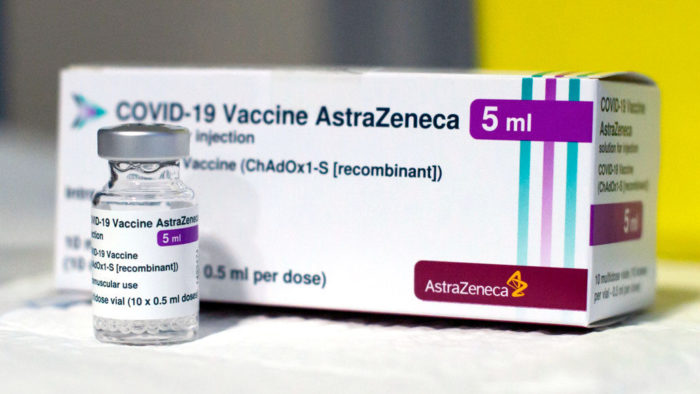

As the COVID vaccine rollout continues at a feverish pace, the occurrence of rare but serious blood clots associated with two adenovirus vaccines, AstraZeneca and Johnson & Johnson, is an important story, and should be covered with care and thoughtfulness. I have followed this story on Science Based Medicine here, here, and here. There is a lot of nuance to this issue, and it presents a clear dilemma. The ultimate goal is to optimally balance risk vs benefit while we are in the middle of a surging pandemic and while information is preliminary. This means we don’t panic, we consider all options, and we investigate thoroughly and transparently. There is a real debate to be had about how best to react to these rare cases, and as a science communicator I have tried to present the issues as reasonably as possible.

But we no longer live in an age where most people get most of their science news from professionally edited science journalism. Most get their news online, from a range of sources, some good, some bad, some acting in bad faith or filtered through an intense ideological filter, and many just trolling. There are even “pseudojournalists” out there, reporting outside any kind of serious review. One such pseudojournalist is Paul Thacker, who recently decided he had to criticize the reporting of “skeptics” on the COVID vaccine blood clotting issue.

For background, Thacker was fired from the journal Environmental Science & Technology for showing an anti-industry bias. Bias is a bad thing in journalism, the core principle of which is objectivity. I have no idea if Thacker honestly believes what he writes or if he can’t resist trolling, but it doesn’t really matter. He has espoused anti-GMO views to the point of harassing GMO scientists, leading Keith Kloor to call him a “sadistic troll”. Thacker has also promoted 5G conspiracy theories.

Continue Reading »
