Apr 28, 2023

The show Ted Lasso is about to wrap up its final season. I am one of the many people who really enjoy the show, which turns on a group of likable people helping each other through various life challenges with care and empathy. Lasso is an American college football coach who was recruited to coach an English “football” team, and manages to muddle through with Zen-like calm and folksy good spirits.

Although entertaining, is the Ted Lasso style of coaching effective? Is it more effective to coach more like a drill sergeant, with fear and intimidation? It’s interesting that the fear-based model of leadership, whether coaching or otherwise, seems to be intuitive. It’s the default mode for many people. But evidence increasingly shows that the Ted Lasso model works better. Empathy may be the most effective leadership style.

Coaches who lead with empathy tend to get more out of their athletes, foster loyalty, and establish trust and more effective communication. When you think about it, it makes sense. People work harder when they are motivated. Fear-based motivation is ultimately external: the fear of displeasing a leader and earning their wrath or punishment. Empathy nurtures internal motivation: wanting to succeed because you feel confident and want to achieve personal and group goals.

This philosophy is not new – this is a form of the old cliche of catching more flies with honey than vinegar. The philosophy has also filtered into the education community, which increasingly emphasizes positive reward rather than negative feedback. Making students feel anxious or stupid, it turns out, is counterproductive. This can be taken too far as well, however. I have seen it manifest as a policy of never telling students they are wrong. But what if they are? Well, don’t ask them a question that can be right or wrong, so that there is no possibility of being wrong. OK, but some answers are still better than others.

Continue Reading »

Apr 27, 2023

I’m really excited about the recent developments in artificial intelligence (AI) and their potential as powerful tools. I am also concerned about unintended consequences. As with any really powerful tool, there is the potential for abuse and also disruption. But I also think that the recent calls to pause or shut down AI development, or concerns that AI may become conscious, are misguided and verging on panic.

I don’t think we should pause AI development. In fact, I think further research and development is exactly what we need. Recent AI developments, such as generative pretrained transformers (GPT), have created a jump in AI capability. They are yet another demonstration of how powerful narrow AI can be, without the need for general AI or anything approaching consciousness. What I think is freaking many people out is how well GPT-based AIs, trained on billions of examples, can mimic human behavior. I think this has as much to do with how much we underestimate how derivative our own behavior is as with how powerful these AIs are.

Most of our behavior and speech is simply mimicking the culture in which we are embedded. Most of us can get through our day without an original thought, relying entirely on prepackaged phrases and interactions. Perhaps mimicking human speech is a much lower bar than we would like to imagine. But still, these large language models are impressive. They represent a jump in technology, able to produce natural-language interactions with humans that are coherent and grammatically correct. But they remain a little brittle. Spend any significant time chatting with one of these large language models and you will detect how generic and soulless the responses are. It’s like playing a video game – even with really good AI driving the behavior of the NPCs in the game, they are ultimately predictable and not at all like interacting with an actual sentient being.

Continue Reading »

Apr 25, 2023

When it comes to technology (and also probably many things) there is a pyramid of ideas. At the very bottom of the pyramid is pure speculation, just throwing out “what if” ideas to feed the conceptual pipeline. A subset of these ideas will pass the sniff test enough to justify some kind of proof-of-concept evaluation. This could be just crunching numbers, or even an experiment to see if the idea can work in theory. A subset of those ideas that seem promising will feed a pipeline of research and development, translating these basic concepts into a pragmatic technology. And a subset of those will make it through the pipeline to produce some working technology. A still smaller subset will have all the features necessary to be a successful technology product – economically feasible and competitive, with some marketable practical utility.

When discussing any technology news it’s important to give this context – where in the pyramid are we and how likely is it to make it all the way to the peak? Unfortunately a lot of popular technology reporting treats ideas that are in the pure speculation phase, or perhaps just cracking into the proof of concept level, as if a working product is right around the corner. This leads to confusion and disillusionment. It can also bury news of true breakthroughs in a sea of pseudo-breakthroughs.

With that in mind, I would place this news item about superconducting highways into the pure speculation phase, with a small touch of proof-of-concept. The authors write:

“We envision combining the transport of people and goods and energy transmission and storage in a single system. Such a system, built on existing highway infrastructure, incorporates a superconductor guideway, allowing for simultaneous levitation of vehicles with magnetized undercarriages for rapid transport without schedule limitations and lossless transmission and storage of electricity. Incorporating liquefied hydrogen additionally allows for simultaneous cooling of the superconductor guideway and sustainable energy transport and storage.”

Starting with “we envision” is a good give-away. But here is the proof-of-concept:

Here, we report the successful demonstration of the primary technical prerequisite, levitating a magnet above a superconductor guideway.

Continue Reading »

Apr 24, 2023

A common joke in the medical world is, “The operation was a success, but the patient died.” The irony comes from how we might define “success”. On April 20th SpaceX conducted the maiden launch of the fully assembled Starship, including a Starship rocket on top of a Super Heavy lifter. The initial launch seemed successful and the rocket flew well for several minutes. However, it then started to become erratic, and at 3:59 into the flight the onboard computers triggered the Flight Termination System (i.e., its self-destruct) and blew up the rocket as a safety precaution.

This was an uncrewed test flight, so it can be said that as a test it fulfilled its primary mission, to provide data about how the fully assembled Starship and associated launch pad will perform. Pre-launch SpaceX indicated that as long as the ship clears the tower, that would be considered a successful test. The goal is data, and they got lots of data. SpaceX says:

With a test like this, success comes from what we learn, and we learned a tremendous amount about the vehicle and ground systems today that will help us improve on future flights of Starship.

However, the rocket did also fail, and they need to figure out exactly why that happened. The proximate cause is likely the failure of several of the 33 Raptor engines. I was at the Air and Space museum over the weekend, and had a chance to look at a rocket engine of the Saturn V rocket – which had five giant engines. SpaceX decided to go in a different direction with lots of smaller engines. With 5 or more of these engines out the rocket could still lift off the pad, but it was unable to compensate for the imbalance this produced, which is likely what resulted in the loss of control of the rocket. But then, why did the engines fail?

Continue Reading »

Apr 17, 2023

I first wrote about the Theranos scandal in 2016, and I guess it should not be surprising that it took 7 years to follow this story through to the end. Elizabeth Holmes, founder of the company Theranos, was convicted of defrauding investors and sentenced to 11 years in prison. She will be going to prison even while her appeal is pending, because she failed to convince a judge that she is likely to win on appeal.

I think her conviction and sentencing are a healthy development, and I hope they have an impact on the industry and broader culture. To quickly summarize, Holmes began a startup called Theranos which claimed to be able to perform 30 common medical laboratory tests on a single drop of blood and in a single day. So instead of collecting multiple vials of blood, with test results coming back over the course of a week, only a finger stick and drop of blood would be necessary (like people with diabetes do to test their blood sugar).

The basic idea is a good one, and also fairly obvious. Being able to determine reliable blood-testing results with a smaller sample, and being able to run multiple tests at a time, and very quickly, have obvious medical advantages. Patients, for example, who have prolonged hospital stays can actually get anemic from repeated blood draws. At some point testing has to be limited. Repeated blood sticks can also take their toll. For outpatient testing, rather than going to a lab, you could get a testing kit, provide a drop of blood, and then send it in.

The problem was that Holmes was apparently starting with a problem to be solved rather than a technology. We can think of technological development as happening in one of two primary ways. We may start with a problem and then search for a solution. Or we can start with a technology and look for applications. Both approaches have their pitfalls. The sweet spot is when both pathways meet in the middle – a new technology solves a clear problem.

Continue Reading »

Apr 14, 2023

I have been following battery technology pretty closely, as this is a key technology for the transition to green energy. The most obvious application is in battery electric vehicles (BEVs). The second most obvious application is in grid storage. But also there are all the electronic devices that we increasingly depend on day-to-day. That same battery technology that powers your Tesla also powers your laptop and your smartphone.

As I have discussed before, a useful battery technology needs to simultaneously have a suite of features (with different priorities depending on application) – good energy density (energy storage per volume), specific energy (energy per mass, also called gravimetric density), stability, fast charge and discharge, many rechargeable life-cycles, useful over a large range of temperatures, and made from material that is ideally non-toxic, recyclable, cheap and abundant. The current lithium-ion batteries are actually very good. They have been incrementally improving over the last two decades, allowing for the BEV revolution to really get under way.

But they have some downsides. They are just at the edge of energy density for aviation applications. They use some difficult-to-source raw materials, like nickel and cobalt. They can occasionally catch fire (although they are getting safer). And they are still pretty expensive. Further, we are really stretching the lithium supply chain if we want to build millions of cars and lots of grid storage. Fortunately, the key role that battery technology is playing in the green revolution is widely appreciated in the industry, and there has been a tremendous investment in accelerating battery development. Here are a few potential advances I have been keeping an eye on.

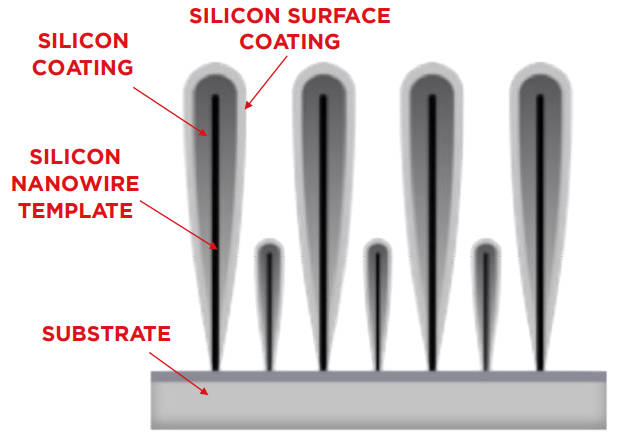

The first is actually not potential, but already in production. This week for the SGU I interviewed the COO of Amprius (the episode will come out Saturday). The company has started production (actual production) of a lithium-ion battery with twice the energy density and specific energy of the current batteries being used in BEVs – 500 Wh/kg, 1300 Wh/L vs about 240/650 for a current Tesla battery. So yeah – double. That is not an incremental advance, that’s a pretty big leap. These numbers have been independently verified, so they seem legit.
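To make the "double" claim concrete, here is a minimal sketch of the arithmetic using only the four figures quoted above. The dictionary keys, the helper names, and the 75 kWh pack size are illustrative assumptions rather than anything from Amprius or Tesla, and the mass estimate counts cells only, ignoring packaging, cooling, and electronics.

```python
# Figures quoted in the post; key names are illustrative.
amprius = {"specific_energy_wh_per_kg": 500, "energy_density_wh_per_l": 1300}
current_bev = {"specific_energy_wh_per_kg": 240, "energy_density_wh_per_l": 650}


def improvement_ratios(new, old):
    """Return new/old for every metric that appears in both dicts."""
    return {key: new[key] / old[key] for key in new if key in old}


def cell_mass_kg(capacity_kwh, specific_energy_wh_per_kg):
    """Mass of cells alone needed to store capacity_kwh (no pack overhead)."""
    return capacity_kwh * 1000 / specific_energy_wh_per_kg


ratios = improvement_ratios(amprius, current_bev)
for metric, ratio in ratios.items():
    print(f"{metric}: {ratio:.2f}x")

# Hypothetical 75 kWh pack, chosen only for illustration.
pack_kwh = 75
new_mass = cell_mass_kg(pack_kwh, amprius["specific_energy_wh_per_kg"])
old_mass = cell_mass_kg(pack_kwh, current_bev["specific_energy_wh_per_kg"])
print(f"cell mass for {pack_kwh} kWh: {new_mass:.1f} kg vs {old_mass:.1f} kg")
```

Both ratios come out at roughly two, which is all the "double" shorthand is claiming.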

Continue Reading »

Apr 13, 2023

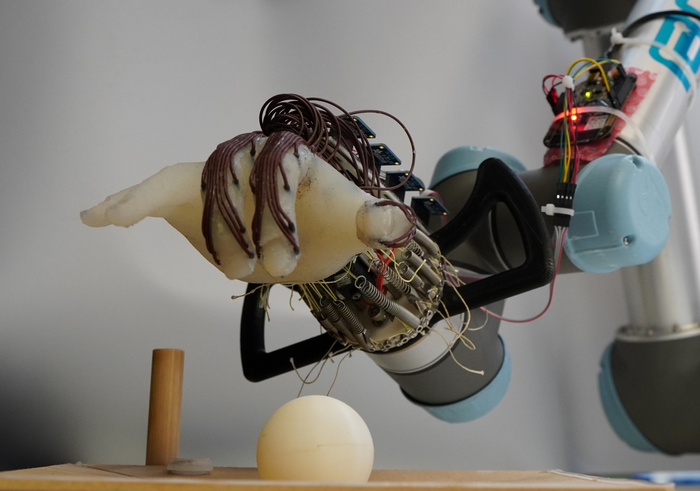

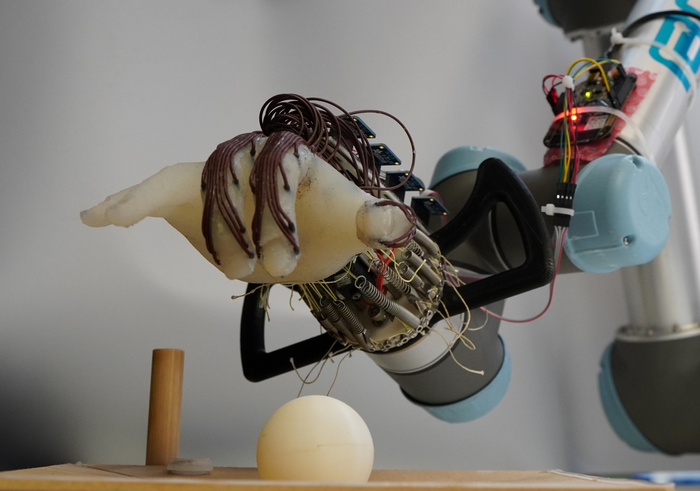

Roboticists are often engaged in a process of reinventing the wheel – duplicating the function of biological bodies in rubber, metal, and plastic. This is a difficult task because biological organisms are often wondrous machines. The human hand, in particular, is a feat of evolutionary engineering.

Researchers at the University of Cambridge have designed a robotic hand that reflects both the challenge of this task and some of the principles that might help guide the development of this technology. One feature of this study struck me as significant because of how it reflects the actual function of the human hand, in a way not mentioned in the paper or press release. This made me wonder if the roboticists were even aware that they were replicating a known principle.

The phenomenon in question is called tenodesis (I did a search on the paper and could not find this term). I learned about this during my neurology residency when rotating in a rehab hospital. When you extend your wrist this pulls the tendons of the fingers tight and causes the fingers to flex into a weak grasp. People with a spinal cord injury around the C6-7 level can extend their wrist but not flex their fingers. So they can learn to exploit the tenodesis effect to have a functional grasp, which can make a huge difference to their independence.

The Cambridge roboticists have apparently independently hit upon this same idea. They designed a robot hand that is anthropomorphic but where the fingers were not attached to actuators. The robot, however, could flex its wrist, which would passively cause the fingers to flex into a grasp – exactly as it happens in human hands with tenodesis. But why would roboticists want to make a robot hand with “paralyzed” fingers? The answer is – to optimize efficiency. Attaching all the fingers to actuators is a complex engineering feat and also using those actuators consumes a lot of energy. Passive grip is therefore much more energy efficient, which is a huge advantage in robotics.

Continue Reading »

Apr 11, 2023

When you think about it, plants are self-reproducing solar-powered biological factories. They are powered by the sun, extract raw material from the air and soil, and make all sorts of useful molecules. Mostly we use them to make edible molecules (food), but also to make textiles, fuel, and drugs. Their use to make biofuel is still controversial, because of cost and scaling issues. Can we set aside enough land to grow enough feedstock for biofuels to make a difference? Otherwise plants are an indispensable method of manufacturing.

This may become even more true as our bioengineering technology advances. A recent study demonstrates how powerful this technology is becoming – Tunable control of insect pheromone biosynthesis in Nicotiana benthamiana. The specific application here, making pheromones, is actually not the real story. It is just one potential application. But let’s review what the researchers did.

The goal of the study was to bioengineer a species of tobacco plant to make female moth pheromones. Tobacco plants are often used in this research because they are a “model” organism, about which we know a great deal, including a full genome of many varieties. (There are many other reasons as well – a short growth cycle of 3 months, good for tissue cultures, produce many seeds per cross, etc.) The interest in moth pheromones is in their potential use for pest control. The idea is to replicate the pheromones that the female moths of a specific species release to attract males. This will attract all of the local males and distract them from the females, therefore significantly reducing reproduction and the pest population. This can potentially be a form of pest control that does not involve applying a pesticide to the plants themselves.

Continue Reading »

Apr 10, 2023

Does the lunar cycle affect human behavior? This seems to be a question that refuses to die, no matter how hard it is to confirm any actual effect. It’s now a cultural idea, deeply embedded and not going anywhere. A recent study, however, seems to show a correlation between suicide and the week of the full moon in a pre-Covid cohort of subjects from Marion County. What are we to make of this finding?

Specifically they show that:

We analyzed pre-COVID suicides from the Marion County Coroner’s Office (n = 776), and show that deaths by suicide are significantly increased during the week of the full moon (p = 0.037), with older individuals (age ≥ 55) showing a stronger effect (p = 0.019). We also examined in our dataset which hour of the day (3–4 pm, p = 0.035), and which month of the year (September, p = 0.09) show the most deaths by suicide.

They also found suicides were not significantly associated with the full moon for subjects <30. They did not give the p-value for those between 30 and 55, and I suspect, given the numbers, that group was either not significant or barely significant. The first question we should ask when confronted with data like this is – is this likely to be a real effect, or just a statistical false positive? We can think about prior plausibility, the statistical power of the study, and the degree of significance. But really there is one primary way this question is sorted out – replication.
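One way to get a feel for the false-positive question is to ask how the four p-values quoted above fare once multiple comparisons are accounted for. The sketch below is an illustration, not the study's method. It assumes the four reported tests are the only comparisons made and that they are independent; neither assumption is confirmed by the excerpt, and the age-55-and-over group is clearly a subset of the full cohort. The function names and dictionary labels are hypothetical.

```python
def familywise_error_rate(num_tests, alpha=0.05):
    """Chance of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** num_tests


def bonferroni_significant(p_values, alpha=0.05):
    """Flag which p-values survive a Bonferroni-corrected threshold."""
    threshold = alpha / len(p_values)
    return {label: p <= threshold for label, p in p_values.items()}


# p-values as quoted from the study abstract; labels are illustrative.
reported = {
    "full moon week (all ages)": 0.037,
    "full moon week (age >= 55)": 0.019,
    "hour of day (3-4 pm)": 0.035,
    "month (September)": 0.09,
}

fwer = familywise_error_rate(len(reported))
survivors = bonferroni_significant(reported)

print(f"family-wise error rate for {len(reported)} tests: {fwer:.3f}")
for label, passed in survivors.items():
    print(f"{label}: {'survives' if passed else 'does not survive'} correction")
```

Under these assumptions none of the reported values clears the corrected threshold of 0.0125. Bonferroni is deliberately conservative, so this does not show the effect is false, only that the result needs replication before it is taken seriously.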

In fact, studies like this are best done in at least two phases, an initial exploratory phase and a follow-up internal replication to confirm the results of the first data set. But confirmation can also be done through subsequent replication, by the same group or others. A real effect should replicate, while a false positive should not. The authors note that they did a literature search and found the results “mixed”. In fact, this study is a replication (not exact replication, but still a replication) of earlier studies asking the same question. Did they replicate the results of previous studies? Let’s take a look.

Continue Reading »

Apr 06, 2023

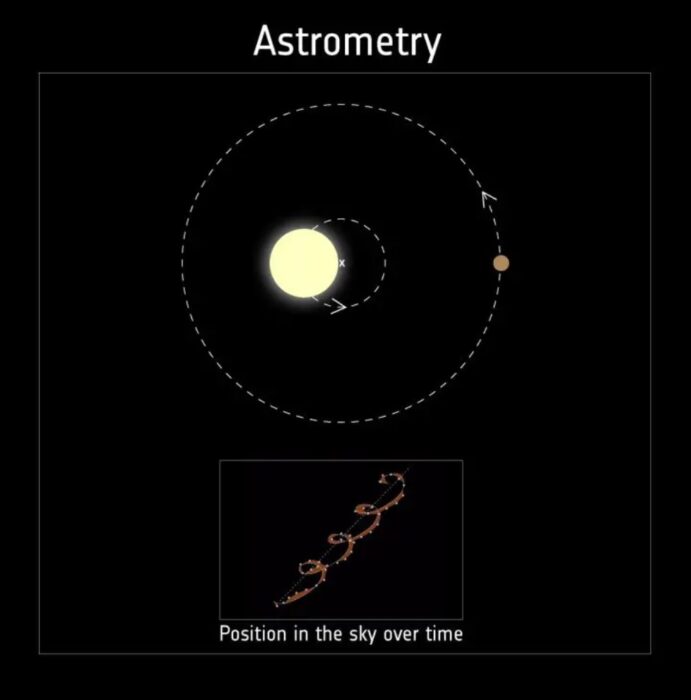

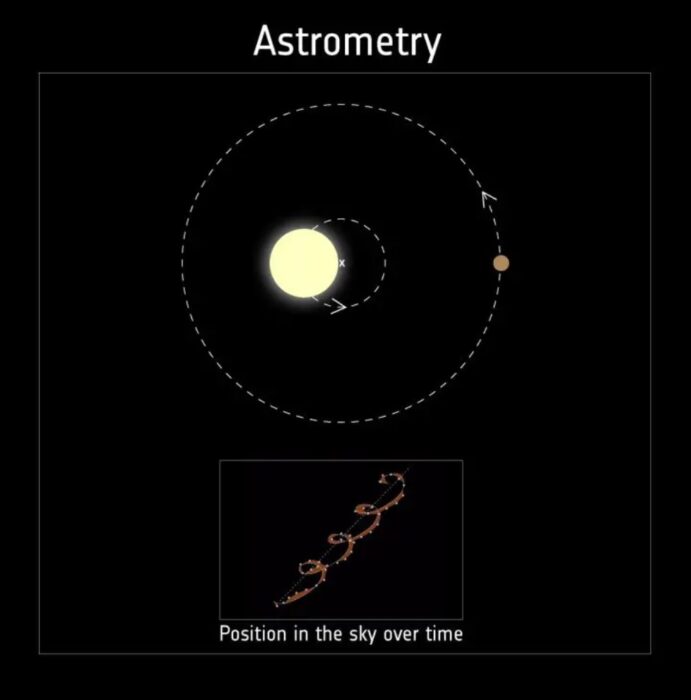

ESA’s Gaia orbital telescope has recently discovered two new black holes. This, in itself, is not surprising, as that is Gaia’s mission – to precisely map the three-dimensional position of two billion objects in our galaxy, using three separate instruments. The process is called astrometry, and the goal is to produce a highly accurate map of the galaxy. The two new black holes, Gaia BH1 and Gaia BH2, have two features that make them noteworthy. The first is that these are the two closest black holes to the Earth ever discovered, at 1560 and 3800 light years away (on galactic terms, that’s close). More important, however, is that they represent a new type of black hole.

Black holes are the most massive single objects in the universe. They are made from stellar remnants that have sufficient mass that the inward force of gravity is greater than any outward force of the matter itself. If the stellar remnant is >2.16 solar masses, it will become a black hole. Less than that, down to about 1.4 solar masses, and you have a neutron star. Stars of 20 solar masses or more are large enough to leave a stellar remnant behind after they go supernova to create a black hole. Black holes can also be formed when two neutron stars collide, the resulting mass being greater than the 2.16 limit. Or, a neutron star can have a close companion and it can gravitationally draw mass from the companion star until it reaches the black hole limit.
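The mass thresholds above amount to a simple classification rule, sketched here in Python. The constant and function names are illustrative assumptions. The merger helper simply adds the two masses and ignores any mass ejected or radiated away in a real collision, so it is a simplification rather than a physical model.

```python
NEUTRON_STAR_MIN = 1.4  # solar masses ("about 1.4" in the text above)
BLACK_HOLE_MIN = 2.16   # solar masses


def classify_remnant(mass_solar):
    """Classify a stellar remnant by mass, using the thresholds in the text."""
    if mass_solar <= 0:
        raise ValueError("remnant mass must be positive")
    if mass_solar > BLACK_HOLE_MIN:
        return "black hole"
    if mass_solar >= NEUTRON_STAR_MIN:
        return "neutron star"
    return "below neutron-star range"


def merge_remnants(mass_a, mass_b):
    """Classify the product of a collision, assuming no mass is lost."""
    return classify_remnant(mass_a + mass_b)


print(classify_remnant(2.0))     # within the neutron-star range
print(merge_remnants(1.4, 1.4))  # combined 2.8 solar masses exceeds 2.16
```

Two neutron stars at the bottom of the range already add up to 2.8 solar masses, which is why a collision between them can push the result past the black hole limit.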

There are also supermassive black holes, usually formed at the center of galaxies from gobbling up more and more stars. The most massive discovered so far is 66 billion solar masses.

Continue Reading »
