Mar 29 2012

Perception and Publication Bias

The psychological literature is full of studies that demonstrate that our biases affect our perception of the world. In fact psychologists have defined many specific biases that affect not only how we see but how we think about the world. Confirmation bias, for example, is the tendency to notice, accept, and remember data that confirms what we already believe, and to ignore, forget, or explain away data that is contradictory to our beliefs.

Balcetis and Dunning have published a series of five studies that add to this literature by showing what they call “wishful seeing.” In their studies they found that people perceive desirable items as being physically closer to them than less desirable items. This finding is plausible and easy to believe for a skeptic steeped in knowledge of cognitive flaws and biases. But is this finding itself reliable? Psychologists familiar with the history of this question might note that similar ideas were researched in the 1950s and ultimately rejected. But that aside, can we analyze the data from Balcetis and Dunning and draw conclusions about how reliable it is?

Recently Gregory Francis did just that, revealing an interesting aspect of the “wishful seeing” data that calls it into question. Ironically, the fact that Balcetis and Dunning published the results of five studies may have weakened their data rather than strengthened it. The reason is publication bias.

Carrying out high quality scientific research is extremely difficult. There are many pitfalls, some of which are very subtle, that can distort the outcome of research. I have earlier written about exploiting researcher degrees of freedom – making decisions about the details of a study that can all seem reasonable in isolation but which can also systematically bias the outcome toward the positive. Even studies that look good on paper may be the result of this type of researcher bias. The literature taken as a whole is also a complicated beast. Most notably there is publication bias – the tendency for researchers and journal editors to favor publishing positive research while confining negative studies to the file drawer (the so-called “file-drawer effect”).

All this is why we don’t get very excited over single studies or studies of small size or limited methodological quality. The possibility of false positives due to bias is huge. It is more reasonable to wait for a consensus of large high quality studies and independent replication, especially if the claim seems inherently implausible.

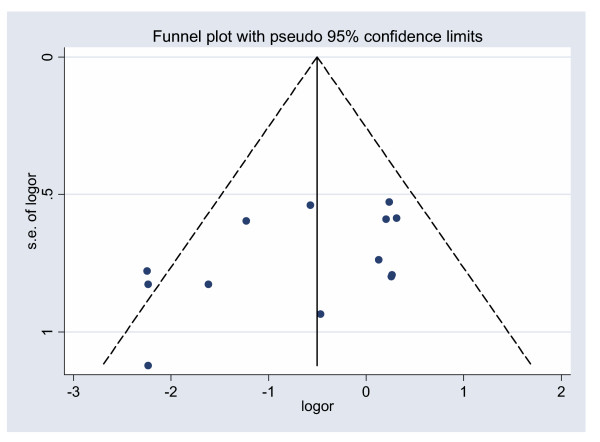

Researchers and statisticians have become fairly sophisticated in analyzing not only individual studies but the pattern of studies in the published literature to root out bias. For example there is the well-established notion of funnel plots. You can make a graph of all published studies with a measure of each study's size or precision (a rough proxy for its quality) on the vertical axis and the measured effect (positive or negative) along the horizontal axis. If all studies on a scientific question are being published then you would expect to see a random distribution of outcomes around the real effect size, and the larger and more precise the study the smaller the degree of variation. The result should look like a funnel zeroing in on the real effect size.
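To make the funnel shape concrete, here is a minimal simulation sketch in Python. It is an illustration only, not an analysis of any real literature: the true effect of 0.3, the sample sizes, and all function names are hypothetical, and study size stands in for the vertical axis of the plot.

```python
import random
import statistics

def simulate_study(true_effect, n, rng):
    """Observed mean of n measurements drawn around the true effect (sd = 1)."""
    return statistics.fmean(rng.gauss(true_effect, 1.0) for _ in range(n))

def funnel_points(true_effect, sizes, studies_per_size, seed=0):
    """Return (estimate, sample size) pairs, one per simulated study."""
    rng = random.Random(seed)
    return [(simulate_study(true_effect, n, rng), n)
            for n in sizes
            for _ in range(studies_per_size)]

def spread_by_size(points):
    """Standard deviation of the estimates at each sample size."""
    by_n = {}
    for estimate, n in points:
        by_n.setdefault(n, []).append(estimate)
    return {n: statistics.stdev(estimates) for n, estimates in by_n.items()}

# Hypothetical values chosen only for illustration.
points = funnel_points(true_effect=0.3, sizes=[10, 40, 160, 640],
                       studies_per_size=200)
spread = spread_by_size(points)
for n in sorted(spread):
    print(f"n = {n:4d}  spread of estimates = {spread[n]:.3f}")
```

When every simulated study is "published," the estimates scatter symmetrically around the true effect, and the scatter narrows as the studies get larger. Plotting the pairs with the estimate on the horizontal axis and the sample size on the vertical axis would give the funnel.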

If there is publication bias, however, then the negative half of the funnel plot may be diminished or even missing. The scatter of studies will be skewed toward the positive. This is critical because systematic reviews and meta-analysis will reflect the distribution of published studies, and this publication bias may make a null effect seem real.
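A small simulation sketch shows how this can happen. The assumptions are mine and deliberately crude: the true effect is exactly zero, every study has 20 subjects, and only studies with a statistically significant positive result get "published." All names and numbers are hypothetical.

```python
import math
import random
import statistics

def run_study(true_effect, n, rng):
    """Return (observed mean, z statistic) for one study with known sd = 1."""
    mean = statistics.fmean(rng.gauss(true_effect, 1.0) for _ in range(n))
    z = mean * math.sqrt(n)
    return mean, z

def simulate_literature(true_effect, n, n_studies, seed=1):
    """Run many independent studies of the same question."""
    rng = random.Random(seed)
    return [run_study(true_effect, n, rng) for _ in range(n_studies)]

# Hypothetical setup: no real effect at all.
studies = simulate_literature(true_effect=0.0, n=20, n_studies=2000)

# Average over everything that was actually run.
all_mean = statistics.fmean(mean for mean, _ in studies)

# The file drawer: keep only significant positive results (one-sided 5%).
published = [mean for mean, z in studies if z > 1.645]
published_mean = statistics.fmean(published)

print(f"studies run: {len(studies)}, published: {len(published)}")
print(f"mean effect, all studies:       {all_mean:.3f}")
print(f"mean effect, published studies: {published_mean:.3f}")
```

The full set of studies averages out close to zero, as it should. The published subset, which is all a systematic review would see, shows a clearly positive average effect even though nothing is there.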

Francis has analyzed the five published studies of Balcetis and Dunning in a similar spirit to show that their data displays signs of publication bias. Essentially he calculated the probability that all five studies would come out positive, given the size of each study and the apparent effect size found in the data. He found that this probability fell below the cutoff previously established for flagging publication bias.

In other words, a series of five small studies is likely to show a random scatter of results, and we can calculate the odds of getting any particular result. Even if the effect that Balcetis and Dunning claim to have found were real, their data should be more scattered. Instead we have five small studies that are all positive.
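The logic of this kind of calculation can be sketched in a few lines of Python. This is a simplified illustration, not Francis's actual procedure and not the real Balcetis and Dunning numbers: the effect size, the group sizes, and the 0.1 cutoff are all assumptions I am supplying, the studies are treated as independent, and power is approximated with a normal distribution rather than the exact test each study used.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()

def power_two_group(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group z-test.

    effect_size is a standardized mean difference (Cohen's d).
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect_size * math.sqrt(n_per_group / 2)
    return (1 - STD_NORMAL.cdf(z_crit - shift)) + STD_NORMAL.cdf(-z_crit - shift)

def probability_all_significant(effect_size, group_sizes):
    """Chance that every study reaches significance, assuming independence."""
    return math.prod(power_two_group(effect_size, n) for n in group_sizes)

# Hypothetical inputs, NOT taken from the published studies.
HYPOTHETICAL_EFFECT = 0.5
HYPOTHETICAL_GROUP_SIZES = [20, 25, 30, 20, 25]
ASSUMED_CUTOFF = 0.1  # assumed threshold for flagging "too many" successes

powers = [power_two_group(HYPOTHETICAL_EFFECT, n)
          for n in HYPOTHETICAL_GROUP_SIZES]
p_all = probability_all_significant(HYPOTHETICAL_EFFECT,
                                    HYPOTHETICAL_GROUP_SIZES)

for n, p in zip(HYPOTHETICAL_GROUP_SIZES, powers):
    print(f"n per group = {n}: power = {p:.2f}")
print(f"probability all five are significant = {p_all:.3f}")
print("flagged" if p_all < ASSUMED_CUTOFF else "not flagged")
```

The point is that each small study, taken alone, has only a modest chance of detecting a real effect of this size. Multiply five modest chances together and an unbroken run of positive results becomes the surprising outcome, not the expected one.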

All of this does not mean that the wishful seeing effect is not real. It just means that there is evidence for internal publication bias in the Balcetis and Dunning data. Therefore we need a fresh set of data to investigate the question.

This also demonstrates the primary weakness of meta-analysis, a method for combining the results of multiple studies into what is effectively one large study. If we did a meta-analysis on the five studies published by Balcetis and Dunning we might find what appears to be one large data set with high power showing a positive effect. But five small studies are not the same thing as one large study, precisely because things like publication bias can affect the results.

Francis points out in his paper some of the things that might have biased the results in the wishful seeing series of studies. A researcher may, for example, gather data and look at the results, and if they are not positive then gather some more data and look again, continuing this process until they get a positive result. This might feel like just gathering more data to get a more reliable outcome, but if the stopping point is chosen because the data happens to look positive at that moment, that is a form of cherry picking that biases the outcome. This is one of the problems pointed out by Simmons et al. in their paper on manufacturing false positives (which Francis does cite) by exploiting researcher degrees of freedom.
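The effect of this kind of data peeking is easy to demonstrate with a simulation. The sketch below is an illustration under my own assumptions, not a reconstruction of what any researcher actually did: there is no real effect, the hypothetical researcher starts with 10 subjects, adds 5 at a time up to a maximum of 50, and stops the moment a two-sided test at the 5% level comes out significant.

```python
import math
import random

def significant(values):
    """Two-sided z-test of mean = 0 at the 5% level, assuming known sd = 1."""
    n = len(values)
    z = (sum(values) / n) * math.sqrt(n)
    return abs(z) > 1.96

def fixed_n_study(n, rng):
    """Collect all n subjects, then test once."""
    return significant([rng.gauss(0.0, 1.0) for _ in range(n)])

def optional_stopping_study(start, step, max_n, rng):
    """Test after every batch and stop as soon as the result is significant."""
    data = [rng.gauss(0.0, 1.0) for _ in range(start)]
    while True:
        if significant(data):
            return True
        if len(data) >= max_n:
            return False
        data.extend(rng.gauss(0.0, 1.0) for _ in range(step))

rng = random.Random(2)
trials = 4000  # number of simulated studies of each kind

fixed_rate = sum(fixed_n_study(50, rng) for _ in range(trials)) / trials
peeking_rate = sum(optional_stopping_study(10, 5, 50, rng)
                   for _ in range(trials)) / trials

print(f"false positive rate, fixed n = 50:     {fixed_rate:.3f}")
print(f"false positive rate, peek and extend:  {peeking_rate:.3f}")
```

With a single planned test the false positive rate stays near the nominal 5%. With repeated peeking it climbs well above that, even though every individual decision to "just collect a few more subjects" seems perfectly reasonable.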

Conclusion

To me the question of researcher and publication bias is more interesting than the question of whether or not wishful seeing is a real phenomenon. Don’t get me wrong, perception bias is very interesting and an important realization for any critical thinker. The implications of researcher and publication bias, however, are far more profound and far reaching.

The take-home lesson is that when interpreting the research on any question all of the above concerns, and more, need to be taken into account. Do not base conclusions on any one study. Low power or methodologically weak studies do not add up to reliable research. The potential for many kinds of bias is just too great.

What is reliable is when we have studies of sufficient power that show a robust effect with a reasonable signal-to-noise ratio, and further that this result is independently replicated. Further, these results need to stand up to not only pre-publication peer review, but post-publication review by skeptical experts who try their best to probe for flaws and weaknesses in the data. Only when data holds up to this kind of assault do we tentatively conclude that it may be true.

Further, any research question needs to be put into the context of other scientific knowledge. Do we have multiple independent lines of evidence all converging on one conclusion, or does the claim in question exist in isolation, or worse does it seem to be at odds with other lines of investigation?

These are all perfectly reasonable criteria that scientists apply every day. Over time we are learning more and more about how important these methods are, and how even serious and accomplished researchers can fall prey to subtle biases in their research.

When we hold up controversial claims to these accepted scientific criteria, it becomes readily apparent why they are controversial. Psi research, for example, has failed to produce high quality replicated studies. Homeopathy has only poor quality studies rife with bias to offer as evidence, while the larger better quality studies are all negative. Further, homeopaths cannot present a coherent explanation for the effects they allege. Far from multiple lines of evidence converging on homeopathy being real, everything we know from physics, chemistry, and biology is screaming that homeopathy is beyond implausible and should be considered, as much as can be determined by scientific methods, impossible.

As for wishful seeing, I will have to reserve judgment. The effect is plausible and in line with other research showing similar perceptual biases, but this specific bias has not been established. An independent large study will be needed to address the question, and replication to confirm it. Meanwhile the Balcetis and Dunning data set appears to be compromised and should be looked upon with skepticism. They may just be the victims of random chance (like a gambler who is just a bit too lucky for the casino’s comfort); we will have to wait for new data to see.