Nov 10 2015

Dunning-Kruger in Groups

This research was published in February of this year, but somehow I missed it when it came out. Fortunately, the internet never forgets, and the study is making the rounds again on social media, this time getting caught in my net.

The study is an examination of how we make decisions in groups, with the specific question of how we weight the opinions of different members of the group. The researchers studied pairs of subjects (which they call dyads) who were given a specific task, such as identifying a target in a photo that they glimpsed only briefly. Each member of the dyad registered their choice. If they disagreed, one member was chosen at random to be the arbiter. The question is – will the arbiter favor their own opinion, or that of their partner?

For each pair 256 trials were run, and after each one they were given feedback as to who was correct. The idea is that each member of the pair would learn who was performing better. In one experiment they were given a running tally, to make sure they knew who was performing better. In another the task was made more difficult for one partner, increasing the difference in their performance, and in a final experiment the pair was given a financial incentive to perform better.

Despite these factors, in all of the experiments both participants displayed an equality bias – they overrated the lower-performing member and underrated the higher-performing member. The experiments were run with subjects in Denmark, Iran, and China in order to control for possible cultural differences, and the effect was seen in each. The studies were done only with men, however, to eliminate any gender bias as a confounding factor.
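To make the cost of an equality bias concrete, here is a minimal sketch of the arithmetic. It is my own simplification, not the model the researchers used: it assumes a two-choice task, that each partner is right with a fixed probability independent of the other, and that when they disagree the dyad adopts partner A's answer with probability `weight_a`. The function name and the accuracy figures (80% and 60%) are made up for illustration and do not come from the study.

```python
def dyad_accuracy(p_a: float, p_b: float, weight_a: float) -> float:
    """Chance the dyad's final answer is correct on a two-choice task.

    p_a, p_b  -- assumed individual accuracies of partners A and B
    weight_a  -- probability that A's answer wins when the two disagree
    """
    both_right = p_a * p_b                # they agree and are correct
    only_a_right = p_a * (1 - p_b)        # disagreement, A is correct
    only_b_right = (1 - p_a) * p_b        # disagreement, B is correct
    # If both are wrong they agree on the wrong answer, so that case adds nothing.
    return both_right + only_a_right * weight_a + only_b_right * (1 - weight_a)


if __name__ == "__main__":
    # Hypothetical accuracies: A is right 80% of the time, B 60%.
    equal = dyad_accuracy(0.8, 0.6, weight_a=0.5)
    defer = dyad_accuracy(0.8, 0.6, weight_a=1.0)
    print(f"equal weighting: {equal:.2f}")
    print(f"always follow the better performer: {defer:.2f}")
```

Under these assumptions, treating the two opinions as equal on every disagreement leaves the pair performing worse than the better member would alone, which is why the equality bias is costly when the only goal is getting the answer right.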

As always with psychological research there are many factors in play, and a simple interpretation of one study is often not possible. But what does a straightforward interpretation of these results mean?

We may be seeing the Dunning-Kruger effect in groups. The Dunning-Kruger effect reflects a persistent bias in how we assess our own specific competence in an area. The effect is often summarized (usually for the purpose of snark on an internet exchange) as, “dumb people are too dumb to realize how dumb they are.”

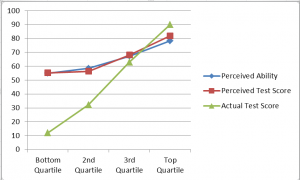

However, the Dunning-Kruger effect does not apply only to specific people; it applies to everyone. We are all at different places on the Dunning-Kruger spectrum for different areas of expertise. Further, the curve shows that the lower one’s expertise, the more one overestimates it, up to around the 75th percentile, above which we tend to underestimate our expertise. The estimate curve is flat compared to the reality curve.

Are we seeing this same effect in the current research but for groups? Both members tended to overvalue the opinions of the lesser-performing partner and undervalue the opinions of the better-performing partner. This cannot be pure Dunning-Kruger, however, because this effect persisted even when both members were given hard data that showed exactly how each member was performing.

This is where it always gets tricky, figuring out why people behaved as they did in a study. The authors conclude that the subjects in their study were displaying an equality bias, which is a straightforward and reasonable interpretation. Even when there is hard data and an incentive to optimize group performance, there was apparently a greater emotional incentive to weight each member’s opinion closer to equal.

This could be a social pressure to get along in groups, which is an established and powerful psychological motivation for humans. The lesser-performing member would feel pressure to maintain their relevance to the group (no one wants to get voted off the island, or be “the weakest link”). The better-performing member would feel the need to get along with the group and maintain group cohesion.

It is also possible that the Dunning-Kruger effect was playing a role. The lesser-performing member, despite hard data, might still overestimate their competence. They may feel they were just unlucky, still getting warmed up, or improving as the game goes on and so cannot be judged entirely by their past performance. The better-performing member may also believe those things about the lesser-performing member, or at least be willing to give them the benefit of the doubt. They might also underestimate their own competence based on their performance, feeling they were lucky so far but their luck might not hold up.

None of these beliefs is entirely rational. It therefore seems that if the goal is to optimize the performance of the group, the reasonable course is to follow the hard data. Why, then, did evolution not optimize our behavior?

Maybe it did, at least for our evolutionary environment. I don’t want to get into hand-waving evolutionary psychology here, but it is possible that group cohesion and everyone feeling confident and valuable is important to the overall functioning of a group. If we optimize individual decisions with cold, hard data, without consideration for the feelings of group members, we may optimize decisions in the short run, but compromise the group in the long run.

There may also be a nurturing aspect to this instinct. Do we always allow the best hunter to throw the spear (or the most experienced doctor to do the procedure), or do we allow less competent members to have a go in order to give them experience in the hopes they will improve (trading short-term optimization for long-term improvement)?

Regardless of the reason for this equality bias, the effect seems to be real and robust. What are the implications and how do we deal with it?

The obvious concern is that the opinion of experts will not be given the proper weight, and the opinion of the uninformed will rule the day. This can be a serious problem for a technological democratic society (as Chris Mooney discusses in his article about this study).

In practice, however, it is difficult to just defer to expertise. In our day-to-day lives it is difficult to always know or assess someone’s expertise. People may also overstate their credentials in order to get an upper hand in a discussion. Disagreements can often degrade into penis-measuring contests, and so taking an egalitarian approach may be a reasonable first approximation.

If the purpose of an exchange is not decision-making but simply the free exchange of ideas, an equality bias may serve a useful purpose. Just think of any comment-section debate you have had or witnessed. Citing one’s expertise does not get you far, nor should it. Rather, make coherent arguments and reference your factual premises. Everyone gets judged on the value of their arguments, not the length of their resume.

Or think of a classroom in which the goal is learning – “everyone’s ideas are equal in the classroom” is not a bad rule of thumb.

However, if three medical professionals are huddled around a gurney in the emergency room faced with an urgent and complex medical situation, deference to the greatest expertise is probably the best approach. If a government committee is trying to decide on policy that is heavily influenced by scientific data, I would hope that data and real expertise would hold more sway than mere opinion.

Conclusion

We can add the equality bias to the long list of biases and heuristics that humans tend to follow. I find the implications of this one, however, to be fairly complex. At least we should be aware of the bias and factor it into our decision-making.

It seems that one lesson from this research is that we need to be flexible and sensitive to context. We cannot always defer to expertise, nor should we never defer to it. We need to understand the context of any given situation. Are we here to exchange ideas, to learn, or to solve an immediate problem? How clear are we as to everyone’s experience and knowledge, and how relevant are they to the decision-making? What are our short-term and long-term goals, and what is their relative importance?

I also think this research suggests that as individuals we should strive to be less sensitive to having our opinions trumped by data. In my experience people often take it personally when they are told they are wrong, even when discussing a hard fact that has nothing to do with opinion. If we were more humble before facts, then we could optimize our short- and long-term goals more effectively.