Aug 17 2020

Adding Sound to AI

It has been fascinating, perhaps especially so as a neuroscientist, to watch the progress being made in artificial intelligence (AI), robotics, and brain-machine interfaces. Our understanding of biological intelligence is progressing in tandem with our attempts to replicate some of the functioning of that intelligence, as well as to interface with it. Neuroscience and AI/robotics inform each other.

Here is another example of that – adding sound to the perception of an AI/robotic system to help it distinguish different objects. Until now, AI object recognition has been almost entirely visual. (I always qualify these statements because I am not aware of every lab in the world that might be working on such things.) Visual object recognition is a good place to start, and it is probably what we think of when we imagine identifying an object ourselves – we look at it and compare it to our mental database of known objects. That is how our visual processing works.

But that is also not the whole story. We tend to underestimate, or simply not be aware of, the extent to which our brains are simultaneously processing multiple sensory modalities to make sense of the world. When we listen to someone talk, for example, our brains also process the movement of their lips in order to make sense of the sounds as language (the McGurk effect, in which what we see a speaker's lips doing changes the speech sound we hear, demonstrates this). When we identify someone else's probable gender, we are strongly influenced by their voice.

As an aside, another specialty that I am interested in because of its attempts to simulate reality is filmmaking. Filmmakers have learned all sorts of tricks over the years to create the illusion, through sound and vision, of whatever they are trying to convey. You can make things look bigger, for example, by slowing them down. Sound effects can also dramatically influence our perception of what is happening on the screen.

So – think again about how you might identify an object in the physical world. You might be satisfied with just looking at it, but you may also pick it up to test its heft. You would probably alter your categorization of the object depending on its weight, or on how the surface feels. You might also tap the object to hear what it sounds like, or shake it to see if there is anything loose or anything inside. We would therefore want our robots, eventually, to have this same capability.

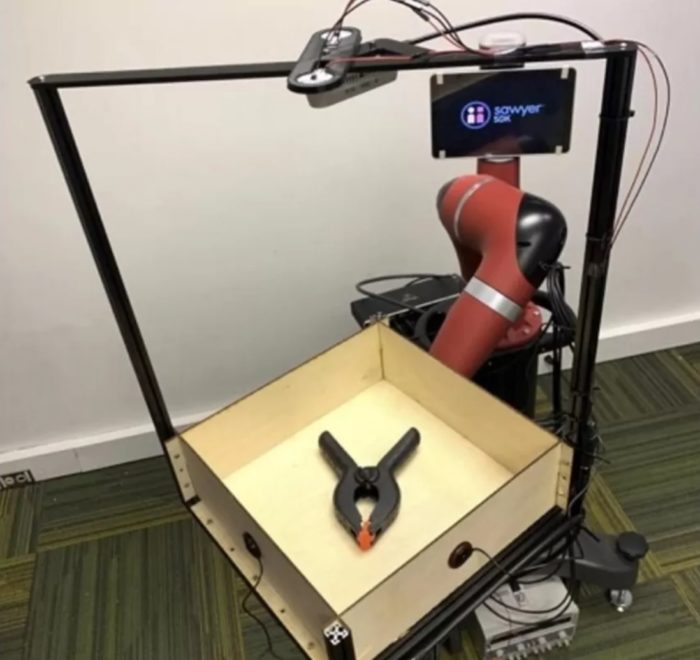

This is the focus of the current study – the researchers used a tilt-bot with a square tray to see and hear objects as they rolled around on the tray and banged into the sides. They created a database of 60 common objects, with 150,000 interactions. They used the database to train an AI to identify the objects, which it was able to do 76% of the time. That may sound only modestly successful, but it is not bad for a first try with a limited database. Think of how many objects and interactions you must have in your own database. The AI was also able to use what it learned to make predictions about previously unseen objects, extrapolating to the rest of the world.

This was a proof-of-concept study that I think proved the concept. Adding sound perception incrementally extends the capabilities of robots, adding to their ability to identify and interact with the world. AI-powered robots equipped with sight, touch, and hearing would likely approach a human-level ability to interact with the world more quickly, even if only within a limited sphere depending on their purpose. Taste and smell are both ways to chemically analyze the world, and we already have machines that can do that.

As with many technologies, we begin by duplicating what already exists. But eventually we will extend beyond that artificial limit. So we are making robots that can see, feel, hear, and smell. (There are other senses as well, such as the vestibular sense, and many subtypes of feeling.) But what other possible senses are there that humans do not possess? We can certainly include other types of sensing devices, like a Geiger counter to detect radiation. We can extend the range of frequencies of light and sound they can detect. Some animals sense magnetic fields, but this ability could be much greater in a machine designed for such detection. Our brains do sense the direction of gravity (and by extension acceleration), but there are likely other ways to sense gravity as well.

Robots of the future may have an array of senses that dwarf human capability. But what is perhaps even more interesting is how AI will learn to make use of these multiple sensory systems. The vertebrate brain evolved the ability to integrate its various sensory inputs, comparing them to each other in real time to build a more complete model of the world and what is happening in it. Our AI, using learning algorithms and perhaps even evolutionary algorithms, will likely develop similar abilities. What interesting combinations of senses will emerge from this? That uncertainty makes it difficult to fully anticipate the capabilities of future robotic AI.