Jun 26, 2025

RFK Jr. is an anti-vaxxer. He will protest that label, but the protest is a dodge. He basically lied (and quite transparently) to the Senate confirmation committee, and I think Cassidy and others knew full well what they were getting when they approved him as HHS secretary. Those of us who have followed RFK’s career as an anti-vaxxer have not been surprised (although we are certainly horrified) as he has set about systematically undermining vaccines in every way within his reach. You can read the saga in great detail at SBM, where we anticipated his moves. Let’s quickly review.

Of course, RFK is not going to say, “I will destroy America’s vaccine infrastructure because I hate all vaccines.” He is doing what he always does: using a pretext. Now he is claiming that he is just supporting “gold standard science.” But this is exactly like science deniers saying that they are just applying healthy skepticism. When it comes to safety data, for example, you can set the bar as arbitrarily high as you want. There is no such thing as 100% certitude in science or medicine, so you can nitpick the evidence base and declare that any medical intervention you don’t like needs more safety data. You can claim that any connection to industry is a fatal conflict of interest, even if it isn’t. You can claim data is too old and needs review, or that public funds are better spent elsewhere. Finding a pretext to undermine vaccines is easy. The pattern, however, is undeniable and was predicted: in every case, RFK’s judgement will come down against vaccines.
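The point that no safety study can deliver 100% certitude can be made concrete with a standard statistical result, the “rule of three”: if a study observes zero adverse events among n people, the 95% upper confidence bound on the true event rate is still about 3/n. The Python sketch below computes the exact binomial bound alongside that approximation. The function name and the sample sizes are illustrative assumptions, not figures from any particular vaccine trial.

```python
def max_plausible_rate(n_subjects: int, confidence: float = 0.95) -> float:
    """Exact one-sided upper confidence bound on an event rate when
    zero events were observed among n_subjects (illustrative helper)."""
    # Solve (1 - p) ** n = 1 - confidence for p: the largest rate that
    # would still produce zero events with probability 1 - confidence.
    return 1.0 - (1.0 - confidence) ** (1.0 / n_subjects)


# Hypothetical study sizes, chosen only to show the trend.
for n in (1_000, 10_000, 100_000, 1_000_000):
    exact = max_plausible_rate(n)
    approx = 3.0 / n  # the "rule of three" approximation at 95% confidence
    print(f"n={n:>9,}: exact bound {exact:.2e}, rule of three {approx:.2e}")
```

However large n gets, the bound shrinks but never reaches zero, which is why a demand for “more safety data” can always be made.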

He has pulled funding for mRNA vaccine research on viruses with pandemic potential, including bird flu. He clawed back funding that had already been awarded for testing, claiming the vaccine needs more testing. He also claimed that other interventions are more promising. This, of course, is the same guy who thinks that vitamin A is more effective than vaccines at preventing measles, which is patently untrue. It’s hard to imagine a better health investment than developing a vaccine to prevent the next pandemic, but here we are.

Jun 06, 2025

First, don’t get too excited: this is a laboratory study, which means that, if all goes well, we are a decade or more from an actual treatment. The study is, however, a nice demonstration of the potential of recent biotechnology, specifically mRNA technology and lipid nanoparticles. We are seeing some real benefits building on decades of basic science research. It is a hopeful sign of the potential of biotechnology to improve our lives. It is also a painful reminder of how much damage the current administration is doing by defunding that very science and the institutions that make it happen.

The study, “Efficient mRNA delivery to resting T cells to reverse HIV latency,” seeks a solution to a particular problem in the treatment of HIV. The virus likes to hide inside white blood cells (CD4+ T cells), where it waits in a latent stage and can reactivate later. These latently infected cells act as a reservoir of virus that can keep the infection going, even in the face of effective anti-HIV drugs and immune attack. This reservoir is part of what makes HIV so difficult to fully eliminate from the body.

We already have drugs that address this issue. They are called, appropriately, latency-reversing agents (LRAs), and include romidepsin, panobinostat, and vorinostat. These drugs inhibit histone deacetylase, an enzyme that helps keep the virus dormant inside white blood cells; by drawing the virus out of hiding, they expose it to anti-HIV drugs and the immune system. So this isn’t a new idea, and there are already effective treatments that make other anti-HIV drugs more effective and keep viral counts very low. But they are not quite effective enough to allow for total elimination of the virus. More effective LRAs, therefore, could be highly beneficial to HIV treatment.

May 06, 2025

The recent discussions about autism have been fascinating, partly because there is a robust neurodiversity community who have very deep, personal, and thoughtful opinions about the whole thing. One of the issues that has come up after we discussed this on the SGU was that of self-diagnosis. Some people in the community are essentially self-diagnosed as being on the autism spectrum. Cara and I both reflexively said this was not a good thing, and then moved on. But some in the community who are self-diagnosed took exception to our dismissiveness. I didn’t even realize this was a point of contention.

Two issues came up: the reasons people feel they need self-diagnosis, and the accuracy of self-diagnosis. The main reason given to support self-diagnosis was the lack of adequate professional services. It can be difficult to find a qualified practitioner, and it can take a long time to get an appointment. Insurance does not cover “mental health” services very well, so getting a professional diagnosis is often simply too expensive for many people to afford. So self-diagnosis is their only practical option.

I get this, and I have been complaining about the lack of mental health services for a long time. The solution here is to increase available services and insurance coverage, not to rely on self-diagnosis. But that will not happen overnight, and may not happen anytime soon, so they have a point. Still, this doesn’t change the unavoidable reality that diagnoses based on neurological and psychological signs and symptoms are extremely difficult, and that self-diagnosis in any area of medicine is fraught with challenges. Let me start by discussing the issues with self-diagnosis generally (not specifically with autism).

I wrote recently about the phenomenon of diagnosis itself. (I do recommend you read that article first, if you haven’t already.) A medical/psychological diagnosis is a complex multifaceted phenomenon. It exists in a specific context and for a specific purpose. Diagnoses can be purely descriptive, based on clinical signs and symptoms, or based on various kinds of biological markers – blood tests, anatomical scans, biopsy findings, functional tests, or genetics. Also, clinical entities are often not discrete, but are fuzzy around the edges, manifest differently in different populations and individuals, and overlap with other diagnoses. Some diagnoses are just placeholders for things we don’t understand. There are also generic categorization issues, like lumping vs splitting (do we use big umbrella diagnoses or split every small difference up into its own diagnosis?).

Apr 29, 2025

In my previous post I wrote about how we think about and talk about autism spectrum disorder (ASD), and how RFK Jr. misunderstands and exploits this complexity to weave his anti-vaccine crank narrative. There is also another challenge in the conversation about autism, one that exists for many diagnoses: how do we talk about it in a way that is scientifically accurate and useful, yet not needlessly stigmatizing or negative? A recent NYT op-ed by a parent of a child with profound autism had this to say:

“Many advocacy groups focus so much on acceptance, inclusion and celebrating neurodiversity that it can feel as if they are avoiding uncomfortable truths about children like mine. Parents are encouraged not to use words like “severe,” “profound” or even “Level 3” to describe our child’s autism; we’re told those terms are stigmatizing and we should instead speak of “high support needs.” A Harvard-affiliated research center halted a panel on autism awareness in 2022 after students claimed that the panel’s language about treating autism was “toxic.” A student petition circulated on Change.org said that autism ‘is not an illness or disease and, most importantly, it is not inherently negative.'”

I’m afraid there is no clean answer here; there are just tradeoffs. Let’s look at this question (essentially, how do we label ASD?) from two basic perspectives, scientific and cultural. You may think that a purely scientific approach would be easier and result in a clear answer, but that is not the case. While science strives to be objective, the universe is really complex, and our attempts at making it understandable and manageable through categorization involve subjective choices and tradeoffs. As a physician I have had to become comfortable with this reality. Diagnoses are often squirrelly things.

When the profession creates or modifies a diagnosis, this is really a type of categorization. There are different criteria that we could potentially use to define a diagnostic label or category. We could use clinical criteria: what are the signs, symptoms, demographics, and natural history of the diagnosis in question? This is often where diagnoses begin their lives, as a pure description of what is being seen in the clinic. Clinical entities almost always present as a range of characteristics, because people are different and even specific diseases will manifest differently. The question then becomes: are we looking at one disease, multiple diseases, variations on a theme, or completely different processes that just overlap in the signs and symptoms they cause? This leads to the infamous “lumper vs splitter” debate: do we tend to lump similar entities together in big categories or split everything up into very specific entities, based on even tiny differences?

Jul 02, 2024

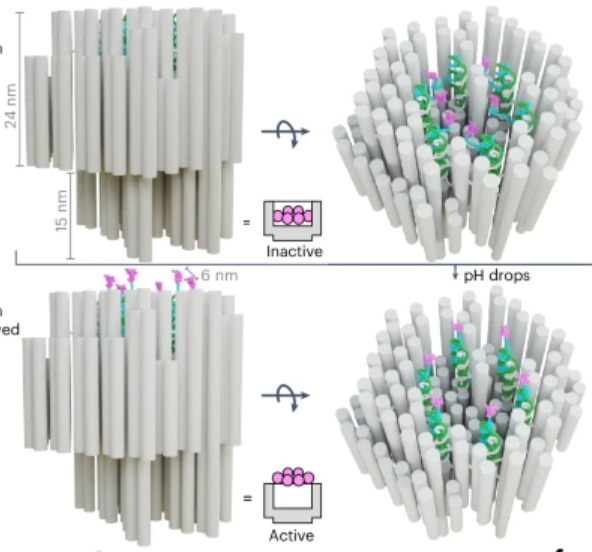

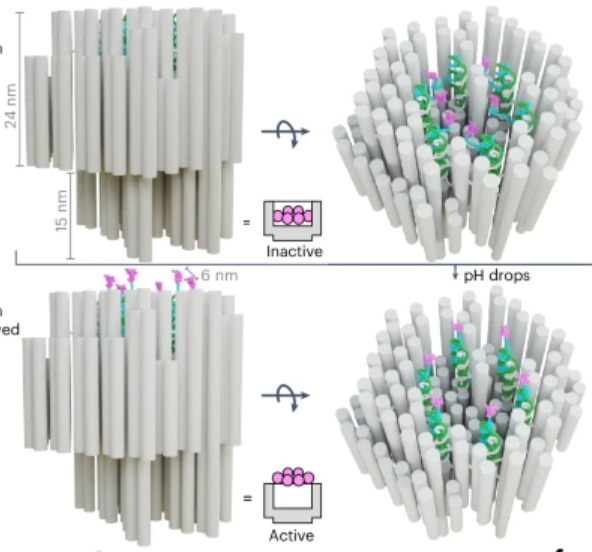

How’s that for a provocative title? But it is technically accurate. The title of the paper in question is: “A DNA robotic switch with regulated autonomous display of cytotoxic ligand nanopatterns.” The study is a proof of concept in an animal model, so we are still years away from a human treatment (if all goes well), but the tech is cool.

First, we start with what is called “DNA origami”: sequences of DNA that fold up into specific shapes. In this case the DNA origami is used to create a nanoscale “robot” that serves as a delivery mechanism for the kill switch. The kill switch is quite literal: a ligand of 6 amino acids that binds to “death receptors” (DRs). DRs exist on all healthy cells, but when they are sufficiently clustered on the surface of a cell, they trigger apoptosis, which is programmed cell death (a death switch).

The DNA origami robot has six such ligands arranged in a hexagonal pattern on the interior of its structure. The DNA origami holds them at the precise spacing and arrangement needed to trigger apoptosis effectively. When the robot opens up, it exposes these ligands, which can then bind to death receptors on a cell’s surface and trigger apoptosis. The researchers have managed to create a DNA robot that remains closed at normal body pH but opens up in an acidic environment.

Mar 25, 2024

On March 16 surgeons transplanted a kidney taken from a pig into a human recipient, Rick Slayman. So far the transplant is a success, but of course the real test will be how well the kidney functions and for how long. This is the first time such a transplant has been done in a living recipient; previous experimental pig kidney transplants were done on brain-dead patients.

This approach to essentially “growing organs” for transplant into humans has, in my opinion, the most potential. There are currently over 100,000 people on the US transplant waiting list, and many of them will die while waiting. There are not enough organs to go around. If we could somehow manufacture organs, especially ones that have a low risk of immune rejection, that would be a huge medical breakthrough. Currently there are several options.

One is to essentially construct a new organ. Attempts are already underway to 3D print organs from stem cells, which can be taken from the intended recipient. This requires a “scaffold”, which is the connective tissue left over from an organ whose cells have been stripped off. So you still need, for example, a donor heart. You then strip that heart of cells and 3D print new heart cells onto what’s left to create a new heart. This is tricky technology, and I am not confident it will even work.

Another option is to grow the organs ex vivo, in a tank of some kind, from stem cells taken from the intended recipient. The advantage here is that the organ can potentially be a perfect new organ, entirely human and with the recipient’s genetics, so there are no issues with rejection. One limitation is that it takes time, although considering that people often spend years on the transplant waiting list, this could still be an option for some. The bigger problem is that we don’t currently have the technology to do this.

Feb 16, 2024

I was recently asked what I thought about the Solex AO Scan. The website for the product includes this claim:

AO Scan Technology by Solex is an elegant, yet simple-to-use frequency technology based on Tesla, Einstein, and other prominent scientists’ discoveries. It uses delicate bio-frequencies and electromagnetic signals to communicate with the body.

The AO Scan Technology contains a library of over 170,000 unique Blueprint Frequencies and created a hand-held technology that allows you to compare your personal frequencies to these Blueprints in order to help you achieve homeostasis, the body’s natural state of balance.

This is all hogwash (to use the technical term). Throwing out the names Tesla and Einstein right off the bat is a huge red flag. A good rule of thumb: whenever these names (or Galileo’s) are invoked to hawk a product, it is most likely a scam. I guess you could say that any electrical device is based on the work of any scientist who had anything to do with electromagnetism.

Sep 21, 2023

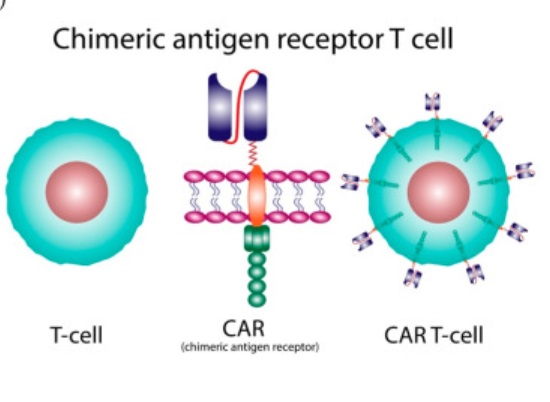

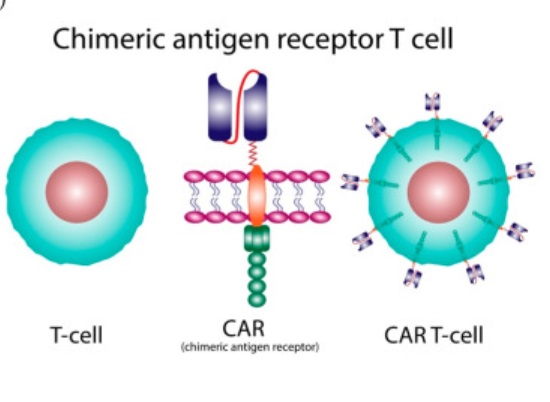

There is a recent medical advance that you may not have heard about unless you are a healthcare professional or have encountered it from the patient side: CAR-T cell therapy. A recent study shows the potential for continued incremental advances in this technology, but it is already a powerful treatment.

Like all technologies, this one has roots that go back pretty far. In 1960 it was first discovered that immune cells protect against cancer. In 1986 tumor-infiltrating lymphocytes were used to attack cancer cells. In 1993 the first generation of genetically modified T-cells using the chimeric antigen receptor (CAR) technique was developed. This technique fuses the business end of an antibody to a receptor on a T-cell (hence a chimera), which potentially targets that T-cell against whatever antigen the antibody targets. So you can make antibodies against a protein unique to a specific type of cancer, fuse part of that antibody to T-cell receptors, put those T-cells back into the patient, and they will potentially attack the cancer cells. These first-generation CAR-T cells were not effective clinically because they did not survive long enough in the body to work.

In 1998 it was discovered that adding a costimulatory domain (CD28) to the CAR allowed it to persist longer in the body, creating the potential for clinical treatment. In 2002 this technique was used to develop second-generation CAR-T cells that were shown for the first time to fight cancer in mice (the first study was of prostate cancer). Then in 2003 CAR-T cells targeting CD19, a protein found on B cells, were developed and found to be more effective. In 2013 the first clinical trial in humans showing efficacy of CAR-T therapy in cancer (leukemia) was published, starting the era of using CAR-T therapy to treat blood cancers. The first CAR-T-based treatment was approved by the FDA in 2017.
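The chimera idea can be captured in a deliberately simplified Python toy model, offered as an illustration rather than a description of any real lab software. In it, the only thing that decides which cells the engineered T-cell is directed against is the antibody-derived targeting part, while the presence or absence of a costimulatory domain (such as CD28) is what the history above uses to separate first- from second-generation CARs. The class, the function, and the antigen name `TUMOR_ANTIGEN_X` are hypothetical, and real receptor signaling is far more complex than a set-membership check.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class ChimericAntigenReceptor:
    """Hypothetical toy model: an antibody-derived targeting part fused
    to T-cell signaling machinery."""
    target_antigen: str                  # what the antibody-derived part binds
    costimulatory_domain: Optional[str]  # None models a first-generation CAR

    @property
    def generation(self) -> int:
        # First-generation CARs lacked a costimulatory domain; adding one
        # (e.g. CD28) is what made the second generation persist longer.
        return 1 if self.costimulatory_domain is None else 2


def recognizes(car: ChimericAntigenReceptor, surface_antigens: Set[str]) -> bool:
    """The T-cell is directed at any cell displaying the CAR's target antigen."""
    return car.target_antigen in surface_antigens


car = ChimericAntigenReceptor("TUMOR_ANTIGEN_X", costimulatory_domain="CD28")
print(car.generation)                                  # → 2
print(recognizes(car, {"TUMOR_ANTIGEN_X", "OTHER"}))   # → True
print(recognizes(car, {"OTHER"}))                      # → False
```

Swapping the target antigen changes which cells are attacked without touching the signaling parts, which is the whole appeal of the chimeric design.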

Over the last 8 years CAR-T therapy has become a standard treatment for blood-borne cancers (lymphoma and acute lymphoblastic leukemia). T-cells are taken from patients with cancer, genetically modified into CAR-T cells, multiplied to make lots of them, and then given back to the patient to fight their cancer. In the last decade scientific advances have continued, with research targeting other proteins to potentially fight solid tumors. In 2017 scientists started using CRISPR technology to make their CAR-T cells, allowing greater control and improved function.

Aug 24, 2023

Japan is planning to release treated radioactive water from the Fukushima nuclear accident into the ocean. Japanese authorities claim this will be completely safe, but there are protests in both Japan and South Korea, and China has just banned seafood from Japan. In a perfect world we would just have a calm and transparent discussion about the relevant scientific facts, make a reasonable decision, and go forward without any drama. Of course, that is not the world we live in. But let’s pretend it is: what are the relevant facts?

In 2011 a tsunami (and poor safety decisions) caused several reactors at the Fukushima Daiichi nuclear power plant to melt down. These reactors were flooded with water to cool them, and heat from continued radioactive decay means they need to be cooled continuously. The water used has become contaminated with 64 different radioactive isotopes. Over the past 12 years, 350 million gallons of contaminated water have been stored in over 1,000 tanks on site, but the plant is simply running out of room, which is why there is urgency to do something with the stored water. How unsafe is this water?

Over the last 12 years the isotopes with short half-lives have lost most of their radioactivity, but some long half-life isotopes remain. This is good news, because the shorter the half-life, the more intense the radioactivity per unit mass, by definition. Really long half-life isotopes, like carbon-14 (half-life about 5,730 years), have much lower intensity. Also, the contaminated water has been treated with several processes, such as filtration and sedimentation. These processes have removed most of the remaining radioactive isotopes (to levels below acceptable limits), although carbon-14 and tritium remain. How much radioactivity is left in this contaminated but treated water? That is the key question.
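The half-life reasoning above can be checked with two textbook relationships: the fraction of a radioisotope left after time t is (1/2)^(t / t_half), and the activity per gram of a pure isotope is ln(2) × N_A / (t_half × M), where N_A is Avogadro’s number and M is the molar mass. The Python sketch below applies both over the 12 years mentioned in the text. The half-lives and molar masses are approximate reference values supplied for illustration, and the two cesium isotopes are examples of shorter-lived contaminants added here, not figures from this post or from the Fukushima treatment data.

```python
import math

AVOGADRO = 6.02214076e23             # atoms per mole
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Approximate half-lives (years) and molar masses (g/mol), for illustration.
ISOTOPES = {
    "cesium-134": (2.065, 134.0),
    "tritium (H-3)": (12.32, 3.016),
    "cesium-137": (30.17, 137.0),
    "carbon-14": (5730.0, 14.0),
}


def fraction_remaining(years: float, half_life_years: float) -> float:
    """Fraction of the original atoms (and hence activity) left after `years`."""
    return 0.5 ** (years / half_life_years)


def specific_activity_bq_per_g(half_life_years: float, molar_mass: float) -> float:
    """Decays per second (Bq) per gram of pure isotope: ln(2) * N_A / (t_half * M)."""
    half_life_s = half_life_years * SECONDS_PER_YEAR
    return math.log(2) * AVOGADRO / (half_life_s * molar_mass)


for name, (t_half, mass) in ISOTOPES.items():
    left = fraction_remaining(12, t_half)
    activity = specific_activity_bq_per_g(t_half, mass)
    print(f"{name:>14}: {left:6.1%} left after 12 years, {activity:.2e} Bq/g")
```

With these values, gram for gram, tritium comes out more than two thousand times as radioactive as carbon-14, mostly because of its far shorter half-life (its lower atomic mass contributes a smaller factor). This alone says nothing about how much of each isotope is actually in the treated water, which is the key question.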

Aug 10, 2023

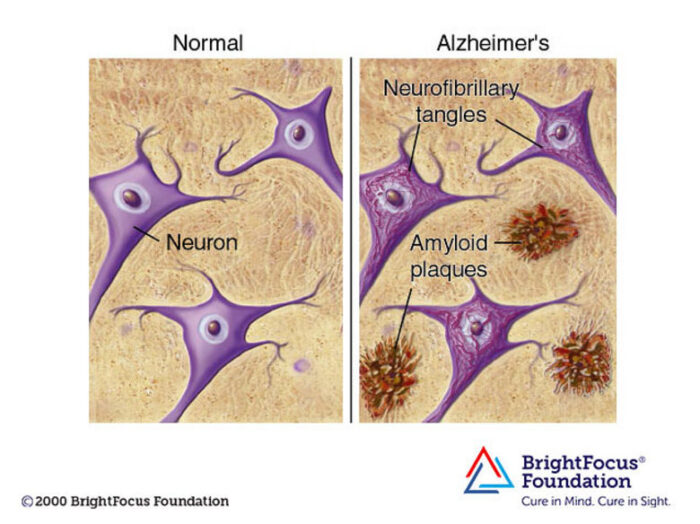

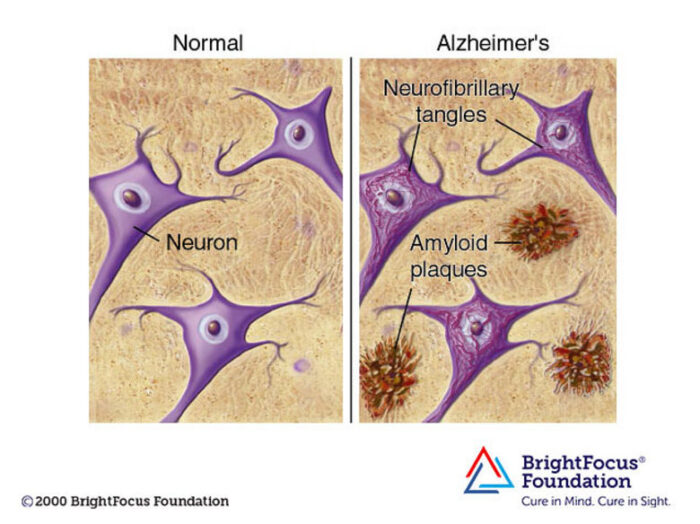

Decades of complex research, and of persevering through repeated disappointment, appear to be finally paying off for the diagnosis and treatment of Alzheimer’s disease (AD). In 2021 Aduhelm became the first potentially disease-modifying drug for AD approved by the FDA (granted contingent accelerated approval). This year two additional drugs received FDA approval. All three drugs are monoclonal antibodies that target amyloid protein. Each seems to have a modest overall clinical effect, but they are the first drugs to actually slow the progression of AD, which represents important confirmation of the amyloid hypothesis. Until now, attempts to slow the disease by targeting amyloid had failed.

Three drugs in as many years is no coincidence; this is the result of decades of research into a very complex disease, combined with monoclonal antibody technology coming into its own as a therapeutic option. AD is a form of dementia, a chronic degenerative brain disease that causes the slow loss of cognitive function and memory over years. There are over 6 million people in the US alone with AD, and it represents a massive health care burden. More than 10% of the population over 65 has AD.

Experts attribute our rapid crossing of the threshold to a detectable clinical effect to two main factors: treating people earlier in the disease, and giving more aggressive treatment (essentially pushing dosing to a higher level). The higher dosing comes with the downside of significant side effects, including brain swelling and bleeding, but that is what it took to show even a modest clinical benefit. And the fact that three drugs, which target different aspects of amyloid protein, show promising or demonstrated clinical benefit helps confirm that amyloid protein and the plaques it forms in the brain are, to some extent, driving AD. They are not just a marker of brain cell damage; they are at least partly responsible for that damage. Until now, this was not clear.
