Jul 14 2023

Magnetohydrodynamic Drive – Silent Water Propulsion

DARPA, the US Defense Advanced Research Projects Agency, is now working on developing a magnet-driven silent water propulsion system – the magnetohydrodynamic (MHD) drive. The primary reason is to develop silent military naval craft. Imagine a nuclear submarine with an MHD drive, without moving parts, that can slice through the water silently. No moving parts also means much less maintenance (a bonus I can attest to, owning a fully electric vehicle).


But don’t be distracted by the obvious military application – if DARPA research leads to a successful MHD drive, there are implications beyond the military, and there are a lot of interesting elements to this story. Let’s start, however, with the technology itself. How does an MHD drive work?

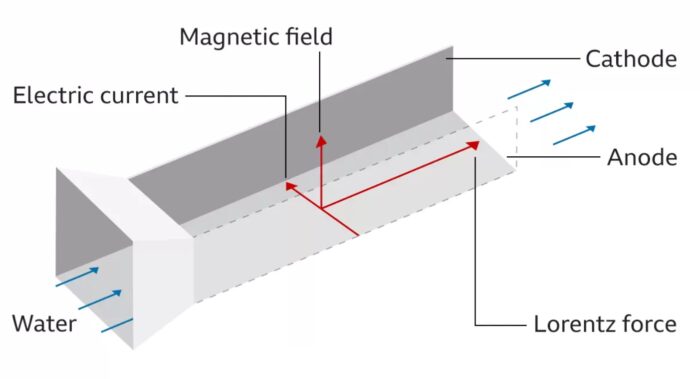

The drive was first imagined in the 1960s. That’s generic technology lesson #1 – technology often has deeper roots than you imagine, because development often takes a lot longer than initial hype would suggest. In 1992 Japan built the Yamato-1, a prototype ship with a working MHD drive. It was an important proof of concept, but was not practical. Even more than 30 years later, we are not there yet. The drive works by placing powerful magnetic fields at right angles to an electrical current passed through the water, producing a Lorentz force. A charged particle moving through a magnetic field feels a force at right angles both to its direction of motion and to the field. Salt water contains charged ions, so the ions carrying the current feel this Lorentz force and drag the surrounding water along with them. Therefore, if the magnetic and electrical fields are arranged properly, they push water toward the back of the ship, providing propulsion.
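To make the geometry concrete, here is a minimal Python sketch of the force on the water, assuming a uniform magnetic field B and a uniform current density J crossing the channel at right angles to it. The force per unit volume is f = J × B, and for a current I crossing an electrode gap d the total force is F = I * d * B. The function names and sample numbers are illustrative assumptions, not figures from DARPA or the Yamato-1.

```python
# Minimal sketch of MHD thrust, assuming a uniform field and uniform current.
# All names and numbers here are illustrative, not taken from any real design.

Vector = tuple[float, float, float]


def cross(a: Vector, b: Vector) -> Vector:
    """Cross product a x b of two 3-vectors."""
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by,
            az * bx - ax * bz,
            ax * by - ay * bx)


def lorentz_force_density(j: Vector, b: Vector) -> Vector:
    """Body force per unit volume on the seawater, f = J x B, in N/m^3.

    j: current density in A/m^2; b: magnetic flux density in tesla.
    """
    return cross(j, b)


def channel_thrust(current_a: float, electrode_gap_m: float, field_t: float) -> float:
    """Force magnitude in newtons when a current I crosses a gap d
    perpendicular to a uniform field B: F = I * d * B."""
    return current_a * electrode_gap_m * field_t


if __name__ == "__main__":
    # Current flows along +y between side-wall electrodes and the field points
    # along +z, so the force on the water points along +x (down the channel).
    print(lorentz_force_density((0.0, 2000.0, 0.0), (0.0, 0.0, 4.0)))
    # Hypothetical example: 1000 A across a 0.5 m gap in a 4 T field.
    print(channel_thrust(1000.0, 0.5, 4.0), "N")
```

With the current running side to side and the field running top to bottom, the force lands along the channel axis, which is why the channel runs front to back: the water is pushed astern and the ship, by reaction, moves forward.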

Sounds pretty straightforward, so what’s the holdup? Well, there are several. The most important aspect of the Yamato-1 is that it provided great insight into all the technical hurdles for this technology. The first is that the MHD drive is horribly energy-inefficient, which made it very expensive to operate. What was mainly needed to improve efficiency was more powerful and more efficient magnets. Here we get to generic technology lesson #2 – basic technology developed for one application may have other or even greater utility for other applications. In this case the MHD drive is partly benefiting from the fusion energy industry, which requires powerful, efficient magnets. We can take those same magnet innovations and apply them to MHD drives, making them energy- and cost-effective.
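One way to see why stronger magnets matter: thrust scales with I * d * B, so for a fixed thrust the required current falls as 1/B, and the resistive (Joule) heating of the seawater, I²R, falls as 1/B². The sketch below assumes a simple rectangular channel and a seawater conductivity of about 5 S/m (a round-number assumption; real values vary with temperature and salinity), and it ignores every loss except ohmic heating, so it shows a trend rather than a design figure.

```python
# Rough sketch: ohmic loss in the seawater for a fixed thrust, ignoring all
# other losses (magnet power, electrode overpotential, bubbles, hydrodynamics).
# Channel geometry and conductivity are assumptions chosen for illustration.

SEAWATER_CONDUCTIVITY_S_PER_M = 5.0  # assumed round number


def required_current(thrust_n: float, electrode_gap_m: float, field_t: float) -> float:
    """Current needed for a given thrust, from F = I * d * B."""
    return thrust_n / (electrode_gap_m * field_t)


def seawater_resistance(electrode_gap_m: float, electrode_area_m2: float,
                        conductivity: float = SEAWATER_CONDUCTIVITY_S_PER_M) -> float:
    """Resistance of the water between two parallel electrodes: R = d / (sigma * A)."""
    return electrode_gap_m / (conductivity * electrode_area_m2)


def ohmic_loss_w(thrust_n: float, electrode_gap_m: float, electrode_area_m2: float,
                 field_t: float) -> float:
    """Joule heating I^2 * R dissipated in the water to produce the given thrust."""
    current = required_current(thrust_n, electrode_gap_m, field_t)
    return current ** 2 * seawater_resistance(electrode_gap_m, electrode_area_m2)


if __name__ == "__main__":
    for b in (1.0, 2.0, 4.0, 8.0):
        loss = ohmic_loss_w(thrust_n=1000.0, electrode_gap_m=0.5,
                            electrode_area_m2=1.0, field_t=b)
        print(f"B = {b:.0f} T -> ohmic loss ~ {loss / 1000:.1f} kW")
```

In this simple model, doubling the field cuts the ohmic loss by a factor of four for the same thrust, which is one reason advances in high-field magnets matter so much for MHD drives.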

But there is still one major and one minor problem remaining. The major problem is the electrodes and electronics necessary to generate the electrical current. Electronics and salt water don’t mix – salt water is highly corrosive, more so when exposed to magnetic fields and electrical current. We therefore need to develop highly corrosion-resistant electrodes. Fortunately, such development is already underway in the battery industry, which also needs robust electrodes. Apparently we are not there yet when it comes to MHD, and that will be a major focus of DARPA research.

There is also the minor problem of the electrodes electrolyzing the salt water, creating bubbles of hydrogen and oxygen. This reduces the efficiency of the system – not a deal-killer, but it would be nice to reduce this effect. I immediately wondered if the gases could be captured somehow, both solving the problem and producing green hydrogen as a by-product of shipping. In any case, that’s problem #2 for DARPA to solve.
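How much gas are we talking about? Faraday’s law of electrolysis gives an upper bound: each H₂ molecule formed at the cathode takes two electrons, so a current I can produce at most I / (2F) moles of hydrogen per second, where F is the Faraday constant. The sketch below assumes every electron goes into making hydrogen (100% Faradaic efficiency), which real electrodes will not achieve, and the currents in the example loop are hypothetical.

```python
# Upper-bound estimate of hydrogen produced at the cathode of an MHD channel,
# assuming 100% Faradaic efficiency (every electron reduces water to H2).

FARADAY_C_PER_MOL = 96485.33212  # Faraday constant, coulombs per mole of electrons
H2_MOLAR_MASS_G_PER_MOL = 2.016
ELECTRONS_PER_H2 = 2  # cathode reaction: 2 H2O + 2 e- -> H2 + 2 OH-


def hydrogen_mol_per_s(current_a: float) -> float:
    """Moles of H2 per second for a given electrode current in amperes."""
    return current_a / (ELECTRONS_PER_H2 * FARADAY_C_PER_MOL)


def hydrogen_kg_per_hour(current_a: float) -> float:
    """Mass of H2 per hour, in kilograms."""
    grams_per_s = hydrogen_mol_per_s(current_a) * H2_MOLAR_MASS_G_PER_MOL
    return grams_per_s * 3600 / 1000


if __name__ == "__main__":
    for amps in (100.0, 1000.0, 10000.0):  # hypothetical drive currents
        print(f"{amps:>8.0f} A -> at most {hydrogen_kg_per_hour(amps):.3f} kg H2/hour")
```

Because the yield scales linearly with current, the amount of gas, and therefore both the bubble problem and any capturable hydrogen, grows in direct proportion to how hard the drive is pushed.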

If all goes well, we are probably still 10-20 years (or more) away from working MHD drives on ships. The military applications will probably come first. I hope the military doesn’t hog the technology, which it might in order to maintain its technological dominance, but the civilian applications could be huge. The noise generated by shipping has massive negative consequences for marine life, especially whales and other cetaceans, which rely on long-distance sound to communicate with each other, to navigate, and to migrate. Propellers churning up water is also an ecological problem. If it ever becomes cost-effective enough, a working MHD drive could revolutionize ocean travel and shipping. Electrifying ocean propulsion could also help reduce greenhouse gas (GHG) emissions.

Plus, there might be other downstream benefits from the DARPA research. Those robust, corrosion-resistant electrodes will likely have many applications. They may feed back into battery technology. They may also lead to better electrodes for brain-machine interfaces. This reminds me of the book and TV series Connections, by James Burke. This is a brilliant series I have not seen in a while and should probably watch again. It traces long chains of technological developments, from one application to the next, showing how extensively technologies cross-fertilize. A need in one area leads to an advance that makes a completely different application feasible – and so on and so on. I guess that’s generic technology lesson #3.

DARPA has a solid history of accelerating specific technologies in order to bring new industries to fruition more quickly. Hopefully they will be successful here as well. The downstream benefits of an MHD drive could be significant, with spin-off benefits to many industries.

This is pretty exciting neuroscience news –

This is pretty exciting neuroscience news – This is definitely a “you got chocolate in my peanut butter” type of advance, because it combines two emerging technologies to create a potential significant advance.

This is definitely a “you got chocolate in my peanut butter” type of advance, because it combines two emerging technologies to create a potential significant advance.  Rapidly advancing computer technology has greatly enhanced our lives and had ripple effects throughout many industries. I essentially lived through the computer and internet revolution, and in fact each stage of my life is marked by the state of computer technology at that time. You can also easily date movies in a contemporary setting by the computer and cell phone technology in use. But one downside to rapid advance is so-called orphaned technology. You may, for example use a piece of software that you know really well and feel is the perfect compromise of usability and functionality. Upgrades may be too expensive for you, or simply not desired. But at some point the company stops supporting the software, because they have moved on to later versions and would rather just have their customers upgrade. Without upgrades the software slowly becomes unusable – vulnerable to hacks and not compatible with other software and hardware.

Rapidly advancing computer technology has greatly enhanced our lives and had ripple effects throughout many industries. I essentially lived through the computer and internet revolution, and in fact each stage of my life is marked by the state of computer technology at that time. You can also easily date movies in a contemporary setting by the computer and cell phone technology in use. But one downside to rapid advance is so-called orphaned technology. You may, for example use a piece of software that you know really well and feel is the perfect compromise of usability and functionality. Upgrades may be too expensive for you, or simply not desired. But at some point the company stops supporting the software, because they have moved on to later versions and would rather just have their customers upgrade. Without upgrades the software slowly becomes unusable – vulnerable to hacks and not compatible with other software and hardware. The term “bionics” was coined by Jack E. Steele in August 1958. It is a portmanteau of biologic and electronic. Martin Caidin used the word in his 1972 novel, Cyborg (which is another portmanteau of cybernetic organism). But the term really became popularized in the 1970s TV show, The Six Million Dollar Man. Of course, at the time bionic limbs seemed futuristic, perhaps something we would see in a few decades. Thirty years always feels like far enough in the future that any imagined technology should be ready by then. But here we are, almost 50 years later, and we are nowhere near the technology Steve Austin was sporting. Bionics, as depicted, was more like 100 or more years premature. This is tech more appropriate to Luke Skywalker’s hand in Star Wars, rather than some secret government project in the 1970s.

The term “bionics” was coined by Jack E. Steele in August 1958. It is a portmanteau of biologic and electronic. Martin Caidin used the word in his 1972 novel, Cyborg (which is another portmanteau of cybernetic organism). But the term really became popularized in the 1970s TV show, The Six Million Dollar Man. Of course, at the time bionic limbs seemed futuristic, perhaps something we would see in a few decades. Thirty years always feels like far enough in the future that any imagined technology should be ready by then. But here we are, almost 50 years later, and we are nowhere near the technology Steve Austin was sporting. Bionics, as depicted, was more like 100 or more years premature. This is tech more appropriate to Luke Skywalker’s hand in Star Wars, rather than some secret government project in the 1970s. Imagine having an extra arm, or an extra thumb on one hand, or even a tail, and imagine that it felt like a natural part of your body and you could control it easily and dexterously. How plausible is this type of robotic augmentation? Could “Doc Oc” really exist?

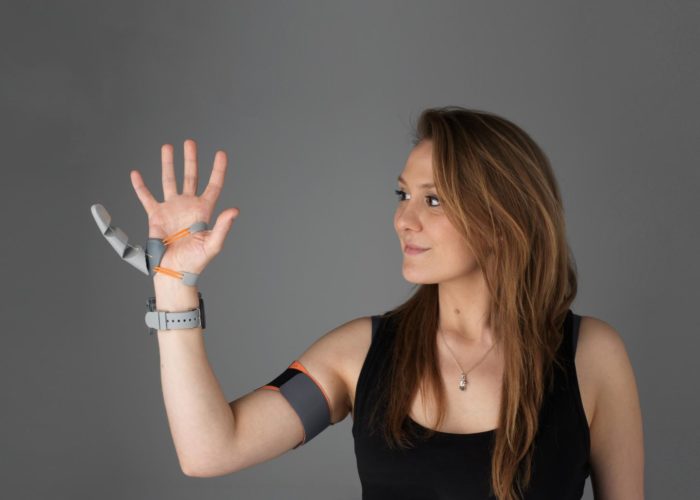

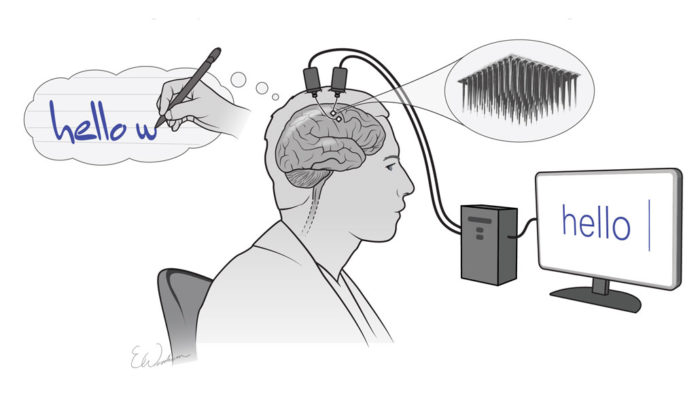

Imagine having an extra arm, or an extra thumb on one hand, or even a tail, and imagine that it felt like a natural part of your body and you could control it easily and dexterously. How plausible is this type of robotic augmentation? Could “Doc Oc” really exist? We have another incremental advance with brain-machine interface technology, and one with practical applications.

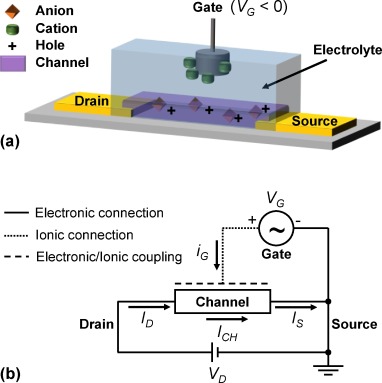

We have another incremental advance with brain-machine interface technology, and one with practical applications.  The title of this post should be provocative, if you think about it for a minute. For “organic” read flexible, soft, and biocompatible. An electrochemical synapse is essentially how mammalian brains work. So far we can be talking about a biological brain, but the last word, “transistor”, implies we are talking about a computer. This technology may represent the next step in artificial intelligence, developing a transistor that more closely resembles the functioning of the brain.

The title of this post should be provocative, if you think about it for a minute. For “organic” read flexible, soft, and biocompatible. An electrochemical synapse is essentially how mammalian brains work. So far we can be talking about a biological brain, but the last word, “transistor”, implies we are talking about a computer. This technology may represent the next step in artificial intelligence, developing a transistor that more closely resembles the functioning of the brain. Some terms created for science fiction eventually are adopted when the technology they anticipate comes to pass. In this case, we can thank The Six Million Dollar Man for popularizing the term “bionic” which was originally coined by Jack E. Steele in August 1958. The term is a portmanteau of biological and electronic, plus it just sounds cools and does roll off the tongue, so it’s a keeper. So while there are more technical terms for an artificial electronic eye, such as “biomimetic”, the press has almost entirely used the term “bionic”.

Some terms created for science fiction eventually are adopted when the technology they anticipate comes to pass. In this case, we can thank The Six Million Dollar Man for popularizing the term “bionic” which was originally coined by Jack E. Steele in August 1958. The term is a portmanteau of biological and electronic, plus it just sounds cools and does roll off the tongue, so it’s a keeper. So while there are more technical terms for an artificial electronic eye, such as “biomimetic”, the press has almost entirely used the term “bionic”. Three days ago Elon Musk revealed an

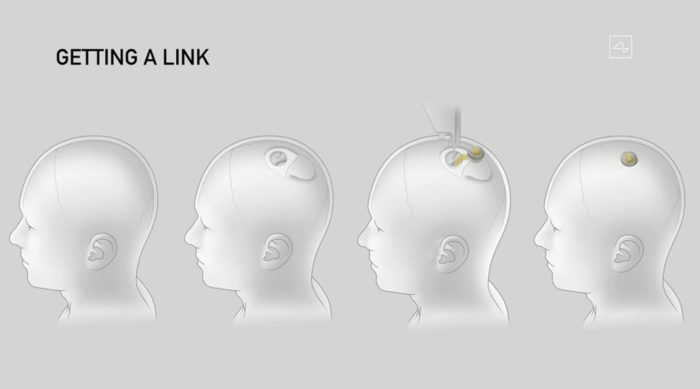

Three days ago Elon Musk revealed an