May 02 2023

Reading The Mind with fMRI and AI

This is pretty exciting neuroscience news: "Semantic reconstruction of continuous language from non-invasive brain recordings." What this means is that researchers have been able to, sort of, decode the words that subjects were thinking of simply by reading their fMRI scans. They accomplished this feat using a large language model, specifically GPT-1, an early predecessor of the model behind ChatGPT. It’s a great example of how these AI systems can be leveraged to aid research.

This is the latest advance in an overall research goal of figuring out how to read brain activity and translate that activity into actual thoughts. Researchers started by picking some low-hanging fruit – determining what image a person was looking at by reading the pattern of activity in their visual cortex. This is relatively easy because the visual cortex actually maps to physical space, so if someone is looking at a giant letter E, that pattern of activity will appear in the cortex as well.

Moving to language has been tricky, because there is no physical mapping going on, just conceptual mapping. Efforts so far have relied upon high-resolution EEG data from implanted electrodes. This research has also focused on single words or phrases, often trying to pick one from among several known targets. This latest research represents three significant advances. The first is the use of a non-invasive technique, fMRI, to get the data. The second is inferring full sentences and ideas, not just words. And the third is that the targets were open-ended, not picked from a limited set of choices. But let’s dig into some details, which are important.

An fMRI scan looks at blood flow to the brain, which is an indirect measure of brain activity. The human brain is a hungry organ, and when part of the brain is active it needs a stream of glucose to feed the brain cells. This results in an increase in blood flow to that part of the brain, which typically increases, peaks, and then returns to baseline over about 10 seconds. Current fMRI scans are able to generate images of blood flow to the brain at fairly high resolution. But there is nothing they can do about the delay, which is inherent to the physiology. There is also a lot of background noise in the system, and picking any meaningful signal out of that noise can be challenging.
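To make the delay concrete, here is a minimal sketch of how a sluggish blood-flow response smears fast speech together. It assumes the double-gamma hemodynamic response function that is commonly used in fMRI analysis (the shape parameters 6 and 16 and the 1/6 undershoot ratio are a conventional choice, not values from this study) and a hypothetical speech rate of two words per second. The names `hrf`, `convolve`, `DT`, and the 1e-3 contribution threshold are illustrative assumptions.

```python
import math


def hrf(t: float, peak_shape: float = 6.0, undershoot_shape: float = 16.0,
        undershoot_ratio: float = 1.0 / 6.0) -> float:
    """Double-gamma hemodynamic response (a common approximation, assumed here).

    The first gamma term models the rise and peak in blood flow; the second,
    smaller term models the dip below baseline that follows it.
    """
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    undershoot = t ** (undershoot_shape - 1) * math.exp(-t) / math.gamma(undershoot_shape)
    return peak - undershoot_ratio * undershoot


def convolve(stimulus: list[float], kernel: list[float]) -> list[float]:
    """Causal discrete convolution: out[n] = sum over k of stimulus[k] * kernel[n - k]."""
    out = [0.0] * len(stimulus)
    for k, s in enumerate(stimulus):
        if s == 0.0:
            continue
        for n in range(k, len(stimulus)):
            out[n] += s * kernel[n - k]
    return out


DT = 0.1                               # seconds per sample (hypothetical)
times = [i * DT for i in range(300)]   # 30 s of simulated signal
kernel = [hrf(t) for t in times]

# A single word heard at t = 0 s: the response is just the kernel itself.
single_word = [0.0] * len(times)
single_word[0] = 1.0
response = convolve(single_word, kernel)
peak_time = times[max(range(len(response)), key=response.__getitem__)]

# Hypothetical speech: one word every 0.5 s for the first 5 s (10 words).
onsets = list(range(0, 50, 5))         # sample indices of word onsets
speech = [0.0] * len(times)
for i in onsets:
    speech[i] = 1.0
bold = convolve(speech, kernel)        # the summed, blurred signal a scanner would see

# Which words still contribute (above an arbitrary threshold) to the sample at t = 6 s?
SAMPLE = 60
contributing = [times[i] for i in onsets if kernel[SAMPLE - i] > 1e-3]

print(f"single-word response peaks at ~{peak_time:.1f} s")
print(f"{len(contributing)} words overlap in the sample at t = {times[SAMPLE]:.1f} s")
```

Under these assumptions the response to one word peaks roughly 5 seconds after it is heard, and a single sample taken at t = 6 s still carries a contribution from all ten words, so each image mixes many words together.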

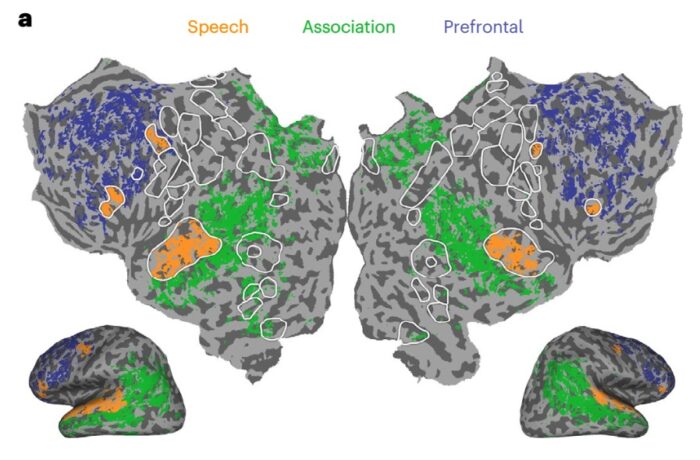

Most fMRI research has been about determining which part of the brain is active during certain activities, in order to figure out how the brain is wired. This current research takes the analysis to a new level, trying to determine the meaning of that brain activity, not just where it is located. Between the delay and the noise, it just has not been possible to do this reliably. But now we have generative AI and large language models, which are good at finding patterns in noise and generating cogent language from those patterns. Seems like a perfect fit.

The researchers needed subjects to go through a long period of training; training on lots of data is one of the things that makes large language models so powerful. Each subject underwent about 16 hours of training, which means they were lying in an fMRI scanner listening to podcasts so the AI could train on their brain activity and match it to the words they were hearing. After the training period, the subjects listened to new audio that the AI had not encountered, and the AI had to reconstruct what they were listening to just from their fMRI activity. They were also asked to just imagine a story. And finally they were shown silent videos, on the hypothesis that they would have a running verbal commentary in their head as they watched.
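The published paper describes the decoder as, roughly, guess and check: an encoding model fitted on the training scans predicts the brain response a candidate word sequence should evoke, the language model proposes candidates, and the candidates whose predictions best match the recorded response are kept. The toy below sketches only that scoring idea, under heavy simplifying assumptions: the vocabulary, the 3-dimensional `FEATURES`, the 4-voxel `TRUE_W` "brain", and the noise-free data are all hypothetical; per-voxel ridge regression is an assumed choice of encoding model; candidates come from a fixed list rather than a language model; and there is no hemodynamic delay.

```python
import math

# Hypothetical 3-dimensional "semantic features" for a toy vocabulary.
# (The real study derived features from a language model; these are made up.)
FEATURES = {
    "window": [1.0, 0.0, 0.2],
    "glass":  [0.9, 0.1, 0.0],
    "dark":   [0.1, 1.0, 0.0],
    "scream": [0.0, 0.2, 1.0],
    "cry":    [0.1, 0.1, 0.9],
}


def features(words):
    """Average the word vectors: a crude stand-in for a sentence embedding."""
    vecs = [FEATURES[w] for w in words]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def predict(weights, feats):
    """Linear encoding model: each voxel's response is a weighted sum of features."""
    return [sum(w * f for w, f in zip(row, feats)) for row in weights]


def solve(a, b):
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_ridge(X, Y, lam=0.01):
    """Fit one ridge regression per voxel: w = (X^T X + lam*I)^-1 X^T y."""
    d = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) + (lam if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    weights = []
    for v in range(len(Y[0])):
        xty = [sum(x[i] * y[v] for x, y in zip(X, Y)) for i in range(d)]
        weights.append(solve(xtx, xty))
    return weights


def correlation(a, b):
    """Pearson correlation between two equal-length response vectors."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    norm = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return cov / norm if norm else 0.0


def decode(weights, observed, candidates):
    """Guess and check: keep the candidate whose predicted response best matches."""
    return max(candidates, key=lambda c: correlation(predict(weights, features(c)), observed))


# Hypothetical "true" brain of one subject: 4 voxels x 3 features.
TRUE_W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.5, -0.5, 0.3]]

# "Training session": known stimuli and the simulated (noise-free) responses they evoke.
TRAIN = [["window"], ["dark"], ["scream"], ["glass", "dark"], ["cry", "window"]]
X = [features(s) for s in TRAIN]
Y = [predict(TRUE_W, x) for x in X]
fitted = fit_ridge(X, Y)

# "Test session": a new response, and candidate word sequences to check against it.
observed = predict(TRUE_W, features(["scream", "cry"]))
CANDIDATES = [["window", "glass"], ["dark", "window"], ["scream", "cry"]]
best = decode(fitted, observed, CANDIDATES)
print(best)
```

Here `best` comes out as `['scream', 'cry']`, because only that candidate's predicted response lines up with the recorded one. Real recordings are noisy and delayed and the candidate space is open-ended, which makes the actual problem far harder than this toy.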

How did the AI do in reconstructing their language? Pretty good. Here are some examples:

Actual stimulus: i got up from the air mattress and pressed my face against the glass of the bedroom window expecting to see eyes staring back at me but instead finding only darkness

Decoded stimulus: i just continued to walk up to the window and open the glass i stood on my toes and peered out i didn’t see anything and looked up again i saw nothing

Actual stimulus: i didn’t know whether to scream cry or run away instead i said leave me alone i don’t need your help adam disappeared and i cleaned up alone crying

Decoded stimulus: started to scream and cry and then she just said i told you to leave me alone you can’t hurt me anymore i’m sorry and then he stormed off i thought he had left i started to cry

The system is good at getting the gist right; some of the phrases are exact, but there are also lots of errors. Still, this is impressive for something derived from brain activity alone. There is every reason to suspect that it can get much better. This was with GPT-1. I wonder how well GPT-4 would do? Or what if a large language model were tweaked for this specific task? Also, while 16 hours of training is a lot, what would happen after weeks or months of training? I wonder what the ultimate limit of this approach is.
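The paper reportedly scored decoded text against the actual stimulus with standard language-similarity metrics, word error rate (WER) among them. The sketch below computes WER, plus a crude order-free shared-word check (an illustrative addition, not from the study, with an arbitrary hypothetical stopword list), on the first example pair above. It is a way to see the "gist versus exact" distinction in numbers, not a reproduction of the study's scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    prev = list(range(len(hyp) + 1))        # distances for the empty reference prefix
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(
                prev[j] + 1,                 # delete a reference word
                cur[j - 1] + 1,              # insert a hypothesis word
                prev[j - 1] + (r != h),      # substitute, or match at no cost
            )
        prev = cur
    return prev[-1] / len(ref)


# Arbitrary, hypothetical stopword list for a crude "gist" check.
STOPWORDS = {"i", "the", "and", "to", "my", "a", "of", "at", "me", "up"}


def shared_content_words(reference: str, hypothesis: str) -> set[str]:
    """Words (minus stopwords) that appear anywhere in both texts, ignoring order."""
    return (set(reference.split()) & set(hypothesis.split())) - STOPWORDS


actual = ("i got up from the air mattress and pressed my face against the glass of "
          "the bedroom window expecting to see eyes staring back at me but instead "
          "finding only darkness")
decoded = ("i just continued to walk up to the window and open the glass i stood on "
           "my toes and peered out i didn’t see anything and looked up again i saw nothing")

print(f"WER: {word_error_rate(actual, decoded):.2f}")
print("shared content words:", sorted(shared_content_words(actual, decoded)))
```

Strict word-by-word alignment penalizes paraphrase heavily, so WER comes out well above 0.5 here, even though "glass", "see", and "window" survive in the decoded text.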

There are two other outcomes worth pointing out. When the subjects were asked to try to fool the AI, they were easily able to do it. Throwing in random thoughts pretty much broke the algorithm. Also, when they used a model trained on one individual to test a separate individual, the outcome was gibberish. At this level, brain activity is unique to each individual.

What does all this mean, and what are the research questions going forward? Of course, mainstream reporting had to follow its typical algorithm: what practical application might this have? They all spoke of using this to allow people who are paralyzed to speak. Theoretically, sure, it means such a system is possible. But this approach will not be practical, because you have to be in an fMRI scanner for it to work. For now, this is a research tool. We can just let it be that.

Researchers can, however, also apply this same approach to other methods of reading brain activity, such as EEG or PET scanning. I do wonder how good it can get with scalp EEG leads, which are non-invasive. Because the skull attenuates and blurs EEG signals, this approach will be inherently limited. It will definitely be better with leads under the skull. Perhaps stentrodes placed inside veins within the skull will be enough.

The idea of using this technology to violate the private thoughts of an unwilling subject was also raised. The authors point out, however, that training and using the system requires cooperation from the subject. So the system will not work on you unless you have cooperated through hours of training. Even then, you can easily thwart the system if you don’t cooperate: just think of a blue jay, or whatever. You don’t even have to do it consistently, just enough to throw some noise into the system.

As an aside, since I just wrote about ESP yesterday, it seems to me this has implications for psi research, specifically mind-reading. This study shows that, at the level of detail that translates into coherent speech, the activity of individual brains is mutually unintelligible. So if a mind reader were reading the activity in someone else’s brain, presumably using their own brain as the interpreter, the result would be gibberish. I have little doubt that ESP believers can come up with some hand-waving, made-up explanation around this (ESP doesn’t work that way, whatever that means), but it seems like a pretty solid concern to me.

Meanwhile, real science is way cooler than fake paranormal “science”. We are actually on a path to decoding human brain activity. This is going to be a long road, but this study is an important proof of concept. It also shows we are only scratching the surface in terms of AI-aided research.