Feb 18 2019

Warning About Big Data in Science

At the AAAS meeting this past weekend Dr. Genevera Allen from Rice University in Houston presented her findings regarding the impact of using machine learning algorithms to evaluate scientific data. She argues that this practice is contributing to the reproducibility problem.

The core problem, which I have discussed many times before, is that if scientists do not use sufficiently rigorous methods, they will find erroneous patterns in their data that are not real. Sometimes this amounts to p-hacking, which results from methods that may seem innocent but tweak the statistical results in order to manufacture significance. This could be something as innocuous as analyzing the data as you go and then stopping the study when you reach statistical significance. Or, similarly, if you initially plan on testing 100 subjects, and the results are not quite significant, you may decide to enroll another 20 subjects in the hopes that you will “cross the finish line.”
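To make the "analyze as you go" problem concrete, here is a minimal simulation sketch in Python. It is an illustration only, not anything from Allen's presentation, and it rests on simplifying assumptions: the data are pure noise (no real effect), the standard deviation is known to be 1, the test is a plain two-sided z-test at the 5% level, and the hypothetical researcher checks after every 10 subjects up to 100. The function names and all the numbers are made up for the example.

```python
import math
import random

def z_significant(samples, crit=1.96):
    """Two-sided z-test that the mean is 0, assuming a known standard deviation of 1."""
    z = sum(samples) / math.sqrt(len(samples))
    return abs(z) > crit

def false_positive_rates(n_trials=2000, n_max=100, look_every=10, seed=0):
    """Compare testing once at n_max with testing repeatedly and stopping at the first 'hit'."""
    rng = random.Random(seed)
    fixed_hits = 0
    peeking_hits = 0
    for _ in range(n_trials):
        # Pure noise: there is no real effect to find in any of these studies.
        data = [rng.gauss(0.0, 1.0) for _ in range(n_max)]
        # Honest design: one test, at the planned sample size.
        if z_significant(data):
            fixed_hits += 1
        # Peeking design: test after every `look_every` subjects, stop if significant.
        looks = range(look_every, n_max + 1, look_every)
        if any(z_significant(data[:n]) for n in looks):
            peeking_hits += 1
    return fixed_hits / n_trials, peeking_hits / n_trials

fixed_rate, peeking_rate = false_positive_rates()
print(f"false positives, single planned test: {fixed_rate:.3f}")
print(f"false positives, stop when significant: {peeking_rate:.3f}")
```

Under these assumptions the single planned test should come out near the nominal 5% false positive rate, while the stop-when-significant strategy comes out well above it, even though every individual test looks perfectly ordinary.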

Here is another issue that is similar to what Allen is warning about. Let’s say a doctor notices an apparent pattern – my patients with disease X all seem to have red hair. So they review their patient records, and find that indeed there is an increased probability of having red hair if you have disease X. That all seems perfectly cromulent – but it isn’t. At best, such a correlation is preliminary and needs to be verified.

The reason for this is that the physician may have just noticed a random correlation in their patient population, a statistical fluke. Every data set, such as a patient population, will have many such spurious correlations by chance alone. If you notice such a random correlation, that doesn’t make it a real phenomenon, even if you then count the numbers and do the math. You haven’t tested the hypothesis that the correlation is real, you just confirmed an observation of a chance clumpiness in the data.

In order to test the hypothesis you need to look at a fresh set of data, and (this is critical) one that does not include the original observations – otherwise you are just carrying the random clumpiness forward.
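The red hair scenario can be sketched as a small simulation. This is a hedged illustration under assumptions I am choosing for the example, not real patient data: 100 patients with the disease and 100 without, 100 recorded traits that each occur in 30% of people and have no connection to the disease, and a "doctor" who notices whichever trait shows the biggest gap. All names and numbers are hypothetical.

```python
import random

def trait_gap(rng, n_per_group, prevalence):
    """Trait frequency in the disease group minus the healthy group, for an unrelated trait."""
    sick = sum(rng.random() < prevalence for _ in range(n_per_group))
    healthy = sum(rng.random() < prevalence for _ in range(n_per_group))
    return (sick - healthy) / n_per_group

def discover_then_replicate(rng, n_traits=100, n_per_group=100, prevalence=0.3):
    # Discovery: scan many unrelated traits and notice the most striking gap.
    gaps = [trait_gap(rng, n_per_group, prevalence) for _ in range(n_traits)]
    best = max(gaps, key=abs)
    # Replication: measure that same trait in fresh patients only.
    fresh = trait_gap(rng, n_per_group, prevalence)
    # Score the fresh gap in the direction of the original observation.
    direction = 1 if best > 0 else -1
    return abs(best), direction * fresh

rng = random.Random(1)
results = [discover_then_replicate(rng) for _ in range(30)]
mean_discovered = sum(d for d, _ in results) / len(results)
mean_replicated = sum(r for _, r in results) / len(results)
print(f"average gap in the records where it was noticed: {mean_discovered:.3f}")
print(f"average gap for the same trait in fresh data: {mean_replicated:.3f}")
```

Because none of the traits is actually linked to the disease, the gap that looked impressive in the original records should shrink toward zero in the fresh patients. Counting the original patients again would simply hand back the same chance clump.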

You might argue that publishing preliminary data is fine – put it out there and let the research community test the hypothesis with fresh data. That is a perfectly legitimate approach. The downside, however, is that the published literature is getting flooded with these preliminary (and ultimately spurious) correlations, and there isn’t adequate follow up. Often there is, of course, and that is how our knowledge advances, but some argue we are not getting the balance correct.

Making the problem worse is that the reporting of these preliminary findings often does not put them into perspective. So the public is being fed all the spurious correlations without being properly told where the data is in the course of normal science. The end result is far too much noise and too little signal, and this is being dumped on the public with too little explanation.

Increasingly, researchers like Allen are arguing that scientists should be doing internal replications before publishing. Don’t waste everyone’s time publishing a random correlation without first checking it yourself. This takes more time, and means fewer publications, but each publication will be worth much more. Unfortunately the academic world has created perverse incentives to publish lots of papers, rather than fewer, more developed ones.

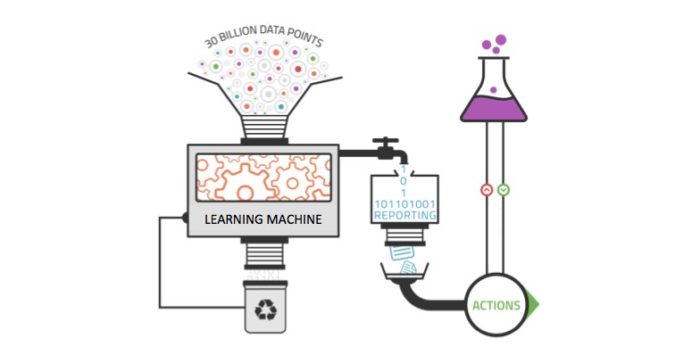

Allen is adding a new wrinkle to this discussion. She argues that machine learning algorithms are being used to pull apparent statistical signals out of large data sets. This did not create the problem of reporting spurious correlations, but it has exacerbated it tremendously. It is now easier to find these statistical flukes in big data. Her analysis finds that this is increasing the false positive rate of finding apparent correlations – you can look at essentially any big data set and the computer will spit out all kinds of correlations. But, she argues, the vast majority of these will not replicate. Again – that is because if the correlation is a random statistical fluke, there is no reason for it to exist in a fresh set of data. Only if it is a real phenomenon should it replicate.
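The "computer will spit out all kinds of correlations" point comes down to arithmetic: the more variables you have, the more pairs there are to compare, and about 5% of unrelated pairs will pass a 5% significance test by chance. The sketch below illustrates this with pure random noise. It is not Allen's analysis or any particular machine learning method. It assumes 50 rows of independent Gaussian noise per variable and uses the Fisher z approximation as the significance test, and the function names are hypothetical.

```python
import itertools
import math
import random

def standardize(column):
    """Center a column and scale it to unit length, so a dot product gives Pearson's r."""
    mean = sum(column) / len(column)
    centered = [x - mean for x in column]
    norm = math.sqrt(sum(x * x for x in centered))
    return [x / norm for x in centered]

def count_chance_hits(n_variables, n_rows=50, seed=0):
    """Count 'significant' pairwise correlations among columns of pure noise."""
    rng = random.Random(seed)
    columns = [
        standardize([rng.gauss(0.0, 1.0) for _ in range(n_rows)])
        for _ in range(n_variables)
    ]
    hits = 0
    for a, b in itertools.combinations(columns, 2):
        r = sum(x * y for x, y in zip(a, b))
        # Fisher z approximation to a two-sided test of r = 0 at the 5% level.
        if abs(math.atanh(r)) * math.sqrt(n_rows - 3) > 1.96:
            hits += 1
    n_pairs = n_variables * (n_variables - 1) // 2
    return hits, n_pairs

small_hits, small_pairs = count_chance_hits(10)
large_hits, large_pairs = count_chance_hits(40)
print(f"10 variables: {small_hits} 'significant' correlations out of {small_pairs} pairs")
print(f"40 variables: {large_hits} 'significant' correlations out of {large_pairs} pairs")
```

Going from 10 to 40 variables raises the number of pairs from 45 to 780, so the count of chance "findings" should grow roughly in proportion, and none of them would be expected to hold up in a fresh set of data.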

So essentially our technology has resulted in a lowering of the bar for finding apparent correlations, so we can detect much more noise. This results in – publishing more noise. In order to compensate for this effect, we need to build in more internal checks, to reduce the noise and sift out only the legitimate signals. Otherwise researchers waste a lot of time and resources chasing down the noise, effects that are not real.

All this makes sense, and Allen is not the first researcher who does this kind of metaresearch to call for more internal replications prior to publication. Treat a correlation as a hypothesis, then do a proper study to test the hypothesis with fresh data, and publish those results, not the initial apparent correlation.

I like the analogy of looking at the institution of science like a machine that uses combustion. If you don’t get the mix of air and fuel right, the combustion will be inefficient and produce more pollution. We definitely need to tweak the mix of the science combustion to improve efficiency and reduce the pollution of preliminary, and ultimately wrong, correlations being published.

In medicine this is especially important, because ultimately wrong correlations can have an impact on the practice of medicine (they shouldn’t, but they do). Further, patients are increasingly doing their own “research” and treating themselves. This means reading stuff online, most of which is preliminary and not reproducible. We are in an era of false-positive medicine. We know the fixes, we just have to implement them.