May

03

2024

If all goes well, Boeing’s Starliner capsule will launch on Monday, May 6th, with two crew members aboard, Butch Wilmore and Suni Williams, who will spend a week aboard the ISS. This is (hopefully) the last test of the new capsule, and if successful it will officially enter service. This will give NASA two commercial capsules, the other being the SpaceX Dragon, on which it can purchase seats for its astronauts.

If successful, this will fulfill NASA’s Commercial Crew Program (CCP). Rather than building its own next-generation space capsule, NASA decided to contract with commercial companies. After an initial evaluation phase it chose two companies, Boeing and SpaceX, to get the contracts. Initially the two projects were neck and neck, but to its credit SpaceX was able to complete development first, with its Dragon 2 capsule going into service in 2020. Boeing now hopes to be the second commercial company to have a NASA-approved crewed capsule.

There is obviously a lot riding on this final test flight on Monday, given the recent difficulties that Boeing has had. Its hard-earned reputation for aerospace excellence has been significantly tarnished by recent failures of its jetliners which seem to have been due to systemic problems with quality control within Boeing, and a corporate culture that no longer seems dedicated to quality and safety first. Let’s hope the space capsule division does not have the same issues.

Continue Reading »

Apr

29

2024

Digital life is getting more dangerous. Literally every day I have to fend off attempts at scamming me in one way or another. I get texts trying to lure me into responding. I get e-mails hoping I will click a malicious link on a reflex. I get phone calls from people warning me that I am being scammed, when in fact they are just trying to scam me. I even get snail mail trying to con me into sending in sensitive information. My social media feeds are also full of fake news and ads. Some of this is just the evolution of online scamming, but there has also been an uptick due to artificial intelligence (AI). It’s now easier for scammers to create lots of fake content, and flood our digital space with traps and lures.

Here is just one example – have you heard yet of “cloaking”? Facebook uses algorithms to filter out fake or malicious ads on their site. But scammers have quickly figured out how to bypass the filters. They use AI-generated fake news articles with links to more information. Those links go to malicious pages that will try to get money from you. Facebook will block ads that direct to malicious webpages. So the scammers “cloak” their behavior by linking to a benign page first. Once they get approval from Facebook, they then add a redirect from the benign page to their malicious scamming page.

Facebook says it will remove such pages when they are brought to their attention, but this is not adequate. The scammers only need to be up and running for a short time. Once Facebook catches up to them, they just create new fake ads directing to their malicious page. AI makes it easy to create lots of fake content for this purpose. Facebook also says that now that they are aware of this phenomenon they will try to account for it, to filter out such cloaking pages from the start. That is much better, but again we are just in a digital arms race. The scammers will find some other workaround and exploit that as long as they can.
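To make the timing problem concrete, here is a minimal sketch of why a one-time review misses cloaking while a later re-check catches it. Everything in it is hypothetical: the URLs, the in-memory `redirects` table standing in for the web, and the function names are illustrative assumptions, not a description of how Facebook's filters actually work.

```python
# Hypothetical set of known-bad destinations (illustrative only).
MALICIOUS = {"https://scam.example/pay"}


def final_destination(url, redirects, max_hops=10):
    """Follow redirects in an in-memory table until the chain ends,
    loops back on itself, or max_hops is reached."""
    seen = set()
    for _ in range(max_hops):
        if url in seen or url not in redirects:
            return url
        seen.add(url)
        url = redirects[url]
    return url


def ad_is_safe(landing_url, redirects):
    """An ad passes if its landing page does not end up at a known-bad page."""
    return final_destination(landing_url, redirects) not in MALICIOUS


# At review time the landing page is benign and has no redirect.
redirects = {}
landing = "https://benign.example/article"
approved_at_review = ad_is_safe(landing, redirects)

# After approval, the scammer adds a redirect to the malicious page.
redirects[landing] = "https://scam.example/pay"
still_safe_later = ad_is_safe(landing, redirects)

print("passed initial review:", approved_at_review)
print("passes a later re-check:", still_safe_later)
```

The point of the sketch is only that the verdict depends on when the check runs, which is why removing pages after a report leaves a window for the scam to operate.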

Continue Reading »

Apr

19

2024

Boston Dynamics (now owned by Hyundai) has revealed its electric version of its Atlas robot. These robot videos always look impressive, but at the very least we know that we are seeing the best take. We don’t know how many times the robot failed to get the one great video. There are also concerns about companies presenting what the full working prototype might look like, rather than what it actually currently does. The state of CGI is such that it’s possible to fake robot videos that are indistinguishable to the viewer from real ones.

So it’s understandable that these robot reveal videos are always looked at with a bit of skepticism. But it does seem that pushback has an effect, and there is pressure on robotics companies to be more transparent. The video of the new Atlas robot does seem to be real, at least. Also, these are products for the real world. At some point the latest version of Atlas will be operating on factory floors, and if it didn’t work Boston Dynamics would not be sustainable as a company.

What we are now seeing, not just with Atlas but also Tesla’s Optimus Gen 2, and others, is conversion to all-electric robots. This makes them smaller, lighter, and quieter than the previous hydraulic versions. They are also not tethered to cables as previous versions were.

My first question was – what is the battery life? Boston Dynamics says they are “targeting” a four hour battery life for the commercial version of the Atlas. I love that corporate speak. I could not find a more direct answer in the time I had to research this piece. But four hours seems reasonable – the prior version from 2015 had about a 90 minute battery life depending on use. Apparently the new Atlas can swap out its own battery.

Continue Reading »

Apr

15

2024

Generative AI applications seem to be on the steep part of the development curve – not only is the technology getting better, but people are finding more and more uses for it. It’s a new powerful tool with broad applicability, and so there are countless startups and researchers exploring its potential. The last time, I think, a new technology had this type of explosion was the smartphone and the rapid introduction of millions of apps.

Generative AI applications have been created to generate text, pictures, video, songs, and imitate specific voices. I have been using most of these apps extensively, and they are continually improving. Now we can add another application to the list – generating virtual environments. This is not a public use app, but was developed by engineers for a specific purpose – to train robots.

The application is called Holodeck, after the Star Trek holodeck. You can use natural language to direct the application to build a specific type of virtual 3D space, such as “build me a three bedroom single floor apartment” or “build me a music studio”. The application uses generative AI technology to then build the space, with walls, floor, and ceiling, and then pull from a database of objects to fill the space with appropriate things. It also has a set of rules for where things go, so it doesn’t put a couch on the ceiling.

The purpose of the app is to be able to generate lots of realistic and complex environments in which to train robot navigation AI. Such robotic AIs need to be trained on virtual spaces so they can learn how to navigate out there in the real world. Like any AI training, the more data the better. This means the trainers need millions of virtual environments, and they just don’t exist. In an initial test, Holodeck was compared to an earlier application called ProcTHOR and performed significantly better. For example, when asked to find a piano in a music studio a ProcTHOR-trained robot succeeded 6% of the time while a Holodeck-trained robot succeeded 30% of the time.

Continue Reading »

Apr

11

2024

Over the weekend when I was in Dallas for the eclipse, I ran into a local businessman who works in the energy sector, mainly involved in new solar projects. This is not surprising as Texas is second only to California in solar installation. I asked him if he is experiencing a backlog in connections to the grid and his reaction was immediate – a huge backlog. This aligns with official reports – there is a huge backlog and it’s growing.

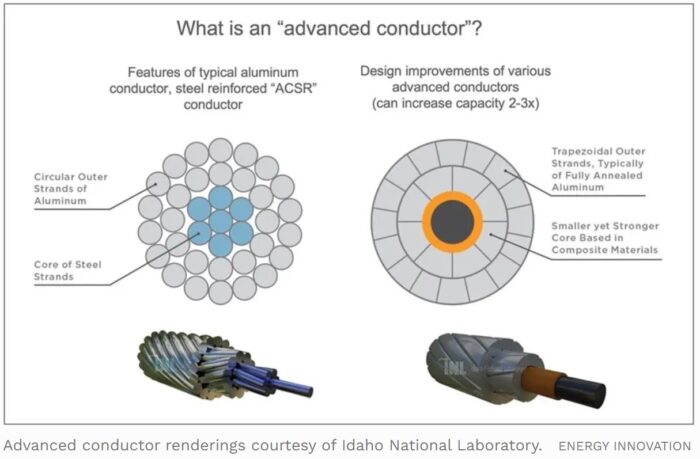

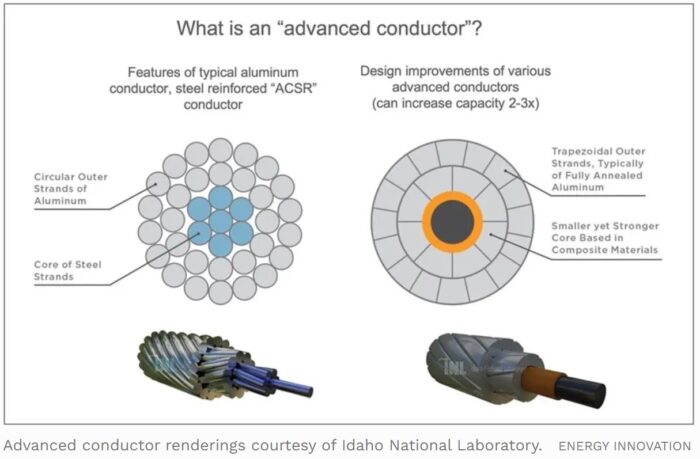

In fact, the various electrical grids may be the primary limiting factor in transitioning to greener energy sources. As I wrote recently, energy demand is increasing, faster than previously projected. Our grid infrastructure is aging, and mainly uses 100 year old technology. There are also a number of regulatory hurdles to expanding and upgrading the grid. There is good news in this story, however. We have at our disposal the technology to virtually double the capacity of our existing grid, while reducing the risk of sparking fires and weather-induced power outages. This can be done cheaper and faster than building new power lines.

The process is called reconductoring, which just means replacing existing power lines with more advanced power lines. I have to say, I falsely assumed that all this talk about upgrading the electrical grid included replacing existing power lines and other infrastructure with more advanced technology, but it really doesn’t. It is mainly about building new grid extensions to accommodate new energy sources and demand. Every resource I have read, including this Forbes article, gives the same primary reason why this is the case. Utility companies make more money from expensive expansion projects, for which they can charge their customers. Cheaper reconductoring projects make them less money.

Continue Reading »

Apr

02

2024

On a recent SGU live streaming discussion someone in the chat asked – aren’t frivolous AI applications just toys without any useful output? The question was meant to downplay recent advances in generative AI. I pointed out that the question is a bit circular – aren’t frivolous applications frivolous? But what about the non-frivolous applications?

Recent generative AI applications are a powerful tool. They leverage the power and scale of current data centers with the massive training data provided by the internet, using large language model AI tools that are able to find patterns and generate new (although highly derivative) content. Most people are likely familiar with this tech through applications like ChatGPT, which uses this AI process to generate natural-language responses to open ended “prompts”. The result is a really good chat bot, but also a useful interface for searching the web for information.

This same technology can generate output other than text. It can generate images, video, and music. The results are technically impressive (if far from perfect), but in my experience not genuinely creative. I think these are the fun applications the questioner was referring to.

But there are many serious applications of this technology in development as well. An app like ChatGPT can make an excellent expert system, searching through tons of data to produce useful information. This can have many practical applications, from generating lists of potential diagnoses for doctors to consider, to writing first-draft legal contracts. There are still kinks to be worked out, but the potential is clearly amazing.

Continue Reading »

Mar

26

2024

In 1974 Robert Nozick published the book, Anarchy, State, and Utopia, in which he posed the following thought experiment: If you could be plugged into an “experience machine” (what we would likely call today a virtual reality or “Matrix”) that could perfectly replicate real-life experiences, but was 100% fake, would you do it? The question was whether you would do this irreversibly for the rest of your life. What if, in this virtual reality, you could live an amazing life – perfect health and fitness, wealth and resources, and unlimited opportunity for adventure and fun?

Nozick hypothesized that people generally would not elect to do this (as summarized in a recent BBC article). He gave three reasons – we want to actually do certain things, and not just have the experience of doing them, we want to be a certain kind of person and that can only happen in reality, and we want meaning and purpose in our lives, which is only possible in reality.

A lot has happened in the last 50 years and it is interesting to revisit Nozick’s thought experiment. I would say I basically disagree with Nozick, but there is a lot of nuance that needs to be explored. For me there are two critical variables, only one of which I believe was explicitly addressed by Nozick. In his thought experiment, once you go into the experience machine you have no memory of doing so, therefore you would believe the virtual reality to be real. I would not want to do this. So in that sense I agree with him – but he did not give this as a major reason people would reject the choice. I would be much more likely to go into a virtual reality if I retained knowledge of the real world and that I was in a virtual world.

Continue Reading »

Mar

21

2024

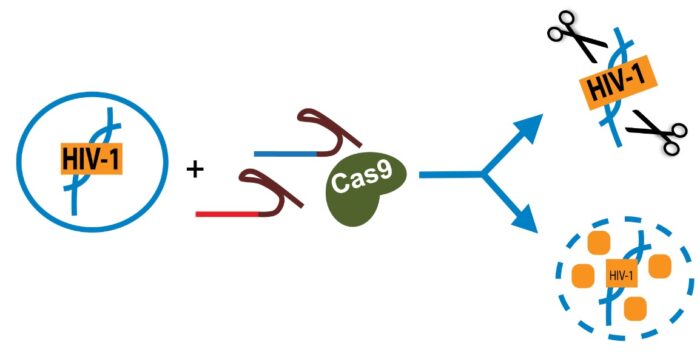

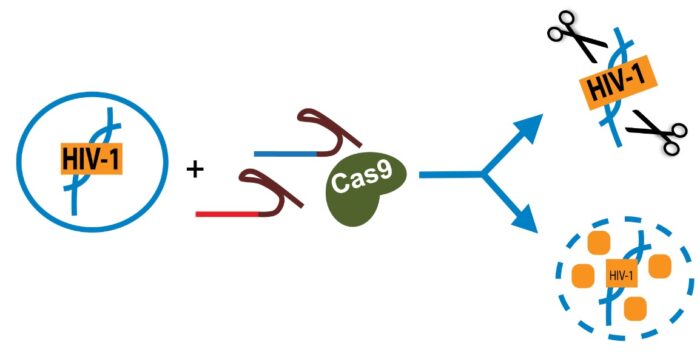

CRISPR has been big scientific news since it was introduced in 2012. The science actually goes back to 1987, but the CRISPR/Cas9 system was patented in 2012, and the developers won the Nobel Prize in Chemistry in 2020. The system gives researchers the ability to quickly and cheaply make changes to DNA, by seeking out and matching a desired sequence and then making a cut in the DNA at that location. This can be done to inactivate a specific gene or, using the cell’s own repair machinery, to insert a gene at that location. This is a massive boon to genetics research but is also a powerful tool of genetic engineering.

There is also the potential for CRISPR to be used as a direct therapy in medicine. In 2023 the first regulatory approval for CRISPR as a treatment for a disease was given to treatments for sickle cell disease and thalassemia. These diseases were targeted for a technical reason – you can take bone marrow out of a patient, use CRISPR to alter the genes for hemoglobin, and then put it back in. What’s really tricky about using CRISPR as a medical treatment is not necessarily the genetic change itself, but getting the CRISPR to the correct cells in the body. This requires a vector, and is the most challenging part of using CRISPR as a medical intervention. But if you can bring the cells to the CRISPR that eliminates the problem.

Continue Reading »

Mar

18

2024

For the last two decades electricity demand in the US has been fairly flat. While it has been increasing overall, the increase has been very low. This has been largely attributed to the fact that as the use of electrical devices has increased, the efficiency of those devices has also increased. The introduction of LED bulbs, increased building insulation, and more energy-efficient appliances have largely offset increased demand. However, the most recent reports show that US energy demand is turning up, and there is real fear that this recent spike is not a short-term anomaly but the beginning of a long-term trend. For example, the projection of increase in energy demand by 2028 has nearly doubled from the 2022 estimate to the 2023 estimate – “from 2.6% to 4.7% growth over the next five years.”

First, I have to state my usual skeptical caveat – these are projections, and we have to be wary of projecting short term trends indefinitely into the future. The numbers look like a blip on the graph, and it seems weird to take that blip and extrapolate it out. But these forecasts are not just based on looking at such graphs and then extending the line of current trends. These are based on an industry analysis which includes projects that are already under way. So there is some meat behind these forecasts.
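As a quick check on the “nearly doubled” figure quoted above, the arithmetic can be sketched in a few lines. This assumes the 2.6% and 4.7% numbers are cumulative growth over five years, as the quote suggests; the function name is just for illustration.

```python
def annualized_rate(total_growth, years):
    """Convert cumulative growth over a period into an equivalent
    compound annual growth rate."""
    return (1 + total_growth) ** (1 / years) - 1


# Figures from the quoted forecast, read as cumulative five-year growth.
old_total = 0.026  # 2022 estimate
new_total = 0.047  # 2023 estimate
years = 5

ratio = new_total / old_total
old_annual = annualized_rate(old_total, years)
new_annual = annualized_rate(new_total, years)

print(f"new forecast is {ratio:.2f}x the old one")
print(f"old forecast: about {old_annual:.2%} per year")
print(f"new forecast: about {new_annual:.2%} per year")
```

Both annual rates are still under 1%, which fits the caveat that the change looks like a blip on a graph; the concern is the direction and the doubling, not the absolute size.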

What are the factors that seem to be driving this current and projected increase in electricity demand? They are all the obvious ones you might think. First, something which I and other technology-watchers predicted, is the increase in the use of electric vehicles. In the US there are more than 2.4 million registered electric vehicles. While this is only about 1% of the US fleet, EVs represent about 9% of new car sales, and growing. If we are successful in somewhat rapidly (it will still take 20-30 years) changing our fleet of cars from gasoline engine to electric or hybrid, that represents a lot of demand on the electricity grid. Some have argued that EV charging is mostly at night (off peak), so this will not necessarily require increased electricity production capacity, but that is only partly true. Many people will still need to charge up on the road, or will charge up at work during the day, for example. It’s hard to avoid the fact that EVs represent a potential massive increase in electricity demand. We need to factor this in when planning future electricity production.
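A rough back-of-the-envelope sketch shows the scale involved. The 2.4 million vehicles and the 1% fleet share come from the text; the annual mileage and energy use per mile are round-number assumptions chosen only for illustration, so the outputs are order-of-magnitude estimates, not forecasts.

```python
# From the text above.
REGISTERED_EVS = 2_400_000
EV_SHARE_OF_FLEET = 0.01

# Assumptions for illustration only (not from the text).
MILES_PER_YEAR = 12_000
KWH_PER_MILE = 0.3

KWH_PER_TWH = 1e9


def annual_twh(vehicles):
    """Estimated yearly electricity use, in terawatt-hours, for a given
    number of EVs under the assumptions above."""
    return vehicles * MILES_PER_YEAR * KWH_PER_MILE / KWH_PER_TWH


# Fleet size implied by "2.4 million is about 1%".
implied_fleet = REGISTERED_EVS / EV_SHARE_OF_FLEET

print(f"implied fleet: about {implied_fleet / 1e6:.0f} million vehicles")
print(f"current EVs: about {annual_twh(REGISTERED_EVS):.1f} TWh per year")
print(f"fully electric fleet: about {annual_twh(implied_fleet):.0f} TWh per year")
```

Under these assumptions the demand scales linearly with the number of vehicles, so going from 1% of the fleet to all of it multiplies the charging load by roughly a hundred.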

Another factor is data centers. The world’s demand for computer cycles is increasing, and there are already plans for many new data centers, which are a lot faster to build than the plants to power them. Recent advances in AI only increase this demand. Again we may mitigate this somewhat by prioritizing computer advances that make computers more energy efficient, but this will only be a partial offset. We do also have to think about applications, and if they are worth it. The one that gets the most attention is crypto – by one estimate Bitcoin mining alone used 121 terawatt-hours of electricity in 2023, the same as the Netherlands (with a population of 17 million people).
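To put the Bitcoin comparison in per-person terms, the two figures above can simply be divided. This sketch uses only the numbers quoted in the text and takes the estimate at face value.

```python
# Figures quoted in the text.
BITCOIN_TWH_2023 = 121
NETHERLANDS_POPULATION = 17_000_000

KWH_PER_TWH = 1e9

# Electricity use per Dutch resident if national use equals the Bitcoin estimate.
kwh_per_person = BITCOIN_TWH_2023 * KWH_PER_TWH / NETHERLANDS_POPULATION

print(f"about {kwh_per_person:,.0f} kWh per person per year")
```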

Continue Reading »

Mar

11

2024

When thinking about potential future technology, one way to divide possible future tech is into probable and speculative. Probable future technology involves extrapolating existing technology into the future, such as imagining what advanced computers might be like. This category also includes technology that we know is possible, we just haven’t mastered it yet, like fusion power. For these technologies the question is more when than if.

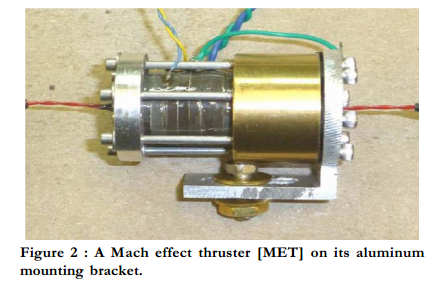

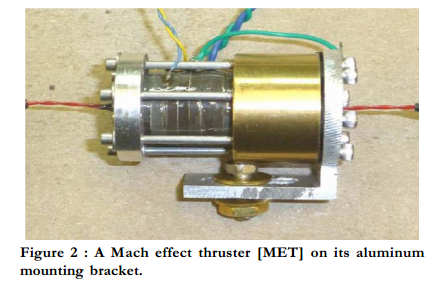

Speculative technology, however, may or may not even be possible within the laws of physics. Such technology is usually highly disruptive, seems magical in nature, but would be incredibly useful if it existed. Common technologies in this group include faster than light travel or communication, time travel, zero-point energy, cold fusion, anti-gravity, and propellantless thrust. I tend to think of these as science fiction technologies, not just speculative. The big question for these phenomena is how confident are we that they are impossible within the laws of physics. They would all be awesome if they existed (well, maybe not time travel – that one is tricky), but I am not holding my breath for any of them. If I had to bet, I would say none of these exist.

That last one, propellantless thrust, does not usually get as much attention as the other items on the list. The technology is rarely discussed explicitly in science fiction, but often it is portrayed and just taken for granted. Star Trek’s “impulse drive”, for example, seems to lack any propellant. Any ship that zips into orbit like the Millennium Falcon likely is also using some combination of anti-gravity and propellantless thrust. It certainly doesn’t have large fuel tanks or display any exhaust similar to a modern rocket.

In recent years NASA has tested two speculative technologies that claim to be able to produce thrust without propellant – the EM drive and the Mach Effect thruster (MET). For some reason the EM drive received more media attention (including from me), but the MET was actually the more interesting claim. All existing forms of internal thrust involve throwing something out the back end of the ship. The conservation of momentum means that there will be an equal and opposite reaction, and the ship will be thrust in the opposite direction. This is your basic rocket. We can get more efficient by accelerating the propellant to higher and higher velocity, so that you get maximal thrust from each atom of propellant your ship carries, but there is no escape from the basic physics. Ion drives are perhaps the most efficient thrusters we have, because they accelerate charged particles to very high speeds, but they produce very little thrust. So they are good for moving ships around in space but cannot get a ship off the surface of the Earth.
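The relationship between exhaust velocity and how much a given load of propellant can do is usually expressed with the Tsiolkovsky rocket equation, which the text does not name but which follows from the conservation of momentum described above. Here is a minimal sketch; the exhaust velocities and masses are round illustrative assumptions, not figures for any particular engine or spacecraft.

```python
import math


def delta_v(exhaust_velocity_m_s, wet_mass_kg, dry_mass_kg):
    """Ideal change in velocity from the Tsiolkovsky rocket equation:
    delta_v = v_exhaust * ln(wet_mass / dry_mass)."""
    if dry_mass_kg <= 0 or wet_mass_kg < dry_mass_kg:
        raise ValueError("need wet_mass >= dry_mass > 0")
    return exhaust_velocity_m_s * math.log(wet_mass_kg / dry_mass_kg)


# Illustrative assumptions: a chemical rocket versus an ion thruster.
CHEMICAL_EXHAUST_M_S = 4_400.0
ION_EXHAUST_M_S = 30_000.0

# Same hypothetical ship in both cases: 1,000 kg fueled, 800 kg empty.
wet_mass, dry_mass = 1_000.0, 800.0

chemical_dv = delta_v(CHEMICAL_EXHAUST_M_S, wet_mass, dry_mass)
ion_dv = delta_v(ION_EXHAUST_M_S, wet_mass, dry_mass)

print(f"chemical: {chemical_dv:,.0f} m/s from 200 kg of propellant")
print(f"ion:      {ion_dv:,.0f} m/s from 200 kg of propellant")
```

The equation says nothing about how quickly that velocity change is delivered. That depends on thrust, which is why a high exhaust velocity alone does not let an ion drive lift a ship off the ground.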

Continue Reading »
