Oct 20 2023

The Hardware Demands of AI

I am of the generation that essentially lived through the introduction and evolution of the personal computer. I have decades of experience as an active user and enthusiast, so I have been able to notice some patterns. One pattern is the relationship between the power of computing hardware and the demands of computing software. For the first couple of decades of personal computers, the capacity and speed of the hardware were definitely the limiting factors in terms of the end-user experience. Software was essentially fit into the available hardware, and as hardware continually improved, software expanded to fill the available space. The same was true for hard drive capacity – each new drive seemed like a bottomless pit at first, but file sizes quickly increased to take advantage of the new capacity.

In those days my friends and I intimately knew every stat of our current hardware – RAM, hard drive capacity, processor speed, then the number of cores – and we engaged in a friendly arms race as we perpetually leap-frogged each other. The pattern shifted, however, sometime after 2000. For personal computers, hardware power seemed to finally get to the point where it was way more than enough for anything we might want to do. We stopped obsessing over things like processor speed – except, that is, for our gaming computers. Video games were the only everyday application that really stressed the power of our hardware. Suddenly, the stats of your graphics card became the most important stats.

That is beginning to wane also. I know there are gaming jockeys who still build sick rigs, pushing the limits of consumer computing, but for me, as long as my computer is basically up to date, I don’t have to worry about running the latest game. I still pay attention to my graphics card stats, however, especially since VR became a thing. There is always some new application that makes you want a hardware upgrade.

Today that new thing is artificial intelligence (AI), although this is not so much for the consumer as for the big data centers. The latest crop of AI, like ChatGPT, which uses pretrained transformer technology, is hardware hungry. Interestingly, these systems mostly rely on graphics cards, which are among the fastest mass-produced processors out there. The same is true for crypto mining, which led to a shortage of graphics cards and a spike in the price (damn crypto miners). Video games really are an important driver of computing hardware.

Experts estimate that while processing speed doubles about every 18 months (the famous Moore’s Law), the demands of AI are doubling about every 3.5 months. This will lead to an increasing gap between hardware power and the demands of AI software. GPT-3 took 100 times as much processing power to train as GPT, and GPT-4 took 10 times as much as GPT-3. Training GPT-4 reportedly cost $100 million. AI applications are now consuming as much energy as nations – one study estimated that training and running Google’s AI used as much energy as Ireland. By 2027 it is estimated that AI applications will consume 85-134 terawatt-hours annually, as much as countries like Sweden.
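To get a feel for how quickly that gap opens up, here is a minimal Python sketch that simply compounds the two doubling times quoted above. The function names are mine, and the assumption that both trends continue as clean exponentials is a simplification for illustration, not a forecast.

```python
# Doubling periods taken from the estimates quoted in the text.
HARDWARE_DOUBLING_MONTHS = 18.0   # popular statement of Moore's Law
AI_DEMAND_DOUBLING_MONTHS = 3.5   # estimated doubling time of AI compute demand


def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplicative growth after `months`, given a fixed doubling period."""
    return 2 ** (months / doubling_months)


def demand_gap(months: float) -> float:
    """How many times faster demand has grown than hardware after `months`.

    Assumes (hypothetically) that both trends stay perfectly exponential.
    """
    demand = growth_factor(months, AI_DEMAND_DOUBLING_MONTHS)
    hardware = growth_factor(months, HARDWARE_DOUBLING_MONTHS)
    return demand / hardware


if __name__ == "__main__":
    for months in (6, 12, 18, 24, 36):
        print(f"after {months:2d} months, demand has outgrown hardware "
              f"by a factor of about {demand_gap(months):,.0f}")
```

Even over a year and a half the ratio runs well into double digits, which is the point: if these estimates are anywhere near right, ordinary hardware improvement alone cannot keep up.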

The question is – will these hardware and energy use limitations slow down the development and use of AI? If my observations over the last half-century hold, then I would say no. Just as software expands to fill the space created by hardware, hardware is developed specifically to meet the demands of popular software applications, which then feeds back into other software, and around it goes. Basic computing led to video games, which spawned more and more powerful graphics cards, which in turn facilitated crypto mining. (An oversimplification, I know, but I think basically true.) What hardware is in the pipeline that may be fueling the AI of the near future? Here are a couple of possibilities.

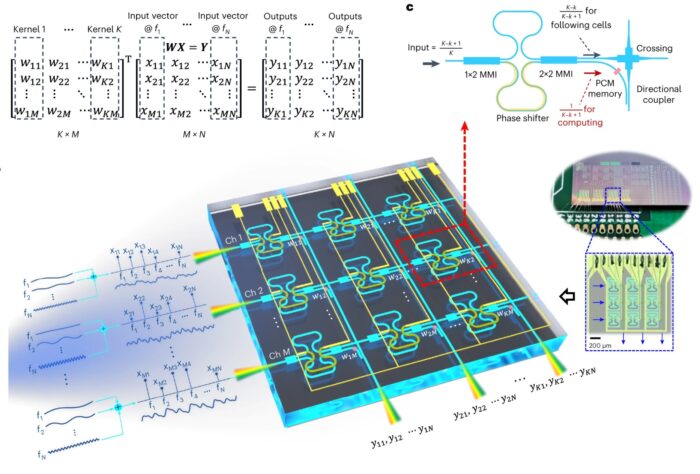

Here is a study recently published in Nature Photonics – “Higher-dimensional processing using a photonic tensor core with continuous-time data.” Essentially this technology uses light instead of electrons to transfer data. One of the advantages of using light (photonics) is that you can encode parallel streams of data in different wavelengths (colors) of light. Use 10 different wavelengths and suddenly you have 10 times the data transfer. Of course you could theoretically use many more than that. In this study they use radio frequencies. They also used a three-dimensional chip design, further increasing the parallel processing. They estimate that with 6 parallel inputs and outputs they can increase the processing energy efficiency and density 100 times. That would mean one data center doing the work of 100 data centers. But using photonics in this way may have much greater potential beyond that. This may put us on a technological track to many orders of magnitude increases in processing speed and efficiency.

Another potential boost to AI is quantum computing. I wrote an overview of quantum computing earlier this year. Basically, quantum computers use qubits instead of bits – they are bits that are entangled and therefore can encode 2^n bits of information, rather than just n bits (with n being the number of qubits). As a result a quantum computer can solve some types of problems in minutes that would take even banks of traditional supercomputers longer than the age of the universe to complete. AI software designed to run on quantum computers could be incredibly more powerful than anything we have today. It’s hard to even imagine the potential.
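One rough way to appreciate that 2^n is to ask how much memory a traditional computer would need just to keep track of the full state of n qubits, since that state is described by 2^n complex numbers. The Python sketch below does that arithmetic. The 16 bytes per number is my assumption (two 64-bit floats per complex value), and the function name is hypothetical.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number as two 64-bit floats


def state_vector_bytes(n_qubits: int) -> int:
    """Bytes needed to store all 2^n complex amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


if __name__ == "__main__":
    for n in (10, 30, 50, 100):
        # Scientific notation keeps the very large values readable.
        print(f"{n:3d} qubits -> {state_vector_bytes(n):.3e} bytes")
```

Each added qubit doubles the total, which is why even modest numbers of qubits quickly outrun anything a conventional machine could hold in memory.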

However, quantum computers are still in development, and have to overcome several critical hurdles. One is error correction. The entangled qubits tend to become disentangled, losing their data, for example. But advances in quantum computers have been steady, and there is no reason to think these advances will slow or stop. One team at Harvard, for example, recently published a technique for self-correcting quantum computing. This could be a significant advance. Encouraging advances seem to be coming out every week. It’s still too early to tell what the timeline and future potential of quantum computers will be, but the rate of advance is extremely encouraging, and none of the problems seem unsolvable.

I think the bottom line is that we saw an incredible advance in AI software technology, leading to a host of new applications that are still exploding. There may be other software advances in the near future as well. It’s now hardware’s turn in the cycle to come up with breakthroughs to match the demands of AI. We may be on the cusp of a photonics revolution. One caveat is that I have been hearing about photonics for years, so it is still hard to tell (from my outsider perspective) how far away this technology is. Perhaps on the heels of photonics we may also see quantum computers come into their own. Or there may be some other hardware technology out there (graphene-based?) poised to take the lead.

The AI industry will likely be worth trillions by the 2030s. That kind of potential spawns massive investments in technological R&D, which is a pretty good predictor of future technology.