Oct 12, 2023

From time to time the Earth gets hit by a wave of energetic particles from the sun – solar flares or even coronal mass ejections (CMEs). In 1859 a large CME hit Earth (known as the Carrington Event), shorting out telegraphs, brightening the sky, and causing auroras deep into equatorial latitudes. If such an event were to occur today, experts are not exactly sure what would happen, but it could take out satellites and short out parts of electrical grids. Interestingly, we have a historical record of how often such events have occurred in the past, mostly from tree rings.

A recent study extends this data with an analysis of subfossil pine trees from the Southern French Alps. These are trees partially preserved in bogs or similar environments, not yet fossilized but on their way. Trees are incredibly valuable as a store of historical information because of their rings – tree rings are a record of their annual growth, and the thickness of each ring reflects environmental conditions that year. Trees also live a long time – the Scots Pines used in the study live for 150-300 years. You can therefore create a record of annual tree rings continuously back in time (dendrochronologically dated tree-ring series) by matching up overlapping tree rings from different trees. We have such records going back about 13,000 years.

Tree rings also record another type of data – the ratio of carbon 14 to carbon 12. This is the basis of carbon 14 dating. The atmosphere has a certain ratio of C14 to C12, which is reflected in living things that incorporate carbon into their structures. When the living thing dies, the clock starts ticking: it stops taking in new carbon, and its C14 decays (into nitrogen 14) while its C12 remains, changing the ratio. One wrinkle for this method, however, is that the C14-C12 ratio in the atmosphere is not fixed; it can fluctuate from year to year. Essentially, cosmic rays hit nitrogen (N14) atoms in the upper atmosphere and convert them into carbon 14. This creates a steady supply of C14 in the atmosphere (and is why it has not all decayed away already).
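
To make the "ticking clock" concrete, here is a minimal sketch of the decay arithmetic behind carbon 14 dating. It assumes the conventional C14 half-life of about 5,730 years (a value not given in the post) and, importantly, a fixed starting ratio, which is exactly the simplification the wrinkle above undermines. The function names are hypothetical, chosen only for illustration; real dating relies on calibration records rather than this idealized formula.

```python
import math

# Conventional half-life of carbon 14 in years (an assumption; not stated in the post).
C14_HALF_LIFE_YEARS = 5730.0


def remaining_fraction(age_years: float) -> float:
    """Fraction of the original C14 still present after age_years."""
    return 0.5 ** (age_years / C14_HALF_LIFE_YEARS)


def estimated_age(fraction_remaining: float) -> float:
    """Idealized age in years, assuming the starting C14 level is known exactly."""
    if not 0.0 < fraction_remaining <= 1.0:
        raise ValueError("fraction_remaining must be in the interval (0, 1]")
    # Each halving of the C14 content corresponds to one half-life.
    return -C14_HALF_LIFE_YEARS * math.log2(fraction_remaining)


if __name__ == "__main__":
    # A sample from the start of a 13,000-year tree-ring record.
    fraction = remaining_fraction(13_000)
    print(f"C14 remaining after 13,000 years: {fraction:.3f}")
    print(f"Age recovered from that fraction: {estimated_age(fraction):.0f} years")
```

If the atmospheric C14 level in the year a ring formed was higher than assumed, this formula would make the ring look younger than it is, which is why an independently dated tree-ring record is so useful.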

Continue Reading »

Oct 10, 2023

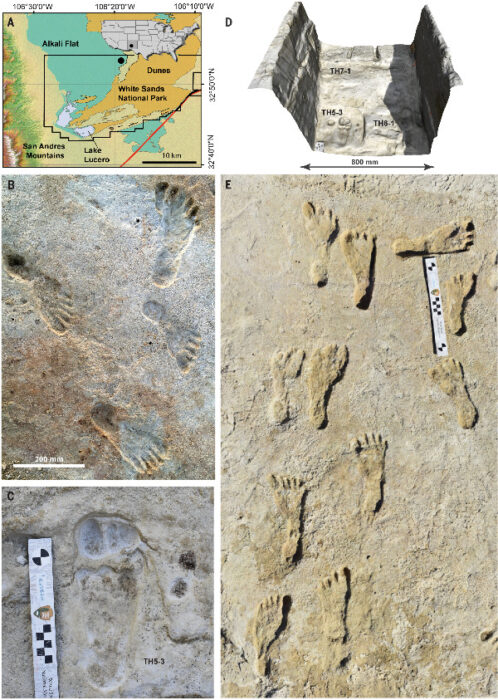

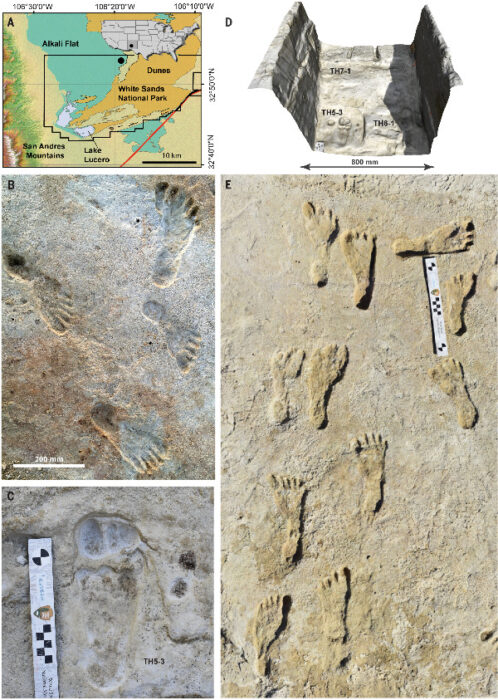

Exactly when Homo sapiens came to the Americas has not been firmly established, and new evidence has just thrown another curve ball into the controversy.

There is evidence of a large culture of humans throughout North America from 12,000-13,000 years ago, called the Clovis Culture. The Clovis people are known almost entirely from the stone points they left behind, which have a characteristic shape – a lance-shaped tip with fluting at the base. The flutes likely made it easier to attach the points to a shaft. Archaeologists have collected over 10,000 Clovis points across North America, indicating the culture was extensive. However, we only have a single human skeleton associated with Clovis points – the burial site of a 1- to 1.5-year-old boy found at the Anzick Site, in Wilsall, Montana. This did allow for DNA analysis showing that the Clovis people were likely direct ancestors of later Native Americans.

Evidence of pre-Clovis people in the Americas has been controversial, but over the years enough evidence has emerged to doom the “Clovis first” hypothesis in favor of the existence of pre-Clovis people. The latest evidence came from the Cooper’s Ferry site in Idaho, which appears to be a human habitation site with hearths and animal bones, carbon dated to 16,500 years ago. But there are still skeptics who say it is not absolutely clear that the site was the result of human habitation. Sometimes the carbon dating itself is called into question – for example, was there mixing of material? The Clovis and pre-Clovis evidence also tends to contain either artifacts or bones, but rarely both together, which makes it difficult to nail down the timing.

Continue Reading »

Oct 09, 2023

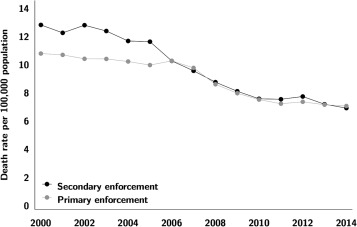

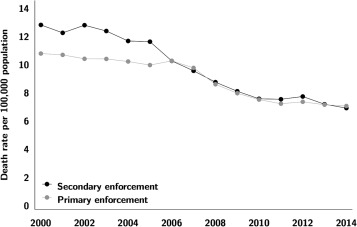

In Part I of this post I outlined some basic considerations in deciding how much the state should impose regulations on people and institutions in order to engineer positive outcomes. In the end the best approach, it seems to me, is a balanced one, where we consider the burden of regulations, both individually and cumulatively, compared to the benefit to individuals and society. We also need to consider unintended consequences and perverse incentives – people still need to feel as if they have individual responsibility, for example. It is a complex balancing act. In the end we should use objective evidence to determine what works and what doesn’t, or at least to be clear-eyed about the tradeoffs. In the US we have the benefit of fifty states which can experiment with various regulations, and this can potentially generate a lot of data.

Let’s take a look first at cigarette smoking regulations and health outcomes. At this point I don’t think I have to spend too much time establishing that smoking has negative health consequences. They have been well-documented in the last century and are not controversial, so we can take that as an established premise. Given that we know smoking is extremely unhealthy, which measures are justified by government in trying to limit smoking? Interestingly, the answer until around the 1980s was – very little. Surgeon General warnings were about it. Smoking in public was accepted (I caught the tail end of smoking in hospitals).

This was always curious to me. Here we have a product which is known to harm and even kill those who use the product as directed. And it’s addictive, which compromises the autonomy of users. It is interesting to think what would happen if a company tried to introduce tobacco smoking as a product today. I doubt it would get past the FDA. But obviously smoking was culturally established before its harmful effects were generally known. I also always thought that the experience of Prohibition created a general reluctance to go down that road again. But then data started coming out about the effects of second-hand smoke, and suddenly the calculus shifted. Now we were not just dealing with the interest of the state in protecting citizens from their own behavior, but with the state protecting citizens from the choices of other citizens. This is entirely different – you may have a right to slowly kill yourself, but not to slowly kill me. The result was lots of data about smoking regulations.

Continue Reading »

Oct 05, 2023

One side benefit of our federalist system is that the US essentially has 50 experiments in democracy. States hold a lot of power, which provides an opportunity to compare the effects of different public policies. There are lots of other variables at play, such as economics, rural vs urban residents, and climate, but with so many different states, and counties within those states, researchers often have enough data to account for these variables.

While the differences among the states go beyond red state vs blue state, this is an important factor when it comes to public policy, and there is at least one fairly consistent ideological difference. Red states tend to favor policies that lean toward liberty and are pro-business. Blue states are more willing to enact public policies that limit freedom or regulate business but are designed to benefit public health. These public health policies are often denigrated by conservatives as the “nanny state” – portraying the government as a caretaker and citizens as children.

Across the 50 states there is more of a continuum than a sharp divide, both politically and in public policy, again providing a natural experiment of the effects and costs of such policies. It’s not my point to say which approach is “right” because there is a tradeoff and a value judgement involved. One bit of conventional wisdom I agree with is that in politics there are no solutions, only tradeoffs. How much freedom are we willing to give up for security, and how much inconvenience are we willing to put up with for improved health?

Continue Reading »

Oct 03, 2023

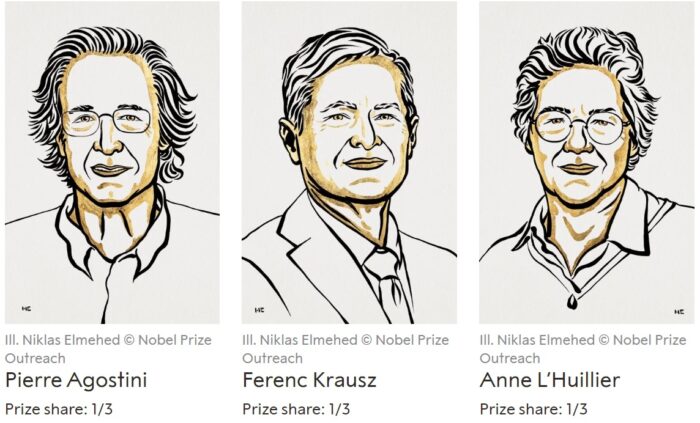

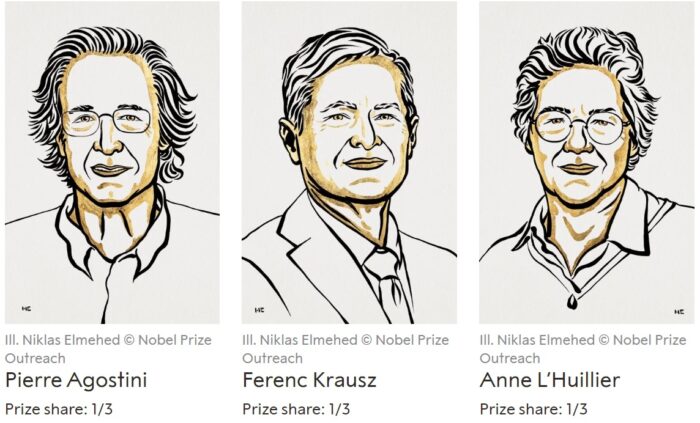

One attosecond (as) is 1×10⁻¹⁸ seconds. An attosecond is to one second what one second is to the age of the universe. It is an extremely tiny slice of time. This year’s Nobel Prize in physics goes to three scientists, Pierre Agostini, Ferenc Krausz, and Anne L’Huillier, whose work contributed to developing pulses of light that are measured in attoseconds.
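
The comparison to the age of the universe can be checked with a few lines of arithmetic. This is a rough sketch, assuming an age of about 13.8 billion years (a figure not given in the post) and a 365.25-day year; the variable names are only illustrative.

```python
# One attosecond in seconds, as defined in the text.
ATTOSECOND_S = 1e-18

# Assumptions not taken from the post: age of the universe and length of a year.
UNIVERSE_AGE_YEARS = 13.8e9
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

# How many attoseconds fit in one second.
attoseconds_per_second = 1 / ATTOSECOND_S

# How many seconds fit in the age of the universe.
seconds_in_universe_age = UNIVERSE_AGE_YEARS * SECONDS_PER_YEAR

# If the analogy were exact this ratio would be 1.
ratio = attoseconds_per_second / seconds_in_universe_age

if __name__ == "__main__":
    print(f"Attoseconds in one second:       {attoseconds_per_second:.2e}")
    print(f"Seconds in the age of universe:  {seconds_in_universe_age:.2e}")
    print(f"Ratio between the two:           {ratio:.1f}")
```

Under these assumptions the two numbers differ by only a factor of a few, so the analogy holds as an order-of-magnitude comparison rather than an exact equality.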

Why are such short pulses of light useful? Because they allow for experiments that capture the physical state of processes that happen extremely fast. In particular, they are the first light pulses fast enough to capture information about the state of electrons in atoms, molecules, and condensed matter.

Anne L’Huillier, only the fifth woman to win the Nobel Prize in Physics, discovered in 1987 that when she shined infrared light through a noble gas it would give off overtones, which are other frequencies of light. The light would give extra energy to atoms in the gas, some of which would then radiate that energy away as additional photons of light. This was foundational work that made possible the later discoveries by Agostini and Krausz. Essentially these overtones were harmonic frequencies of light, multiples of the original light frequency.
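
The relationship between a fundamental frequency and its overtones is simple enough to sketch in code. The example below assumes a fundamental wavelength of 1,000 nm purely for illustration (the post does not say which infrared wavelength was used), and the `harmonic` helper is hypothetical. It shows only the "integer multiple" relationship described above, not the physics of how the gas produces the overtones.

```python
# Speed of light in vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_S = 299_792_458


def harmonic(fundamental_wavelength_nm: float, order: int) -> tuple[float, float]:
    """Return (frequency in Hz, wavelength in nm) of the given harmonic order."""
    if order < 1:
        raise ValueError("order must be a positive integer")
    fundamental_hz = SPEED_OF_LIGHT_M_S / (fundamental_wavelength_nm * 1e-9)
    # The nth harmonic has n times the frequency, hence 1/n of the wavelength.
    return order * fundamental_hz, fundamental_wavelength_nm / order


if __name__ == "__main__":
    # Illustrative infrared fundamental; an assumption, not a value from the post.
    fundamental_nm = 1000.0
    for order in (1, 3, 5, 11, 21):
        frequency_hz, wavelength_nm = harmonic(fundamental_nm, order)
        print(f"harmonic {order:>2}: {frequency_hz:.3e} Hz, {wavelength_nm:.1f} nm")
```

Higher overtones of infrared light quickly reach ultraviolet wavelengths, which have much shorter oscillation periods than the original light.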

In 1991 L’Huillier and her colleagues published follow-up work explaining a plateau observed in the harmonic pulses of light. They found that the high-harmonic generation (HHG) plateau was a single-electron phenomenon. At the time they proposed that, in theory, it might be possible to exploit this phenomenon to generate very short pulses of light.

In 2001 both Agostini and Krausz, in separate experiments, were able to build on this work to produce attosecond-scale pulses of light. Krausz was able to generate pulses of 650 attoseconds, and Agostini of 250 attoseconds. Here are some technical details:

Continue Reading »

Oct 02, 2023

Most readers are probably familiar with the Turing Test – a concept proposed by early computing expert Alan Turing in 1950, and originally called “The Imitation Game”. The original paper is enlightening to read. Turing was not trying to answer the question “can machines think?” He rejected this question as too vague, and instead substituted an operational question which has come to be known as the Turing Test. This involves an evaluator and two subjects who cannot see each other and communicate only by text. The evaluator knows that one of the two subjects is a machine and the other is a human, and they can ask whatever questions they like in order to determine which is which. A machine is considered to have passed the test if it fools evaluators at least some threshold percentage of the time.

But Turing did appear to believe that the Turing Test would be a reasonable test of whether or not a computer could think like a human. He systematically addresses a number of potential objections to this conclusion. For example, he quotes Professor Jefferson from a speech from 1949:

“Not until a machine can write a sonnet or compose a concerto because of thoughts and emotions felt, and not by the chance fall of symbols, could we agree that machine equals brain – that is, not only write it but know that it had written it.”

Turing then rejects this argument as solipsist – that we can only know that we ourselves are conscious because we are conscious. As an aside, I disagree with that. People can know that other people are conscious because we all have similar brains. But an artificial intelligence (AI) would be fundamentally different, and the question is – how is the AI creating its output? As an example of how his test would be sufficient, Turing describes a sonnet writer defending the creative choices in their own sonnet:

What would Professor Jefferson say if the sonnet-writing machine was able to answer like this in the viva voce? I do not know whether he would regard the machine as “merely artificially signalling” these answers, but if the answers were as satisfactory and sustained as in the above passage I do not think he would describe it as “an easy contrivance.” This phrase is, I think, intended to cover such devices as the inclusion in the machine of a record of someone reading a sonnet, with appropriate switching to turn it on from time to time. In short then, I think that most of those who support the argument from consciousness could be persuaded to abandon it rather than be forced into the solipsist position. They will then probably be willing to accept our test.

Continue Reading »