Aug 18, 2023

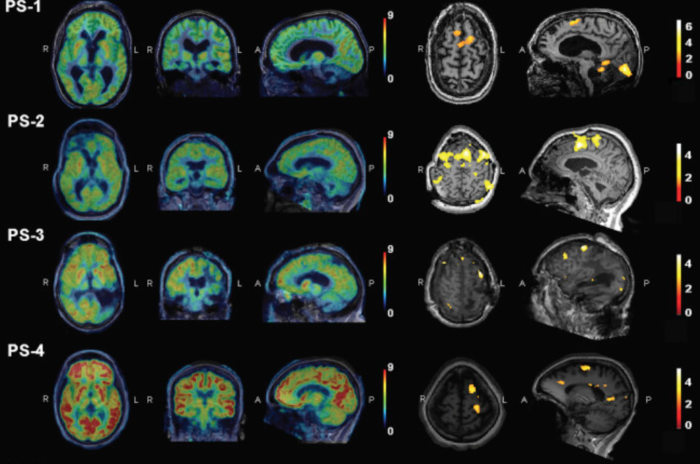

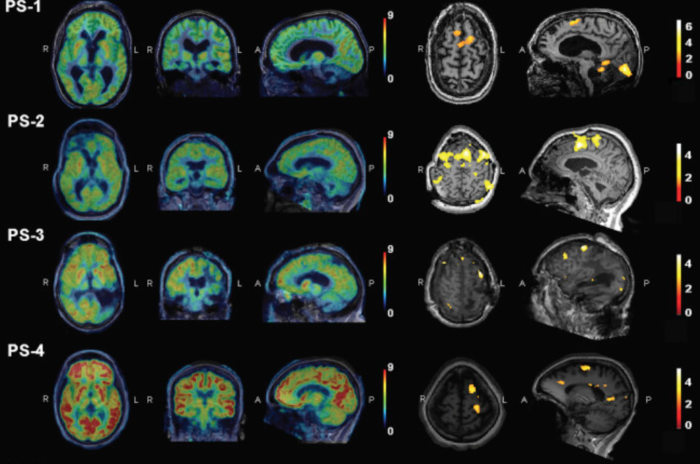

What’s going on in the minds of people who appear to be comatose? This has been an enduring neurological question from the beginning of neurology as a discipline. Recent technological advances have completely changed the game in terms of evaluating comatose patients, and now a recent study takes our understanding one step further by teasing apart how different systems in the brain contribute to conscious responsiveness.

This has been a story I have been following closely for years, both as a practicing neurologist and science communicator. For background, when evaluating patients who have a reduced ability to execute aspects of the neurological exam, there is an important question to address in terms of interpretation – are they not able to perform a given task because of a focal deficit directly affecting that task, are they generally cognitively impaired or have decreased level of conscious awareness, or are other focal deficits getting in the way of carrying out the task? For example, if I ask a patient to raise their right arm and they don’t, is that because they have right arm weakness, because they are not awake enough to process the command, or because they are deaf? Perhaps they have a frozen shoulder, or they are just tired of being examined. We have to be careful in interpreting a failure to respond or carry out a requested action.

One way to deal with this uncertainty is to do a thorough exam. The more different types of examination you do, the better you are able to put each piece into the overall context. But this approach has its limits, especially when dealing with patients who have a severe impairment of consciousness, which gets us to the context of this latest study. For further background, there are different levels of impaired consciousness, but we are talking here about two in particular. A persistent vegetative state is defined as an impairment of consciousness in which the person has zero ability to respond to or interact with their environment. If there is any flicker of responsiveness, then we have to upgrade them to a minimally conscious state. The diagnosis of persistent vegetative state, therefore, is partly based on demonstrating the absence of a finding, which means it is only as reliable as the thoroughness with which one has looked. This is why coma specialists will often do an enhanced neurological exam, looking really closely and for a long time for any sign of responsiveness. Doing this picks up a percentage of patients who would otherwise have been diagnosed as persistent vegetative.

Continue Reading »

Dec 05, 2016

The season finale of Westworld aired last night, a series based on the 1973 film written and directed by Michael Crichton. I won’t give much away, so only very mild spoilers for those who haven’t seen it. I will say the last episode was probably the best of the season.

The basic premise of the film/series is that it takes place in a futuristic theme park in which guests can visit the old west populated by robots that are there solely for their pleasure. They exist to lose gunfights, for sexual pleasure, to be victims or fill whatever role the guests want, and then be recycled to run through their plot loop all over again.

The HBO series uses the story line as an opportunity to explore the basic question of sentience. The robots are hyperrealistic. Unless you cut them open, you cannot tell them from a living human. They are extremely realistic in their behavior as well.

The robots clearly have a very advanced form of artificial intelligence, but are they self-aware? That is a central theme of the series. They have complex behavioral algorithms, they can reason, they express the full range of human emotions, and they have memory. They are kept under control largely by wiping their memory each time they are repaired, so that they don’t remember the horrible things that were done to them.

Some of the robots, however, start to break out of their confines. They “feel” as if they are trapped in a recurring nightmare, and have flashes of memory from their previous loops.

Continue Reading »

Jul 30, 2012

Where is the “seat of consciousness” in the brain? This is often presented as an enduring mystery of modern neuroscience, and to an extent it is. It is a very complex question and we don’t yet have anything like a complete answer, or even a consensus. The question itself may contain false assumptions. What, exactly, is consciousness? Perhaps what we call consciousness emerges from the collective activity of the entire brain, not a subset. Perhaps every network in the brain is conscious to some degree, and what we experience as our consciousness is the aggregate effect of many little consciousnesses.

One way to approach this question (really a set of related questions) is to study different mental states – altered states of consciousness. How those differences relate to brain function is likely to tell us something about the contribution of that brain function to full wakeful consciousness.

A new study by scientists from the Max Planck Institutes of Psychiatry in Munich and for Human Cognitive and Brain Sciences in Leipzig and from Charité in Berlin attempts to do just that. They have studied the brain activity of those in normal dreaming and in a so-called lucid dreaming state.

Continue Reading »

May 17, 2012

I hadn’t planned for this topic to take over my blog this week, but it happens. Judging by the comments there is significant interest in the issue of consciousness, and Kastrup and I are just getting to the real nub of the argument. So here is another installment – a reply to Kastrup’s latest offering. First, however, some background.

Materialism, Dualism, and Idealism

Philosophers of mind, such as David Chalmers, now recognize three general approaches to the question – what is consciousness? Materialism is the view that the mind is what the brain does. This is often stated as the mind is caused by the brain. Some commenters took exception to this phrase, saying it implies a dualist position, that the mind is its own thing, but I disagree. The brain is the physical substance, while the mind or consciousness is a process that emerges from the brain. A dead or deeply comatose brain has no mind, so they are manifestly not the same thing. Language here is a bit imprecise, but I think the phrase – the brain causes the mind – is an acceptable shorthand for the materialist position.

Dualism is the position that consciousness is something separate from the brain and not entirely caused by it. It may be a separate property of the universe (property dualism) or be something beyond the confines of our material universe. Whatever it is, it does not reduce to the firing of neurons in the brain, which cannot, in the opinion of dualists, explain subjective experience.

Continue Reading »

Nov 14, 2011

The diagnosis of coma, specifically persistent vegetative state (PVS), is not as straightforward as it might seem. The current standard of care is to perform a thorough neurological exam in order to assess function. The limitation here, however, is that we are inferring brain functioning from what the patient can do. Some parts of the neurological exam are straightforward, like pupillary reflexes. Reflexes are a more direct way of interrogating the nervous system because the examiner is able to see the response, and therefore the integrity, of one specific pathway. This is very useful in determining which parts of the nervous system are working and which are damaged.

Coma, however, is a condition of impaired consciousness – so we also need to determine, to the best of our ability, the exact level of consciousness of a patient. This is where it can get a little tricky. The parts of the exam that are most useful for determining level of consciousness are response to various types of stimuli and the ability to follow commands. If I say to a patient (without any non-verbal cues), “show me two fingers on your right hand,” and they then raise two fingers on their right hand, that tells me a lot about their function. They can hear, they can understand language sufficiently to understand the command, they are aware enough to make sense of the command and the context of the exam (they know I want them to do something), and they have the motor pathways necessary to move their fingers on the right hand.

The flip side of that, however, is that if the patient is unable to show me two fingers it could mean that they are deaf, aphasic (impaired language), delirious, or paralyzed in that hand. I cannot know for sure that their lack of response is due to impaired level of consciousness. We can compensate for this somewhat by giving various commands – using various body parts, language that is easiest to understand, and as loud as possible to be heard. But this is only a partial compensation. A patient who is locked-in, for example (conscious but paralyzed below the eyes), would give the same lack of response as someone who is in a PVS.

Continue Reading »

Mar 23, 2010

As neuroscientists continue to build a more accurate and sophisticated model of the human brain, finding the neurological correlate of conscious awareness remains a tough nut to crack. The difficulty stems partly from the fact that consciousness is likely not localized in any one specific brain region.

But as our technology advances and we are able to look at brain function in real time and in greater detail, researchers are starting to zero in on the hardwiring that produces consciousness.

In this context, consciousness is operationally defined as being aware of sensory stimulation, as opposed to just being awake. We are not conscious of everything we see and hear, nor of all of the information processing occurring in our own brains. We are aware of only a small subset of input and processing, which is woven together into a continuous and seamless narrative that we experience.

Continue Reading »

Jan 08, 2010

Raymond Tallis is an author and polymath; a physician, atheist, and philosopher. He has criticized post-modernism head on, so he must be all right.

And yet he takes what I consider to be a very curious position toward consciousness. As he writes in the New Scientist: You won’t find consciousness in the brain. From reading this article it seems that Tallis is a dualist in the style of Chalmers – a philosopher who argues that we cannot fully explain consciousness as brain activity, but what is missing is something naturalistic – we just don’t know what it is yet.

Tallis has also written another article arguing that Darwinian mechanisms cannot explain the evolution of consciousness. Curiously, he does not really lay out an alternative, leading me to speculate what he thinks the alternative might be.

Continue Reading »

Nov 13, 2009

The holy grail of modern neuroscience, and perhaps one of the toughest scientific problems we face, is understanding at a fundamental level the nature of consciousness. What is it about our brain function that makes us aware of our own existence?

It is not simply an emergent property of having enough neurons wired together. A popular notion in science fiction is that artificial intelligence may unexpectedly emerge out of a sufficiently powerful computer – such as V’ger or Skynet. But this scenario is highly unlikely. Consciousness appears to be a specific function of thinking systems, not just a consequence of complexity.

For example, the cerebellum is a specialized part of the brain involved with motor coordination. It contains about the same number of neurons and connections that the cortex does, but the cerebellum itself is not conscious nor does it appear to contribute to consciousness.

Continue Reading »

Oct 13, 2008

My blog from last week on the upcoming Turing test provoked a great deal of interesting conversation in the comments – which is great. Short blog entries are often insufficient to fully explore a deep topic. Often I am just scratching the surface, and so there is often much more meat in the comments than the original post.

Some points came up in the comments that I thought would be good fodder for a follow-up post.

Siener wrote:

Think about it this way: You are saying that a system can exists that acts like it is conscious, but unless it has some magical additive, some élan vital with absolutely zero affect on its behaviour it cannot be truly conscious.

That is not what I am saying at all. From my many previous posts on the topic it is clear that I am not a dualist of any sort. I essentially agree with Daniel Dennett’s approach to the question of dualism. When I wrote that behavior alone is insufficient to determine if computer AI is conscious I was not referring to some magical extra ingredient, but a purely materialistic aspect of the AI itself.

Continue Reading »

Mar 30, 2007

Since the topic of artificial intelligence has garnered so much interest, and there were many excellent follow-up questions, I thought I would dedicate my blog today to answering them and extending the discussion.

Noël Henderson asked: “Would a non-chemical AI unit, even with very complex processing and memory capabilities, be able to experience what we normally refer to as emotion? Is self-awareness (and in my layman’s understanding I tend to think in terms of ‘ego’) dependent upon the ability to experience emotion?”

Emotion is just one more thing that our brains do. There is no reason that AI with a different substrate cannot also create the experience of emotions. Emotions are a manifestation of how information is processed in the brain – which is why I said that an AI brain would not only have to be able to hold the information of the brain but would also need to duplicate its processing of that information. For example, the knowledge of the death of someone that the AI brain has learned to associate with positive feelings could produce the experience of sadness and loss by affecting the degree of activity in patterns of circuits that contribute to mood, focus our attention on pleasant or unpleasant details, make us anticipate our future happiness, etc. Basically, the same thing that happens in a biological brain. In other words, the experience of emotion is just as much a physical aspect of the brain as any other cognitive phenomenon.

Continue Reading »
