Feb 12 2024

The Exoplanet Radius Gap

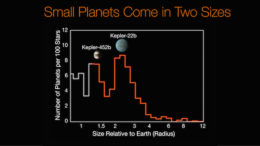

As of this writing, there are 5,573 confirmed exoplanets in 4,146 planetary systems. Exoplanets are planets around stars other than our own sun, and that is enough of them that we can do some statistics to describe what’s out there. One curious pattern that has emerged is a relative gap in the radii of exoplanets between 1.5 and 2.0 Earth radii. What is the significance, if any, of this gap?


First we have to consider if this is an artifact of our detection methods. The most common method astronomers use to detect exoplanets is the transit method: carefully observe a star over time, precisely measuring its brightness. If a planet moves in front of the star, the brightness will dip, remain low while the planet transits, and then return to its baseline. This produces a classic light curve that astronomers recognize as a planet orbiting that star in our line of sight from Earth. The first time such a dip is observed, that is a suspected exoplanet, and if the same dip is seen again, that confirms it. This also gives us the orbital period. This method is biased toward exoplanets with short periods, because they are easier to confirm. If an exoplanet has a period of 60 years, that would take 60 years to confirm, so we haven’t confirmed a lot of those.
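To get a feel for how small these dips are, here is a rough sketch. It assumes the simplest possible geometry: the planet is a dark disk crossing the middle of a uniformly bright star, so the fractional dip is just the ratio of the two disk areas. The function name and the Sun-like default star are my own choices for illustration, and the radii are approximate reference values.

```python
# Approximate radii in kilometers (standard reference values).
R_SUN_KM = 695_700.0
R_EARTH_KM = 6_371.0


def transit_depth(planet_radius_earths, star_radius_suns=1.0):
    """Fractional dip in brightness: the share of the star's disk the planet covers.

    Simplifying assumptions: uniformly bright star, central transit,
    and a planet that gives off no light of its own.
    """
    planet_km = planet_radius_earths * R_EARTH_KM
    star_km = star_radius_suns * R_SUN_KM
    return (planet_km / star_km) ** 2


# Compare an Earth-sized planet with planets on either edge of the radius gap.
for radius in (1.0, 1.5, 2.0):
    depth_ppm = transit_depth(radius) * 1e6
    print(f"{radius:.1f} Earth radii -> dip of about {depth_ppm:.0f} parts per million")
```

Because the dip scales with the square of the planet's radius, a planet at 2.0 Earth radii blocks four times as much light as an Earth-sized one, all else being equal.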

There is also the wobble method. The gravitational tug from a large planet or other dark companion makes the star it orbits wobble in a regular pattern, which we can detect in the star's motion, most often as a periodic Doppler shift in its light. This method favors more massive planets closer to their parent star. Sometimes we can also directly observe exoplanets by blocking out their parent star and seeing the tiny bit of reflected light from the planet. This method favors large planets distant from their parent star. There are also a small number of exoplanets discovered through gravitational microlensing, an effect of general relativity.
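Why does the wobble method favor massive, close-in planets? A back-of-the-envelope sketch helps. It assumes a circular orbit seen edge-on and a planet much lighter than its star, in which case the star circles the shared center of mass at the planet's orbital speed scaled down by the mass ratio. The function name and the example planets are mine, and the constants are rounded.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
M_SUN_KG = 1.989e30    # approximate masses in kilograms
M_JUPITER_KG = 1.898e27
M_EARTH_KG = 5.972e24
AU_M = 1.496e11        # one astronomical unit in meters


def star_wobble_speed(planet_mass_kg, orbit_radius_m, star_mass_kg=M_SUN_KG):
    """Speed of the star around the shared center of mass, in m/s.

    Assumes a circular orbit and a planet much lighter than the star.
    """
    planet_speed = math.sqrt(G * star_mass_kg / orbit_radius_m)
    return planet_speed * planet_mass_kg / star_mass_kg


examples = {
    "Earth at 1 AU": (M_EARTH_KG, 1.0 * AU_M),
    "Jupiter at 5.2 AU": (M_JUPITER_KG, 5.2 * AU_M),
    "Jupiter-mass planet at 0.05 AU": (M_JUPITER_KG, 0.05 * AU_M),
}
for label, (mass_kg, radius_m) in examples.items():
    print(f"{label}: star moves at about {star_wobble_speed(mass_kg, radius_m):.2f} m/s")
```

Under these assumptions an Earth twin moves its star by less than a tenth of a meter per second, while a Jupiter-mass planet in a tight orbit moves it by more than a hundred, which is why the big, close-in planets are found first.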

None of these detection biases, however, explains the gap between 1.5 and 2.0 Earth radii. It’s also likely not a statistical fluke, given the number of exoplanets we have discovered. Therefore it may be telling us something about planetary evolution. But there are lots of variables that determine the size of an exoplanet, so it can be difficult to pin down a single explanation.

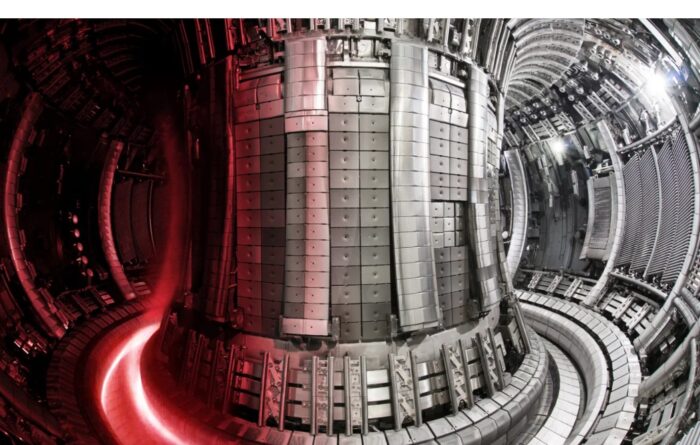

Don’t get excited. It’s always nice to see incremental progress being made with the various fusion experiments happening around the world, but we are still a long way off from commercial fusion power, and this experiment doesn’t really bring us any closer.

Have you ever been in a discussion where the person with whom you disagree dismisses your position because you got some tiny detail wrong or didn’t know the tiny detail? This is a common debating technique. For example, opponents of gun safety regulations will often use the relative ignorance of proponents regarding gun culture and technical details about guns to argue that they therefore don’t know what they are talking about and their position is invalid. But, at the same time, GMO opponents will often base their arguments on a misunderstanding of the science of genetics and genetic engineering.

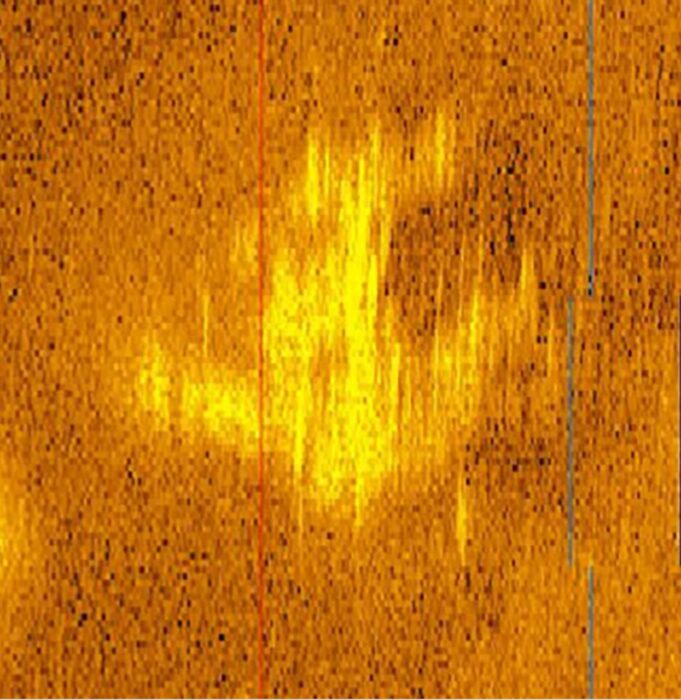

Is this sonar image, taken 16,000 feet below the surface about 100 miles from Howland Island, that of a downed Lockheed Model 10-E Electra plane?

Homer: Not a bear in sight. The Bear Patrol must be working like a charm.

It’s difficult to pick winners and losers in the future tech game. In reality you just have to see what happens when you try out a new technology in the real world with actual people. Many technologies that look good on paper run into logistical problems, have difficulty scaling, fall victim to economics, or turn out to be something people just don’t like using. Meanwhile, surprise hits become indispensable or can transform the way we live our lives.

Elon Musk

There is an ongoing battle in our society to control the narrative, to influence the flow of information, and thereby move the needle on what people think and how they behave. This is nothing new, but the mechanisms for controlling the narrative are evolving as our communication technology evolves. The latest addition to this technology is the large language model AIs.

My

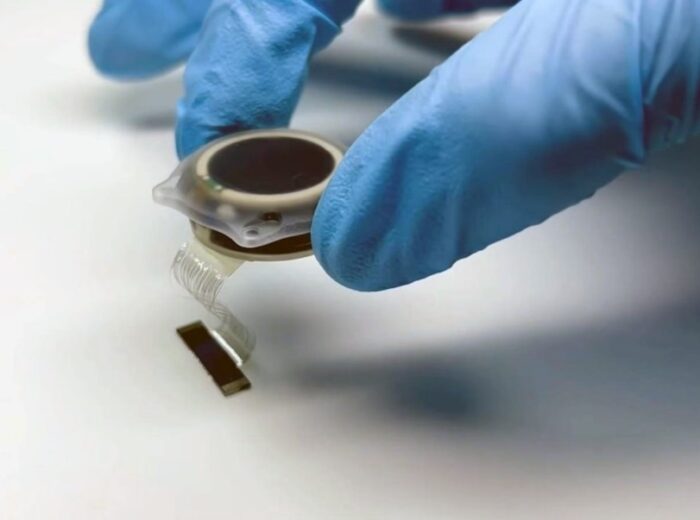

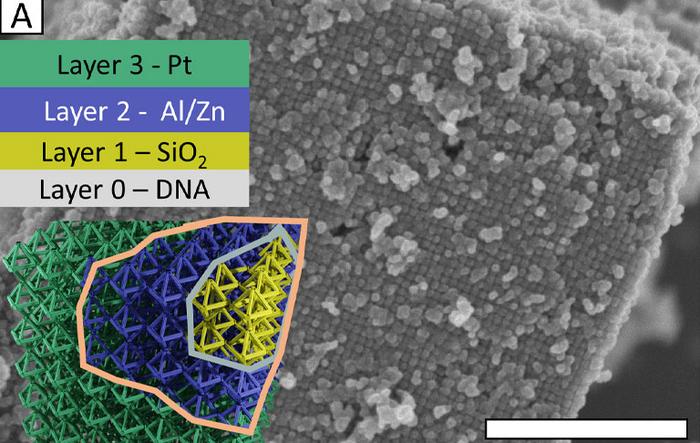

Arguably the type of advance that has the greatest impact on technology is materials science. Technology can advance by doing more with the materials we have, but new materials can change the game entirely. It is no coincidence that we mark different technological ages by the dominant material used, such as the Bronze Age and Iron Age. But how do we invent new materials?
