Jul 22 2022

Overconfidence and Opposition to Scientific Consensus

There has been a lot of research exploring the phenomenon of rejection of established science, even to the point of people believing demonstrably absurd things. This is a complex phenomenon, involving conspiracy thinking, scientific illiteracy, group identity, polarization, cognitive styles, and media ecosystems, but the research has made significant progress unpacking these various contributing factors. A recent study adds to the list, focusing on the rejection of scientific consensus.

For most people, unless you are an expert in a relevant field, a good first approximation of what is most likely to be true is to understand and follow the consensus of expert scientific opinion. This is just probability – people whose understanding of a topic is orders of magnitude beyond yours are simply more likely to have an accurate opinion on that topic than you are. This does not mean experts are always right, or that there is no role for minority opinions. It mostly means that non-experts need to have an appropriate level of humility, and at least a basic understanding of the depth of knowledge that exists. I always invite people to think about the topic they know best, and then consider how much less the average non-expert knows about it. Well, you are that non-expert on every other topic.

This is also why humility is the cornerstone of good scientific skepticism and critical thinking. We are all struggling to be just a little less wrong. As science enthusiasts, we are trying to understand topics at a generally superficial technical level. This can still be a very meaningful and generally accurate understanding – just not technically deep or rigorous. It’s one thing to say – yeah, I get the basic concept of quantum computers, how they work, and why they can be so powerful. It’s another to be able to read and understand the technical literature, let alone contribute to it. People often get into trouble when they confuse their lay understanding of a topic with deep expert understanding, and this usually ends with them becoming cranks.

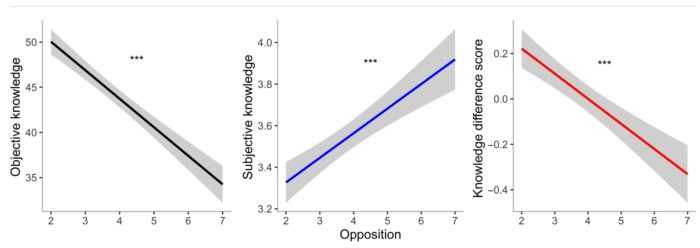

The recent study, “Knowledge overconfidence is associated with anti-consensus views on controversial scientific issues” by Nicholas Light et al., is not surprising, but its results are reassuringly solid. The researchers compared people’s objective knowledge about various controversial topics (their knowledge of objective facts) with their subjective knowledge (their assessment of their own knowledge) and with their opposition to consensus views. They found a robust effect in which opposition increased as the gap between subjective and objective knowledge widened, that is, as people rated their own knowledge higher relative to what they actually knew (see the graphs above).

This may remind you of the Dunning-Kruger effect – the less people know, the more they overestimate their knowledge (although subjective knowledge still decreases, just not as fast as objective knowledge). This is more of a super DK: those who know the least think they know the most. This has been found previously with specific topics – the safety of GM food, genetic manipulation, and vaccines and autism. In addition to the super DK effect, this study shows that it correlates well with opposition to scientific consensus.

This study does not fully establish what causes such opposition; it just correlates it with a dramatic lack of humility, lack of knowledge, and overestimation of one’s knowledge. There are studies and speculation trying to discern the ultimate causes of this pattern, and those causes are likely different for different issues. The classic explanation is the knowledge deficit model, which holds that this pattern emerges from a lack of objective knowledge. But this model does not hold for most topics, although knowledge is still important and can even be dominant with some issues, like GM food. There is also the “cultural cognition” model, which posits that people hold beliefs in line with their culture (including political, social, and religious subcultures). This model is also more relevant for some issues than for others, such as the rejection of evolutionary science.

Other factors that have been implicated include cognitive style, with intuitive thinkers being more likely to fall into this opposition pattern than analytical thinkers. Intuitive thinking also correlates with another variable, conspiracy thinking, which itself correlates with the rejection of consensus. Conspiracy thinking seems to come in two flavors. In opportunistic conspiracy thinking, the conspiracy seems to reinforce a false belief rather than drive it. But there are also dedicated conspiracy theorists, who will accept any conspiracy, and for them conspiracy thinking appears to be the driver.

The most important thing for the individual is to be aware of these various phenomena: to get outside yourself and think about your own thinking (metacognition). Are you just going along with your social group and identity, are you overestimating your own knowledge, are you trapped in a biased media ecosystem? We need to ask ourselves how we really know if something is true, and start at a point of maximal humility. Be most skeptical of those views which reinforce your group identity. If your views on a scientific topic contrast with the consensus of scientific opinion, that should give you great pause. The chances are overwhelming that you are wrong; that is just a fact. If you think that GM foods are not safe, that vaccines cause autism, that the world is flat, or that anthropogenic global warming is a hoax, then you are objectively wrong (at least to a very high degree of confidence). Have the humility to at least consider the possibility that you are wrong, that perhaps you are relying on unreliable information. At the very least, do not casually dismiss the fact that a solid consensus of true experts disagrees with you.