Jul 24 2023

Making Computers More Efficient

An analysis in 2021 found that 10% of the world’s electricity production is used by computers, including personal use, data centers, the internet, and communication centers. The same analysis projected that this share was likely to rise to 20% by 2025. That may be an underestimate, because it did not factor in the recent explosion of AI and large language models. Training a single large language model alone can cost $4-5 million and consume a great deal of energy.

I am not trying to be alarmist about these statistics. Civilization gets a lot of useful work out of all this computing power, and it likely displaces much less efficient ways of doing things. Holding a meeting over Zoom instead of in person, for example, can save a great deal of energy. As long as we use all that computing power reasonably, it’s all good. We can debate the utility of specific applications, like Bitcoin mining, but overall the dramatic advance of computing is a good thing. It does, however, shift our energy use, and it represents the electrification of some technology. We therefore have to factor it in when extrapolating our future electricity use (just as we need to consider the effect of shifting our car fleet from burning gasoline to using electricity).

The situation also presents an opportunity. As our world becomes more digital and more of our energy use shifts to computers, improving the efficiency of this one technology yields a growing improvement in our overall energy efficiency. For example, if computers used 20% of the world’s electricity, a 50% improvement in computer efficiency (halving the energy computers need for the same work) would cut total electricity demand by 10% once fully implemented. Such improvements would obviously roll out over years, but the example shows how high the stakes are becoming for computer power efficiency. The industry needs to focus not just on making things bigger, better, and faster, but also on making them more efficient. We also need to think twice before adopting wasteful practices.
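
The arithmetic behind this example is worth making explicit. The short Python sketch below, with hypothetical function names, multiplies computing’s share of electricity by the fractional cut in computers’ own energy use, assuming everything else stays constant. It reads the 50% figure as halving the energy computers need for the same work. If the figure were instead read as getting 1.5 times as much work per unit of energy, the cut would be one third, so the second calculation shows that case for comparison.

```python
def total_demand_drop(computing_share: float, computing_reduction: float) -> float:
    """Fraction by which total electricity demand falls (hypothetical helper).

    computing_share: fraction of all electricity used by computers (0.20 = 20%).
    computing_reduction: fraction by which computers' own energy use falls
        (0.50 = computers need half the energy for the same work).
    Assumes all other electricity use stays constant.
    """
    if not (0.0 <= computing_share <= 1.0 and 0.0 <= computing_reduction <= 1.0):
        raise ValueError("shares and reductions must be between 0 and 1")
    return computing_share * computing_reduction


def reduction_from_efficiency_gain(gain: float) -> float:
    """Energy cut implied by doing (1 + gain) times more work per unit of energy."""
    return 1.0 - 1.0 / (1.0 + gain)


if __name__ == "__main__":
    # 20% share, computers halve their energy use -> total demand falls 10%
    print(f"{total_demand_drop(0.20, 0.50):.0%}")  # 10%
    # Alternative reading: 1.5x work per unit energy cuts computing energy by a third
    cut = reduction_from_efficiency_gain(0.50)
    print(f"{total_demand_drop(0.20, cut):.1%}")  # 6.7%
```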

This is why a recent news item caught my eye: improvements in computing technology (both hardware and software) can have a big impact on our energy demands. Here is the technical summary:

Ambipolar dual-gate transistors based on low-dimensional materials, such as graphene, carbon nanotubes, black phosphorus, and certain transition metal dichalcogenides (TMDs), enable reconfigurable logic circuits with a suppressed off-state current. These circuits achieve the same logical output as complementary metal–oxide semiconductor (CMOS) with fewer transistors and offer greater flexibility in design.

Transistors, the basic components of logic gates, normally conduct either electrons or electron holes, but not both. An electron hole is simply a location where an electron could be but isn’t. What the researchers have created are “ambipolar transistors” that can conduct both. The effect was essentially to halve the number of transistors needed to build a given circuit. That makes the overall circuit more compact, so it can be smaller, more powerful, or some combination of the two, which increases the overall efficiency of the circuit.
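
To see why a transistor that can conduct either carrier type makes logic reconfigurable, here is a highly idealized switch-level sketch in Python. It assumes a dual-gate device in which one gate (called the “polarity gate” here) sets whether the channel behaves as n-type (electrons) or p-type (holes), and the other (the “control gate”) turns it on or off. This is a textbook simplification of controllable-polarity transistors, not the device or circuit from the study, and the function names are hypothetical. Under that assumption, a single device conducts exactly when its two gate inputs agree, which is the XNOR function, whereas a conventional transistor can only compare its one gate against a polarity fixed at fabrication.

```python
from itertools import product


def unipolar_conducts(gate: int, n_type: bool) -> bool:
    """Idealized conventional transistor with polarity fixed at fabrication.

    n-type conducts when its gate is high (1); p-type conducts when it is low (0).
    """
    return gate == 1 if n_type else gate == 0


def ambipolar_conducts(polarity_gate: int, control_gate: int) -> bool:
    """Idealized dual-gate ambipolar transistor (hypothetical switch model).

    polarity_gate = 1 -> channel acts n-type (electron conduction)
    polarity_gate = 0 -> channel acts p-type (hole conduction)
    The device then behaves like the matching unipolar transistor.
    """
    return unipolar_conducts(control_gate, n_type=(polarity_gate == 1))


if __name__ == "__main__":
    # One device, two inputs: it is on only when the inputs match (XNOR).
    for a, b in product((0, 1), repeat=2):
        print(a, b, "on" if ambipolar_conducts(a, b) else "off")
```

If the polarity input is treated as a configuration bit rather than a data signal, the same hardware can be switched between acting like an n-type and a p-type device, which is one way to picture what “reconfigurable” means here.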

This is another one of those proof-of-concept laboratory demonstrations. This technology would have to scale in order to translate to the desktop or data center. But we are seeing lots of these kinds of advances, and enough of them percolate through to keep the technology moving forward.

An aspect of this study that should not be missed is the use of “low-dimensional materials” such as graphene. Graphene-based computers and other electronics are definitely a promising technology, but unfortunately still a “future” technology. We are still in the “5-10 year” phase of predictions for when applications will hit the market, which could mean it will actually take much longer. Graphene, a 2-dimensional form of carbon, has incredible properties for electronics. It conducts very well with little waste heat, and it can be doped with other elements to make semiconductors. It holds the promise of increasing computing speed by 1000 times, with a dramatic reduction in energy use and waste heat.

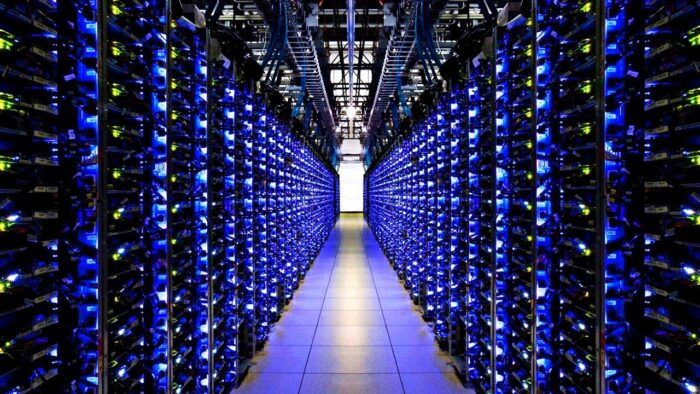

Most of the energy used by data centers, for example, goes to two things: the computing processes themselves (around 50% of energy use) and cooling (30-40% of energy use). There are efforts to build data centers near other buildings that need to be heated, so the waste heat can be put to good use. Ideally, though, new computer technology would simply reduce this waste heat, which is one of the promises of graphene computing. On a side note, making computer chips with much less waste heat is essential for the future of brain-machine interface technology, because biological tissue doesn’t deal well with the waste heat from computer chips.
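
These rough figures also suggest why cutting waste heat at the chip pays off twice. The Python sketch below uses the split above (50% computing, 35% cooling as a midpoint of the 30-40% range, with the remainder treated as fixed overhead) and assumes, for illustration only, that cooling energy scales in proportion to the heat the computing hardware produces. Since essentially all the electricity a chip uses ends up as heat, reducing that heat by some fraction also reduces computing energy by the same fraction. The class and function names are hypothetical. The script also reports total facility energy divided by computing energy, which corresponds to the industry’s power usage effectiveness (PUE) ratio if “computing” is read as IT equipment.

```python
from dataclasses import dataclass


@dataclass
class DataCenterSplit:
    """Fractions of a data center's total energy (illustrative rough figures)."""
    computing: float = 0.50
    cooling: float = 0.35  # midpoint of the 30-40% range
    # Whatever remains (lighting, power conversion, etc.) is treated as fixed.

    @property
    def other(self) -> float:
        return 1.0 - self.computing - self.cooling


def total_after_chip_savings(split: DataCenterSplit, heat_reduction: float) -> float:
    """Total energy relative to today (1.0) if chips produce `heat_reduction` less heat.

    Assumptions: chip energy use falls by the same fraction as its heat output,
    cooling scales linearly with that heat, and the 'other' share is unchanged.
    """
    scale = 1.0 - heat_reduction
    return split.computing * scale + split.cooling * scale + split.other


def pue(split: DataCenterSplit) -> float:
    """Total facility energy divided by computing (IT) energy."""
    return 1.0 / split.computing


if __name__ == "__main__":
    today = DataCenterSplit()
    print(f"PUE today: {pue(today):.2f}")  # 2.00
    # Chips that produce half as much waste heat
    print(f"Total energy: {total_after_chip_savings(today, 0.5):.3f}")  # 0.575
```

Under these assumptions, halving chip waste heat trims the whole facility’s energy use by roughly 40%, more than the chips’ own savings alone, because cooling shrinks along with it.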

Even if these developments and others like them come to fruition, I doubt they will decrease the amount of energy our computing infrastructure uses. Energy use by computing will still increase, but hopefully these kinds of advances will limit that increase, or perhaps allow it to level off, so that we can essentially do more with the same amount or percentage of energy. It also means that prioritizing efficiency in research is important. This is true of any industry that grows so big it stresses resources. Efficiency becomes an increasingly important component of future development, because otherwise you can’t keep growing the industry indefinitely. Computing is not just growing; to some extent we are concentrating our resources into this one industry, shifting energy expenditure from other areas to the digital world. This will continue to raise the stakes for computing efficiency in the future.
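
The “do more with the same energy” point can be stated as a simple compounding relationship: energy used equals computing work divided by efficiency (work per unit of energy). The Python sketch below uses made-up annual growth rates, not figures from any study, and a hypothetical function name. It shows that energy use keeps rising when demand for computing grows faster than efficiency improves, and levels off when the two grow at the same rate.

```python
def energy_over_time(years: int, demand_growth: float, efficiency_growth: float) -> list[float]:
    """Relative energy use for each year from 0 to `years`, starting at 1.0.

    energy = work / efficiency, with work and efficiency each compounding
    annually. The growth rates passed in are illustrative assumptions.
    """
    energy = []
    for t in range(years + 1):
        work = (1.0 + demand_growth) ** t
        efficiency = (1.0 + efficiency_growth) ** t
        energy.append(work / efficiency)
    return energy


if __name__ == "__main__":
    # Hypothetical rates: demand for computing grows 20% per year.
    faster_demand = energy_over_time(10, demand_growth=0.20, efficiency_growth=0.10)
    matched = energy_over_time(10, demand_growth=0.20, efficiency_growth=0.20)
    print(f"Efficiency +10%/yr: energy x{faster_demand[-1]:.2f} after 10 years")
    print(f"Efficiency +20%/yr: energy x{matched[-1]:.2f} after 10 years")  # x1.00
```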