May 09 2022

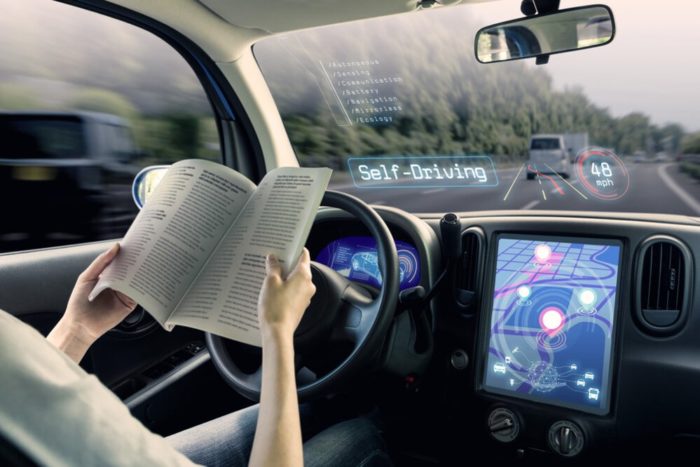

Making Better Self-Driving Cars

Human behavior is complex and can be very difficult to predict. This is one of the challenges of safe driving – what to do when right of way, for example, is ambiguous, or there are multiple players all interacting with each other? When people learn to drive they first master the rules and practice their driving skills in controlled situations. As they progress they gain confidence driving in more and more complicated environments. Even so, there are about 17,000 car accidents per day in the US.

It should not have been surprising, therefore, that autonomous or self-driving car technology would also find the task incredibly challenging. Self-driving algorithms not only have to learn the rules and master the basic technology of sensing the environment with sufficient accuracy and in real time, but also have to master increasingly complex and inherently unpredictable environments. A decade ago, when the technology was rapidly advancing, enthusiasts predicted that it would be essentially ready for the mass market by the early 2020s – so, now. But they came to realize that the last 5% or so of performance ability was perhaps more challenging than the first 95%. Some problems become exponentially more difficult to solve when new variables are added (we still haven’t solved the three-body problem). We have seen this with other technologies, like fusion, general AI, speech recognition, and some applications of stem cells, where early predictions were overly optimistic.

This is the challenge that AI specialists are working on now – how to get self-driving car AI systems to perform well enough to handle the challenging situations that crop up with regularity while driving? To further complicate the issue, these systems have to function in real time, so the solution cannot involve a dramatic increase in computing that would slow down the whole system.

Fortunately people are (collectively) clever and flexible and there are a lot of us, and experts have been working diligently to crack this problem. A recent advance in self-driving technology is worth noting, because it takes a different approach to the problem that may ultimately get us across the finish line. MIT researchers have developed a machine learning system (called M2I) that handles the problem of predicting behavior in a complex intersection with multiple actors by breaking it down into individual components. First it identifies the actors in the scene and then determines who has the right-of-way. That person, whether driver, cyclist, or pedestrian, is considered the passer, while those who do not have the right-of-way are yielders. The passer is treated as an independent actor: because they get to act first, their behavior is not necessarily determined by what anyone else does. Yielders, on the other hand, have to wait for the passer to act before they can move.

The system calculates the trajectories of the passer and yielder for the next 8 seconds, then picks the six most likely scenarios. So far they have trained this model on a vast dataset of actual behavior at intersections (Waymo Open Motion Dataset), but limited to one passer and one yielder. Early performance is very promising, out-performing existing machine learning models. The goal is to build the system on these individual calculations of one passer and one yielder. Essentially the system would work by breaking down a complex intersection into individual pairs of actors and conditional responses. This approach has the potential to be very powerful, while using less computing power.
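To make the passer/yielder idea a little more concrete, here is a toy sketch in Python. It is not the MIT team's code, and every name and probability in it is made up for illustration. It assumes the passer's options can be scored on their own, the yielder's options can be scored given what the passer does, and the two scores multiply to rank the combined scenarios.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """One possible future for an actor (hypothetical stand-in for a trajectory)."""
    label: str
    prob: float


def top_joint_scenarios(passer_candidates, yielder_given_passer, k=6):
    """Rank (passer, yielder) pairs by P(passer) * P(yielder | passer).

    yielder_given_passer is a function: passer Candidate -> list of
    yielder Candidates, conditioned on that passer behavior.
    """
    scored = []
    for p in passer_candidates:
        for y in yielder_given_passer(p):
            scored.append((p.prob * y.prob, p.label, y.label))
    # Most likely joint scenario first; keep only the k best.
    scored.sort(key=lambda s: s[0], reverse=True)
    return scored[:k]


# Invented numbers: the passer acts independently of the yielder.
PASSER = [Candidate("go straight", 0.7), Candidate("turn left", 0.3)]


def yielder_response(passer):
    # The yielder's likely behavior depends on what the passer does.
    if passer.label == "go straight":
        return [Candidate("wait", 0.9), Candidate("creep forward", 0.1)]
    return [Candidate("wait", 0.6), Candidate("go", 0.4)]


if __name__ == "__main__":
    for prob, passer, yielder in top_joint_scenarios(PASSER, yielder_response, k=3):
        print(f"{prob:.2f}  passer: {passer:12s} yielder: {yielder}")
```

The point of the split is that each actor's options are scored separately and only then combined, rather than trying to score every combined scenario from scratch. A real system would work with predicted trajectories over the next several seconds rather than hand-written labels.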

It’s too early to tell if this specific approach, or something like it, will get us across the finish line. Either way, it is an incremental advance in self-driving technology, and it’s encouraging that progress is being made.

Also keep in mind, we already have self-driving cars, but there is a question of the level to which they are self-driving – it’s not all-or-none. There are five recognized levels of self-driving (plus zero, which is no self-driving). 1 = driver assist. 2 = partial self-driving, or advanced driver assist where the car can take over in emergencies such as to brake to avoid a collision. Levels 3-5 all involve the car fully self-driving, with a driver present but not driving at all. Level 3 = limited self-driving where the car will demand that the driver take over at some point. Level 4 will never demand driver control, but is limited to certain driving conditions. Level 5 = full unlimited self-driving capability.

Right now we are at level 3, where the car can self-drive in many conditions but a human driver has to be present and able to take control at any time – so you cannot nap while the car is driving. We are quickly heading toward level 4, which is where I think wide adoption of the technology will happen. With level 4 self-driving the car can fully take over, for example, once you get on the highway, or on back roads where you will only encounter single-lane intersections. During these times the human driver can nap or do other tasks. Combined with GPS, such cars could map a route to your destination where it may be able to be in control the entire time, but the route may not be the shortest route in order to avoid complex situations the self-driving feature cannot handle.
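The routing trade-off can be sketched as a shortest-path search that is only allowed to use road segments the car can handle on its own. This is an illustrative sketch, not how any real navigation system works; the road map, travel times, and the `car_can_handle` flag are all invented for the example.

```python
import heapq


def shortest_route(graph, start, goal, autonomous_only=False):
    """Dijkstra's algorithm over graph: node -> [(neighbor, minutes, car_can_handle)].

    With autonomous_only=True, segments the car cannot drive itself are skipped.
    Returns (total_minutes, path) or None if no route exists.
    """
    queue = [(0, start, [start])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbor, minutes, car_can_handle in graph.get(node, []):
            if autonomous_only and not car_can_handle:
                continue  # too complex for the self-driving feature
            heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return None


# Hypothetical map: the downtown route is shorter but has complex intersections.
ROADS = {
    "home": [("downtown", 5, False), ("highway", 8, True)],
    "downtown": [("office", 5, False)],
    "highway": [("office", 7, True)],
}

if __name__ == "__main__":
    print(shortest_route(ROADS, "home", "office"))
    print(shortest_route(ROADS, "home", "office", autonomous_only=True))
```

In this made-up map the fastest trip goes through downtown in 10 minutes, while the route the car can drive entirely by itself takes the highway and needs 15.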

I suspect we will be stuck in level 4 for a long time, and getting that last bit of reliability to be considered level 5 (as good as or better than a human driver in all situations) again will take longer than enthusiasts predict. But level 4 is fine; we can work with that. Infrastructure and driving laws may need to be tweaked to adapt to this technology, but it will be worth it. As more cars on the road are level 4 self-driving the situation actually becomes safer, because it’s more predictable. Humans are an unwanted variable, because they are less predictable than algorithms. Road accidents will likely plummet with increased adoption of self-driving technology. You can still get home if you are drunk, or really sleepy. A single moment of distraction will not lead to a potentially fatal accident.

Self-driving cars are coming, just a decade or two later than we thought.