Aug 05 2025

It’s Just A Correlation

Did you know that the number of Google searches for cat memes correlates tightly (P-value < 0.01) with England’s performance in cricket World Cups? What’s going on here? Is interest in funny cat videos driven by the excitement created by cricket victories? Perhaps cat memes are especially inspiring to English cricket players. Or, more likely, this is just a spurious correlation, despite the impressive P-value.

Every day we are confronted with science news items that report on some correlation between variables. Observational or correlational research makes up a lot of science. But what does this evidence actually mean? The first question one should ask when confronted with a claim of a correlation is – is this correlation even real? What I mean is – is this a true correlation resulting from some specific causation, or is this just a random alignment due to pure chance? We need to sort this out before we pontificate on what the correlation might mean. How do we approach this question?

There are a number of questions we can ask:

- How strong is the correlation (statistically speaking)?

- How did the observation come about?

- What methods were used in determining the correlation?

- Has it been independently replicated?

- Is there any plausibility to the correlation?

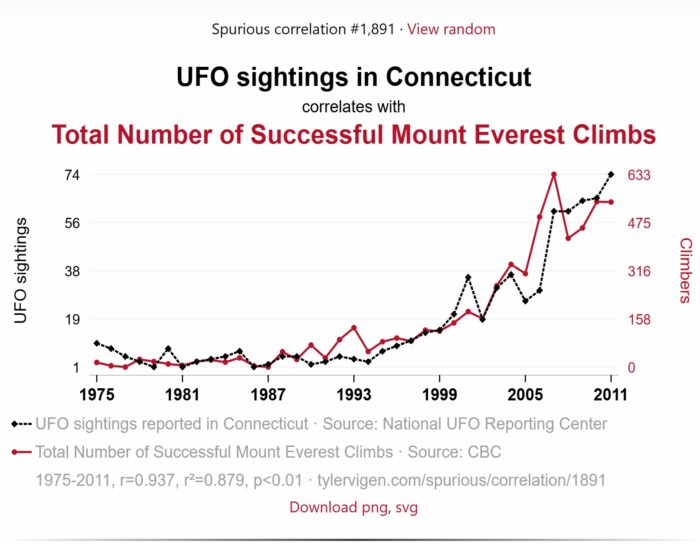

The first question, how strong is the correlation, may seem like the most important, but I would argue it is probably the least. Sure, we can likely ignore weak correlations, but even a very strong correlation may be entirely spurious. To nicely demonstrate this, visit the website Spurious Correlations. You can generate statistically strong but utterly meaningless correlations all day long. The number of lawyers in the US and organic food sales correlate quite tightly.

The website was created to demonstrate a key fact relevant to correlations – if you make a sufficiently large number of comparisons you will find correlations by chance alone. This is a form of p-hacking, where you essentially cheat in order to increase the chance of finding a correlation. The p-value assumes one comparison between two variables. It’s meaningless if you make multiple comparisons. Does this happen in science? All the time.
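You can see this effect with nothing but random numbers. The sketch below is my own illustration, not how the website works: it compares one made-up series against many other made-up series that have no relationship to it, and keeps the strongest correlation it stumbles on. The function names, the series length of 10, and the choice of Gaussian noise are all assumptions picked for the demonstration.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def strongest_chance_correlation(n_candidates, n_points, seed=0):
    """Compare one random 'target' series against many unrelated random
    series and return the largest |r| found by chance alone."""
    rng = random.Random(seed)
    target = [rng.gauss(0, 1) for _ in range(n_points)]
    best = 0.0
    for _ in range(n_candidates):
        candidate = [rng.gauss(0, 1) for _ in range(n_points)]
        best = max(best, abs(pearson_r(target, candidate)))
    return best


# Same seed, so the 1000-comparison run includes the single comparison.
single = strongest_chance_correlation(n_candidates=1, n_points=10)
many = strongest_chance_correlation(n_candidates=1000, n_points=10)
print(f"best |r| after 1 comparison:     {single:.2f}")
print(f"best |r| after 1000 comparisons: {many:.2f}")
```

Every series here is pure noise, so any correlation the search turns up is spurious by construction. The more candidates you allow yourself, the more impressive the "best" one looks.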

To highlight the problem of multiple comparisons, journalist John Bohannon did a study looking at dark chocolate consumption. He measured a number of variables and found that consumption related to weight loss (while dieting), and published this as a significant result. But – it was fake, because he did not control for multiple comparisons. The media uncritically ate it up – dark chocolate makes you lose weight. But this process happens for real probably daily – scientists find a dubious correlation, publish it, and the media credulously spreads it.

You need to know – why did they look for this correlation in the first place, how many comparisons did they make, and then did they replicate the correlation with an independent set of data? Even then, I would want to see the result consistently replicate before I take it seriously.
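Why the number of comparisons matters so much comes down to simple arithmetic. Here is a rough sketch, assuming the comparisons are independent of each other (real study variables often are not) and using the Bonferroni correction as one common, conservative way to control for multiple comparisons. The function names are mine, chosen for illustration.

```python
def chance_of_false_positive(alpha, n_comparisons):
    """Probability that at least one of n independent comparisons crosses
    the significance threshold alpha when no real effect exists."""
    return 1 - (1 - alpha) ** n_comparisons


def bonferroni_threshold(alpha, n_comparisons):
    """Per-comparison threshold that keeps the overall false-positive
    rate at or below alpha (Bonferroni correction)."""
    return alpha / n_comparisons


for m in (1, 5, 20, 100):
    risk = chance_of_false_positive(0.05, m)
    print(f"{m:>3} comparisons: {risk:.0%} chance of a fluke, "
          f"corrected threshold {bonferroni_threshold(0.05, m):.4f}")
```

Under these assumptions, a study that quietly checks 20 variables at the usual 0.05 threshold has roughly a 64% chance of producing at least one "significant" result from noise alone.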

Let’s say the correlation holds up, it replicates, and it is probably real. The next step is determining what it means, and this is not always easy. Generically we can say that if A correlates with B then perhaps A causes B, B causes A, or C causes both A and B. That third option is what we call a confounding factor, and it is very common. The lawyers and organic food correlation above may be legit, because they could both simply be correlating with increasing population.
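The "C causes both A and B" case is easy to simulate. In this sketch (invented data, not real lawyer or food-sales figures) a hidden variable C drives both A and B, which otherwise have nothing to do with each other. The partial correlation formula is a standard way to ask how much of the A-B relationship is left once C is accounted for; the noise levels and sample size are arbitrary assumptions.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def partial_r(r_ab, r_ac, r_bc):
    """Correlation of A and B after removing the linear effect of C."""
    return (r_ab - r_ac * r_bc) / ((1 - r_ac ** 2) * (1 - r_bc ** 2)) ** 0.5


rng = random.Random(1)
n = 2000
# C is the hidden driver (think "population"); A and B depend only on C.
c = [rng.gauss(0, 1) for _ in range(n)]
a = [ci + rng.gauss(0, 0.5) for ci in c]
b = [ci + rng.gauss(0, 0.5) for ci in c]

r_ab = pearson_r(a, b)
r_controlled = partial_r(r_ab, pearson_r(a, c), pearson_r(b, c))
print(f"A vs B, ignoring C:        r = {r_ab:.2f}")
print(f"A vs B, controlling for C: r = {r_controlled:.2f}")
```

The raw correlation between A and B comes out strong, yet it largely vanishes once C is controlled for. The catch is that this only works if you thought to measure C in the first place.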

This is the primary weakness of observational or purely correlational data – it is difficult to think of all possible confounding variables. In controlled experiments you control for all variables, so you can isolate the variable of interest and draw direct causal conclusions. Not so with observational data.

To show how tricky this can be there is the now classic example of the marshmallow test. In a seminal study researchers would give one marshmallow to a child and say – you can eat this whenever you want. But, I will leave the room and come back in 10 minutes with two marshmallows. If when I come back that marshmallow is uneaten, then you get two. This was designed as a test for executive function, and researchers over decades correlated the ability to defer gratification with all kinds of positive life outcomes.

Then someone asked – what if the children don’t trust that the adult will come back with two marshmallows? Then taking the bird in the hand is a rational choice, not a failure of willpower. It turns out this correlates as well: children from less secure households were more likely to take the one marshmallow. In their world it does not make sense to trust that adults will come through on their promises. And of course, coming from such a background correlates with all the negative life outcomes studied.

Does all this mean that correlations are worthless as evidence? No. The spurious correlation website states that “correlation is not causation” but this framing can be misleading. I prefer “correlation is not necessarily causation” – because it can be. For decades the tobacco industry denied that smoking causes lung cancer because “correlation is not causation”, and the research connecting smoking to cancer is correlational (you cannot do a study where you randomize people to smoke or not smoke).

However, we can use multiple correlations to triangulate to the most probable causation. It turns out that duration of smoking correlates with cancer risk, stopping reduces the risk, and there is a dose-response curve in terms of cigarettes per day and filtered vs unfiltered. There is also a plausible mechanism in that tobacco smoke contains a number of carcinogens. And yet, with a straight face, the tobacco industry tried to claim that cancer causes smoking (because the stress of cancer led people to seek out the calming effects of smoking).

Sometimes correlations are real and sometimes they mean exactly what we think they mean.

So – when confronted with a claim that A causes B, ask yourself – is this controlled data or observation, is the correlation real, and if so what is the most plausible causation and how has that been confirmed, if at all? We should not just dismiss all correlations, but we should not just accept them either, or any one interpretation of what they mean.

This fits with how we should approach all scientific questions. Plausibility matters. Context matters. And we need to have a fairly high threshold of evidence before we accept a claim as probably true. We also need to understand that the general media mostly does not do this. They tend to publish claims uncritically, often with the most sensational interpretation possible. So you have to be your own filter.