May 19 2020

Low Accuracy in Online Symptom Checkers

A new study published in Australia evaluates the accuracy of 27 online symptom checkers, or diagnostic advisers. The results are pretty disappointing. The authors found:

The 27 diagnostic SCs listed the correct diagnosis first in 421 of 1170 SC vignette tests (36%; 95% CI, 31–42%), among the top three results in 606 tests (52%; 95% CI, 47–59%), and among the top ten results in 681 tests (58%; 95% CI, 53–65%). SCs using artificial intelligence algorithms listed the correct diagnosis first in 46% of tests (95% CI, 40–57%), compared with 32% (95% CI, 26–38%) for other SCs. The mean rate of first correct results for individual SCs ranged between 12% and 61%. The 19 triage SCs provided correct advice for 338 of 688 vignette tests (49%; 95% CI, 44–54%). Appropriate triage advice was more frequent for emergency care (63%; 95% CI, 52–71%) and urgent care vignette tests (56%; 95% CI, 52–75%) than for non‐urgent care (30%; 95% CI, 11–39%) and self‐care tests (40%; 95% CI, 26–49%).

More distressing than the fact that the first choice was correct only 36% of the time is that the correct diagnosis was in the top 10 only 58% of the time. I would honestly not expect the correct diagnosis to be in the #1 slot most of the time. For any list of symptoms there are a number of possibilities. If there are 3-4 likely diagnoses, listing the correct one first about a third of the time is reasonable. You could argue that the problem there is simply not ordering the top choices optimally.

But not getting the correct diagnosis in the top 10 is a completely different problem. This implies that the correct diagnosis was entirely missed 42% of the time.
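For anyone who wants to check the arithmetic, here is a quick sketch using only the counts quoted from the study above. The variable and function names are my own, and it assumes that any test where the correct diagnosis fell outside the top ten counts as "entirely missed."

```python
# Counts taken from the study abstract quoted above.
total_tests = 1170
listed_first = 421
in_top_three = 606
in_top_ten = 681


def pct(count, total):
    """Return count/total as a percentage rounded to the nearest whole number."""
    return round(100 * count / total)


# Assumption: a diagnosis that never appears in the top ten is "entirely missed".
missed_entirely = total_tests - in_top_ten

print("Listed first: ", pct(listed_first, total_tests), "%")
print("In top three: ", pct(in_top_three, total_tests), "%")
print("In top ten:   ", pct(in_top_ten, total_tests), "%")
print("Missed:       ", pct(missed_entirely, total_tests), "%")
```

That leaves 489 of the 1170 tests in which the correct diagnosis did not appear in the top ten at all, which is where the 42% figure comes from.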

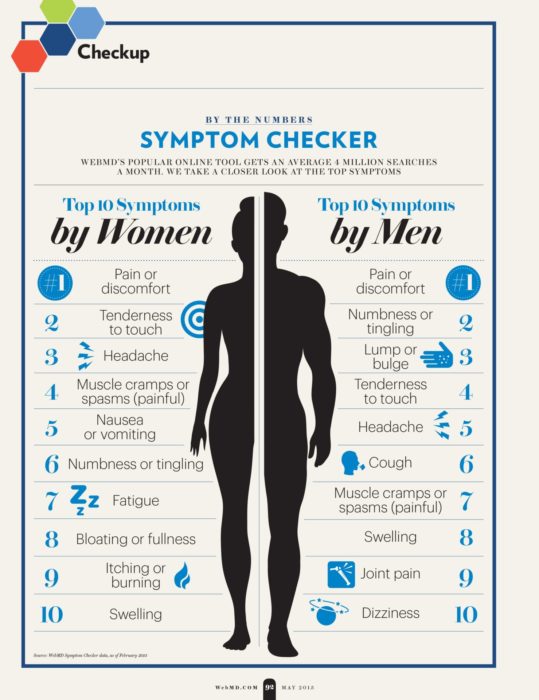

A word of caution on interpreting these results – they may not reflect real-world use. This is because people using online symptom checkers are more likely to have common problems (because common things are common). I did some of my own checking with several online symptom checkers (like WebMD and Mayo Clinic). For common stuff, like carpal tunnel syndrome, they do well. (One caveat to my caveat: as a doctor I may be better at describing symptoms than the average person.) When I started to describe less common entities, they started to fail, and even entirely missed the diagnosis. The examples used in the study may not reflect the distribution of common to rare diseases among people using symptom checkers out in the world.

But even with this caution, the outcomes are still pretty bad. It may also be true that people are more likely to use a symptom checker if they have something unusual, and not for super common things like a cold or carpal tunnel. There are also other studies showing poor performance.

It is also worth pointing out a couple of details. Symptom checkers using AI performed much better than those not using AI, listing the correct diagnosis first in 46% of tests versus 32%. Also, the rate of correct first results for individual systems ranged from 12% to 61%. One problem the authors point out, therefore, is the lack of regulation, which leads to variability in quality. So these online symptom checkers could be much better, and some of them are, but there is simply no quality control.

Perhaps more important than whether or not these self-diagnosis apps work well is the triage function – giving advice on if and where to seek medical attention. Overall they gave appropriate advice only 49% of the time, which is very concerning. They tended to be overly cautious as well, erring more on the side of advising people to go to the ER rather than not. This is probably better than the other type of error, but could lead to overuse of ER resources.

We definitely need more data on how such systems are being used and their real-world effect. I have concerns about them from basic principles and my experience as a doctor. Often people use me as their “symptom checker” or for triage. What I have found is that people tend to fall into three broad categories when doing this. Some just want more information, and are basically reasonable. But sometimes people really don’t want to go to the ER or see their doctor, and they are trying to use me as an excuse not to. They basically want my permission to avoid dealing with the issue, because it is inconvenient or they are afraid. Then there are those at the other end of the spectrum – those who are anxious and looking for more things to be anxious about. They want to hear about all the horrible things they might have, and if anything are looking for justification for even more utilization of health care resources.

This is partly why online symptom checkers are always going to be limited (and why I am not surprised by these poor results). Taking a history from a patient is a dynamic process that involves multiple layers. One of those layers is determining what the patient’s narrative of their own illness is. Everyone has a story they use to make sense of what they are experiencing, and this story biases which information they choose to give and how they present it. They may also change the information to fit the narrative. This narrative is often personal, but may also have a cultural angle. Further, people have different personalities, as I described above (and much more), and this colors how they present information as well.

Taking a history is not a passive process, but an active investigation. You have to deconstruct the story you are given, determine the objective facts as best as possible, put them into context, and then figure out how best to reconstruct all the information into the most likely medical scenarios (the differential diagnosis). No symptom checker is going to be able to do this. AI algorithms may approximate this process, which is why the symptom checkers that use them work better, but even then the human element is necessary.

This study does highlight the fact that we need more uniform quality for these apps. I’m not sure how best to accomplish this goal, but it is a worthy goal.

But the data I really want to see, which would be difficult to get, is this – what is the net effect of using an online symptom checker in the real world? Is it net positive or negative? It could be either way, and is probably both in different contexts. How much anxiety is produced when a serious diagnosis comes up first but really is a terrible match for the patient? This brings up another issue – doctors are trained in how to give information to patients, which is also a dynamic interaction. Just listing possible diagnoses is not terribly useful, or even ethical in some cases.

For me, the bottom line is that online symptom checkers are a dubious idea to begin with, but at least they should perform optimally. A poorly performing symptom checker is clearly a negative thing.