Apr 16 2024

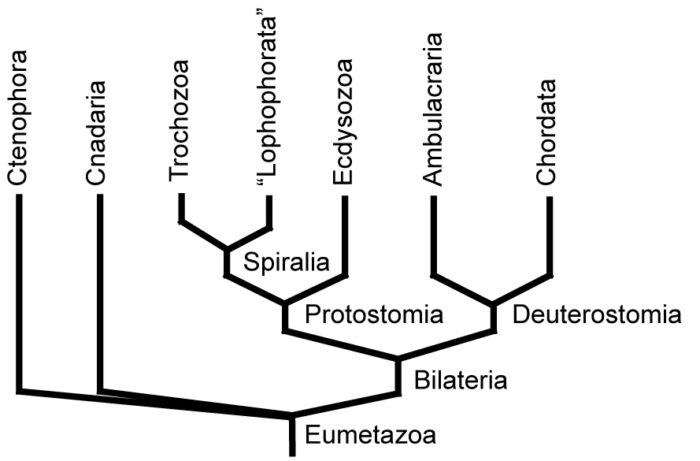

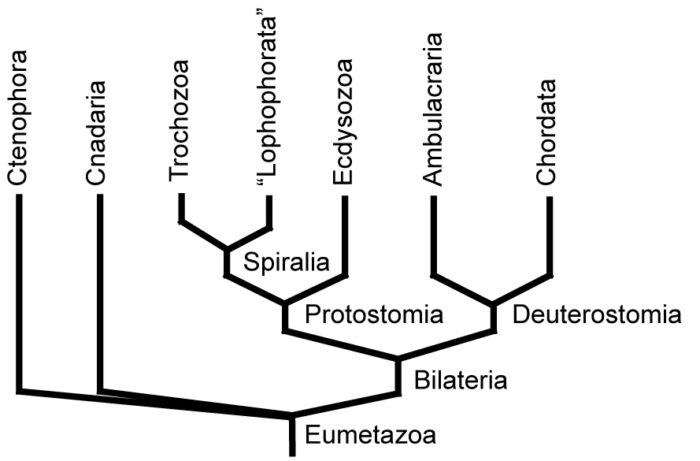

Evolution deniers (I know there is a spectrum, but generally speaking) are terrible scientists and logicians. The obvious reason is that they are committing the primary mortal sin of pseudoscience – working backwards from a desired conclusion rather than following evidence and logic wherever it leads. They therefore latch onto arguments that are fatally flawed because they feel they can use them to support their position. One could literally write a book using bad creationist arguments to demonstrate every type of poor reasoning and pseudoscience (I should know).

A classic example is an argument mainly promoted as part of so-called “intelligent design”, which is just evolution denial desperately seeking academic respectability (and failing). The argument goes that natural selection cannot increase information, only reduce it. It does not explain the origin of complex information. For example:

A big obstacle for evolutionary belief is this: What mechanism could possibly have added all the extra information required to transform a one-celled creature progressively into pelicans, palm trees, and people? Natural selection alone can’t do it—selection involves getting rid of information. A group of creatures might become more adapted to the cold, for example, by the elimination of those which don’t carry the genetic information to make thick fur. But that doesn’t explain the origin of the information to make thick fur.

I am an educator, so I can forgive asking a naive question. Asking it in a public forum in order to defend a specific position is more dodgy, but if it were done in good faith, that could still propel public understanding forward. But evolution deniers continue to ask the same questions over and over, even after they have been definitively answered by countless experts. That demonstrates bad faith. They know the answer. They cannot respond to the answer. So they pretend it doesn’t exist, or when confronted directly, respond with the equivalent of, “Hey, look over there.”

Continue Reading »

Feb 20 2024

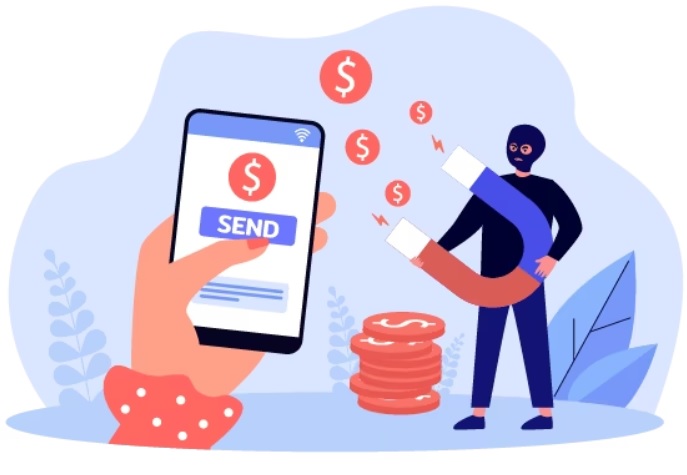

Good rule of thumb – assume it’s a scam. Anyone who contacts you, or any unusual encounter, assume it’s a scam and you will probably be right. Recently I was called on my cell phone by someone claiming to be from Venmo. They asked me to confirm if I had just made two fund transfers from my Venmo account, both in the several hundred dollar range. I had not. OK, they said, these were suspicious withdrawals and if I did not make them then someone has hacked my account. They then transferred me to someone from the bank that my Venmo account is linked to.

I instantly knew this was a scam for several reasons, but even just the overall tone and feel of the exchange had my spidey senses tingling. The person was just a bit too helpful and friendly. They reassured me multiple times that they would not ask for any personal identifying information. And there was the constant and building pressure that I needed to act immediately to secure my account, but not to worry, they would walk me through what I needed to do. I played along, to learn what the scam was. At what point was the sting coming?

Meanwhile, I went directly to my bank account on a separate device and could see there were no such withdrawals. When I pointed this out they said that was because the transactions were still pending (but I could stop them if I acted fast). Of course, my account would show pending transactions. When I pointed this out I got a complicated answer that didn’t quite make sense. They gave me a report number that would identify this event, and I could use that number when they transferred me to someone allegedly from my bank to get further details. Again, I was reassured that they would not ask me for any identifying information. It all sounded very official. The bank person confirmed (even though it still did not appear on my account) that there was an attempt to withdraw funds and sent me back to the Venmo person who would walk me through the remedy.

Continue Reading »

Feb 13 2024

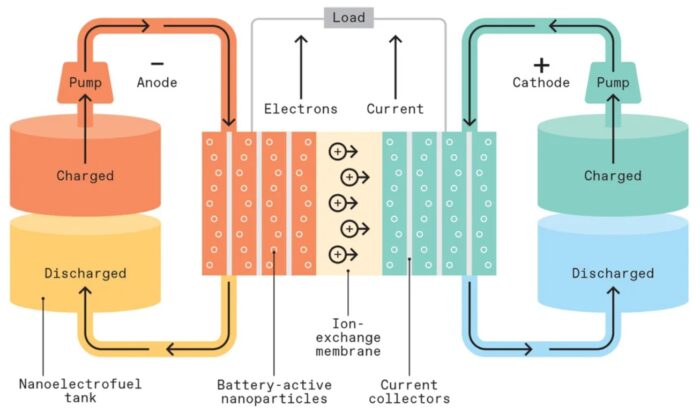

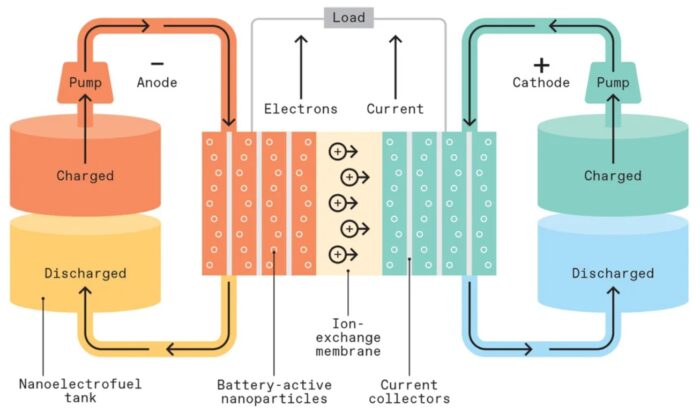

Battery technology has been advancing nicely over the last few decades, with a fairly predictable incremental improvement in energy density, charging time, stability, and lifecycle. We now have lithium-ion batteries with a specific energy of 296 Wh/kg – these are in use in existing Teslas. This translates to battery electric vehicles (BEVs) with ranges from 250-350 miles per charge, depending on the vehicle. That is more than enough range for most users. Incremental advances continue, and every year we should expect newer Li-ion batteries with slightly better specs, which add up quickly over time. But still, range anxiety is a thing, and batteries with that range are heavy.

What would be nice is a shift to a new battery technology with a leap in performance. There are many battery technologies being developed that promise just that. We actually already have one, shifting from graphite anodes to silicon anodes in the Li-ion battery, with an increase in specific energy to 500 Wh/kg. Amprius is producing these batteries, currently for aviation but with plans to produce them for BEVs within a couple of years. Panasonic, which builds 10% of the world’s EV batteries and contracts with Tesla, is also working on a silicon anode battery and promises to have one in production soon. That is basically a doubling of battery capacity from the average in use today, and puts us on a path to further incremental advances. Silicon anode lithium-ion batteries should triple battery capacity over the next decade, while also making a more stable battery that uses fewer (or no – they are working on this too) rare earth elements and no cobalt. So even without any new battery breakthroughs, there is a very bright future for battery technology.
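To get a feel for what those specific-energy numbers mean in practice, here is a minimal Python sketch of the arithmetic. The 296 and 500 Wh/kg figures come from the text above; the 75 kWh pack size is a hypothetical value I picked purely for illustration, and the calculation counts cell mass only, ignoring casing, cooling, and wiring.

```python
# Specific-energy figures quoted in the post (Wh/kg).
GRAPHITE_ANODE_WH_PER_KG = 296
SILICON_ANODE_WH_PER_KG = 500

# Hypothetical pack size, chosen only for illustration (not from the post).
PACK_ENERGY_KWH = 75


def cell_mass_kg(pack_energy_kwh: float, specific_energy_wh_per_kg: float) -> float:
    """Mass of cells needed to store the given energy, ignoring packaging."""
    return pack_energy_kwh * 1000 / specific_energy_wh_per_kg


graphite_mass = cell_mass_kg(PACK_ENERGY_KWH, GRAPHITE_ANODE_WH_PER_KG)
silicon_mass = cell_mass_kg(PACK_ENERGY_KWH, SILICON_ANODE_WH_PER_KG)
improvement = SILICON_ANODE_WH_PER_KG / GRAPHITE_ANODE_WH_PER_KG

print(f"Graphite-anode cells: {graphite_mass:.0f} kg")
print(f"Silicon-anode cells:  {silicon_mass:.0f} kg")
print(f"Specific-energy ratio: {improvement:.2f}x")
```

Measured against the 296 Wh/kg cells specifically, the ratio comes out to roughly 1.7x. The same stored energy at lower mass can be spent either on a lighter vehicle or on more range.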

But of course, we want more. Battery technology is critical to our green energy future, so while we are tweaking Li-ion technology and getting the most out of that tech, companies are working to develop something to replace (or at least complement) Li-ion batteries. Here is a good overview of the best technologies being developed, which include sodium-ion, lithium-sulphur, lithium-metal, and solid state lithium-air batteries. As an aside, the reason lithium is a common element here is that it is the third-lightest element (after hydrogen and helium) and the first that can be used for this sort of battery chemistry. Sodium is right below lithium on the periodic table, so it is the next lightest element with similar chemistry.

Continue Reading »

Feb 05 2024

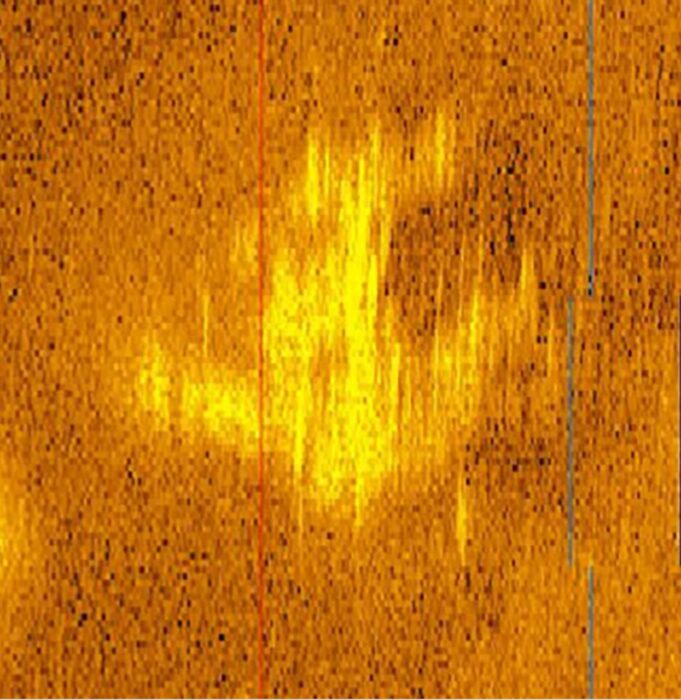

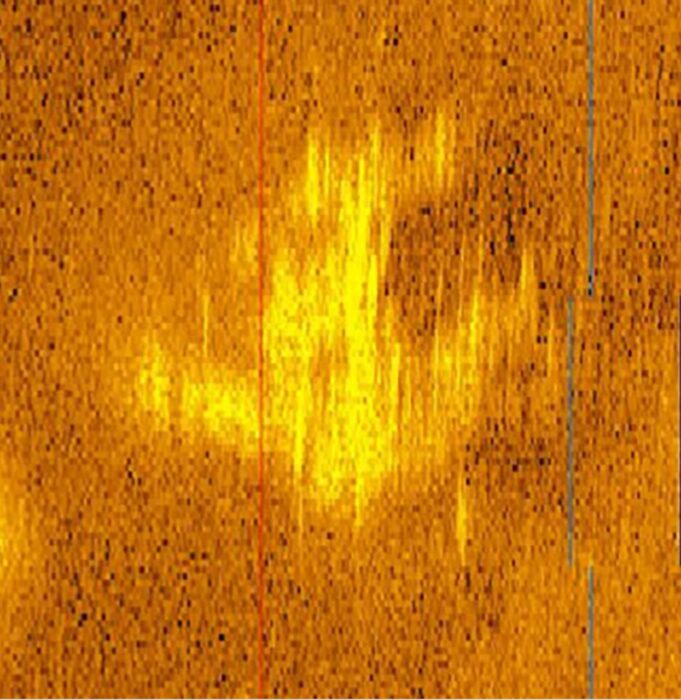

Is this sonar image, taken at 16,000 feet below the surface about 100 miles from Howland Island, that of a downed Lockheed Model 10-E Electra plane? Tony Romeo hopes it is. He spent $9 million to purchase an underwater drone, the Hugin 6000, then hired a crew and scoured 5,200 square miles in a 100-day search hoping to find exactly that. He was looking, of course, for the lost plane of Amelia Earhart. Has he found it? Let’s explore how we answer that question.

First some quick background – most people know Amelia Earhart was a famous (and much beloved) early female pilot, the first woman to fly solo across the Atlantic. She was engaged in a mission to be the first female pilot to circumnavigate the globe (with her navigator, Fred Noonan). She started off in Oakland, California, flying east. She made it all the way to Papua New Guinea. From there her plan was to fly to Howland Island, then Honolulu, and back to Oakland. So she had three legs of her journey left. However, she never made it to Howland Island. This is a small island in the middle of the Pacific Ocean and navigating to it is an extreme challenge. The last communication from Earhart was that she was running low on fuel.

That was the last anyone heard from her. The primary assumption has always been that she never found Howland Island, and that her plane ran out of fuel and crashed into the ocean. This happened in 1937. But people love mysteries and there has been endless speculation about what may have happened to her. Did she go off course and arrive at the Marshall Islands 1000 miles away? Was she captured by the Japanese (remember, this was right before WWII)? Every now and then a tidbit of suggestive evidence crops up, but always evaporates on close inspection. It’s all just wishful thinking and anomaly hunting.

Continue Reading »

Jan 30 2024

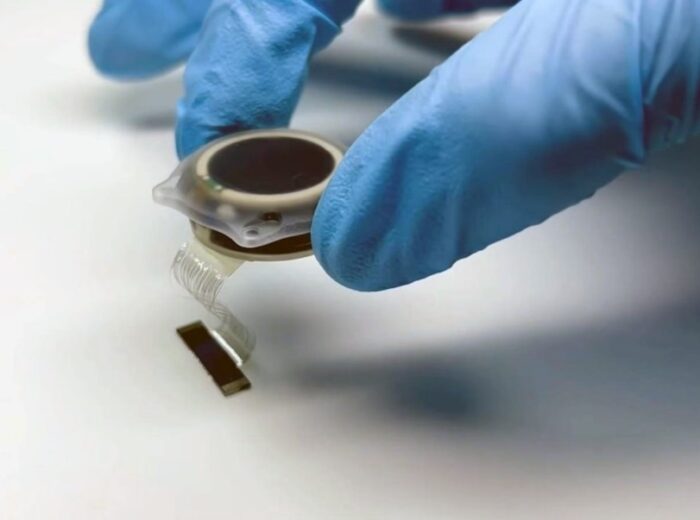

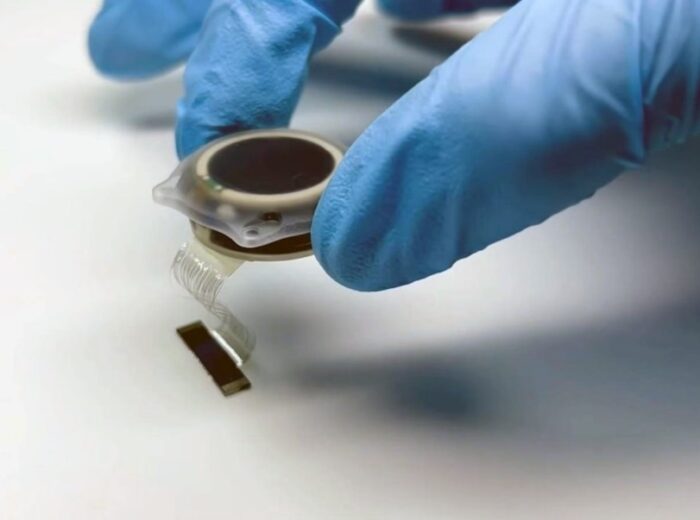

Elon Musk has announced that his company, Neuralink, has implanted their first wireless computer chip into a human. The chip, which they plan on calling Telepathy (not sure how I feel about that), connects with 64 thin hair-like electrode threads, is battery powered, and can be recharged remotely. This is exciting news, but of course needs to be put into context. First, let’s get the Musk thing out of the way.

Because this is Elon Musk, the achievement gets more attention than it probably deserves, but also more criticism. It gets wrapped up in the Musk debate – is he a genuine innovator, or just an exploiter and showman? I think the truth is a little bit of both. Yes, the technologies he is famous for advancing (EVs, reusable rockets, digging tunnels, and now brain-machine interface) all existed before him (at least potentially) and were advancing without him. But he did more than just gobble up existing companies or people and slap his brand on them (as his harshest critics claim). Especially with Tesla and SpaceX, he invested his own fortune and provided a specific vision which pushed these companies through to successful products, and very likely advanced their respective industries considerably.

What about Neuralink and BMI (brain-machine interface) technology? I think Musk’s impact in this industry is much less than with EVs and reusable rockets. But he is increasing the profile of the industry, providing funding for research and development, and perhaps increasing the competition. In the end I think Neuralink will have a more modest, but perhaps not negligible, impact on bringing BMI applications to the world. I think it will end up being a net positive, and anything that accelerates this technology is a good thing.

Continue Reading »

Dec 21 2023

This is not exactly a “best of” because I don’t know how that applies to science news, but here are what I consider to be the most impactful science news stories of 2023 (or at least the ones that caught my biased attention).

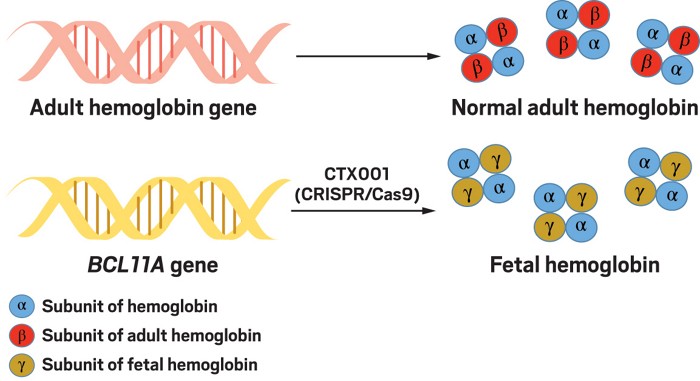

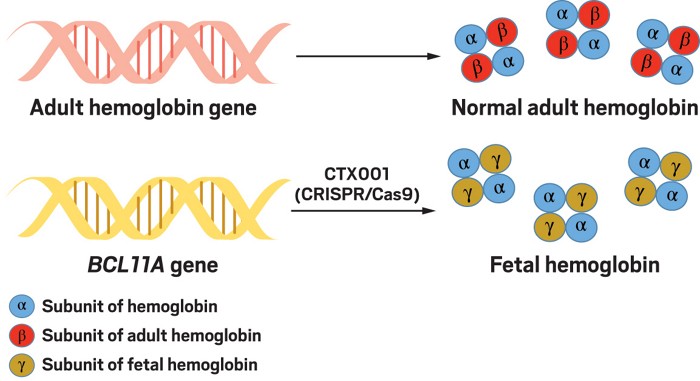

This was a big year for medical breakthroughs. We are seeing technologies that have been in the works for decades come to fruition with specific applications. The FDA recently approved a CRISPR treatment for sickle cell anemia. The UK already approved this treatment for sickle cell and beta thalassemia. This is the first CRISPR-based treatment approval. The technology itself is fascinating – I have been writing about CRISPR since it was developed. It’s a technology for making specific alterations to DNA at a specific target site. It can be used to permanently inactivate a gene, insert a new gene, or reversibly turn a gene off and then on again. Importantly, the technology is faster and cheaper than prior technologies. It is a powerful genetics research tool, and is a boon to genetic engineering. But since the beginning we have also speculated about its potential as a medical intervention, and now we have proof of concept.

The procedure is to take bone-marrow from the patient, then use CRISPR to silence a specific gene that turns off the production of fetal hemoglobin. The altered blood stem cells are then transplanted back into the patient. Both of these diseases, sickle cell and thalassemia, are genetic mutations of adult hemoglobin. The fetal hemoglobin is unaffected. By turning back on the production of fetal hemoglobin, this effectively reduces or even eliminates the negative effects of the mutations. Sickle cell patients do not go into crisis and thalassemia patients do not need constant blood transfusions.

This is an important milestone – we can control the CRISPR technique sufficiently that it is a safe and effective tool for treating genetically based diseases. This does not mean we can now cure all genetic diseases. There is still the challenge of getting the CRISPR to the right cells (using some vector). Bone-marrow based disease is low-hanging fruit because we can take the cells to the CRISPR. But still – there are a lot of potential disease targets – anything blood or bone marrow based. Also, any place in the body where we can inject CRISPR into a contained space, like the eye, is an easy target. Other targets will not be as easy, but that technology is advancing as well. This all opens up a new type of medical intervention, through precise genetic alteration. Every future story about this technology will likely refer back to 2023 as the year of the first approved CRISPR treatment.

Continue Reading »

Dec 11 2023

Not a crow.

One of the core tenets of scientific skepticism is what I call neuropsychological humility – the recognition that while the human brain is a powerful information processing machine, it also has many frailties. One of those frailties is perception – we do not perceive the world in a neutral or objective way. Our perception of the world is constructed from multiple sensory streams processed together and filtered through internal systems that include our memories, expectations, biases, assumptions and (critically) attention. In many ways, we see what we know, what we are looking for, and what we expect to see. Perhaps the most internet-famous example of this is the invisible gorilla, a dramatic example of inattentional blindness.

Far more subtle is what might be called cultural blindness – we can perceive differences that we already know exist or with which we are very familiar, but otherwise may miss differences as a background blur. On my personal intellectual journey, one dramatic example I often refer to is my perception before and after becoming a birder. For most of my life birds were something in the background I paid little attention to. My internal birding map consisted of a few local species and broad groups. I could recognize cardinals, blue jays, crows, pigeons, and mourning doves. Any raptor was a “hawk”. There were ducks and geese, and then there was – everything else. I would probably call any small bird a sparrow, if I thought to call it anything at all. I knew of other birds from nature shows, but they were not part of my world.

The birding learning curve was very steep, and completely changed my perception. What I called “crows” consisted not only of crows but ravens and at least two types of grackle. I can identify the field markings of several hawks and two vultures. I can tell the subtle differences between a downy and hairy woodpecker. At first I had difficulty telling a chickadee from a nuthatch; now the difference is obvious. I can even tell some sparrow species apart. My internal birding map is vastly different, and that affects how I perceive the world.

Continue Reading »

Oct 31 2023

It’s Halloween, so there are a lot of fluff pieces about ghosts and similar phenomena circulating in the media. There are some good skeptical pieces as well, which is always nice to see. For this piece I did not want to frame the headline as a question, which I think is gratuitous, especially when my regular readers know what answer I am going to give. The best current scientific evidence has a solid answer to this question – ghosts are not a real scientific phenomenon.

For most scientists the story pretty much ends there. Spending any more serious time on the issue is a waste, even an academic embarrassment. But for a scientific skeptic there are several real and interesting questions. Why do so many people believe in ghosts? What naturalistic phenomena are being mistaken for ghostly phenomena? What specific errors in critical thinking lead to the misinterpretation of experiences as evidence for ghosts? Is what ghost-hunters are doing science, and if not, why not?

The first question is mostly sociological. A recent survey finds that 41% of Americans believe in ghosts, and 20% believe they have had an encounter with a ghost. We know that there are some personality traits associated with belief in ghosts. Of the big five, openness to experience and sensation is the biggest predictor. Also, intuitive thinking style rather than analytical is associated with a greater belief in the paranormal in general, including ghosts.

The relationship between religious belief and paranormal belief, including ghosts, is complicated. About half of studies show the two go together, while the rest show that being religious reduces the chance of believing in the paranormal. It likely depends on the religion, the paranormal belief, and how questions are asked. Some religions preach that certain paranormal beliefs are evil, therefore creating a stigma against them.

Continue Reading »

Oct 16 2023

There are 33 billion chickens in the world, mostly domestic species raised for egg-laying or meat. They are a high efficiency source of high quality protein. It’s the kind of thing we need to do if we want to feed 8 billion people. Similarly we have planted 4.62 billion acres of cropland. About 75% of the food we consume comes from 12 plant species, and 5 animal species. But there is an unavoidable problem with growing so much biological material – we are not the only things that want to eat them.

This is an “if you build it, they will come” scenario. We are creating a food source for other organisms to eat and infect, which creates a lot of evolutionary pressure to do so. We are therefore locked in an evolutionary arms race against anything that would eat our lunch. And there is no easy way out of this. We have already had some epic failures, such as a fungus wiping out the global banana crop – yes, that already happened, a hundred years ago. And now it is happening again with the replacement banana. A virus almost wiped out the Hawaiian papaya industry, and citrus greening is threatening Florida’s citrus industry. The American chestnut essentially disappeared due to a fungus.

And now there is a threat to the world’s chickens. Last year millions were culled or died from the bird flu. As the avian flu virus evolves, it is quite possible that we will have a bird pandemic that could devastate a vital food source. Such viruses are also a potential source of zoonotic crossover to humans. Fighting this evolving threat requires that we use every tool we have. Best practices in terms of hygiene, maintaining biodiversity, and integrated pest management are all necessary. But they only mitigate the problem, not eliminate it. Vaccines are another option, and they will likely play an important role, but vaccines can be expensive and it’s difficult to administer 33 billion doses of chicken vaccines every year.

A recent study is a proof of concept for another approach – using modern gene editing tools to make chickens more resistant to infection. This approach saved the papaya industry, and brought back the American chestnut. It is also the best hope for crop bananas and citrus. Could it also stop the bird flu? H5N1 subtype clade 2.3.4.4b is an avian flu virus that is highly pathogenic, affects domestic and wild birds, and has caused numerous spillovers to mammals, including humans.

Continue Reading »

Oct 12 2023

From time to time the Earth gets hit by a wave of energetic particles from the sun – solar flares or even coronal mass ejections (CMEs). In 1859 a large CME hit Earth (known as the Carrington Event), shorting out telegraphs, brightening the sky, and causing aurora deep into equatorial latitudes. If such an event were to occur today experts are not exactly sure what would happen, but it could take out satellites and short out parts of electrical grids. Interestingly, we have a historical record of how often such events have occurred in the past, mostly from tree rings.

A recent study extends this data with an analysis of subfossil pine trees from the Southern French Alps. These are trees partially preserved in bogs or similar environments, not yet fossilized but on their way. Trees are incredibly valuable as a store of historical information because of their rings – tree rings are a record of their annual growth, and the thickness of each ring reflects environmental conditions that year. Trees also live a long time – the Scots Pines used in the study live for 150-300 years. You can therefore create a record of annual tree rings continuously back in time (dendrochronologically dated tree-ring series) by matching up overlapping tree rings from different trees. We have such records going back about 13,000 years.
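The idea of matching up overlapping rings can be sketched in a few lines of Python. This is a toy illustration under my own assumptions, not the method used in the study: the ring widths are made-up numbers, the function names are hypothetical, and real cross-dating works on detrended, standardized series with proper statistical checks. The sketch simply slides the younger tree's series along the older one and keeps the offset where the overlapping widths correlate best.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat series carries no pattern to match
    return cov / math.sqrt(var_x * var_y)


def best_offset(older, younger, min_overlap=4):
    """Find the index in `older` where `younger` most plausibly begins.

    Tries every offset that leaves at least `min_overlap` shared rings and
    returns (offset, correlation) for the best-correlated alignment.
    """
    best = None
    for offset in range(len(older) - min_overlap + 1):
        overlap = min(len(older) - offset, len(younger))
        if overlap < min_overlap:
            continue
        r = pearson(older[offset:offset + overlap], younger[:overlap])
        if best is None or r > best[1]:
            best = (offset, r)
    return best


# Made-up ring widths (mm); the younger tree's first four rings were
# constructed to repeat the older tree's last four.
older_tree = [1.2, 0.8, 1.5, 0.6, 1.1, 0.9, 1.4, 0.5]
younger_tree = [1.1, 0.9, 1.4, 0.5, 1.0, 1.3]

offset, r = best_offset(older_tree, younger_tree)
chronology_length = offset + len(younger_tree)
print(f"Younger tree starts at ring {offset} of the older tree (r = {r:.2f})")
print(f"Combined chronology spans {chronology_length} years")
```

Chaining many such overlaps, tree after tree, is what lets a continuous record reach far beyond the lifespan of any single tree.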

Tree rings also record another type of data – the ratio of carbon 14 to carbon 12. This is the basis of carbon 14 dating. The atmosphere has a certain ratio of C14 to C12, which is reflected in living things that incorporate carbon into their structures. When the living thing dies, the clock starts ticking, with C14 decaying into nitrogen 14 while the stable C12 remains, changing the ratio. One wrinkle for this method, however, is that the C14-C12 ratio in the atmosphere is not fixed; it can fluctuate from year to year. Essentially cosmic rays hit nitrogen (N14) atoms and convert them into carbon 14 in the upper atmosphere. This creates a steady supply of C14 in the atmosphere (which is why it has not all decayed away already).
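The "ticking clock" is just exponential decay, and the basic age calculation is short enough to sketch in Python. The half-life of roughly 5,730 years is the commonly cited value, not something stated in this post, and the function name is my own. Note that this naive version assumes the starting ratio was the same in every year, which is exactly the simplification that the fluctuations described above undermine.

```python
import math

# Commonly cited half-life of carbon 14, in years (an outside figure,
# not stated in the post).
C14_HALF_LIFE_YEARS = 5730


def radiocarbon_age(fraction_remaining: float) -> float:
    """Years elapsed, given the fraction of the original C14 still present.

    Assumes the sample started with the same C14 level as the reference,
    i.e. no correction for year-to-year atmospheric variation.
    """
    if not 0 < fraction_remaining <= 1:
        raise ValueError("fraction_remaining must be in (0, 1]")
    # Each halving of the remaining C14 corresponds to one half-life.
    return C14_HALF_LIFE_YEARS * math.log2(1 / fraction_remaining)


for fraction in (1.0, 0.5, 0.25):
    print(f"{fraction:.2f} of original C14 left -> {radiocarbon_age(fraction):.0f} years")
```

Tree rings with independently known calendar dates are what make it possible to correct this simple estimate for the real, fluctuating atmospheric level.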

Continue Reading »
