Aug 22 2019

AI and Scaffolding Networks

A recent commentary in Nature Communications echoes, I think, a key understanding of animal intelligence, and therefore provides an important lesson for artificial intelligence (AI). The author, Anthony Zador, extends what has been an important paradigm shift in our approach to AI.

Early concepts of AI, as reflected in science fiction at least (which I know does not necessarily track with actual developments in the industry), held that the ultimate goal was to develop a general AI that could master tasks from the top down through abstract understanding, as humans do. Actual developers of AI, however, quickly learned that this might not be the best approach, and in any case is decades away at least. I remember reading in the 1980s about approaching AI more from the ground up.

The first analogy I recall is that of walking – how do we program a robot to walk? We don’t need a human cortex to do this. Insects can walk. Also, much of the processing required to walk is in the deeper, more primitive parts of our brain, not the more complex cortex. So maybe we should create the technology for a robot to walk by starting with the most basic algorithms similar to those used by the simplest creatures, and then build up from there.

My memory, at least, is that this completely flipped my concept of how we were approaching AI. Don’t build a robot with general intelligence that can do anything and then teach it to walk. You don’t even build a single algorithm that can walk. You break walking down into its component parts, and then build algorithms that can master and combine each of those parts. This was reinforced by my later study of neuroscience. Yeah – that is exactly how our brains work. We have modules and networks that do very specific things, and they combine to produce more and more sophisticated behavior.

For example, we have one circuit whose only job is to sense our orientation with respect to gravity (vestibular function). If that circuit is broken, it produces a specific abnormality of walking. There are multiple specific neurological disorders of walking, all manifesting differently, and each representing a problem with one subsystem that has its own contribution to the overall task of walking.

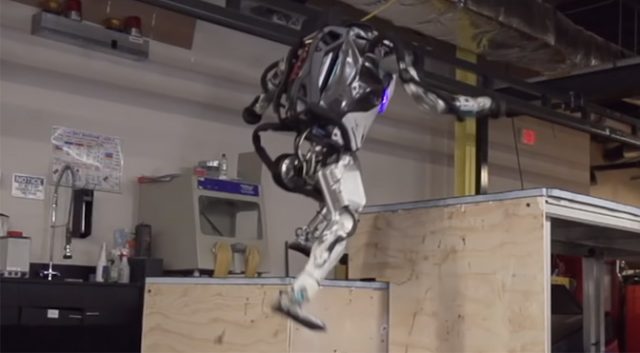

So now here we are 30+ years later and the benefits of this approach are really being felt. You have probably seen videos from Boston Dynamics of their various walking robots, two- and four-legged. Success is undeniable.

This approach has also worked for abstract tasks, like playing chess or Go. Add in a recursive algorithm for self-learning, and AI can master complex abstract tasks incredibly fast, beating even the best humans. These AIs are not self-aware. They are not general AI. They are mostly task-specific, although the underlying basic AI tech can be applied to many tasks. We also now have different AIs evaluate each other – one algorithm to do a task, another to evaluate the results and give feedback to the first, which then iterates and gives it another go.
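To make that last idea concrete, here is a toy sketch of a do-evaluate-iterate loop. It is not how any real chess or Go system works. The function names (`propose`, `evaluate`, `iterate`) and the task itself (nudging a list of numbers toward a target) are my own assumptions, chosen only because they fit in a few lines.

```python
import random

def evaluate(candidate, target):
    """Evaluator: score an attempt. Higher is better, 0 is a perfect match."""
    return -sum((c - t) ** 2 for c, t in zip(candidate, target))

def propose(current, rng, step=0.5):
    """Task algorithm: try a small random variation on the current attempt."""
    return [c + rng.uniform(-step, step) for c in current]

def iterate(target, rounds=2000, seed=0):
    """Repeat: propose, get feedback from the evaluator, keep only improvements."""
    rng = random.Random(seed)
    best = [0.0] * len(target)
    best_score = evaluate(best, target)
    history = [best_score]
    for _ in range(rounds):
        attempt = propose(best, rng)
        score = evaluate(attempt, target)
        if score > best_score:  # the evaluator's feedback decides what survives
            best, best_score = attempt, score
        history.append(best_score)
    return best, history

# Hypothetical target, used only for illustration.
best, history = iterate([3.0, -2.0, 1.0])
print("first score:", history[0], "last score:", history[-1])
```

Neither function knows anything about the other beyond the score that passes between them, which is the sense in which this is a division of labor rather than one general-purpose program.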

This brings us to Zador’s paper. What is he adding to this conversation? He writes:

Here we argue that most animal behavior is not the result of clever learning algorithms—supervised or unsupervised—but is encoded in the genome. Specifically, animals are born with highly structured brain connectivity, which enables them to learn very rapidly. Because the wiring diagram is far too complex to be specified explicitly in the genome, it must be compressed through a “genomic bottleneck”. The genomic bottleneck suggests a path toward ANNs capable of rapid learning.

So again he is saying we should use animals as a template for our AI, but in a way that adds a new layer to our approach. He also argues that we should be applying this approach not just to abstract tasks, like playing chess, but also to interacting with the physical world. Sure, but I think Boston Dynamics would say that they are already doing that. So what is the new bit?

There are two concepts here. One is that animals are not born with brains that are blank slates. Baby mice and baby squirrels are not born with the same brain, which then learns to behave like either a mouse or a squirrel. A squirrel is born with a squirrel brain. It is pre-wired for certain tasks, like living in the trees. This much is old news. Actually all of his points are old news, but I think that’s the point. These are already understood by neuroscientists, but he is emphasizing that we can apply this to AI.

Further, what does it mean to be pre-wired for tree climbing? A squirrel brain has much more information even at birth than the genes that code for it. The genes, in a way, are a compressed version of this information. What the genes contain is not a schematic of the final architecture of the brain, but just the process by which the brain will develop. The development process itself then adds the extra information.
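A rough way to picture a process rather than a schematic: in the sketch below, a two-number "genome" is handed to a simple growth rule, which then fills in a wiring table with thousands of entries. This is only an illustration of compact specification, not Zador's model. The rule (connect each neuron to its nearest neighbors on a ring) and the names `grow_wiring`, `n_neurons`, and `radius` are assumptions of mine.

```python
def grow_wiring(n_neurons, radius):
    """Developmental rule: link each neuron to neighbors within `radius` on a ring."""
    wiring = [[0] * n_neurons for _ in range(n_neurons)]
    for i in range(n_neurons):
        for offset in range(1, radius + 1):
            j = (i + offset) % n_neurons
            wiring[i][j] = 1  # connections are two-way, so mark both directions
            wiring[j][i] = 1
    return wiring

# The entire "genome" is two numbers (hypothetical values).
genome = {"n_neurons": 100, "radius": 3}
wiring = grow_wiring(**genome)

n_entries = sum(len(row) for row in wiring)           # size of the full wiring table
n_connections = sum(sum(row) for row in wiring) // 2  # each link is stored twice

print(len(genome), "genome values ->", n_entries, "table entries,",
      n_connections, "connections")
```

A wiring table this regular is far simpler than a real brain, of course. The point is only that the rule is much shorter than the thing it builds.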

This is exactly like how bees build a complex nest. No bee has the ultimate design of the nest in its tiny brain. They just have the algorithm of what simple steps to take in building the nest, and the structure of the nest emerges from this process. The nest is an emergent property – just like brains are an emergent property of the development process encoded in genes.

But there’s more. This process does not stop at birth, but continues with learning. Because what the squirrel brain contains is not the programming for climbing trees but the neurological scaffolding for learning how to climb trees. So essentially what he is saying is that baby squirrels are not necessarily better than baby mice at climbing trees, but they are better at learning how to climb trees.
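Here is a minimal sketch of that distinction, under assumptions that are entirely mine. Two learners start with all weights at zero, so neither is any better "at birth." They run the same learning procedure on the same task (predicting x squared), and the only difference is that one comes with a built-in feature suited to the task. That extra feature stands in for the scaffolding.

```python
def mse(weights, features, targets):
    """Mean squared error of a linear model on the given data."""
    total = 0.0
    for f, y in zip(features, targets):
        pred = sum(w * v for w, v in zip(weights, f))
        total += (pred - y) ** 2
    return total / len(targets)

def train(features, targets, steps=200, lr=0.05):
    """Plain gradient descent from all-zero weights; returns weights and error curve."""
    weights = [0.0] * len(features[0])
    errors = [mse(weights, features, targets)]
    n = len(targets)
    for _ in range(steps):
        grads = [0.0] * len(weights)
        for f, y in zip(features, targets):
            pred = sum(w * v for w, v in zip(weights, f))
            for k, v in enumerate(f):
                grads[k] += 2 * (pred - y) * v / n
        weights = [w - lr * g for w, g in zip(weights, grads)]
        errors.append(mse(weights, features, targets))
    return weights, errors

xs = [i / 4 for i in range(-8, 9)]  # inputs from -2 to 2
ys = [x * x for x in xs]            # the task: predict x squared

generic = [[x] for x in xs]            # no task-specific structure
scaffolded = [[x, x * x] for x in xs]  # hypothetical built-in feature for this task

_, generic_errors = train(generic, ys)
_, scaffolded_errors = train(scaffolded, ys)

print("error before learning:", generic_errors[0], scaffolded_errors[0])
print("error after learning: ", generic_errors[-1], scaffolded_errors[-1])
```

Both learners begin with the same error. Only the scaffolded one improves, because it alone has the structure the task requires.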

To give a human analogy – human babies cannot speak better than chimp babies. But human babies have the neurological scaffolding to learn language, and that is what they are massively better at than chimps – learning how to speak.

There are, of course, some purely innate behaviors in animals. Zador points out that for each behavior there is a tradeoff between innate wiring and learning. Innate behaviors hit the ground running, and therefore have the advantage of time. But learned behaviors may achieve a better final ability, and therefore have an advantage in overall effectiveness. For each behavior there is some mixture of innate plus learned elements, and he acknowledges there isn’t always a sharp demarcation between the two. Some behaviors, like running in some animals, are there from the beginning. Others, like knowing how to find food, may need to be mostly learned. Learning can also be divided into supervised (taught by a parent) and unsupervised (learned on its own by doing).

Zador is saying that we should apply all this to our AI. Build AI scaffolding that is designed to learn how to do a specific task. We are already doing this with abstract AI tasks, like playing chess. He is arguing that we should also apply this approach to interacting with the physical world.

Again, in the end I don’t think there is any new concept here. Zador is mostly giving a good overview of what is known from neuroscience and pointing out how it can be applied across the board to AI, acknowledging that to some extent it already is. I don’t know how much it already is, and I’m sure the people at Boston Dynamics have some opinion on this topic. I would like to hear from AI experts to see how they react to Zador’s commentary. My sense is it’s part of a conversation that is already happening, but it may be helpful to pull it all together in one place.

It is a good opportunity to point out yet again that there is a very interesting synergy happening between neuroscience and AI. We are learning how to build better AI by taking clues from how brains work, and computer technology is, in turn, helping advance neuroscience. I do think the end stage of this process will be the development of an artificial human brain, or at least human-level general AI. I am curious as to how similar and dissimilar the final result will be, and perhaps there will be many versions.

There will be the push to develop a virtual or hard-wired human brain just for neuroscience research. But this will get us into ethically murky territory. For ethical reasons alone, AI tech may continue to advance in the direction of task-specific learning, rather than general AI.