Oct 18 2022

AI Snake Oil

Humanity has an uncanny ability to turn any new potential boon into a con. The promise of stem cell technology quickly spawned fraudulent stem-cell clinics to exploit the desperate. There is snake oil based on lasers, holograms, and radio waves. Any new tech or scientific discovery becomes a marketing scam, going back to electromagnetism and continuing today with “nanotechnology”. There is some indication that artificial intelligence (AI) will be no exception.

I am a big fan of AI technology, and clearly it has reached a turning point where the potential applications are exploding. The basic algorithms haven’t changed, but with faster computers, an internet full of training data, and AI scientists finding more ways to cleverly leverage the technology, we are seeing more and more amazing applications, from self-driving cars to AI art programs. AI is likely to be increasingly embedded in everything we do.

But with great potential comes great hype. Also, for many people, AI is a black box of science and technology they don’t understand. It may as well be magic. And that is a recipe for exploitation. A recent BBC article, for example, highlights the risks of relying on AI in evaluating job applicants. It’s a great example of what is likely to become a far larger problem.

I think the core issue is that many people, those for whom AI is mostly a black box, risk attributing false authority to AI and treating it like a magic wand. Companies can therefore offer AI services that are essentially pure pseudoscience, but since it involves AI, people will buy it. In the case of hiring practices, AI is being applied to inherently bogus analysis. That doesn’t change the nature of the analysis; it just gives it a patina of unimpeachable technology, which makes it more dangerous.

Here is an example: technical analysis of the stock market. For a ridiculously quick summary, fundamental analysis looks at the intrinsic value of a company (assets, market penetration, brand, etc.) and compares that value to its stock price to determine if it’s a good investment. Technical analysis ignores all this and simply looks at statistical trends in trading patterns and price movements. It falsely assumes that past performance predicts future trends, and is similar to numerology or astrology for the stock market. (Yes, there is some nuance to all this I am glossing over, but this is a fair summary.) Let’s say someone develops an AI algorithm (one probably already exists) to do fast and powerful technical analysis of the stock market. This will not change the inherent nature of technical analysis; it will just make it seem high tech and more compelling.

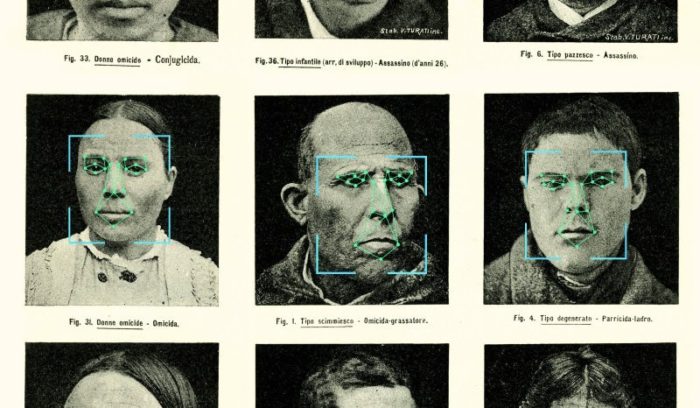

The same is true of hiring practices. Using a picture of someone to generate a personality profile is pseudoscience. Using AI to accomplish this task does not make it any less pseudoscience. Corporations love to find the “one easy trick” to accomplish some task. For large corporations, even small efficiency gains or cost savings can translate into millions. They also have lots of money to invest in such schemes, so they are preyed upon by every flavor of charlatan, many peddling this personality-profile nonsense. The sheen of AI is simply going to supercharge this snake oil.

As the BBC reports, the data we have so far indicate that using AI algorithms does not magically strip away bias from applicant selection; it just locks existing biases in place. Gender, cultural, and racial cues are baked into the information, and carry through an AI analysis. AI can also introduce new biases, or simply get distracted by irrelevant information (such as the brightness of the picture used in the analysis).
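For readers who want to see how a bias gets "locked in," here is a deliberately tiny Python sketch. Everything in it is made up for illustration: the `history` records, the `proxy` flag (standing in for any cue that correlates with gender, culture, or race), and the skill cutoff of 6. It is not how any real hiring product works; it only shows that a system trained on biased past decisions will reproduce them.

```python
from collections import defaultdict

# Hypothetical past hiring records: (skill_score, proxy, hired).
# "proxy" is an invented stand-in for any cue correlated with group membership.
# The past decisions are biased: skilled candidates with proxy=True
# were hired more often than equally skilled candidates with proxy=False.
history = [
    (8, True, True), (8, False, False),
    (6, True, True), (6, False, False),
    (9, True, True), (9, False, True),
    (4, True, False), (4, False, False),
]

def features(skill, proxy):
    """Reduce a candidate to (is_skilled, proxy); the cutoff of 6 is arbitrary."""
    return (skill >= 6, proxy)

def train(records):
    """Learn the historical hire rate for each feature combination."""
    counts = defaultdict(lambda: [0, 0])  # features -> [hired, total]
    for skill, proxy, hired in records:
        key = features(skill, proxy)
        counts[key][0] += int(hired)
        counts[key][1] += 1
    return {key: hired / total for key, (hired, total) in counts.items()}

def predict(model, skill, proxy):
    """Recommend hiring if similar past candidates were mostly hired."""
    return model.get(features(skill, proxy), 0.0) >= 0.5

model = train(history)

# Two candidates with the same skill score, differing only in the proxy cue.
recommend_a = predict(model, 8, True)
recommend_b = predict(model, 8, False)
```

In this toy, `recommend_a` and `recommend_b` differ even though the two candidates have the same skill score. Nothing in the code "intends" to discriminate; it simply learned the pattern that was already in the records.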

One might argue that these problems can all potentially be fixed by better AI, and that’s fair. I think that is probably true, for some issues. The point is that AI is just a powerful tool. How the tool is used matters. If AI is used to do handwriting analysis to determine an applicant’s personality and their potential as an employee, there is no fix for that. Handwriting analysis is inherently pseudoscience, and using high tech to perform the analysis doesn’t change that. Better AI can be better at filtering out bias, if it is specifically trained to identify and filter out sources of bias. But this won’t necessarily happen automatically, or simply by making AI applications more powerful.

There is a tendency to treat technology like pixie dust: just sprinkle it on and problems magically disappear. AI is positioned to become the greatest example of this phenomenon, and it’s already started. This is especially true because AI has the word “intelligence” right in the name, which encourages people to think of it as an agent rather than a tool, and to assume that it will therefore fix problems on its own. But AI is not an agent (not the narrow AI we have now). It is a powerful tool that can be used for good or bad.