Sep 29, 2025

We are all familiar with the notion of “being on autopilot” – the tendency to initiate and even execute behaviors out of pure habit rather than conscious decision-making. When I shower in the morning I go through roughly the same sequence of behaviors while my mind is mostly elsewhere. If I am driving to a familiar location the word “autopilot” seems especially apt, as I can execute the drive with little thought. Sometimes this leads me to take my most common route out of habit even when I intend to go somewhere else. You can, of course, override the habit through conscious effort.

That last word – effort – is likely key. Psychologists have found that humans tend to maximize efficiency, which is another way of saying that we prioritize laziness. Being lazy sounds like a vice, but evolutionarily it is probably about not wasting energy. Animals, for example, tend to be only as active as is necessary for survival, and we tend to see their inactivity not as a vice but as conserving precious energy.

We evolved to conserve mental energy as well. We do not devote all of our conscious thought and attention to everyday activities, like walking. Some activities (breathing, walking) are so critical that there are specialized circuits in the brain for executing them. Other activities are voluntary or situational, like shooting baskets, but may still be important to us, so there is a neurological mechanism for learning these behaviors. The more we do them, the more subconscious and automatic they become. Sometimes we call this “muscle memory,” but it’s really mostly in the brain, particularly the cerebellum. This is critical for mental efficiency. It also allows us to perform a common task that we have “automated” while using our conscious brain power for something more important.

Continue Reading »

Sep 23, 2025

Yesterday, Trump and RFK Jr held a press conference that some are characterizing as the absolute worst firehose of medical misinformation to come from the White House in American history. I think that is fair. This was the presser we knew was coming, and that many of us were dreading. It was worse than I anticipated.

I suspect much of this stems from RFK’s earlier promise that within six months he would find the cause of autism so that we can start eliminating these exposures – and six months brings us to September. This was an absurd claim, given that there has been, and continues to be, extensive international research into autism for decades, and there was absolutely no reason to expect a major breakthrough within those six months. Those of us following RFK’s career knew what he meant – he believes he already knows the causes, that they are environmental (hence “exposures”), and that they include vaccines.

So Kennedy had to gin up some big autism announcement this month, and there is always plenty of preliminary or inconclusive research you can cherry-pick to support a preexisting narrative. It was basically leaked that his target was going to be an alleged link between Tylenol (acetaminophen) use in pregnancy and autism. This gave us an opportunity to pre-debunk the claim, which many did. Just read my linked article in SBM to review the evidence. The bottom line: there is no established cause and effect, and there are two really good reasons to doubt one exists. There is no dose-response curve, and when you control for genetics, any association vanishes.

Sep 22, 2025

Quantum computers are a significant challenge for science communicators for a few reasons. One, of course, is that they involve quantum mechanics, which is not intuitive. It’s also difficult to understand why they represent a potential benefit for computing. But even setting those technical challenges aside, I find it tricky to strike the optimal balance of optimism and skepticism. How likely are practical quantum computers, anyway? How much of what we hear is just hype? (There is a similar challenge with discussing AI.)

So I want to discuss what sounds to me like a genuine breakthrough in quantum computing. But I have to caveat this by saying that only true experts really know how much closer this brings us to large-scale practical quantum computers, and even they are probably not sure. There are still too many unknowns. But the recent advance is interesting in any case, and I hope it’s as good as it sounds.

For background, quantum computers differ from classical computers in that they store information and do calculations using quantum effects. A classical computer stores information as bits, binary pieces of data that are either 1 or 0. A bit can be encoded in any physical system that has two states, can switch between those states, and can be connected with other such systems in a circuit. A quantum computer, by contrast, uses qubits, which can exist in a superposition of 1 and 0 and can be entangled with other qubits. This is the messy quantum mechanics I referred to.
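For readers who like something concrete, here is a minimal Python sketch of the bit/qubit distinction. It is my own toy illustration, not how any real quantum computer or the research discussed here works. It assumes the standard textbook picture: a qubit's state is a list of complex amplitudes, and the probability of each measurement outcome is the squared magnitude of its amplitude. The function names (`normalize`, `probabilities`, `measure`) are hypothetical, chosen for this example.

```python
import math
import random


def normalize(amplitudes):
    """Scale amplitudes so their squared magnitudes sum to 1."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in amplitudes))
    return [a / norm for a in amplitudes]


def probabilities(state):
    """Born rule: the chance of each outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]


def measure(state, rng=random):
    """Sample one outcome and return it as a bit string, e.g. '0' or '11'."""
    n_qubits = int(math.log2(len(state)))
    index = rng.choices(range(len(state)), weights=probabilities(state))[0]
    return format(index, f"0{n_qubits}b")


# A classical bit is simply 0 or 1. A single qubit in an equal superposition
# has an amplitude for both |0> and |1> at once.
plus = normalize([1, 1])

# Two entangled qubits in the Bell state (|00> + |11>)/sqrt(2).
# Amplitudes are listed for the outcomes 00, 01, 10, 11.
bell = normalize([1, 0, 0, 1])

if __name__ == "__main__":
    print(probabilities(plus))                 # roughly [0.5, 0.5]
    print([measure(bell) for _ in range(10)])  # only '00' or '11' appear
```

Measuring the entangled pair never yields `01` or `10`: the two qubits always agree, even though each one on its own is a 50/50 coin flip. Note that a classical simulation like this needs 2^n amplitudes for n qubits. That exponential cost is one way to see why hardware that manipulates qubits directly could, in principle, be useful.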

Sep 15, 2025

This is not really anything new, but it is taking on a new scope. The WSJ recently wrote about “The Rise of ‘Conspiracy Physics’” (hat tip to “Quasiparticular” for pointing to this in the topic suggestions), which discusses the popularity of social media influencers who claim there is a vast conspiracy among academic physicists. Back in the before time (before the World Wide Web), if you were a crank – someone who thinks they understand science better than they do and that they have revolutionized science without ever checking their ideas with actual experts – you would likely mail your manifesto to random academics, hoping to get their attention. The academics would almost universally take the hundreds of pages of mostly nonsense and place them in the circular file, unread. I myself have received several of these (although I usually did read them, at least in part, for skeptical fodder).

With the web, cranks had another outlet. They could post their manifesto on a homemade web page and try to get attention there. The classic example of this was the “Time Cube” – the site is now inactive, but you can see a capture on the Wayback Machine. This site came to typify the format of such pages: a long vertical scroll, an almost unreadable color scheme, and text filled with boasting about how brilliant the creator is and claims of a conspiracy of silence among scientists.

With Web 2.0 and social media, the cranks adapted, and they have continued to adapt as social media and society evolve. Today, as the WSJ article points out, there is a wave of anti-establishment sentiment, and the cranks are riding it. If you read the comments on the WSJ article you will see evidence of some of the contributing factors. There is, for example, a lot of “blame the victim” sentiment – blaming physicists, or scientists, academics, and experts in general. They did not do a good enough job of explaining their field to the public. They ignored the cranks and let them flourish. They responded to the cranks and gave them attention. They are too closed to fringe ideas that challenge their authority.

Sep 8, 2025

It is becoming increasingly clear, in my opinion, that we need to further shift from an overall economic system based on a linear model of extraction-manufacture-use-waste to a more circular model in which as much waste as possible becomes feedstock for another manufacturing process. It also seems clear, after reading about such things for a long time, that economics ultimately drives such decisions. If the one-way road to waste is the cheapest pathway, that is the path industry will take. Unfortunately, this model has historically led to massive pollution, growing waste, and a changing climate. How do we switch to an economically viable circular economy that minimizes waste and environmental impact without lowering our standard of living? That is always the $64,000 question.

Here are two recent possibilities I came across. They have nothing to do with each other, but both represent possible ways to think differently about our priorities. The first has to do with mineral extraction. The way we currently develop mines and refine metals is driven entirely by economics. The minimum percentage of a metal in ore that is deemed worth refining depends on the value of that metal. Precious metals like gold may be refined from ore containing as little as 0.001% gold. Copper ore typically contains about 0.6% copper. High-grade iron ore has the highest percentage, at about 50%. Any mineral present at too low a concentration to be economically viable is considered a by-product and simply becomes part of the ore waste.
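To get a feel for what those grades mean for waste, here is a quick back-of-the-envelope calculation in Python using the three figures above. It is a simplification: it assumes 100% of the target metal is recovered and counts everything else in the ore as left over. Real recovery rates are lower, so the true ore requirements would be somewhat higher. The helper names are made up for this illustration.

```python
# Ore grades quoted in the text, as percent of target metal by mass.
ORE_GRADES_PERCENT = {
    "gold": 0.001,
    "copper": 0.6,
    "high-grade iron": 50.0,
}


def ore_per_kg_metal(grade_percent: float) -> float:
    """Kilograms of ore containing 1 kg of metal (assumes 100% recovery)."""
    return 100.0 / grade_percent


def waste_per_kg_metal(grade_percent: float) -> float:
    """Kilograms of leftover material per 1 kg of metal recovered."""
    return ore_per_kg_metal(grade_percent) - 1.0


if __name__ == "__main__":
    for metal, grade in ORE_GRADES_PERCENT.items():
        print(f"{metal:>16}: {ore_per_kg_metal(grade):>10,.1f} kg ore, "
              f"{waste_per_kg_metal(grade):>10,.1f} kg left over per kg metal")
```

Under these assumptions, one kilogram of gold at 0.001% means processing about 100 tonnes of ore. One kilogram of copper takes roughly 167 kg, and one kilogram of high-grade iron about 2 kg.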

The industry has largely evolved to pick the low-hanging fruit – find high-grade ores for specific metals, perhaps recover some high-value, lower-concentration metals, and treat the rest as waste. To meet growing needs, new mines are opened. Right now the US has 75 “hard rock” mines in operation. Opening a new mine, assuming a site with high-grade ore is identified, takes 18 years on average and costs up to a billion dollars. Mining waste also has to be managed. This is not just rocks that can be dumped anywhere; ore is often crushed into a powder to be refined. Properly disposing of the waste (which is a complex issue – see here) can also be costly and have environmental impact. Further, as we deplete high-grade ore, new mines often go after ever lower-grade ore, which produces more waste.

Sep 4, 2025

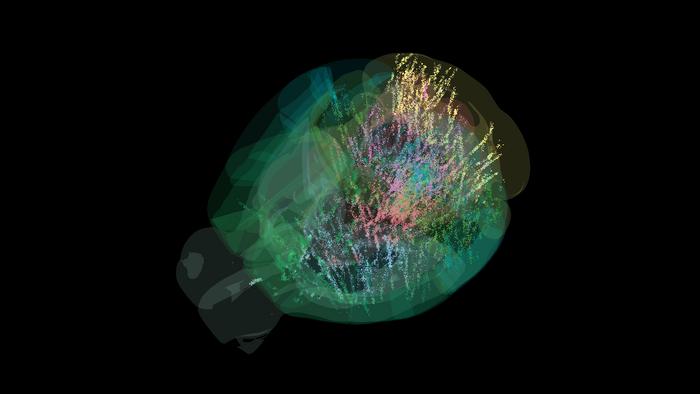

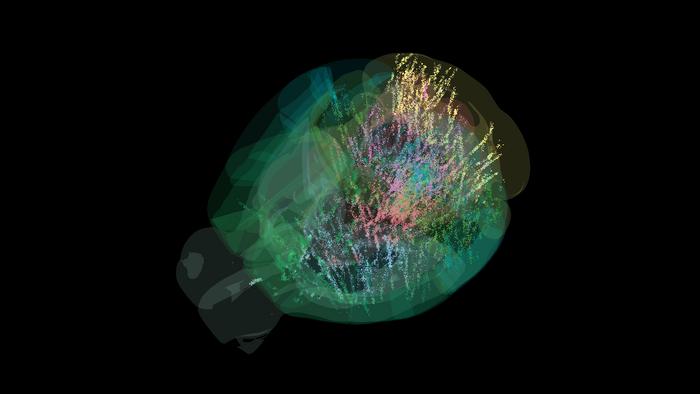

Researchers have just presented the results of a collaboration among 22 neuroscience labs mapping the activity of the mouse brain down to the individual cell. The goal was to see brain activity during decision-making. Here is a summary of their findings:

“Representations of visual stimuli transiently appeared in classical visual areas after stimulus onset and then spread to ramp-like activity in a collection of midbrain and hindbrain regions that also encoded choices. Neural responses correlated with impending motor action almost everywhere in the brain. Responses to reward delivery and consumption were also widespread. This publicly available dataset represents a resource for understanding how computations distributed across and within brain areas drive behaviour.”

Essentially, activity correlating with a specific decision-making task was more widely distributed in the mouse brain than researchers had previously suspected. This raises the key question: how does such widely distributed brain activity lead to coherent behavior? The entire dataset is now publicly available, so other researchers can access it to ask further research questions. Here is the specific behavior they studied:

“Mice sat in front of a screen that intermittently displayed a black-and-white striped circle for a brief amount of time on either the left or right side. A mouse could earn a sip of sugar water if they quickly moved the circle toward the center of the screen by operating a tiny steering wheel in the same direction, often doing so within one second.”

Further, the mice learned the task and, based on their past experience, were able to guess which side they needed to steer toward even when the circle was very dim. This enabled the researchers to study anticipation and planning. They were also able to vary specific task details to see how each change affected brain function. And they recorded the activity of single neurons to see how that activity related to the specific tasks.

Sep 2, 2025

The World Wide Web has proven to be a transformative communication technology (we are using it right now). At the same time, it has had some rather negative unforeseen consequences. Significantly lowering the threshold for establishing a communications outlet has democratized content creation and given users unprecedented access to information from around the world. But it has also lowered the threshold for unscrupulous agents, allowing a flood of misinformation, disinformation, low-quality information, spam, and all sorts of cons.

One area where this has perhaps been especially destructive is scientific publishing. Here we see a classic example of the trade-off dilemma between editorial quality and open access. Scientific publishing is one area where it is easy to see the need for quality control. Science is a collective endeavor where all research is building on prior research. Scientists cite each other’s work, include the work of others in systematic reviews, and use the collective research to make many important decisions – about funding, their own research, investment in technology, and regulations.

When this collective body of scientific research becomes contaminated with either fraudulent or low-quality research, it gums up the whole system. It creates massive inefficiency and adversely affects decision-making. You certainly wouldn’t want your doctor making treatment recommendations based on fraudulent or poor-quality research. This is why there is a system in place to evaluate research quality – from funding organizations to universities, journal editors, peer reviewers, and the scientific community at large. But this process can have its own biases, and might inhibit legitimate but controversial research. A journal editor might deem research to be of low quality partly because its conclusions conflict with the editor’s own research or scientific conclusions.