Sep 30, 2024

I can’t resist a good science story involving technology that we can possibly use to stabilize our climate in the face of anthropogenic global warming. This one is a fun story and an interesting, and potentially useful, idea. As we map out potential carbon pathways into the future, focusing on the rest of this century, it is pretty clear that it is going to be extremely difficult to completely decarbonize our civilization. This means we can only slow down, but not stop or reverse global warming. Once carbon is released into the ecosystem, it will remain there for hundreds or even thousands of years. So waiting for natural processes isn’t a great solution.

What we could really use is a way to remove CO2 that is already in the atmosphere (or in seawater – another huge reservoir), cost-effectively and at scale, to compensate for whatever carbon release we cannot eliminate from industry, and even to reverse some of the CO2 buildup. This is often referred to as carbon capture and sequestration. There is a lot of research in this area, but we do not currently have a technology that fits the bill. Carbon capture is small scale and expensive. The most useful methods are chemical carbon capture done at power plants, to reduce some of the carbon released.

There is, however, a “technology” that cheaply and automatically captures carbon from the air and binds it up in solid form – trees. This is why there is much discussion of planting trees as a climate change mitigation strategy. Trees, however, eventually give up their captured carbon back into the atmosphere. So at best they are a finite carbon reservoir. A 2019 study found that if we restored global forests by planting half a trillion trees, that would capture about 20 years’ worth of CO2 at the current rate of release, or about half of all the CO2 released since 1960 (at least as of 2019). But once those trees matured we would reach a new steady state and further sequestering would stop. This is at least better than continuing to cut down forests and reducing their store of carbon. Tree planting can still be a useful strategy to help buy time as we further decarbonize technology.

Continue Reading »

Sep 26, 2024

Of every world known to humans outside the Earth, Mars is likely the most habitable. We have not found any genuinely Earth-like exoplanets. They are almost sure to exist, but we just haven’t found any yet. The closest so far is Kepler-452b, which is a super-Earth, specifically 60% larger than Earth. It is potentially in the habitable zone, but we don’t know what the surface conditions are like. Within our own solar system, Mars is by far more habitable for humans than any other world.

And still, that’s not very habitable. Its surface gravity is 38% that of Earth, it has no global magnetic field to protect against radiation, and its surface temperature ranges from -225°F (-153°C) to 70°F (20°C), with a median temperature of -85°F (-65°C). But things might have been different, and they were in the past. Once upon a time Mars had a more substantial atmosphere – today its atmosphere is less than 1% as dense as Earth’s. That atmosphere was not breathable, but contained CO2 which warmed the planet, allowing for there to be liquid water on the surface. A human could likely walk on the surface of Mars 3 billion years ago with just a face mask and oxygen tank. But then the atmosphere mostly went away, leaving Mars the dry barren world we see today. What happened?

It’s likely that the primary factor was the lack of a global magnetic field, like we have on Earth. Earth’s magnetic field is like a protective shield against the solar wind, which is made of charged particles, so they are mostly diverted away from the Earth or drawn to the magnetic poles. On Mars the solar wind did not encounter a magnetic field, and it slowly stripped away the atmosphere. If we were somehow able to reconstitute a thick atmosphere on Mars, it too would slowly be stripped away, although that would take thousands of years to be significant, and perhaps millions of years in total.

Continue Reading »

Sep 24, 2024

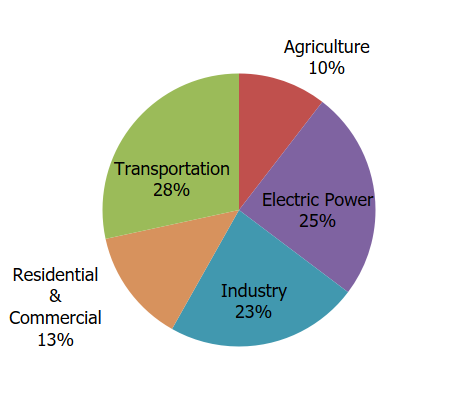

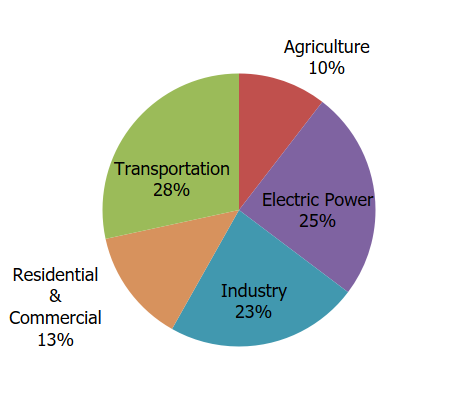

When we talk about reducing carbon release in order to slow down and hopefully stop anthropogenic global warming, much of the focus is on the energy and transportation sectors. There is a good reason for this – the energy sector is responsible for 25% of greenhouse gas (GHG) emissions, while the transportation sector is responsible for 28% (if you separate out energy production and do not include it in the end-user category). But that is just over half of GHG emissions. We can’t ignore the other half. Agriculture is responsible for 10% of GHG emissions, while industry is responsible for 23%, and residential and commercial activity 13%. Further, the transportation sector has many components, not just cars and trucks. It includes mass transit, rail, and aviation.
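As a quick sanity check on those figures, here is a small Python tally of the percentages quoted above. The dictionary keys are just labels chosen for this sketch. The listed shares add up to 99%, presumably because the source figures are rounded.

```python
# Sector shares of GHG emissions, in percent, as quoted in the paragraph above.
# The key names are labels picked for this sketch, not official category names.
shares = {
    "energy": 25,
    "transportation": 28,
    "agriculture": 10,
    "industry": 23,
    "residential_commercial": 13,
}

focus_sectors = ("energy", "transportation")

# Combined share of the two sectors that get most of the attention.
focus = sum(shares[name] for name in focus_sectors)

# Combined share of everything else.
rest = sum(value for name, value in shares.items() if name not in focus_sectors)

total = sum(shares.values())

print(f"energy + transportation: {focus}%")
print(f"all other sectors:       {rest}%")
print(f"total of listed shares:  {total}%")
```

The split comes out to 53% versus 46%, which is where "just over half" comes from.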

Any plan to deeply decarbonize our civilization must consider all sectors. We won’t get anywhere near net zero with just green energy and electric cars. It is tempting to focus on energy and cars because at least there we know exactly what to do, and we are, in fact, doing it. Most of the disagreement is about the optimal path to take and what the optimal mix of green energy options would be in different locations. For electric vehicles the discussion is mostly about how to make the transition happen faster – do we focus on subsidies, infrastructure, incentives, or mandates?

Industry is a different situation, and has been a tough nut to crack, although we are making progress. There are many GHG intensive processes in industry (like steel and concrete), and each requires different solutions and difficult transitions. Also, the solution often involves electrifying some aspect of industry, which works only if the energy sector is green, and will increase the demand for clean energy. Conservative estimates are that the energy sector will increase by 50% by 2050, but if we are successful in electrifying transportation and industry (not to mention all those data centers for AI applications) this estimate may be way off. This is yet another reason why we need an all-of-the-above approach to green energy.

Continue Reading »

Sep 19, 2024

On the SGU we recently talked about aphantasia, the condition in which some people have a decreased or entirely absent ability to imagine things. The term was coined recently, in 2015, by neurologist Adam Zeman, who described the condition of “congenital aphantasia” as being without mental imagery. After we discussed it on the show we received numerous e-mails from people with the condition, many of whom were unaware that they were different from most other people. Here is one recent example:

“Your segment on aphantasia really struck a chord with me. At 49, I discovered that I have total multisensory aphantasia and Severely Deficient Autobiographical Memory (SDAM). It’s been a fascinating and eye-opening experience delving into the unique way my brain processes information.

Since making this discovery, I’ve been on a wild ride of self-exploration, and it’s been incredible. I’ve had conversations with artists, musicians, educators, and many others about how my experience differs from theirs, and it has been so enlightening.

I’ve learned to appreciate living in the moment because that’s where I thrive. It’s been a life-changing journey, and I’m incredibly grateful for the impact you’ve had on me.”

Perhaps more interesting than the condition itself, and what I want to talk about today, is that the e-mailer was entirely unaware that most of the rest of humanity have a very different experience of their own existence. This makes sense when you think about it – how would they know? How can you know the subjective experience happening inside someone else’s brain? We tend to assume that other people’s brains function similarly to our own, and therefore their experience must be similar. This is partly a reasonable assumption, and partly projection. We do this psychologically as well. When we speculate about other people’s motivations, we generally are just projecting our own motivations onto them.

Projecting our neurological experience, however, is a little different. What the aphantasia experience demonstrates is a couple of things, beginning with the fact that whatever is normal for you is normal. We don’t know, for example, if we have a deficit because we cannot detect what is missing. We can only really know by sharing other people’s experiences.

Continue Reading »

Sep 17, 2024

In my book, which I will now shamelessly promote – The Skeptics’ Guide to the Future – my coauthors and I discuss the incredible potential of information-based technologies. As we increasingly transition to digital technology, we can leverage the increasing power of computer hardware and software. This is not just increasing linearly, but geometrically. Further, there are technologies that make other technologies more information-based or digital, such as 3D printing. The physical world and the virtual world are merging.

With current technology this is perhaps most profound when it comes to genetics. The genetic code of life is essentially a digital technology. Efficient gene-editing tools, like CRISPR, give us increasing control over the genetic code. Arguably two of the most dramatic science and technology news stories over the last decade have been advances in gene editing and advances in artificial intelligence (AI). These two technologies also work well together – the genome is a large complex system of interacting information, and AI tools excel at dealing with large complex systems of interacting information. This is definitely a “you got chocolate in my peanut butter” situation.

A recent paper nicely illustrates the synergistic power of these two technologies – Interpreting cis-regulatory interactions from large-scale deep neural networks. Let’s break it down.

Cis-regulatory interactions refer to several regulatory functions of non-coding DNA. Coding DNA, which is contained within genes (genes contain both coding and non-coding elements), directly codes for amino acids, which are assembled into polypeptides and then folded into functional proteins. Remember the ATCG four-letter base code, with three bases coding for a specific amino acid (or coding function, like a stop signal). This is coding DNA. Non-coding DNA regulates how coding DNA is transcribed and ultimately translated into proteins.
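To make the three-bases-per-amino-acid idea concrete, here is a minimal Python sketch that reads a DNA coding sequence three letters at a time. It is only an illustration under some simplifying assumptions: the lookup table contains just a handful of the 64 standard codons, the example sequence is made up, and the intermediate mRNA step is skipped by reading the coding strand directly.

```python
# A small subset of the standard genetic code, written as DNA (coding strand)
# triplets. The full table has 64 entries; only a few are included here.
CODON_TABLE = {
    "ATG": "Met",   # also the usual start codon
    "GCC": "Ala",
    "TGG": "Trp",
    "AAA": "Lys",
    "TAA": "STOP",
    "TAG": "STOP",
    "TGA": "STOP",
}


def translate(dna: str) -> list[str]:
    """Read a coding sequence three bases at a time until a stop codon."""
    peptide = []
    for i in range(0, len(dna) - 2, 3):
        codon = dna[i:i + 3]
        # Codons missing from this partial table are marked with "?".
        residue = CODON_TABLE.get(codon, "?")
        if residue == "STOP":
            break
        peptide.append(residue)
    return peptide


# Hypothetical example sequence: Met-Ala-Trp-Lys followed by a stop codon.
print(translate("ATGGCCTGGAAATAA"))
```

Each triplet maps to one amino acid, and the stop codon ends the chain, which is the "coding function" mentioned above.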

Continue Reading »

Sep 16, 2024

Last month my flight home from Chicago was canceled because of an intense rainstorm. In CT the storm was intense enough to cause flash flooding, which washed out roads and bridges and shut down traffic in many areas. The epicenter of the rainfall was in Oxford, CT (where my brother happens to live), which qualified as a 1,000-year flood (on average a flood of this intensity would occur once every 1,000 years). The flooding killed two people and caused an estimated $300 million of personal property damage, and much more costly damage to infrastructure.
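A "1,000-year flood" is easier to reason about as a 1-in-1,000 chance in any given year. The short Python sketch below turns a return period into the chance of seeing at least one such flood over a span of years. It assumes each year is independent and that the annual probability stays fixed, which is exactly the assumption a changing climate calls into question. The function name is made up for this example.

```python
def chance_of_at_least_one(return_period_years: float, horizon_years: int) -> float:
    """Probability of at least one event within the horizon.

    Assumes independent years with a constant annual probability of
    1 / return_period_years (a simplification, not a climate model).
    """
    annual_probability = 1.0 / return_period_years
    # Chance of no event in any single year, compounded over the horizon.
    chance_of_none = (1.0 - annual_probability) ** horizon_years
    return 1.0 - chance_of_none


for years in (1, 30, 100, 1000):
    p = chance_of_at_least_one(1000, years)
    print(f"{years:>4} years: {p:.1%}")
```

Under these assumptions, even a full 1,000 years gives only about a 63% chance of at least one such flood at a given location, so "once every 1,000 years" is an average, not a schedule.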

Is this now the new normal? Will we start seeing 1,000 year floods on a regular basis? How much of this is due to global climate change? The answers to these questions are complicated and dynamic, but basically yes, yes, and partly. This is just one more thing we are not ready for that will require some investment and change in behavior.

First, some flooding basics. There are three categories of floods. Fluvial floods (the most common in the US) occur near rivers and lakes, and essentially result from existing bodies of water overflowing their banks due to heavy cumulative or sudden rainfall. There are also pluvial floods, which are also due to rainfall but occur independent of any existing body of water. The CT floods were mainly pluvial. Finally, there are coastal floods related to the ocean. These can be due to extremely high tides, storm surges from intense storms like hurricanes, and tsunamis, which are essentially giant waves.

Continue Reading »

Sep 12, 2024

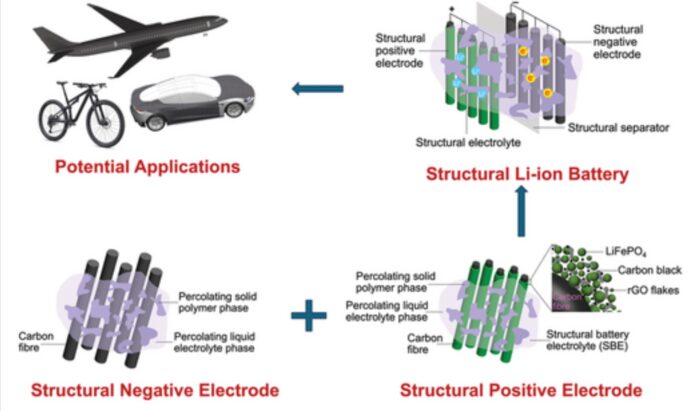

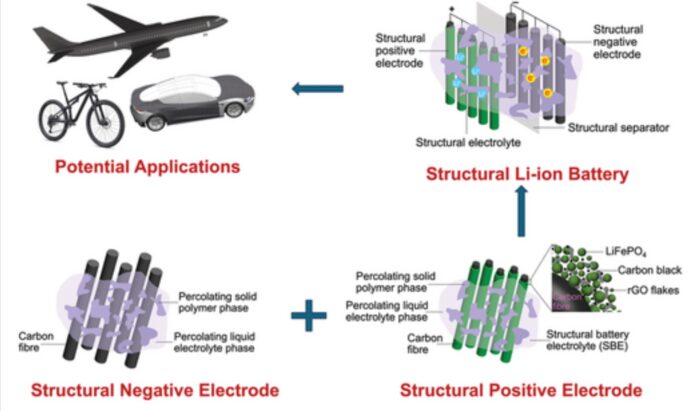

I have written previously about the concept of structural batteries, such as this recent post on a concrete battery. The basic idea is a battery made out of material that is strong enough that it can bear a load. Essentially we’re asking the material to do two things at once – be a structural material and be a battery. I am generally wary of such approaches to technology as you often wind up with something that is bad at two things, rather than simply optimizing each function.

In medicine, for example, I generally don’t like combo medications – a single pill with two drugs meant to be taken together. I would rather mix and match the best options for each function. But sometimes there is such a convenience in the combination that it’s worth it. As with any technology, we have to consider the overall tradeoffs.

With structural batteries there is one huge gain – the weight and/or volume savings of having a material do double duty. The potential here is too great to ignore. For the concrete battery the advantage is about volume, not weight. The idea is to have the foundation of a building serve as individual or even grid power storage. For a structural battery that will save weight, we need a material that is light and strong. One potential material is carbon fiber, which may be getting close to characteristics with practical applications.

Materials scientists have created in the lab a carbon fiber battery material that could serve as a structural battery. Carbon fiber is a good substrate because it is light and strong, and also can be easily shaped as needed. Many modern jets are largely made out of carbon fiber for this reason. Of course you compromise the strength when you introduce materials needed for the energy storage, and these researchers have been working on achieving an optimal compromise. Their latest product has an elastic modulus that exceeds 76 GPa. For comparison, aluminum, which is also used for aircraft, has an elastic modulus of 70 GPa. Optimized carbon fiber has an elastic modulus of 200-500 GPa. Elastic modulus is a measure of stiffness, specifically the resistance to non-permanent (elastic) deformation. Being stiffer than aluminum means it is in the range that is suitable for making lots of things, from laptops to airplanes.
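To get a feel for what those modulus numbers mean, here is a rough Python sketch using Hooke’s law for the elastic regime, strain = stress / modulus. The moduli are the figures quoted above (using the low end of the carbon fiber range), and the 100 MPa load is an arbitrary value picked only for illustration, not something taken from the study.

```python
def elastic_strain(stress_pa: float, modulus_pa: float) -> float:
    """Hooke's law in the elastic regime: strain = stress / elastic modulus."""
    return stress_pa / modulus_pa


GPA = 1e9  # pascals per gigapascal

# Elastic moduli quoted in the text above, in GPa.
moduli_gpa = {
    "aluminum": 70,
    "structural battery material": 76,
    "optimized carbon fiber (low end)": 200,
}

# Hypothetical applied stress, chosen only to make the comparison concrete.
applied_stress_pa = 100e6  # 100 MPa

for material, modulus in moduli_gpa.items():
    strain = elastic_strain(applied_stress_pa, modulus * GPA)
    print(f"{material:<34} stretches by {strain:.4%}")
```

A higher modulus means less stretch under the same load, so at 76 GPa the battery material deforms slightly less than aluminum and considerably more than plain carbon fiber.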

Continue Reading »

Sep 10, 2024

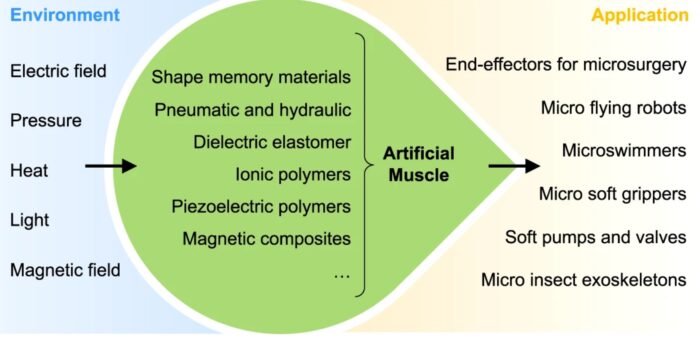

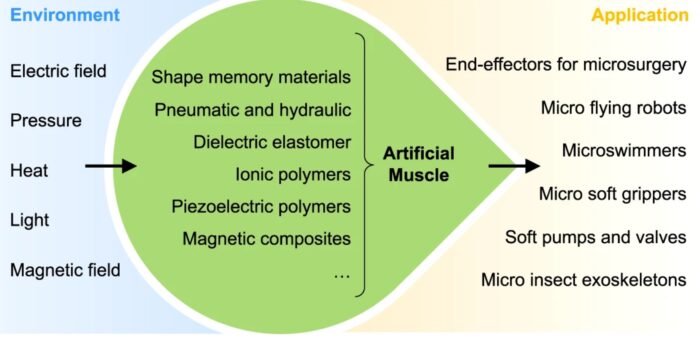

By now we have all seen the impressive robot videos, such as the ones from Boston Dynamics, in which robots show incredible flexibility and agility. These are amazing, but I understand they are a bit like trick-shot videos – we are being shown the ones that worked, which may not represent a typical outcome. Current robot technology, however, is a bit like steam-punk – we are making the most out of an old technology, but that technology is inherently limiting.

The tech I am talking about is motor-driven actuators. An actuator is a device that converts energy into mechanical force, such as torque or displacement. This is a technology that is about 200 years old. While they get the job done, they have a couple of significant limitations. One is that they use a lot of energy, much of which is wasted as heat. This is important as we try to make battery-driven robots that are not tethered to a power cord. Dog-like and humanoid robots typically last 60-90 minutes on one charge. Current designs are also relatively hard, which limits their interaction with the environment. They also depend heavily on sensors to read their environment.

By contrast we can think about biological systems. Muscles are much more energy efficient, are soft, can be incredibly precise, are silent, and contain some of their own feedback to augment control. Developing artificial robotic muscles that would perform similarly to biological systems is now a goal of robotics research, but it is a very challenging problem to crack. Such a system would also need to contract slowly or quickly, and even produce bursts of speed (if, for example, you want your robot to jump). It would need to be able to produce a lot of power, enough for the robot to move itself and carry out whatever function it has. It would also need to be able to efficiently hold a position for long periods of time.

Continue Reading »

Sep 03, 2024

Humans identify and call each other by specific names. So far this advanced cognitive behavior has only been identified in a few other species: dolphins, elephants, and some parrots. Interestingly, it has never been documented in our closest relatives, non-human primates – that is, until now. A recent study finds that marmoset monkeys have unique calls, “phee-calls”, that they use to identify specific individual members of their group. The study also found that within a group of marmosets, all members use the same name to refer to the same individual, so they are learning the names from each other. Also interesting, different families of marmosets use different kinds of sounds in their names, as if each family has its own dialect.

In these behaviors we can see the roots of language and culture. It is not surprising that we see these roots in our close relatives. It is perhaps more surprising that we don’t see them more in the very closest relatives, like chimps and gorillas. What this implies is that these sorts of high-level behaviors, such as learning names for specific individuals in your group, are not merely a consequence of neurological development. You need something else. There needs to be an evolutionary pressure.

That pressure is likely living in an environment and situation where family members are likely to be out of visual contact with each other. Part of this is the ability to communicate over distances long enough to put individuals out of visual contact. For example, elephants can communicate over miles. Dolphins often swim in murky water with low visibility. Parrots and marmosets live in dense jungle. Of course, you need to have that evolutionary pressure and the neurological sophistication for the behavior – the potential and the need have to align.

Continue Reading »
