Oct 29, 2021

Framing is important, and often exists below our conscious awareness. If we are not even aware that we are framing an issue in a certain way, and that other framings are possible, this will influence our thinking in ways that we cannot anticipate or correct for. Sometimes a particular framing is deliberate, a strategy for propaganda and rhetorical advantage. This is often done in order to win a debate before it even begins, by rigging the intellectual venue. It’s therefore critical to keep on the alert for how you and others frame particular issues.

Framing has come up quite a bit in the recent comment discussions on global warming, so I would like to address the framing issue directly. The question is – what kind of problem is global warming, from the perspective of how we can solve it? One framing, of course, is that it is not a problem, that it’s some kind of hoax, but I will simply reject that framing outright. There is an overwhelming scientific consensus that anthropogenic global warming (AGW) is happening and is already causing problems, with big problems likely ahead. The question we should focus on now is, how should we mitigate AGW?

I have encountered four different ways to approach this question: scientific, economic, political, and social. If we ask, is AGW primarily a scientific, economic, political, or social problem, the answer is, “Yes”. It’s all of them at the same time, and there is interaction among these different factors. Human psychology, however, tends to favor oversimplification and moral purity, so there is a tendency to frame the issue from only one perspective. There is also a tendency to then stake out that framing as the one “correct” view, and then criticize the other perspectives by making straw men out of them, partly by pointing at their most fringe elements as if they were mainstream. But let’s take a more thorough and nuanced approach.

Continue Reading »

Oct 28, 2021

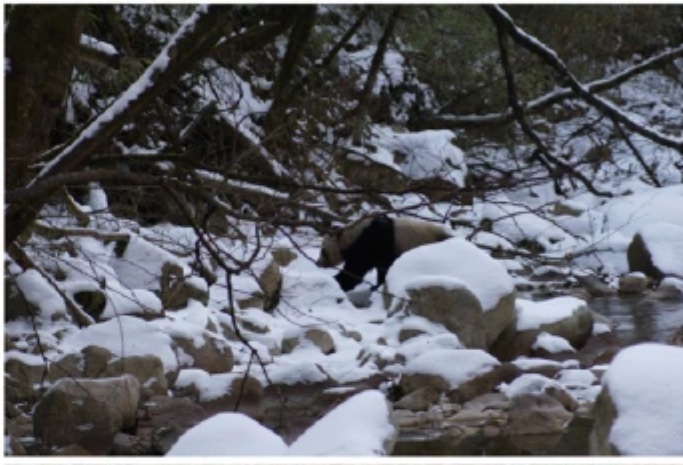

When thinking of highly camouflaged animals, giant pandas are probably not on the short list. Their striking black and white fur pattern, if anything, makes them stand out. This, at least, has been the conventional wisdom, but a new study shows that the panda’s coloration is an effective form of camouflage.

When we think of animals with camouflage we generally imagine those with dull mottled colors matching their surroundings, or perhaps the uniform tawny color of animals who live and hunt in tall grass. Animals with striking or bold coloration don’t come to mind. But camouflage can take many forms, and when judging how effective it is we need to consider several contextual factors, including surroundings, distance, and the viewer.

When we view pandas it is usually in an unnatural setting, not in their native habitat. Because they are reclusive, pictures of giant pandas in their native environment are rare, but the researchers were able to obtain enough photos of pandas to perform an analysis. We also tend to view pandas relatively close up. This is a variable you might not think of right away. It turns out that the black and white pattern on pandas is good for disrupting their overall outline. This camouflage strategy is most effective at long distance.

It’s easy to imagine why animals might evolve camouflage that is more effective at distance. Close up, predators can rely on other sensory information to detect their potential prey, such as sound and smell. The effectiveness of camouflage, especially for a very large animal like a panda, is therefore limited at short distances. But camouflage may be highly effective at longer distances, preventing a predator from detecting the panda in the first place. Long distance camouflage may also have a statistically greater advantage, as there is more likely to be a predator within a larger range.

Continue Reading »

Oct 26, 2021

For most of recorded human history we knew of only those planets that were naked-eye visible (Mercury, Venus, Mars, Jupiter and Saturn). We knew these dots of light in the sky were different from the other stars because they were not fixed; they wandered about. The invention of the telescope and its use in astronomy allowed us to study the planets and see that they were worlds of their own, while adding Uranus, Neptune and Pluto to the list. Pluto has since been recategorized as a dwarf planet, with four others added to the list, and many more likely.

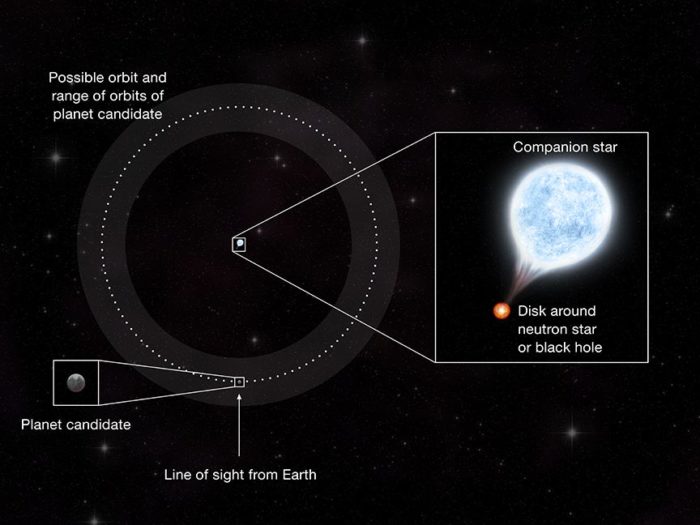

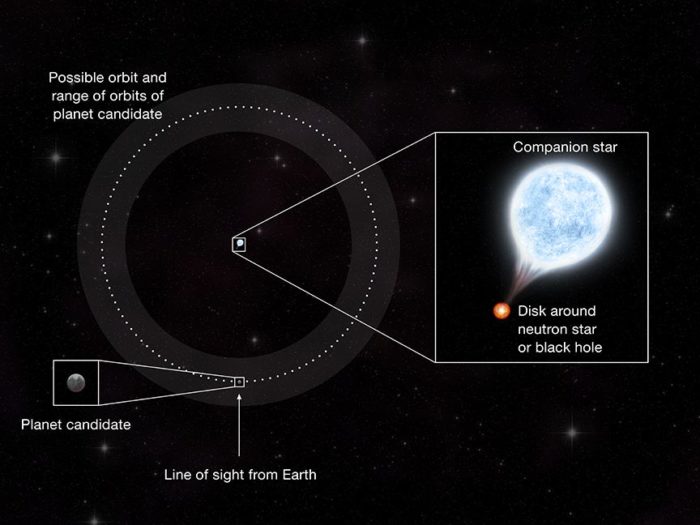

Of course astronomers suspected that our own solar system was not unique and therefore other stars likely had their own planets. The first observation of a disc of material around another star was in 1984, supporting the idea of planet formation around other stars. The first exoplanets (as planets outside our solar system are called) were discovered in 1992, orbiting a pulsar. In 1995 the first planet orbiting a main sequence star (like our own) was discovered. This confirmed the possibility of planetary systems theoretically capable of supporting organic life. So far more than 4,000 exoplanets have been confirmed.

The most common method of detecting an exoplanet is the transit method. For systems where the plane of planetary orbits aligns with the angle of view from Earth, orbiting planets will pass in front of their parent star. Astronomers measure what they call the “light curve” of the star, and when a planet transits the light curve dips then returns to baseline. From this they can infer the size and distance of the planet. If the orbit is short enough, they can confirm the exoplanet and measure its year by detecting multiple transits.
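To make the inference from a light curve concrete, here is a minimal sketch of the arithmetic, under simplifying assumptions that are mine rather than taken from any particular study: the fractional dip in brightness is treated as the ratio of the planet's disc area to the star's, the orbit is treated as circular, the planet's mass is ignored, and the star's radius and mass are assumed to be known from other observations. The function names are hypothetical.

```python
import math

R_SUN_KM = 695_700.0  # nominal solar radius in km


def planet_radius_km(transit_depth, star_radius_km=R_SUN_KM):
    """Estimate planet radius from the fractional dip in the light curve.

    Assumes depth ~= (R_planet / R_star)**2, ignoring limb darkening
    and grazing transits.
    """
    return star_radius_km * math.sqrt(transit_depth)


def orbital_period_days(transit_times_days):
    """Estimate the planet's year as the mean gap between observed transits."""
    gaps = [b - a for a, b in zip(transit_times_days, transit_times_days[1:])]
    return sum(gaps) / len(gaps)


def orbital_distance_au(period_days, star_mass_solar=1.0):
    """Kepler's third law in solar units: a**3 = M * P**2.

    a is in AU, P in years, M in solar masses; planet mass is neglected.
    """
    period_years = period_days / 365.25
    return (star_mass_solar * period_years ** 2) ** (1.0 / 3.0)


if __name__ == "__main__":
    # Hypothetical light curve: a 1% dip seen three times, 3.5 days apart,
    # around a Sun-like star.
    radius = planet_radius_km(0.01)
    period = orbital_period_days([10.0, 13.5, 17.0])
    distance = orbital_distance_au(period)
    print(f"radius ~ {radius:.0f} km, period ~ {period:.2f} d, a ~ {distance:.3f} AU")
```

A 1% dip around a Sun-sized star corresponds to a planet about a tenth of the star's radius, roughly Jupiter-sized, which is part of why large, close-in planets are the easiest to find this way.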

Continue Reading »

Oct 25, 2021

I have been writing a lot about energy recently, partly because there is a lot of energy news, and also I find it helpful to focus on a topic for a while to help me see how all the various pieces fit together. It is often easy to see how individual components of energy and other technology might operate in isolation, and more challenging to see how they will all work together as part of a complete system. But the system approach is critical. We can calculate the carbon and energy efficiency of installing solar panels on a home and easily understand how they would function. A completely different analysis is required to imagine solar power supplying 50% or more of our energy – now we have to consider things like grid function, grid storage, energy balancing, regulations for consumers and industry, sourcing raw material, disposal and recycling.

This is why just letting the market sort everything out will likely not result in optimal outcomes. Market decisions tend to be individual and short term. When EVs and solar panels are cost effective, everyone will want one. Demand is likely to outstrip supply. Supply chains could bottleneck. The grid won’t support rooftop solar beyond the early adopters. And where is everyone going to charge their EVs? At scale, widespread change in technology often requires new infrastructure and sometimes systems planning.

This can create an infrastructure dilemma – what future technology do you build for? You can take the “build it and they will come” approach, which assumes that infrastructure investment will affect and even determine the future direction of the market. California discovered the limits of this approach when they tried to bootstrap a hydrogen vehicle revolution by building a hydrogen infrastructure. Or you can backfill infrastructure as technology requires it, but this doesn’t quite work either. People won’t buy cars until there are roads, and won’t want to invest in roads until lots of people have cars. At some point you have to bet on future technology – just be flexible and willing to change course as technology evolves.

Continue Reading »

Oct 21, 2021

There are different ways of looking at history. The traditional way, the one most of us were likely taught in school, is mostly as a sequence of events focusing on the state level – world leaders, their political battles, and their wars with each other. This focus, however, can be shifted in many ways. It can be shifted horizontally to focus on different aspects of history, such as cultural or scientific. It can also zoom in or out to different levels of detail. I find especially fascinating those takes on history that zoom all the way out, take the biggest perspective possible and look for general trends.

A recent study does just that, looking at societal factors that drive the development of military technology in pre-industrial societies, covering a span of 10,000 years. They chose military technology for two main reasons. The first is simply convenience – military technology is particularly well preserved in historical records. The second is that military technology is a good marker for overall technology in most societies, and tends to drive other technologies. This remains true today, as cutting edge military technology (think GPS) often trickles down to the civilian world.

As an interesting aside, the researchers relied on Seshat: Global History Databank. This is a massive databank of 200,000 entries on 500 societies over 10,000 years. This kind of data is necessary to do this level of research efficiently, and is a good demonstration of this more general trend in scientific research – in increasing areas of research, it’s all about big data.

Continue Reading »

Oct 19, 2021

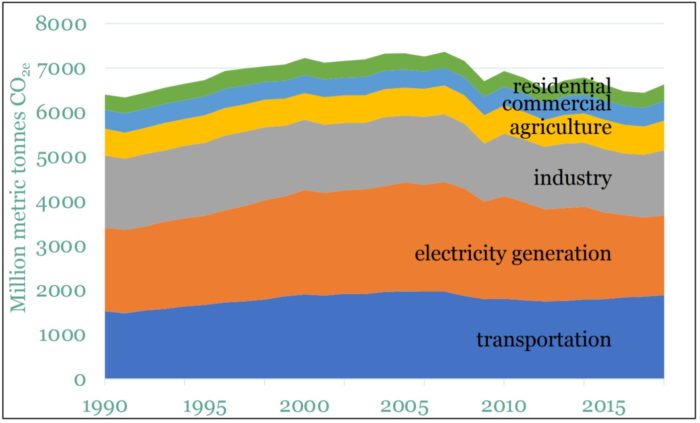

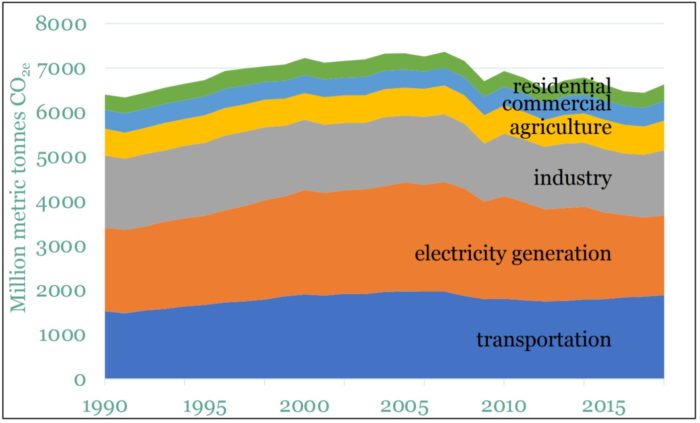

Next month the UN will host the 26th conference on climate change, the COP26. At this point the discussion is not so much what the goal should be, it’s how to achieve that goal. The Paris Accords set that goal at limiting global warming to 1.5 C above pre-industrial levels. In order to achieve this outcome goal the consensus is that we need to achieve the primary goal of net zero greenhouse gas (GHG) emissions by 2050. There is considerable disagreement about whether or not net zero by 2050 will achieve the outcome goal of limiting warming to 1.5 C. Some experts think it’s already too late for 1.5 C, and we should be planning on at least 2.0 C and what the world will be like with that much warming.

Either way, there is agreement that we should focus instead on what we can actually do, achieve net zero by 2050, rather than the outcome which we cannot predict with that level of precision. If we agree on this goal, the conversation then shifts to the question of how we can achieve this goal. From one perspective the answer is easy – we need to stop burning fossil fuels, convert those industries with the greatest carbon footprint to produce less CO2, and add some carbon capture to compensate for whatever CO2 emissions we cannot fully get rid of. But that’s like saying – in order to win football games you need to score more points than the other team, mostly through touchdowns and field goals. That’s correct, but doesn’t really give you the information you need. How, exactly, will we achieve these ends?

This is the conversation we should have been focusing on for the last 20 years, rather than dealing with denialists who refuse to accept the scientific reality, or the delay tactics by industry and their paid representatives (i.e. politicians). It’s pretty clear at this point we are never going to convince the deniers (not in this political climate – and I’m sure the comments to this post will adequately demonstrate my point), and industry is going to run out the clock any way they can. The bottom line is that achieving net zero by 2050 will require leaving fossil fuels in the ground, unburned. For the fossil fuel industry this means leaving a lot of money on the table, which is not going to happen simply out of a desire to be good corporate citizens.

Continue Reading »

Oct 18, 2021

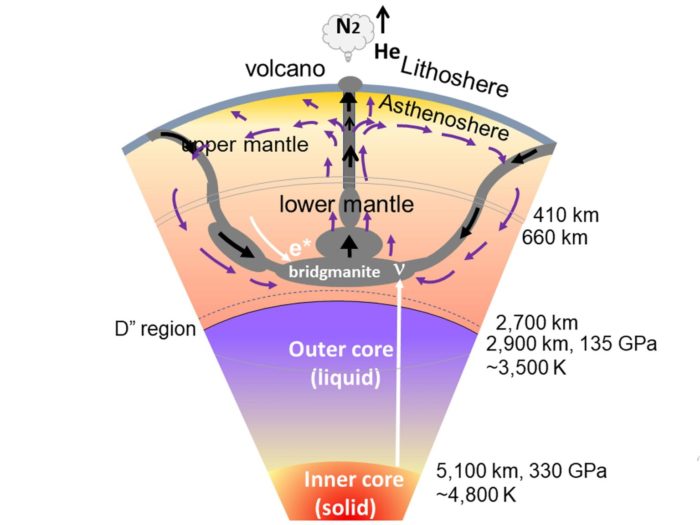

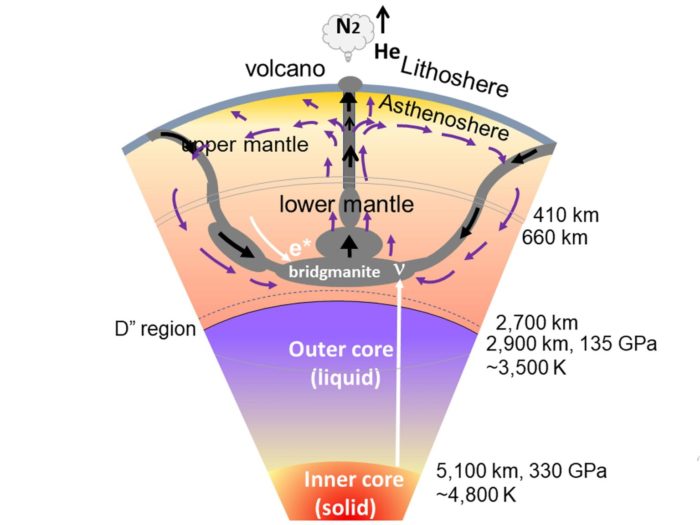

Perhaps the most famous line from Carl Sagan’s Cosmos series is, “We’re made of star stuff”. In this statement Sagan was referring to the fact that most of the elements that make up people (and everything else) were created (through nuclear fusion) inside long dead stars. While this core claim is true, physicists are finding potential supplemental sources of heavy elements, including in some surprising locations.

Elements are defined entirely by their number of protons, with hydrogen being the lightest element at one proton. The isotope of an element is determined by the number of neutrons, which can vary. The number of electrons is determined by the number of protons. An atom with more or fewer electrons than protons is an ion, because it carries an electric charge. The next lightest element is helium, at two protons, and then lithium, at three protons. Current theories, observations, and models suggest that these three elements were the only ones created in the Big Bang, in a process known as nucleosynthesis.

It actually took about 1 second after the Big Bang for the universe to cool enough for protons and neutrons to exist, and then for the next three minutes or so all the elemental nuclei formed – about 75% hydrogen, 25% helium, and trace lithium (by mass). It then took 380,000 years for the universe to cool enough for these nuclei to capture electrons. Where, then, did the elements heavier than lithium come from? This is where Sagan’s “star stuff” comes in.
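Because those percentages are by mass, they understate how thoroughly hydrogen dominates by count. As a rough sketch, assuming approximate mass numbers of 1 for hydrogen and 4 for helium and ignoring the trace lithium (the function name is hypothetical), the conversion from mass fraction to number fraction looks like this:

```python
def number_fractions(mass_fractions, mass_numbers):
    """Convert mass fractions to number fractions.

    Each element's relative count is its mass fraction divided by the
    approximate mass of one nucleus (its mass number).
    """
    counts = {el: mass_fractions[el] / mass_numbers[el] for el in mass_fractions}
    total = sum(counts.values())
    return {el: n / total for el, n in counts.items()}


if __name__ == "__main__":
    # Approximate primordial abundances by mass; trace lithium is ignored.
    by_mass = {"H": 0.75, "He": 0.25}
    mass_numbers = {"H": 1, "He": 4}  # approximate nucleon counts
    by_number = number_fractions(by_mass, mass_numbers)
    for element, fraction in by_number.items():
        print(f"{element}: {fraction:.1%} of nuclei")
```

Under these assumptions about 92% of the nuclei are hydrogen and about 8% are helium, or roughly twelve hydrogen nuclei for every helium nucleus.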

Stars are fueled by nuclear fusion in their cores, the process of combining lighter elements into heavier elements. The more massive the star, the more heat and pressure it can generate in its core, and the heavier the elements it can fuse. Fusion of these elements is exothermic: it produces the energy necessary to sustain the fusion. This lasts until enough of the heavier fusion product builds up in the core to effectively stop fusion. Then the star will collapse further, becoming hotter and denser until it can start fusing the heavier element. This process continues until the star is no longer able to fuse the elements in its core because it is simply not massive enough. At that point there is nothing to stop its collapse, and it becomes a white dwarf.

Continue Reading »

Oct 15, 2021

Some planets have planetary magnetic fields, while others don’t. Mercury has a weak magnetic field, while Venus and Mars have no significant magnetic field. This was bad news for Mars (or any critters living on Mars in the past) because the lack of a significant magnetic field allowed the solar wind to slowly strip away most of its atmosphere. Life on Earth enjoys the protection of a strong planetary magnetic field, protecting us from solar radiation.

The Earth’s magnetic field is created by molten iron in the outer core. Rotating electrical charges generate magnetic fields, and iron is a conductive material. This is called the dynamo theory, with the vast momentum of the spinning iron core translating some of its energy into generating a magnetic field. In fact, the molten outer core is rotating a little faster than the rest of the Earth. This phenomenon is also likely the source of Mercury’s weak magnetic field.

The two gas giants have magnetic fields, with Jupiter having the strongest field of any planet (the sun has the strongest magnetic field in the solar system). Its largest moon, Ganymede, also has a weak magnetic field, making it the only moon in our solar system to have one. Jupiter’s magnetic field is 20,000 times stronger than Earth’s. It’s massive and powerful. The question is – what is generating the magnetic field inside Jupiter? It’s probably not a molten iron core, like on Earth. Based on Jupiter’s mass and other features, astronomers suspect that the magnetic field is generated by liquid hydrogen in its core. Under extreme pressure, even at high temperatures, hydrogen can become a metallic liquid, capable of carrying a charge, and therefore generating a magnetic field. This is likely also the source of Saturn’s magnetic field, although its field is slightly weaker than Earth’s.

Continue Reading »

Oct 14, 2021

At the turn of the 20th century there were three relatively equal contenders for automobile technology: electric, steam-powered, and internal combustion engine (ICE) cars. It was not obvious at the time which technology would emerge dominant, or even if they would all continue to have market share. By 1905, however, the ICE began to dominate, and by 1920 electric cars fell out of production. The last steam car company ended production in 1930, perhaps later than you might have guessed.

This provides an excellent historical case for debate over which factors ultimately determined the winner of this marketplace competition (right up there with VHS vs Betamax). We will never definitively know the answer – we can’t rerun history with different variables to see what happens. Also, the ICE won out the world over because the international industry consolidated around that choice, meaning that other countries were not truly independent experiments.

The debate comes down to internal vs external factors – the inherent attributes of each technology vs infrastructure. Each technology had its advantages and disadvantages. Steam engines worked just fine, and had the advantage of being flexible in terms of fuel. These were external combustion engines, as the combustion took place separately, outside the engine itself. But they also needed a boiler, which produced the steam to power the engine. Steam cars were more powerful than ICE cars, and also quieter and (depending on their configuration) produced less pollution. They had better torque characteristics, obviating the need for a transmission. The big disadvantage was that they needed water for the boiler, which required either a condenser or frequent topping off. They could also take a few minutes to get up to operating temperature, but this problem was solved in later models with a flash boiler.

Continue Reading »

Oct 12, 2021

As the world is contemplating ways to make its food production systems more efficient, productive, sustainable, and environmentally friendly, biotechnology is probably our best tool. I won’t argue it’s our only tool – there are many aspects of agriculture and they should all be leveraged to achieve our goals. I simply don’t think that we should take any tools off the table because of misguided philosophy, or worse, marketing narratives. The most pernicious such philosophy is the appeal to nature fallacy, where some arbitrary and vague sense of what is “natural” is used to argue (without or even against the evidence) that some options are better than others. We don’t really have this luxury anymore. We need to follow the science.

Essentially we should not fear genetic technology. Genetically modified and gene edited crops have proven to be entirely safe and can offer significant advantages in our quest for better agriculture. The technology has also proven useful in medicine and industry through the use of genetically modified microorganisms, like bacteria and yeast, for industrial scale production of certain proteins. Insulin is a great example, and is essential to modern treatment of diabetes. The cheese industry is mostly dependent on enzymes produced by genetically modified microorganisms.

This, by the way, is often the “dirty little secret” of many legislative GMO initiatives. They usually include carve-out exceptions for critical GMO applications. In Hawaii, perhaps the most anti-GMO state, the regulations exclude GMO papayas, because they saved the papaya industry from blight, and Hawaii apparently is not so dedicated to its anti-GMO bias that it would be willing to kill off a vital industry. Vermont passed the most aggressive GMO labeling law in the States, but made an exception for the cheese industry. These exceptions are good, but they show the hypocrisy in the anti-GMO crowd – “GMOs are bad (except when we can’t live without them)”.

Continue Reading »
