Sep 30, 2021

Last year YouTube (owned by Google) banned videos spreading misinformation about the COVID vaccines. The policy has resulted in the removal of over 130,000 videos since then. Now the company has announced that it is extending the ban to misinformation about any approved vaccine. The move is sure to provoke strong reactions and opinions on both sides, which I think is reasonable. The decision reflects a genuine dilemma of modern life with no perfect solution, and amounts to a “pick your poison” situation.

The case against big tech companies that control massive social media outlets essentially censoring certain kinds of content is probably obvious. That puts a lot of power into the hands of a few companies. It also goes against the principle of a free marketplace of ideas. In a free and open society people enjoy freedom of speech and the right to express their ideas, even (and especially) if they are unpopular. There is also a somewhat valid slippery slope argument to make – once the will and mechanisms are put into place to censor clearly bad content, mission creep can slowly impinge on more and more opinions.

There is also, however, a strong case to be made for this kind of censorship. There has never been a time in our past when anyone could essentially claim the right to a massive megaphone capable of reaching most of the planet. Our approach to free speech may need to be tweaked to account for this new reality. Further, no one actually has the right to speech on any social media platform – these are not government sites nor are they owned by the public. They are private companies that have the right to set their own policies. The public, in turn, has the power to “vote with their dollars” and choose not to use or support any platform whose policies they don’t like. So the free market is still in operation.

Continue Reading »

Sep 28, 2021

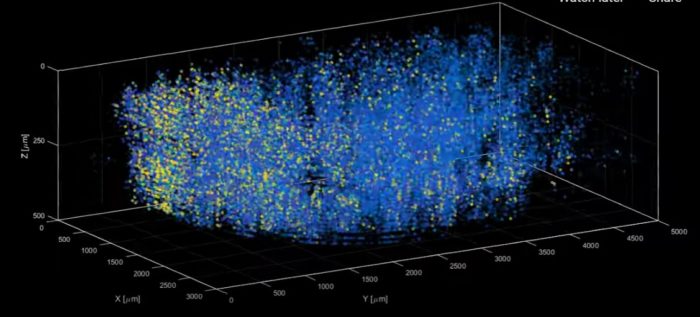

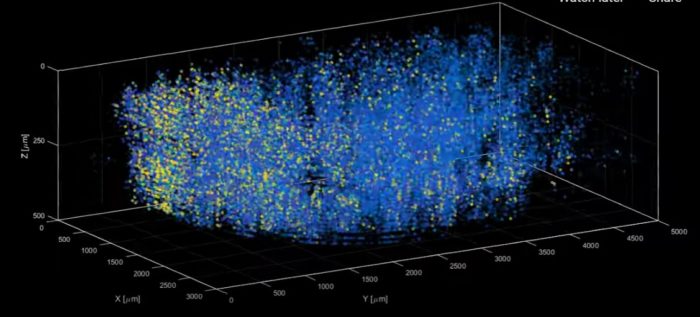

The current estimate is that the average human brain contains 86 billion neurons. These neurons connect to each other in a complex network, involving 100 trillion connections. The job of neuroscientists is to map all these connections and to see how they work – no small task. There are multiple ways to approach this task.

At first neuroscientists just looked at the brain and described its macroscopic (or “gross”) anatomical structures. We can see there are different lobes of the brain and major connecting cables. You can also slice up the brain and see its internal structure. When the microscope was developed we could then look at the microscopic structure of the brain, and by using different staining techniques we could visualize the branching structure of axons and dendrites (the parts of neurons that connect to other neurons). We could see that there were different kinds of neurons, various layers in the cortex, and lots of pathways and nodes.

But even when we had a detailed map of the neuroanatomy of the brain down to the microscopic level, we still needed to know how it all functioned. We needed to see neurons in action. (And further, there are lots of non-neuronal cells in the brain such as astrocytes that also affect brain function.) At first we were able to infer what different parts of the brain did by examining people who had damage to one part of the brain. Damage to the left temporal lobe in most people causes language deficits, so this part of the brain must be involved in language processing. We could also do research on animals for all but the highest brain functions.

Continue Reading »

Sep 27, 2021

The story of exactly how and when people from other continents populated the Americas is still unfolding. Scientists have uncovered stunning new evidence – scores of human footprints in New Mexico dating to 21-23 thousand years ago, 5-7 thousand years older than the previous oldest evidence.

The evidence is pretty clear now that humans evolved in Africa and later spread throughout the world. We were not the first hominid species to leave Africa. That was Homo erectus, which spread to Europe and Asia about 1.8 million years ago. Meanwhile those that remained in Africa continued to evolve into other species, including Homo heidelbergensis, which is the current best candidate for the most recent common ancestor of modern humans and Neanderthals. Heidelbergensis also migrated out of Africa, and in Europe and Asia evolved into Neanderthals (who were well established by 400,000 years ago). Meanwhile their cousins back in the homeland evolved into modern humans.

Modern humans migrated out of Africa about 80,000 years ago, and spread throughout the world. Getting to Europe and Asia was easy, because they are connected to Africa by land. Getting to the Pacific islands was probably through a combination of land bridges during times of low ocean levels and traveling across water by some means, probably with short-distance island hopping. The same is true of Australia: some combination of land bridges and island hopping.

Continue Reading »

Sep 23, 2021

The robots are coming. Of course, they are already here, mostly in the manufacturing sector. Robots designed to function in the much softer and chaotic environment of a home, however, are still in their infancy (mainly toys and vacuum cleaners). Slowly but surely, however, robots are spreading out of the factory and into places where they interact with humans. As part of this process, researchers are studying how people socially react to robots, and how robot behavior can be tweaked to optimize this interaction.

We know from prior research that people react to non-living things as if they are real people (technically, as if they have agency) if they act as if they have a mind of their own. Our brains sort the world into agents and objects, and this categorization seems to entirely depend on how something moves. Further, emotion can be conveyed with minimalistic cues. This is why cartoons work, or ventriloquist dummies.

A humanoid robot that can speak and has basic facial expressions, therefore, is way more than enough to trigger in our brains the sense that it is a person. The fact that it may be plastic, metal, and glass does not seem to matter. But still, intellectually, we know it is a robot. Let’s further assume for now we are talking about robots with narrow AI only, no general AI or self-awareness. Cognitively the robot is a computer and nothing more. We can now ask a long list of questions about how people will interact with such robots, and how to optimize their behavior for their function.

Continue Reading »

Sep 21, 2021

Are you afraid of spiders? I mean, really afraid, to the point that you will alter your plans and your behavior in order to specifically reduce the chance of encountering one of these multi-legged creatures? Intense fears, or phobias, are fairly common, affecting 3-15% of the population. The technical definition (from the DSM-V) of phobia contains a number of criteria, but basically it is a fear or anxiety provoked by a specific object or situation that is persistent, unreasonable and debilitating. In order to be considered a disorder:

“The fear, anxiety, or avoidance causes clinically significant distress or impairment in social, occupational, or other important areas of functioning.”

The most effective treatment for phobias is exposure therapy, which gradually exposes the person suffering from a phobia to the thing or situation which provokes fear and anxiety. This allows them to slowly build up a tolerance to the exposure (desensitization), to learn that their fears are unwarranted and to reduce their anxiety. Exposure therapy works, and reviews of the research show that it is effective and superior to other treatments, such as cognitive therapy alone.

But there can be practical limitations to exposure therapy. One is the inability to find an initial exposure scenario that the person suffering from a phobia will accept. For example, you may be so phobic of spiders that any exposure is unacceptable, and so there is no way to begin the process of exposure therapy. For these reasons there has been a great deal of interest in using virtual/augmented reality for exposure therapy for phobia. A 2019 systematic review including nine studies found that VR exposure therapy was as effective as “in vivo” exposure therapy for agoraphobia (fearing situations like crowds that trigger panic) and specific phobias, but not quite as effective for social phobia.

Continue Reading »

Sep 20, 2021

It is not uncommon, if you do not like any particular finding of scientific research, to attack the institutions of science or even the very notion of science itself. These kinds of attacks are now common in the anti-vaccine pushback against common sense public health measures, and often from a religious or ideological perspective. It’s not surprising that the false claim that science is just philosophy has reared its head in such writings. The attack on science also tends to have at least two components. The first is a straw man about how scientists are pretending that science is a monolithic perfect and objective entity. This is then followed by the claim that, rather, science is just opinion, another form of subjective philosophy. This position is entirely wrong on both counts.

Here is one example, embedded in a long article loaded with misinformation about vaccines and the COVID pandemic. There is way too much misdirection in this article to tackle in one response, and I only want to focus on the philosophical claims. These are now common within certain religious circles, mostly innovated, at least recently, in the fight against the teaching of evolution. They have already lost this fight, philosophically, scientifically, and (perhaps most importantly) legally, but of course that does not mean they will abandon a bad argument just because it’s wrong.

First the straw man:

> The second consequence of “following science” is that it reinforces one of modernity’s most enduring myths: that “science” is a consistent, compact, institutionally-guaranteed body of knowledge without interest or agenda. What this myth conceals is the actual operation of the sciences—multiple, messy, contingent, and tentative as they necessarily are.

The myth is itself a myth. It exists almost nowhere except in the minds of science deniers and those with an anti-science agenda. Elsewhere the author admits:

> As a lay person, unqualified to judge the technical issues, I have concluded only that there might be a legitimate question here, and one that must, necessarily, remain open until time and experience can settle it.

Continue Reading »

Sep 17, 2021

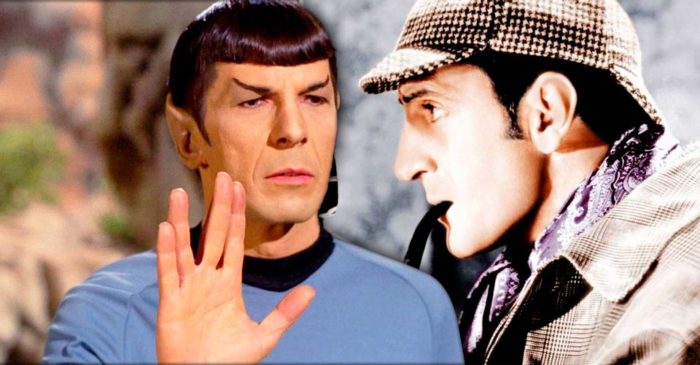

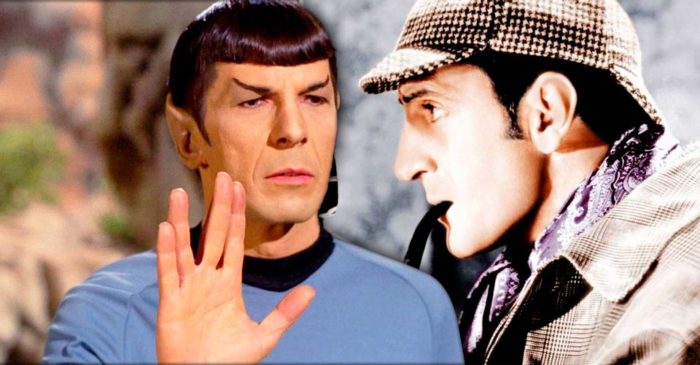

You’ve probably noticed that it’s very difficult to write a character who is extremely intelligent in some way. It’s easy to make a character knowledgeable, because you can just put a lot of facts into their mouth. The character Arthur P. Dietrich (played by Stephen Landesberg) on the sitcom Barney Miller always had a relevant fact at the ready. He seemed to know everything. What’s difficult is making a character wise, or giving them the ability to think in complex and logical ways. More specifically, it’s difficult to write a character that’s smarter than the writer themselves.

For me the most impressively written iconically smart character is Sherlock Holmes, written by Arthur Conan Doyle. It’s not impressive that Holmes always solved his cases or had a lot of factual knowledge; that’s the easy part. The author knows who did it and can just have their detective character get to the right answer. What is truly impressive is how Holmes worked through the cases, using genuinely impressive logic and reasoning. Holmes famously refers to his process as “deduction” but he was really mostly using inference to the most likely answer.

I admit I may be partial to Holmes because Doyle was a physician, and I can recognize a lot of clinical logic in Holmes’ thinking. In fact, I took a course on Sherlock Holmes in medical school, where we would draw an analogy between how Holmes solved a particular case and how a physician might solve a clinical case. Here are some choice bits of logical advice from Holmes:

Continue Reading »

Sep 16, 2021

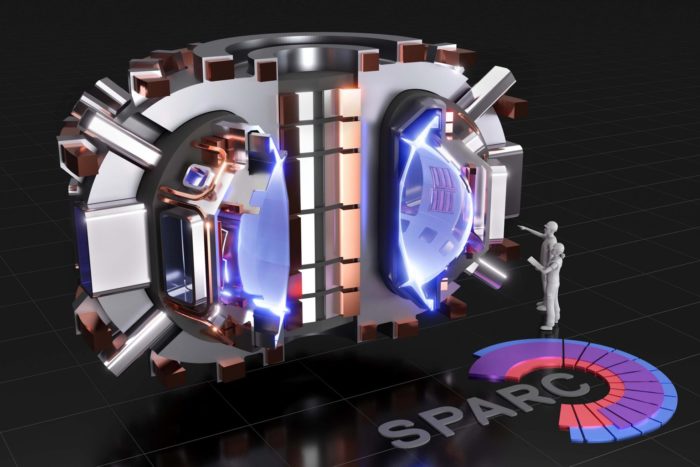

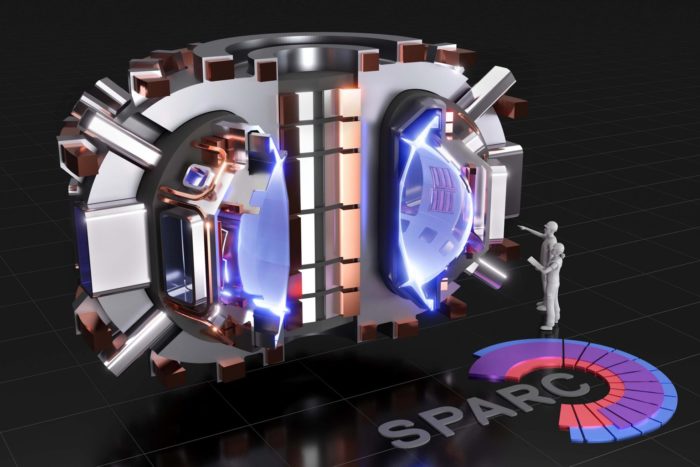

About a month ago I wrote about a milestone achieved by the National Ignition Facility (NIF) which is using the inertial confinement method to achieve fusion of hydrogen into helium. Briefly, they achieved “burning plasma” where heat from the fusion provides energy for further fusion. They are only about 70% of the way to ignition, where the fusion is self-sustaining with only its own energy.

The NIF uses lasers to compress the hydrogen plasma to sufficient heat and density for fusion to occur. The other approach to achieving fusion is magnetic confinement, using powerful magnetic fields to squeeze the plasma to incredible density and heat, so that the hydrogen atoms are moving fast enough that occasionally two will collide with enough force to cause fusion. The magnetic confinement approach is all about the magnets – if we have magnets that are powerful and efficient enough, we can make fusion. It’s that simple. After decades of plasma research there are multiple labs around the world that can use magnetic confinement to get hydrogen to fuse. But we have yet to achieve “ignition”. Also, ignition is not the final goal, just one more milestone along the way. We need to go beyond ignition, where the fusion process is producing more than enough energy needed to sustain the fusion, so that some of the excess energy can be siphoned off and used to make electricity for the grid. That’s the whole idea.

MIT’s Plasma Science and Fusion Center (PSFC), in collaboration with Commonwealth Fusion Systems (CFS), has its own magnetic confinement fusion experiment called SPARC (Soonest/Smallest Private-Funded Affordable Robust Compact). This is a demonstration reactor based on the tokamak design first developed by Soviet physicists. The magnetic field is a doughnut shape with a “D” shape in cross-section. Three years ago they determined that if they could build a magnet that was able to produce a 20 Tesla magnetic field, then the SPARC reactor would be able to produce excess fusion energy. It’s all about the magnets. The news is that they just achieved that very goal, on time despite the challenges of the intervening pandemic.

Continue Reading »

Sep 14, 2021

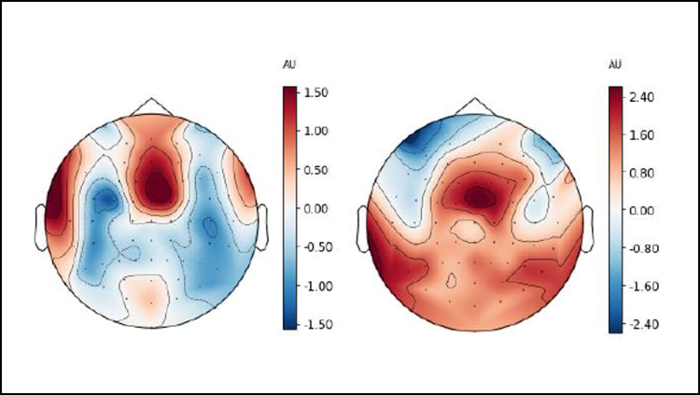

It is a fundamental truth of human behavior that people sometimes cheat. And yet, we tend to have strong moral judgements against cheating, which leads to anti-cheating social pressure. How does this all play out in the human brain?

Psychologists have tried to understand this within the standard neuroscience paradigm – that people have basic motivations mostly designed to meet fundamental needs, but we also have higher executive function that can strategically override these motivations. So the desire to cheat in order to secure some gain is countered by moral self-control, leading to an internal conflict (cognitive dissonance). We can resolve the dissonance in a number of ways. We can rationalize the morality in order to internally justify the desire to cheat, or we can suppress the desire to cheat and get a reward by feeling good about ourselves. Experimentally, however, most people do not fall at either end of the spectrum, but rather they cheat sometimes.

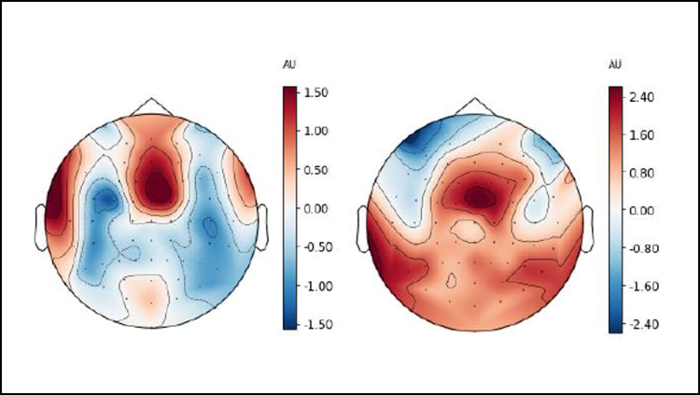

Recent research, however, has challenged this narrative, that cheating for gain is the default behavior and not cheating requires cognitive control. A new study replicates prior research showing that people differ in terms of their default behavior. Some people are mostly honest and only cheat a little, while others mostly cheat, and there is a continuum between. Perhaps even more interesting is that the research suggests that for those who at baseline tend to cheat, markers of cognitive control (using an EEG to measure brain activity) increase when they don’t cheat and behave honestly. That’s actually not the surprising part, and fits the classic narrative that people tend to cheat unless they exert self-control to be honest. However, those who are more honest at baseline show the same markers of increased cognitive control when they do cheat. They have to override their inherent instinct in the same way that baseline cheaters do. What’s happening here?

Before I discuss how to interpret all this, let me explain the research paradigm. Subjects were tasked with finding differences between two similar images. If they could find three differences, they were given a real reward. However, some of the images only had two differences, so subjects had to either accept defeat or cheat in order to win. First subjects were tested at baseline to determine their inherent tendency to cheat. Then they were encouraged to cheat with the rigged games. EEGs were used to measure theta wave activity in specific parts of the brain, which correlates with the degree of cognitive control. Subjects who cheated more at baseline showed increased theta activity when they didn’t cheat, and those who were more honest at baseline showed increased theta activity when they cheated.

Therefore, it appears more accurate to say that cognitive control does not specifically allow us to do the morally “correct” thing, but rather allows us to act against our instincts, whatever they are. But why would people have such different instincts in the first place?

Continue Reading »

Sep 13, 2021

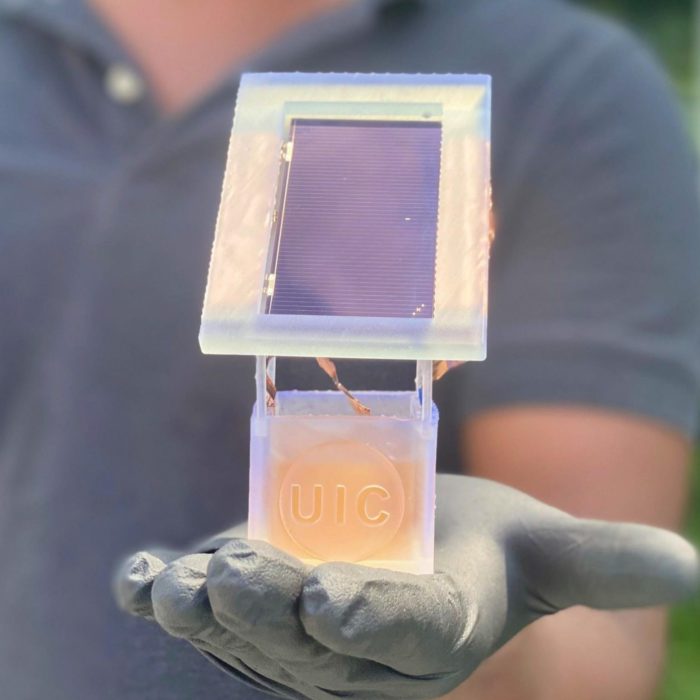

Ammonia is the second most produced industrial chemical in the world. It is used for a variety of things, but mostly fertilizer:

> About 80% of the ammonia produced by industry is used in agriculture as fertilizer. Ammonia is also used as a refrigerant gas, for purification of water supplies, and in the manufacture of plastics, explosives, textiles, pesticides, dyes and other chemicals.

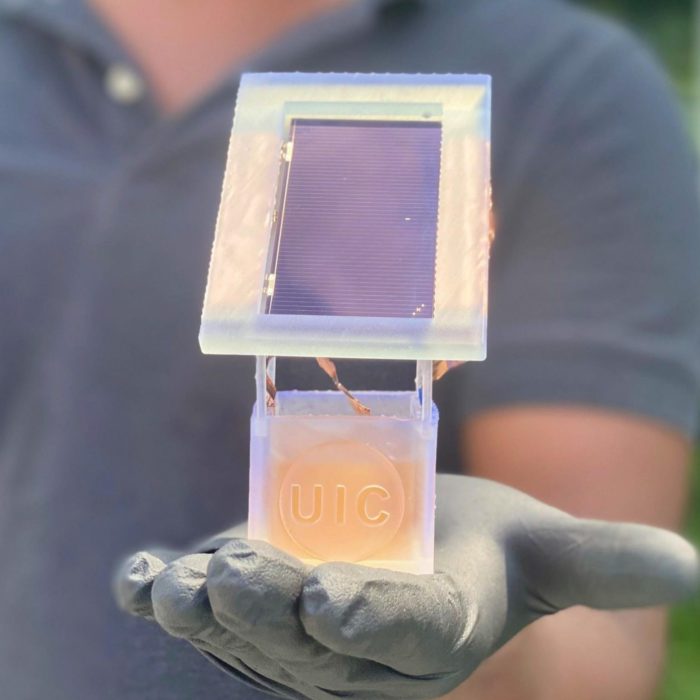

In 2010 the world produced 157.3 million metric tons of ammonia. The process requires temperatures of 400-600 degrees Celsius, and pressure of 100-200 atmospheres. This uses a lot of energy – about 1% of world energy production. The nitrogen is sourced from N2 in the atmosphere, and the high pressure and temperatures are necessary to break apart the N2 so that the nitrogen can combine with hydrogen to form NH3 (ammonia). The hydrogen is sourced from natural gas, using up about 5% of the world’s natural gas production. About half of all food production requires fertilizer made using this process (the Haber-Bosch process). This is not a process we can phase out easily or quickly.
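For reference, the overall reaction behind those numbers is simple to write down, even if it is hard to run. This is the standard textbook form of the Haber-Bosch reaction, not something taken from the figures above:

```latex
\mathrm{N_2} + 3\,\mathrm{H_2} \;\rightleftharpoons\; 2\,\mathrm{NH_3}
```

Each molecule of N2 needs three molecules of H2, which is why the source of the hydrogen (currently natural gas) matters so much to the footprint of the process.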

It does represent, however, a huge opportunity for increased efficiency, saving energy and natural gas, and reducing the massive carbon footprint of the entire process. We know it’s possible because bacteria do it. Some plants have a symbiotic relationship with certain soil bacteria. The plants provide nutrients and energy to the bacteria, and in turn the bacteria fix nitrogen from the atmosphere (at normal pressures and temperatures) and provide it to the plant. These plants (which include, for example, legumes) therefore do not require nitrogen fertilizer. In fact, they can be used to fix nitrogen and put it back into the soil.

Continue Reading »
