Sep 30, 2019

In the US we have semi-compulsory vaccination. Keeping up to date on the vaccine schedule is required in order to attend public school. However, families always have the option of attending private schools, which can determine their own vaccination policy, or they can home-school. Also, each state can determine its own exemption policy. Medical exemptions are uncontroversial – if you medically cannot get a vaccine, that is up to your doctor, not the state. However, some states have religious or personal (philosophical) exemptions. These have been under increasing scrutiny recently.

Other countries have different systems. In the UK, for example, there is no compulsory vaccination to attend school.

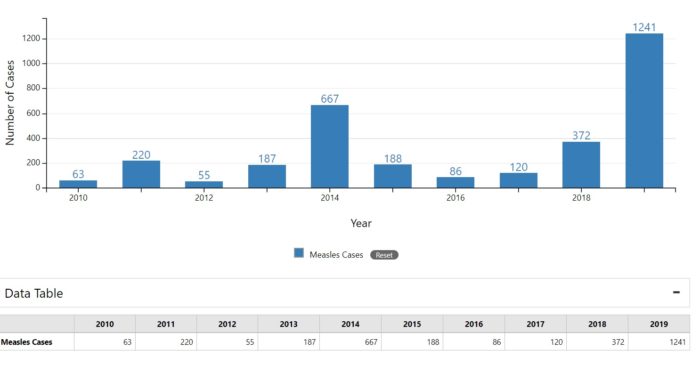

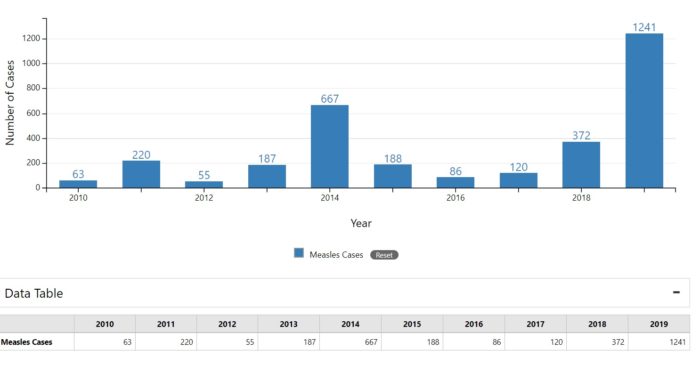

These policies are all being reexamined in the wake of the burgeoning anti-vaccine movement and the resulting return of previously controlled vaccine-preventable illnesses. Let’s take measles, for example. As you can see from the chart, measles cases in the US increased more than 20-fold from 2010 to 2019. But if we pull back even further, in 1980 measles caused 2.6 million deaths worldwide, with cases in the US alone measured in the hundreds of thousands. By 2000 routine use of the MMR vaccine had prevented an estimated 80 million cases of measles.

Also by 2000 measles had been eliminated in the US – that means there was no circulating virus in the wild. All cases came from outside the country. Outbreaks were also very limited, because there was no fertile ground for the virus to spread. The anti-vaccine movement has changed that.

Because of compulsory vaccination, the rate of children getting the MMR vaccine in the US has not changed much, ranging from 90% to 93% over the last two decades. It has dipped to 91.1% recently, but the overall number remains high. However, this statistic hides what is really going on. Vaccine refusal clusters by neighborhood and by school. If a private school does not require vaccination, then anti-vaxxers will cluster there. These pockets of very low vaccination rates then serve as potential locations for outbreaks, and that is exactly what happens. In recent years these outbreaks have been large enough to spread into the general population.

Continue Reading »

Sep 27, 2019

The Mandela Effect remains a fascinating phenomenon, although not for the reason that some people believe. I discussed the basic effect before – people often remember historical events as being different from what current evidence reveals, and sometimes many people misremember the events in a similar way. This has led some to argue that it is not our memories that are flawed, but that the universe has actually shifted reality or time.

I recently came across a site that tests how “Mandela Effected” you are with a list of ten questions. First, should it be “Mandela Affected”? Affect is the verb, effect is the noun. But the question is really how affected you are by the Mandela Effect. We’ll call it poetic license.

Some of the questions on the test are interesting examples of the Mandela Effect. However, the test itself is needlessly horrible, rendering the results meaningless. First, they show pictures relating to the question. While they warn you that the picture may not be accurate, it’s obvious that they will have a biasing effect on the results. Second, the questions are a forced binary choice. There’s no “I don’t know” option. A 5-point Likert scale would be better – definitely A, probably A, not sure, probably B, definitely B. I know this isn’t meant to be a scientific test, it’s just for fun, but why make it so blatantly terrible? It’s bad enough to spoil the fun.

But in any case – the reason I like discussing the Mandela Effect is because of what it really reflects. It is not a glitch in the Matrix, nor a shifting of alternate universes. It is a combination of factors, mostly the fallibility of memory and the complexity of cultural influences and evolution. When there is a mismatch between our memory or perception and reality, however, we tend to first assume there is a problem with reality, rather than a problem with our brains. This is especially true in pathological conditions, when the brain is broken. We have a hard time perceiving the deficit, and rather assume the world is broken.

Continue Reading »

Sep 26, 2019

A recent systematic review and meta-analysis of studies comparing humans to artificial intelligence (AI) in diagnosing radiographic images found:

Analysis of data from 14 studies comparing the performance of deep learning with humans in the same sample found that at best, deep learning algorithms can correctly detect disease in 87% of cases, compared to 86% achieved by health-care professionals.

This is the first time we have evidence that the performance of AI has ticked over that of humans. The authors also state, as is often the case, that we need more studies, we need real world validation, and we need more direct comparisons. But at the very least AI is in the ballpark of human performance. If our experience with chess and Go is any guide, the AI algorithms will only get better from here. In fact, given the lag in research, publication, and then review time, they probably already have.

I think AI is poised to overtake humans in diagnosis more broadly, because this particular task is right in the sweet spot of deep learning algorithms. Also, it is very challenging for humans, who fall prey to a host of cognitive biases and heuristics that hamper optimal diagnosis. A lot of medical education is focused on correcting these biases, and replacing them with clinical decision-making that works better. But no clinician is perfect or without blind spots. Even the most experienced clinician also has to contend with an overwhelming amount of information.

There are a couple of ways to approach diagnosis. The one in which humans excel is the gestalt approach, which is essentially based on pattern recognition. With experience clinicians learn to recognize the overall pattern of a disease. This pattern may include signs or symptoms that are particularly predictive. Eventually the pieces just click into place automatically.

Continue Reading »

Sep 24, 2019

Have you ever traveled with a large group of friends? When a group gets beyond a certain “critical mass” it becomes geometrically more difficult to make decisions. Even going to a restaurant or a movie becomes laborious. Decision making seems to break down in large groups, especially if there isn’t an established hierarchy or process in place. That’s why the “by committee” cliche exists – group decision making can be a highly flawed and problematic process.

I can’t escape the nagging sensation that the world is having this problem. We seem to be politically frozen and unable to take decisive timely action. We are metaphorically driving toward a cliff, and we can’t even take our foot off the accelerator, let alone apply the brakes.

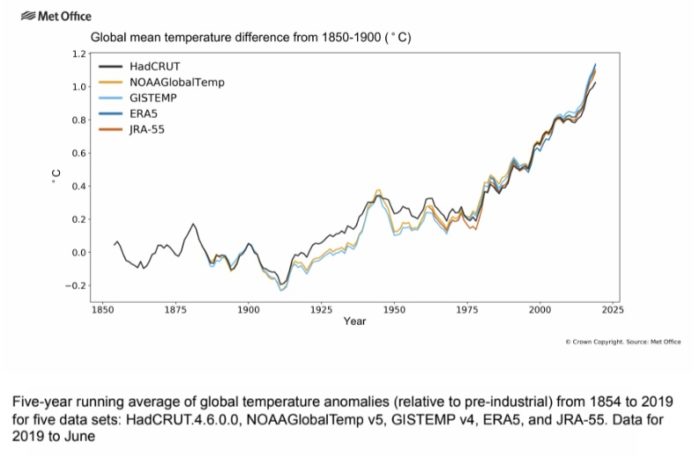

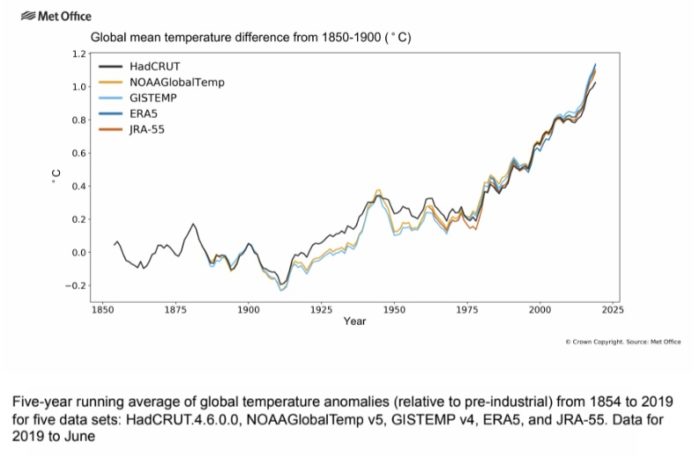

I am talking, of course, about climate change. The World Meteorological Organization (WMO) compiled data in preparation for a UN summit on climate change in New York (which the US will not, ironically, be attending). They found:

- 2014-2019 are the hottest 5 years on record

- Global temperatures have risen by 1.1 C since 1850, but 0.2 C between 2011-2015.

- CO2 release between 2014-2019 was 20% higher than the previous 5 years

- Sea level rise has been 3.2 mm per year on average since 1993, but is 5 mm per year averaged over the last five years.

- Ice loss is accelerating. For example – “The amount of ice lost annually from the Antarctic ice sheet increased at least six-fold, from 40 Gt per year in 1979-1990 to 252 Gt per year in 2009-2017.”

- Heatwaves, wildfires, and extreme weather events are increasing and causing increasing damage and costs.
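To put the acceleration in two of those bullet points into perspective, here is a minimal sketch in Python that simply does the arithmetic on the figures quoted above. The variable names are mine, chosen for illustration; the values come straight from the list and nothing else is assumed.

```python
# Figures copied from the WMO bullet points above.
ice_loss_early = 40.0      # Gt per year, Antarctic ice sheet, 1979-1990
ice_loss_recent = 252.0    # Gt per year, Antarctic ice sheet, 2009-2017
sea_rise_long_term = 3.2   # mm per year, average since 1993
sea_rise_recent = 5.0      # mm per year, averaged over the last five years

# How many times larger the recent rate is than the earlier one.
ice_fold_increase = ice_loss_recent / ice_loss_early
sea_rise_ratio = sea_rise_recent / sea_rise_long_term

print(f"Antarctic ice loss: {ice_fold_increase:.1f}-fold increase")
print(f"Sea level rise: recent rate is {sea_rise_ratio:.2f}x the long-term average")
```

The ice loss ratio works out to a bit over six, which matches the “at least six-fold” wording in the quote, and the recent rate of sea level rise is roughly one and a half times the long-term average.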

There is a decisive scientific consensus that these facts are basically accurate, that human activity is causing warming, and that the results are not going to be good. There is a growing consensus among economists that the costs of global warming will be huge, in the billions for the US alone. Even if there is still a little uncertainty, there is enough data and enough consensus to act. So what’s holding us back?

Continue Reading »

Sep 23, 2019

One experience I have had many times is with colleagues or friends who are not aware of the problem of pseudoscience in medicine. At first they are in relative denial – “it can’t be that bad.” “Homeopathy cannot possibly be that dumb!” Once I have had an opportunity to explain it to them sufficiently and they see the evidence for themselves, they go from denial to outrage. They seem to go from one extreme to the other, thinking pseudoscience is hopelessly rampant, and even feeling nihilistic.

This general phenomenon is not limited to medical pseudoscience, and I think it applies broadly. We may be unaware of a problem, but once we learn to recognize it we see it everywhere. Confirmation bias kicks in, and we initially overapply the lessons we recently learned.

I have this problem when I give skeptical lectures. I can spend an hour discussing publication bias, researcher bias, p-hacking, the statistics about error in scientific publications, and all the problems with scientific journals. At first I was a little surprised at the questions I would get, expressing overall nihilism toward science in general. I inadvertently gave the wrong impression by failing to properly balance the lecture. These are all challenges to good science, but good science can and does get done. It’s just harder than many people think.

This relates to Aristotle’s philosophy of the mean – virtue is often a balance between two extreme vices. Similarly, I find there is often a nuanced position on many topics balanced precariously between two extremes. We should neither trust science and scientists implicitly, nor dismiss all of science as hopelessly biased and flawed. Freedom of speech is critical for democracy, but that does not mean freedom from the consequences of your speech, or that everyone has a right to any venue they choose.

A recent Guardian article about our current post-truth world reminded me of this philosophy of the mean. To a certain extent, society has gone from one extreme to the other when it comes to facts, expertise, and trusting authoritative sources. This is a massive oversimplification, and of course there have always been people everywhere along this spectrum. But there does seem to have been a shift. In the pre-social media age most people obtained their news from mainstream sources that were curated and edited. Talking head experts were basically trusted, and at least the broad center had a source of shared facts from which to debate.

Continue Reading »

Sep 20, 2019

The current world population is 7.7 billion. World population will approach 10 billion by 2050. The primary limiting factor on human population is the availability of food. We are already using essentially all the available practical arable land. Expanding farmland further at this point would involve using less productive land, cutting down forests, or displacing populations. Converting ecosystems into farmland also has a huge impact on the environment and species diversity.

So how are we going to feed 10 billion people in 30 years? So far agricultural development has kept up with demand. It’s tempting to assume that such development will continue to keep up with our needs. This is the endless “peak whatever” debate – if we extrapolate any finite resource into the future, it always seems like it will run out. But historically scientific development and simple ingenuity have generally changed the game, pushing off any resource crash into the future. This has led to two schools of thought – those who argue that history will continue to repeat itself and we will indefinitely push our resources as needed, and those who argue that no matter how clever we are, finite is finite and will eventually run out.

When we are talking about food, the result of a crash in resources means mass starvation. That is how nature solves the limited food problem; animals starve and reduce the population until a natural equilibrium is reached. And you cannot say that mass starvation has never happened. In the 1960s at least 36 million people in China starved to death due to mismanagement of their agricultural system. We may be setting ourselves up for repeats of this situation. If we push our agricultural system to its limits in order to feed a growing population, does that system become increasingly vulnerable? What if a blight wipes out a staple crop, or bad weather significantly reduces yield? We may have less and less reserve or buffer in the system to handle these kinds of events.

We can talk about population control, and that is a valid approach. I mostly favor population control through lifting people out of poverty and promoting gender equality, because poverty and gender inequality are the most significant causes of overpopulation. Regardless, there is no reasonable expectation that we will stop the human population from reaching 10 billion, and it’s not clear where we will level off. We need a plan to feed this population. I reject the radical suggestion that we should keep food production where it is, or even reduce it, and let that be a natural check on population growth. This solution is mostly suggested by those with little chance of starving as a result, a burden that will mostly fall on the poor and oppressed.

Continue Reading »

Sep 19, 2019

In 2017 astronomers detected and confirmed the first interstellar object, an asteroid from another solar system racing through our own – Oumuamua. The object is long and thin, like a cigar, and appears to have some outgassing altering its trajectory. So it’s not quite a comet, but not just a rock either. We didn’t detect Oumuamua until after it had passed its closest approach to the Earth. It whipped around the sun and is now heading up out of the plane of our solar system.

We know Oumuamua is an interstellar object because it is going very fast and is on a trajectory that is not bound to the sun. There are two interesting aspects to Oumuamua. The first is that it is changing course slightly, as if it is outgassing, but we don’t see the outgassing. It is not a regular comet. The second is that its course was aimed, on a cosmic scale, right at the sun. If you look at the path (below) and extrapolate out to interstellar distances, that is a phenomenal bullseye. If you do the math, and one astronomer did, the odds against such a close encounter with an interstellar object are 100 million to one.

Oumuamua came within 0.25 AU of the sun and 0.15 AU of the Earth. If this were random, the calculation shows that stellar systems on average would have to eject 10^15 such objects – which is 100 million times more than projected. That means that our chance encounter with Oumuamua was a 1 in 100 million event – that’s like winning the interstellar lottery. This led that astronomer to speculate that perhaps Oumuamua is not a random asteroid, but a ship that was aimed at our sun. Perhaps solar sails explain the course change without visible outgassing.
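The step from “100 million times more than projected” to “1 in 100 million” can be spelled out with a few lines of Python. This is only a rough sketch of the reasoning as described above, not the astronomer’s actual calculation. It assumes, for illustration, that the chance of such an encounter scales in direct proportion to the number of objects each stellar system ejects; the variable names are mine.

```python
# Both inputs are the figures quoted in the text above.
required_objects_per_system = 1e15  # ejections needed for the encounter to be expected by chance
excess_factor = 1e8                 # "100 million times more than projected"

# The projected number of ejected objects per system implied by those two figures.
projected_objects_per_system = required_objects_per_system / excess_factor

# ASSUMPTION (illustrative): encounter probability is proportional to the number
# of objects, so the chance under the projected population is the ratio of the two.
chance_of_encounter = projected_objects_per_system / required_objects_per_system

print(f"Projected objects per system: {projected_objects_per_system:.0e}")
print(f"Chance of encounter: about 1 in {1 / chance_of_encounter:,.0f}")
```

Under that proportionality assumption the two statements are the same number seen from two directions: either there are far more such objects out there than projected, or we got very lucky.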

Continue Reading »

Sep 17, 2019

Sometimes technology is developed for a specific function. At other times, however, technology is developed simply because it is possible, and then uses are found for the technology later. Most often, however, it seems that technology is developed with some specific application in mind, but once it exists out in the world many new functions are developed. In fact the “killer app” may have nothing to do with the original purpose. This is partly why it is so difficult to predict future technology, because it is impossible to replicate the effects of a massive marketplace.

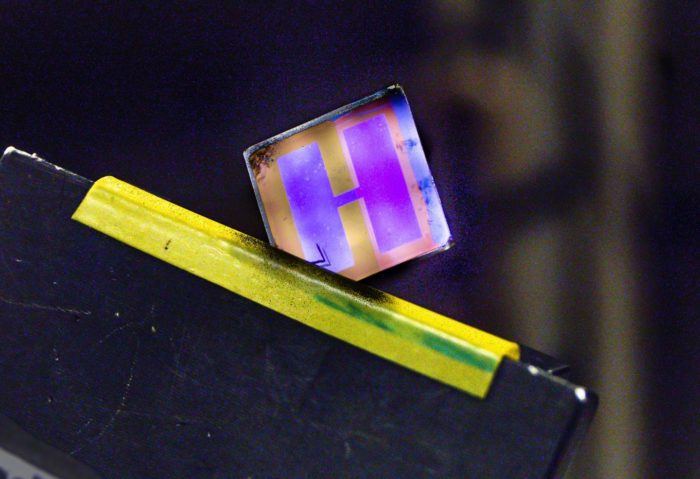

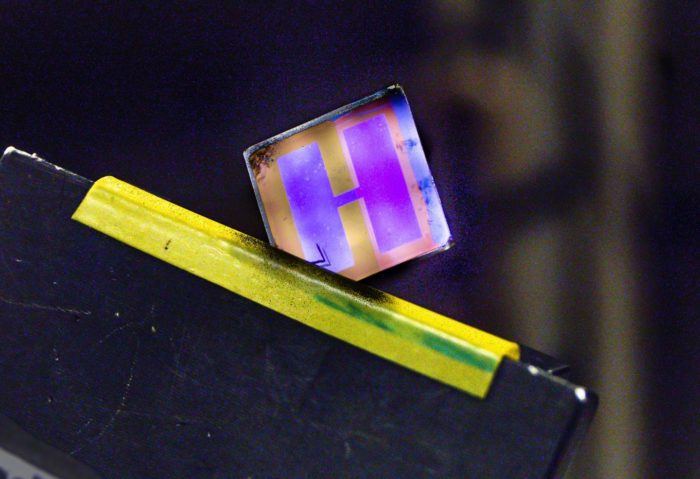

This is what I was thinking when I read about a somewhat new technology – indoor solar cells. This is actually not totally new; it’s basically organic solar cells. However, the solar cells have been optimized for the spectrum of light typical in indoor environments. There are, in fact, already indoor solar cell products on the market, but they are basically just regular solar cells sized for indoor applications. What’s innovative about these new indoor solar cells is their greater efficiency in indoor environments – the researchers claim an efficiency of 26.1%. Further:

“The organic solar cell delivered a high voltage of above 1 V for more than 1000 hours in ambient light that varied between 200 and 1000 lux. The larger solar cell still maintained an energy efficiency of 23%.”

That’s pretty interesting, but not surprising. We are still on the steep part of the curve when it comes to solar cell development. I reviewed the technology recently – there are basically three types. Silicon-based cells are the ones currently dominating the market. Thin-film cells, like perovskite, are being developed and promise to be better and cheaper, but there are still some technical hurdles to overcome. They are not yet stable for long-term use, for example.

Continue Reading »

Sep 12, 2019

I’m not a fan of the Star Trek movies reboot. While I do like the cast, and as a Trek fan I have some level of enjoyment of anything in the franchise, the movies were disappointing. As is usually the case with big budget movie failures, the problem was in the writing. Case in point – red matter. This is a mysterious substance invented by Vulcans in the future, a single drop of which could produce a singularity. It appears as a blob of red liquid. In the end it was a silly physics-breaking plot device that took you out of the movie.

I was reminded of this with recent reports of another mysterious red matter – so-called red mercury. As far as I know there is no connection between the two, and the similarity is pure coincidence. Perhaps the only connection is a psychological one. Mercury is already a fascinating substance, a metal that is liquid at room temperature. It would be fun to play with, if it weren’t so toxic. Red mercury would be an even more exotic form of this amazing element, and that is perhaps the same wonder-factor that the movie writers were going for.

In any case – as with red matter, red mercury does not actually exist. I write about a lot of things here that don’t actually exist, in order to deconstruct persistent belief in something nonexistent. People, apparently, are good at believing in things that aren’t real, a manifestation of the many flaws and limitations in our belief-generating machinery.

Beliefs can be generated and supported by a number of mechanisms – first hand experience, a phenomenon having a real effect in the world, misperception, biased memories, deliberate cons, wish fulfillment, cultural inertia, and a host of cognitive biases. There are sufficient mechanisms of belief at work to create and sustain belief in something without any basis in objective reality. Every culture, in fact, is overwhelmed with such beliefs.

Continue Reading »

Sep 10, 2019

Our brains are constantly assailed by sensory stimuli. Sound, in particular, may bombard us from every direction, depending on the environment. That is a lot of information for brains to process, and so mammalian brains evolved mechanisms to filter out stimuli that are less likely to be useful. As our understanding of these mechanisms has become more sophisticated it has become clear that the brain is operating at multiple levels simultaneously.

A recent study both highlights these insights and gives a surprising result about one mechanism for auditory processing. Neuroscientists have long known about auditory sensory gating (ASG) – if the brain is exposed to identical sounds close together, the response to subsequent sounds is significantly reduced. This fits with the general observation that the brain responds more to new stimuli and changes in stimuli, and quickly becomes tolerant to repeated stimuli. This is just one way to filter out background noise and pay attention to the bits that are most likely to be important.

Further, for ASG specifically, it has been observed that schizophrenics lack this filter. You can even diagnose schizophrenia partly by doing what is called a P50 test – you give two identical auditory stimuli 500ms apart, and then measure the response in the auditory part of the brain. In typical people (and mice and other mammals) there is a significant (60% or more) reduction in the response to the second sound. In some patients with schizophrenia, this reduction does not occur.
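The comparison at the heart of the P50 test is simple arithmetic, and a short Python sketch may make it concrete. This is an illustration only, not a clinical tool: the function names and the example amplitudes are hypothetical, and the 0.6 threshold is just the “60% or more” figure from the paragraph above.

```python
def gating_reduction(first_response: float, second_response: float) -> float:
    """Return the fractional reduction of the second response relative to the first.

    Both arguments are response amplitudes in the same (arbitrary) units.
    """
    if first_response <= 0:
        raise ValueError("first_response must be positive")
    return 1.0 - second_response / first_response


def shows_typical_gating(first_response: float, second_response: float,
                         threshold: float = 0.6) -> bool:
    """True if the second response is reduced by at least `threshold`.

    The default threshold of 0.6 is taken from the text above; it is an
    illustrative cutoff, not a validated diagnostic criterion.
    """
    return gating_reduction(first_response, second_response) >= threshold


# Hypothetical amplitudes, made up for the example.
print(shows_typical_gating(10.0, 3.0))  # second response strongly suppressed
print(shows_typical_gating(10.0, 9.0))  # second response barely suppressed
```

With these made-up numbers the first pair shows a 70% reduction and passes, while the second shows only a 10% reduction and does not.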

In fact researchers have identified a genetic mutation, the 22q11 deletion syndrome, that can be associated with a higher risk of schizophrenia and a failure of ASG. Reduced ASG may be the cause of some symptoms in these patients with schizophrenia, but is also clearly not the whole picture. It’s common for a single mutation in a gene that contributes to brain development or function to result in a host of changes to ultimate brain function.

Continue Reading »
