Dec 15 2023

Solution Aversion Fallacy

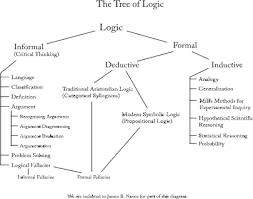

I like to think deeply about informal logical fallacies. I write about them a lot, and even have an occasional segment of the SGU dedicated to them. They are a great way to crystallize our thinking about the many ways in which logic can go wrong. Formal logic deals with arguments that are always true, by their very construction. “If A=B and B=C then A=C” is always true. Informal logical fallacies, on the other hand, are context dependent. They are a great way to police the sharpness of arguments, but they require a lot of context-dependent judgement.

For example, the Argument from Authority fallacy recognizes that a fact claim is not necessarily true just because an authority states it, but it could be true. And recognizing meaningful authority is useful; it’s just not absolute. It’s more a matter of probability and the weight of opinion. But authority itself does not render a claim true or false. The same is true of the Argument ad Absurdum, or the Slippery Slope fallacy – they are about taking an argument to an unjustified extreme. But what’s “extreme”? This subjectivity might cause one to think that they are therefore not legitimate logical fallacies at all, but that’s just the False Continuum logical fallacy – the notion that if there is not a sharp demarcation line somewhere along a spectrum, then we can ignore or deny the extremes of the spectrum.

I recently received the following question about a potential informal fallacy:

I’ve noticed this more and more with politics. Party A proposes a ludicrous solution to an issue. Party B objects to the policy. Party A then accuse Party B of being in favour of the issue. It’s happening in the immigration debate in the UK where the government are trying to deport asylum seekers to Rwanda (current capacity around 200 per year) in order to solve our “record levels of immigration” (over 700,000 this year of which less than 50,000 are asylum seekers). When you object to the policy you are accused of being in support of unlimited immigration.

This feels like something wider, that may even be a logical fallacy, but I’ve not been able to locate anything describing it. I can imagine it comes up in Skepticism relatively often.

When trying to define a potential fallacy we need to first acknowledge the lumper/splitter problem. This comes up with many attempts at categorization – how finely do we chop up the categories? Do we have big categories with lots of variation within each type, or do we split them up by every small nuanced difference? In medicine, for example, this comes up all the time in terms of naming diseases. Is ALS one disease? If there are subtypes, how do we break them up? How big a difference is enough to justify a new designation?

The same is true of the informal logical fallacies. We can have very general types of fallacies, or split them up into specific variations. I tend to remain agnostic on this particular debate and embrace both approaches. I like to start as big as possible and then focus in – so I both lump and then split. I find it helps me think about the core problem with any particular argument. I fit what the e-mailer is describing above into a category that includes the basic strategy of saying something unfairly negative about a position you want to argue against, and then falsely concluding that the position must be wrong, or at least is unsavory. I tend to use the term “Strawman Fallacy” to refer generically to this strategy – trying to argue against an unfairly weakened version of a position.

But there are variations that are worth noting. Poisoning the Well is very similar – attaching the position to another position, or an ideology or even a specific person that is wrong or unpopular. One version of this is so common it has received its own name – the Argument ad Hitlerum (now that’s splitting). Well, you believe that, and so did Hitler, so, you know, there’s that.

The Argument ad Absurdum may also fall under the Strawman category. This is when you take a position and extrapolate it to the extreme. You believe in free speech? Well, what if someone constantly threatened to murder you and your family in horrific ways, is that speech protected? This is a legitimate way to stress-test any position. But this strategy can also be perverted. You can say – you are in favor of free speech, therefore you think that fraud and violence are OK. Or – you want to lower the drinking age to 18? What’s next, allowing 10-year-olds to drive?

What we have above, saying that if you oppose a specific proposed solution you must therefore support the identified “problem” or not think it’s a big deal, is a type of Strawman fallacy. I don’t think it’s an Argument ad Absurdum, however, although it can have that flavor. It may be its own subtype of Strawman. We encounter two versions of this – one has already been recognized as “solution aversion” – if you don’t like the solution, then you deny the problem. Or, you use a strawman version of a proposed solution to argue that the problem is not real or serious. We see this a lot with global warming. The “Libs” just want to take over industry and stop everyone from eating steak and driving cars, therefore global warming isn’t real.

This is more of a “solution strawman” – as the e-mailer says, you don’t like my solution, then you must support the problem. You don’t think we should have armed police officers on every street corner? You must not care about crime. You are “pro-crime”. This type of argument is extremely common in politics, and I think it is mostly deliberate. This is what politicians consider “spin”, and it’s a deliberate strategy, a way of painting your opponents in the most negative light possible. It really becomes a fallacy when partisan members of the public internalize the argument as if it were valid.

We also have terms for when a logical fallacy is raised to extreme levels. When a series of strawman fallacies and poisoning the well strategies are deployed against a person or group, we call that “demonizing” or creating a “cardboard villain”. Recently another term has become popular – when there is an organized campaign of deploying multiple fallacies combined with misinformation and disinformation to create an entire false reality, we call that “gaslighting”.

It’s a shame we need to have terms for all of these things, but understanding them is the first line of defense against them. Most importantly of all, we need to recognize them in ourselves, so that we don’t unknowingly fall into them. But knowledge cuts both ways – it also gives some people a greater ability to deliberately deploy these fallacies as a political strategy. All the more reason we must recognize and defend against them.