Nov 10 2020

Pre-Bunking Game

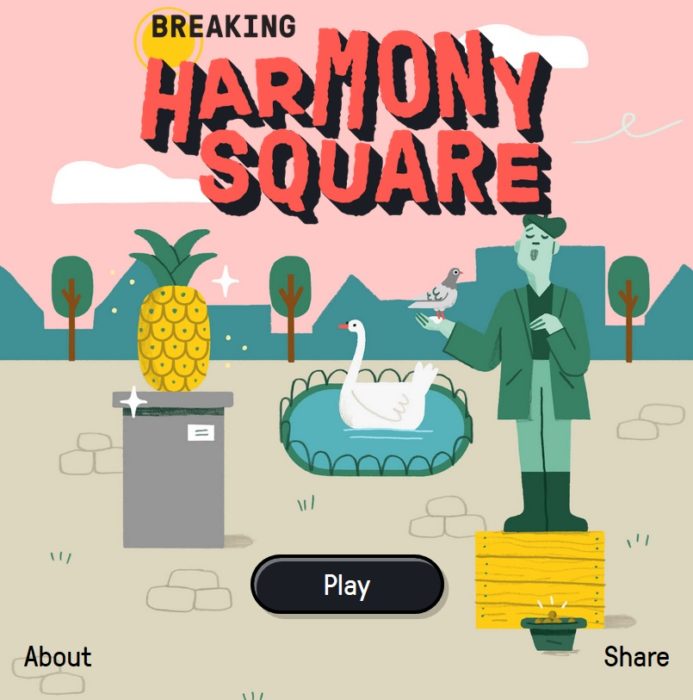

A new game called Harmony Square was released today. The game hires you, the player, as a Chief Disinformation Officer, and then walks you through a campaign to cause political chaos in this otherwise placid town. The game is based upon research showing that exposing people to the tactics of disinformation “inoculates” them against similar tactics in the real world. The study showed, among other things, that susceptibility to fake news headlines declined by 21% after playing the game. Here is the full abstract:

> The spread of online misinformation poses serious challenges to societies worldwide. In a novel attempt to address this issue, we designed a psychological intervention in the form of an online browser game. In the game, players take on the role of a fake news producer and learn to master six documented techniques commonly used in the production of misinformation: polarisation, invoking emotions, spreading conspiracy theories, trolling people online, deflecting blame, and impersonating fake accounts. The game draws on an inoculation metaphor, where preemptively exposing, warning, and familiarising people with the strategies used in the production of fake news helps confer cognitive immunity when exposed to real misinformation. We conducted a large-scale evaluation of the game with N = 15,000 participants in a pre-post gameplay design. We provide initial evidence that people’s ability to spot and resist misinformation improves after gameplay, irrespective of education, age, political ideology, and cognitive style.

While these results are encouraging, I think there are some caveats to the current incarnations of this approach. But first, let me say that I think the concept is solid. The best way to understand mechanisms of deception and manipulation is to learn how to do them yourself. This is similar to the old adage – you can’t con a con artist. I think “can’t” is a little strong, but the idea is that someone familiar with con artist techniques is more likely to spot them in others. Along similar lines, there is a strong tradition of skepticism among professional magicians. They know how to deceive, and will spot others using similar deceptive techniques. (The famous rivalry between James Randi and Uri Geller is a good example of this.)

The “pre-bunking” technique is essentially training the player to become a troll in the hopes that they will be less susceptible to trolling techniques themselves. Anyone who spends time on social media will likely recognize the techniques outlined in the abstract, but still it is helpful to have them in the front of your mind when reading comments or Facebook posts. I also agree that it is much more effective to give people the critical thinking and media savvy skills ahead of time rather than trying to disabuse them of a position they already hold. It is very clear in the psychological literature that people cling to existing beliefs – you need to prepare them to recognize and reject false ideas themselves.

But here come the caveats. First, in terms of the effects seen in these studies, previous research on similar effects has shown that anytime you encourage people to think about whether or not something is true, you enhance their skepticism. Even if you just say to people – “what I am about to tell you is absolutely true” – you put into their mind the question of truth. Therefore, there is likely to be a non-specific effect from the context of the study itself, and the results may not reflect a specific effect of the pre-bunking techniques the researchers are using.

Further, is learning to recognize common techniques of misinformation (media savvy) enough? It’s probably better than not recognizing them, but in order to be effective it may need to be combined with certain critical thinking skills, such as really understanding confirmation bias and motivated reasoning. The same goes for scientific literacy – it is certainly necessary to deal with scientific controversies, but it’s not enough. Motivated reasoning will overwhelm scientific literacy when it comes to acceptance of certain positions. In fact, the more scientifically literate people are, the more sophisticated they become in defending their position, even if it is an objectively wrong position that they hold for ideological reasons.

Finally, what typically happens when people learn basic skills like this is that they use them more as a weapon, to criticize other people, than as a tool to police their own thinking and behavior. This can still be useful, to point out when someone is using a common trolling technique, but it needs to be part of a larger effort to create a culture of high standards of communication. Instead, I see it used as just another way of trolling (ironically) – addressing the form of someone’s comment rather than the content. Also, you can’t teach someone to recognize trolling techniques without teaching them how to engage in those techniques. In fact, that is the exact design of the game. How do the researchers know that in the real world people who play that game won’t use their knowledge for evil rather than good? You are literally teaching them how to be more effective trolls.

I see this as an interesting baby step which may have utility, but which by itself is unlikely to have a significant positive impact on the culture. If you look at the totality of research, and this conforms to my personal experience, people need not only media savvy, but also scientific literacy and critical thinking skills to have real resistance to misinformation and deception. And these skills need to be fairly advanced. But the skills themselves are also not enough. They have to be combined with a genuine desire to believe only what is true, and with the willingness and humility to acknowledge that what you currently believe may be wrong (and is almost certainly incomplete). There has to be a genuine dedication to process and validity that is greater than the desire to maintain a certain position, even if that position is closely tied to your ideology or identity.