Nov 16, 2023

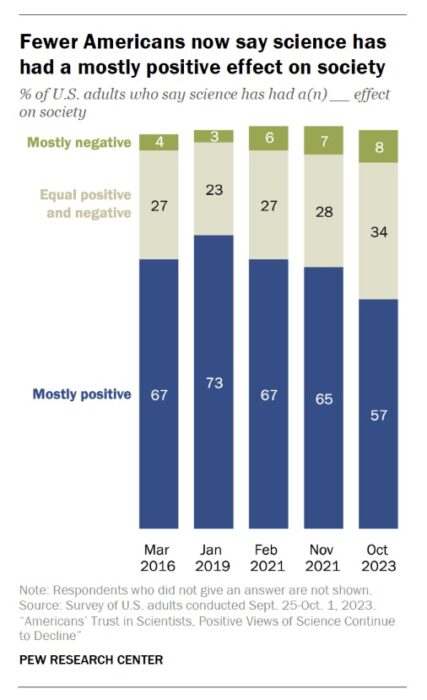

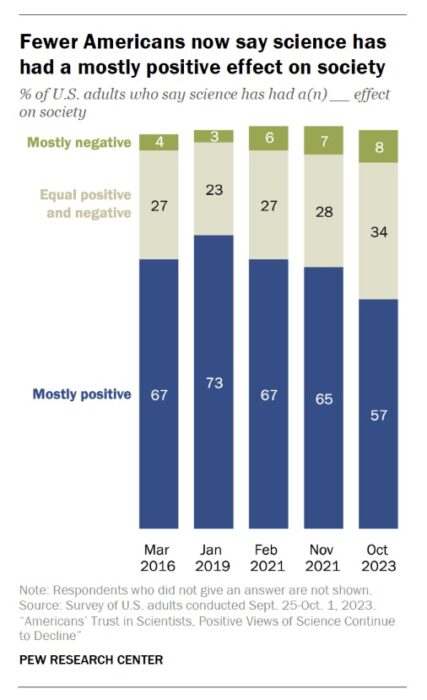

How much does the public trust science and scientists? Well, there’s some good news and some bad news. Let’s start with the bad news: a recent Pew survey finds that trust in scientists has been declining for the last few years. From its recent peak in 2019, the share who say that science has a mostly positive effect on society has decreased from 73% to 57%. The share who say it has a mostly negative effect has increased from 3% to 8%. The share who trust scientists a fair amount or a great deal has decreased from 86% to 73%. The share who think that scientific investments are worthwhile remains strong at 78%.

The good news is that these numbers are relatively high compared to other institutions and professions. Science and scientists still remain among the most respected professions, behind the military, teachers, and medical doctors, and way above journalists, clergy, business executives, and lawyers. So overall a majority of Americans feel that science and scientists are trustworthy, valuable, and a worthwhile investment.

But we need to pay attention to early warning signs that this respect may be in jeopardy. If we get to the point that a majority of the public does not feel that investment in research is worthwhile, or that the findings of science can be trusted, that is a recipe for disaster. In the modern world, such a society is likely not sustainable, certainly not as a stable democracy and economic leader. It’s worthwhile, therefore, to dig deeper into what might be behind the recent dip in numbers.

It’s worth pointing out some caveats. Surveys are always tricky, and the results depend heavily on how questions are asked. For example, if you ask people whether they trust “doctors” in the abstract, the number is typically lower than if you ask them whether they trust their own doctor. People tend to separate their personal experience from what they think is going on generally in society, and are perhaps too willing to dismiss their own evidence as “exceptions.” If they were favoring data over personal anecdote, that would be fine. But they are often favoring rumor, fearmongering, and sensationalism. Surveys like this, therefore, often reflect the public mood rather than deeply held beliefs.

Jan 26, 2023

Grand conspiracy theories are a curious thing. What would lead someone to readily believe that the world is secretly run by evil supervillains? Belief in conspiracies correlates with feelings of helplessness, which suggests that some people would rather believe that an evil villain is secretly in control than the more prosaic reality that no one is in control and we live in a complex and chaotic universe.

The COVID-19 pandemic provided a natural experiment to see how conspiracy belief reacted and spread. A recent study examines this phenomenon by tracking tweets and other social media posts relating to COVID conspiracy theories. The researchers’ primary method was to identify specific content types within the tweets and correlate them with how quickly and how far those tweets were shared across the network.

The researchers identified nine features of COVID-related conspiracy tweets: malicious purposes, secretive action, statement of belief, attempt at authentication (such as linking to a source), directive (asking the reader to take action), rhetorical question, who are the conspirators, methods of secrecy, and conditions under which the conspiracy theory is proposed (I got COVID from that 5G tower near my home). They also propose a breakdown of different types of conspiracies: conspiracy sub-narrative, issue specific, villain-based, and mega-conspiracy.

Feb 7, 2022

The latest controversy over Joe Rogan and Spotify is a symptom of a long-standing trend, exacerbated by social media but not caused by it. The problem is with the algorithms used by media outlets to determine what to include on their platform.

The quick summary is that Joe Rogan’s podcast is the most popular podcast in the world with millions of listeners. Rogan follows a long interview format, and he is sometimes criticized for having on guests that promote pseudoscience or misinformation, for not holding them to account, or for promoting misinformation himself. In particular he has come under fire for spreading dangerous COVID misinformation during a health crisis, specifically his interview with Dr. Malone. In an open letter to Rogan’s podcast host, Spotify, health experts wrote:

“With an estimated 11 million listeners per episode, JRE, which is hosted exclusively on Spotify, is the world’s largest podcast and has tremendous influence,” the letter reads. “Spotify has a responsibility to mitigate the spread of misinformation on its platform, though the company presently has no misinformation policy.”

Then Neil Young gave Spotify an ultimatum – either Rogan goes, or he goes. Spotify did not respond, leading Young to pull his entire catalog of music from the platform. Other artists have also joined the boycott. This entire episode has prompted yet another round of discussion over censorship and the responsibility of media platforms, outlets, and content producers. Rogan himself produced a video to explain his position. The video is definitively not an apology or even an attempt at one. In it Rogan makes two core points. The first is that he himself is not an expert of any kind, and therefore should not be held responsible for the scientific accuracy of what he says or the questions he asks. The second is that his goal with the podcast is simply to interview interesting people. Rogan has long used these two points to absolve himself of any journalistic responsibility, so this is nothing new. He did muddy the waters a little when he went on to say that maybe he could research his interviewees more thoroughly to ask better-informed questions, but this was presented as more of an afterthought. He stands by his core justifications.

Aug 18, 2020

This is one of those things that futurists did not predict at all, but now seems obvious and unavoidable – the degree to which computer algorithms affect your life. It’s always hard to make negative statements, and they have to be qualified – but I am not aware of any pre-2000 science fiction or futurism that even discussed the role of social media algorithms or other informational algorithms on society and culture (as always, let me know if I’m missing something). But in a very short period of time they have become a major challenge for many societies. It also is now easy to imagine how computer algorithms will be a dominant topic in the future. People will likely debate their role, who controls them and who should control them, and what regulations, if any, should be put in place.

The worse outcome is if this doesn’t happen, meaning that people are not aware of the role of algorithms in their life and who controls them. That is essentially what is happening in China and other authoritarian nations. Social media algorithms are an authoritarian’s dream – they give them incredible power to control what people see, what information they get exposed to, and to some extent what they think. This is 1984 on steroids. Orwell imagined that in order to control what and how people think authoritarians would control language (double-plus good). Constrain language and you constrain thought. That was an interesting idea pre-web and pre-social media. Now computer algorithms can control the flow of information, and by extension what people know and think, seamlessly, invisibly, and powerfully to a scary degree.

Even in open democratic societies, however, the invisible hand of computer algorithms can wreak havoc. Social scientists studying this phenomenon are increasingly sounding warning bells. A recent example is an anti-extremist group in the UK that is now warning, based on its research, that Facebook algorithms are actively promoting Holocaust denial and other conspiracy theories. The group found, unsurprisingly, that visitors to Facebook pages that deny the Holocaust were then referred to other pages that also deny the Holocaust. This in turn leads to other conspiracies that refer to still other conspiracy content, and down the rabbit hole you go.

Sep 23, 2019

One experience I have had many times is with colleagues or friends who are not aware of the problem of pseudoscience in medicine. At first they are in relative denial – “it can’t be that bad.” “Homeopathy cannot possibly be that dumb!” Once I have had an opportunity to explain it to them sufficiently and they see the evidence for themselves, they go from denial to outrage. They seem to go from one extreme to the other, thinking pseudoscience is hopelessly rampant, and even feeling nihilistic.

This general phenomenon is not limited to medical pseudoscience, and I think it applies broadly. We may be unaware of a problem, but once we learn to recognize it we see it everywhere. Confirmation bias kicks in, and we initially overapply the lessons we recently learned.

I have this problem when I give skeptical lectures. I can spend an hour discussing publication bias, researcher bias, p-hacking, the statistics about error in scientific publications, and all the problems with scientific journals. At first I was a little surprised at the questions I would get, expressing overall nihilism toward science in general. I inadvertently gave the wrong impression by failing to properly balance the lecture. These are all challenges to good science, but good science can and does get done. It’s just harder than many people think.

This relates to Aristotle’s philosophy of the mean – virtue is often a balance between two extreme vices. Similarly, I find there is often a nuanced position on many topics, balanced precariously between two extremes. We should neither trust science and scientists implicitly, nor dismiss all of science as hopelessly biased and flawed. Freedom of speech is critical for democracy, but that does not mean freedom from the consequences of your speech, or that everyone has a right to any venue they choose.

A recent Guardian article about our current post-truth world reminded me of this philosophy of the mean. To a certain extent, society has gone from one extreme to the other when it comes to facts, expertise, and trusting authoritative sources. This is a massive oversimplification, and of course there have always been people everywhere along this spectrum. But there does seem to have been a shift. In the pre-social media age most people obtained their news from mainstream sources that were curated and edited. Talking head experts were basically trusted, and at least the broad center had a source of shared facts from which to debate.

Aug 12, 2019

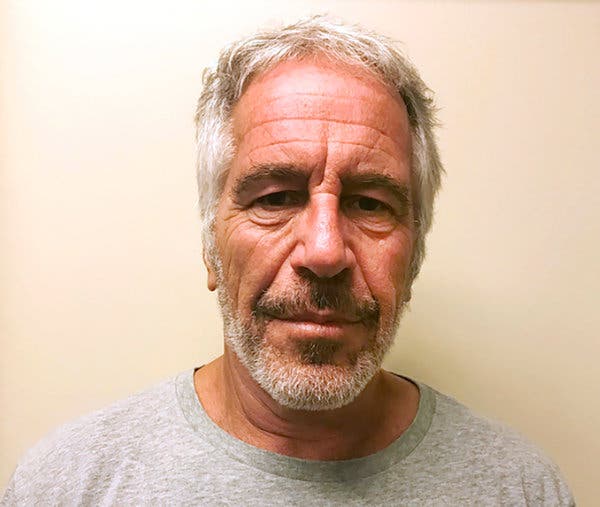

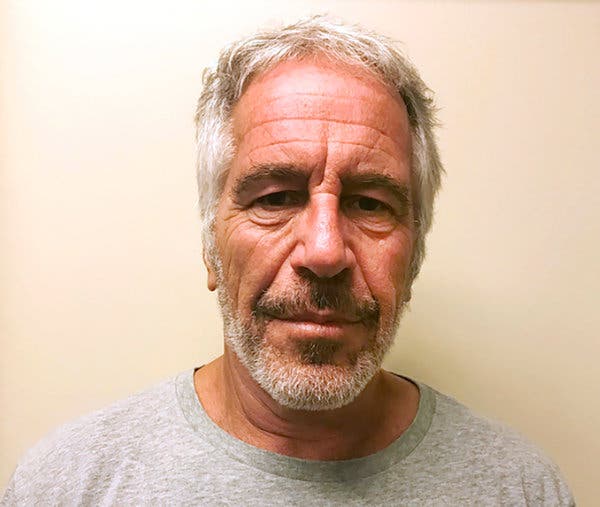

This was highly predictable. Of course there are conspiracies surrounding the apparent recent suicide of Jeffrey Epstein while in prison. That’s just background noise now. There are conspiracies about everything. Apparently the two shootings last weekend were false flag operations, because #conspiracies.

Just as predictably, news about the conspiracy theories, how they spread, and how they are treated by the media is itself news. And yes I get the irony that here I am blogging about it. It’s turtles all the way down. I guess in order to have something interesting to say I have to get one level more meta than everyone else – does that do it? Getting meta about being meta?

By now this phenomenon is old news. The traditional editorial filters are no longer in place. They have largely been replaced by algorithms which determine which news items are “trending.” This becomes a self-reinforcing feedback loop that allows the worst information to spread. Of course, this has always happened. Sensationalism and propaganda spread because they are interesting. They break up the mundane monotony of our lives. They are a real-life soap opera.

Jonathan Swift observed in 1710, “Falsehood flies, and the Truth comes limping after it.” There are also several versions of “A lie can travel halfway around the world while the truth is putting on its shoes.” The source of this quote, often mistakenly attributed to Mark Twain, is unclear. The point is that the inherent advantage false but sensational information has in the human mind over prosaic truth has long been observed. And that, of course, is the ultimate medium: the human mind. The external method of spreading false information is incidental to the core phenomenon, but it can influence how quickly such information spreads and how much credibility it is given.

Oct 22, 2018

In testimony before Congress, Mark Zuckerberg predicted that artificially intelligent algorithms able to filter out “fake news” will be available in 5-10 years. In a recent NYT editorial, neuroscientist Gary Marcus and computer scientist Ernest Davis argue that this prediction is overly optimistic. I think both sides have a point, so let’s dissect their arguments a bit.

Fake news is the recently popular term for deliberate and often strategic propaganda masquerading as real news. There is, of course, a continuum from rigorous and fair journalism to 100% fakery, with lots of biased, ideological, and simply lazy reporting along the way. This has always existed, but seems to be on the rise due to the ease of spread through social media. Accusations of fake news have also been on the rise, as a strategy for dismissing any news that is unwanted or inconvenient.

Obviously this is a deep social issue that will not be solved by any computer algorithm. I would argue the only real solution is to foster a well-educated electorate with the critical thinking skills to sort real news from fake. That is a worthwhile long-term goal, but even if it succeeds there will always be a problem with fake news, regardless of the medium through which it spreads.

The real context here is the role that social media plays in spreading fake news. Zuckerberg, the creator of Facebook, is involved because Facebook and other social media platforms have become a main source of news for many people. Further, they represent a new model for pairing people with the news they want.

The traditional model is to build respected news outlets that cultivate a reputation for quality and have a heavy editorial policy that filters the news. People then go to news outlets they respect and get whatever news is fed to them, perhaps divided into sections of interest. But even this model has always been corrupted by ideologically biased editorial policy and by sensationalism. You can attract eyes not only through well-earned respect for providing quality, but also with sensational headlines, or by catering to preexisting worldviews.

Mar 9, 2018

The battle between truth and fiction is asymmetrical. That has long seemed to be the case, but now we have some empirical evidence to back up this conclusion. In a recent study, researchers report:

To understand how false news spreads, Vosoughi et al. used a data set of rumor cascades on Twitter from 2006 to 2017. About 126,000 rumors were spread by ∼3 million people. False news reached more people than the truth; the top 1% of false news cascades diffused to between 1000 and 100,000 people, whereas the truth rarely diffused to more than 1000 people. Falsehood also diffused faster than the truth. The degree of novelty and the emotional reactions of recipients may be responsible for the differences observed.

This reflects the inherent asymmetry. Factual information is constrained by reality. You can also look at it this way: factual information is optimized to be true and accurate, while false information is not constrained by reality and can be optimized to evoke an emotional reaction, to tell a good story, and to create drama.

We see this in many contexts. In medicine, the rise of so-called alternative medicine has been greatly aided by the fact that alternative practitioners can tell patients what they want to hear. They can craft their diagnoses and treatments for optimal marketing, rather than optimal outcomes.

Apr 7, 2017

Pierre Omidyar, founder of eBay, is one of the world’s richest men. He recently announced that his philanthropic investment firm will dedicate $100 million to combating the “global trust deficit.” By this he means the current lack of trust in information and institutions born of the age of misinformation, fake news, and alternative facts.

I agree that this is a phenomenon that needs to be studied and tackled, but I hope that he is just getting started with the $100 million, because it’s going to take a lot more than that. I also don’t think we can rely on a few philanthropists to fix this problem.

As an aside, I find it historically interesting that the internet boom led to a crop of very young, very rich people, not only Omidyar but also Bezos, Musk, Zuckerberg, and others. Omidyar notes that:

“We sort of skipped the ‘regular rich’ and we went straight to ‘ridiculous rich’,” he said of his overnight fortune.

“I had the notion that, okay, so now we have all of this wealth, we could buy not only one expensive car, we could buy all of them. As soon as you realise that you could buy all of them, none of them are particularly interesting or satisfying.”

So we have a crop of young bored billionaires looking to change the world. I think that’s cool. I hope they succeed.

Feb 23, 2017

It appears that Google has removed all Natural News content from their indexing. This means that Natural News pages will not appear in organic Google searches.

This is big news for skeptics, but it is also complicated and sure to spark vigorous discussion.

For those who may not know, Mike Adams, who runs Natural News, is a crank conspiracy theorist supreme. He hawks snake oil on his site, marketing it partly by spreading the worst medical misinformation on the net. He also routinely attacks his critics personally. He has launched a smear campaign against my colleague David Gorski, for example.

A few years ago Adams put up a post in which he listed people who publicly support the science of GMOs, comparing them to Nazis and arguing that it would be ethical (even a moral obligation) to kill them. So he essentially made a kill list for his conspiracy-addled followers. Mine was one of the names on that list, as were those of other journalists and science communicators.

In short, Adams is a dangerous loon spreading misinformation and harmful conspiracy theories in order to sell snake oil, and he will smear and threaten those who call him out. He is an active menace to public health.

Adams is a good example of the dark underbelly of social media. It makes it possible to build a massive empire out of click-bait and sensationalism.
