Feb 12, 2024

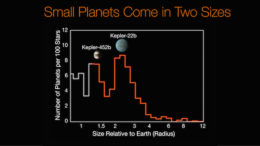

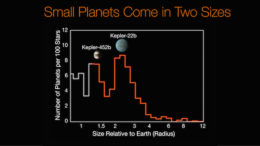

As of this writing, there are 5,573 confirmed exoplanets in 4,146 planetary systems. That is enough exoplanets, planets around stars other than our own sun, that we can do some statistics to describe what’s out there. One curious pattern that has emerged is a relative gap in the radii of exoplanets between 1.5 and 2.0 Earth radii. What is the significance, if any, of this gap?

First we have to consider whether this is an artifact of our detection methods. The most common method astronomers use to detect exoplanets is the transit method – carefully observe a star over time, precisely measuring its brightness. If a planet moves in front of the star, the brightness will dip, remain low while the planet transits, and then return to its baseline brightness. This produces a classic light curve that astronomers recognize as a planet orbiting that star in the plane of observation from the Earth. The first time such a dip is observed, that is a suspected exoplanet, and if the same dip is seen again, that confirms it. This also gives us the orbital period. This method is biased toward exoplanets with short periods, because they are easier to confirm. If an exoplanet has a period of 60 years, that would take 60 years to confirm, so we haven’t confirmed a lot of those.
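To get a feel for how small these dips are, here is a rough sketch of the standard transit-depth relation: the fractional dimming is approximately the ratio of the planet's disk area to the star's, (Rp/Rs)^2. The sun-like host star is my assumption for illustration, and the function name is made up for this example; real light curves also involve limb darkening and grazing geometries that this ignores.

```python
# Reference radii in kilometres (standard approximate values).
R_SUN_KM = 695_700.0
R_EARTH_KM = 6_371.0


def transit_depth(planet_radius_earths: float, star_radius_suns: float = 1.0) -> float:
    """Fractional drop in brightness while the planet crosses the stellar disk.

    Simplified: treats the star as a uniformly bright disk, fully covered
    by the planet's silhouette (no limb darkening, no grazing transits).
    """
    planet_km = planet_radius_earths * R_EARTH_KM
    star_km = star_radius_suns * R_SUN_KM
    return (planet_km / star_km) ** 2


# Compare an Earth-sized planet with the two edges of the radius gap,
# all around an assumed sun-like star.
for radius in (1.0, 1.5, 2.0):
    depth_ppm = transit_depth(radius) * 1e6
    print(f"{radius:.1f} Earth radii -> dip of about {depth_ppm:.0f} parts per million")
```

Because the depth scales with the square of the radius, a planet at 2.0 Earth radii produces a dip four times deeper than an Earth-sized one. If anything, then, the transit method should find planets in the gap more easily than the smaller ones below it.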

There is also the wobble method. We can observe the path that a star takes through the sky. If that path wobbles in a regular pattern, that is likely due to the gravitational tug from a large planet or other dark companion that is orbiting it. This method favors more massive planets closer to their parent star. Sometimes we can also directly observe exoplanets by blocking out their parent star and seeing the tiny bit of reflected light from the planet. This method favors large planets distant from their parent star. There are also a small number of exoplanets discovered through gravitational microlensing, an effect of general relativity.

None of these methods, however, explains the gap between 1.5 and 2.0 Earth radii. It’s also likely not a statistical fluke given the number of exoplanets we have discovered. Therefore it may be telling us something about planetary evolution. But there are lots of variables that determine the size of an exoplanet, so it can be difficult to pin down a single explanation.

Continue Reading »

Jan 12, 2024

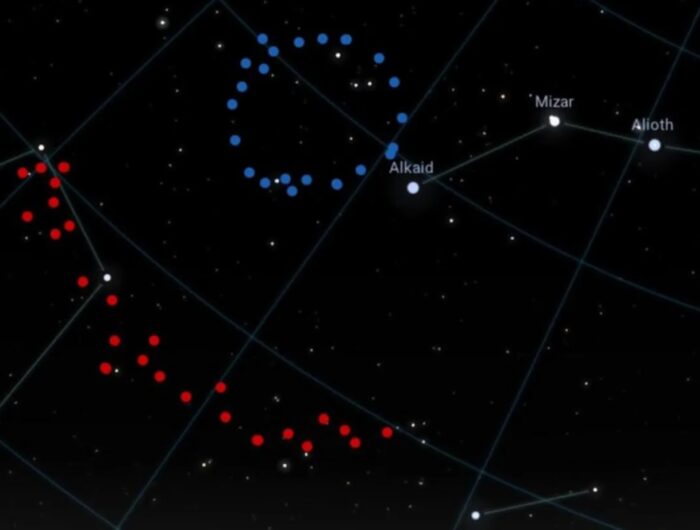

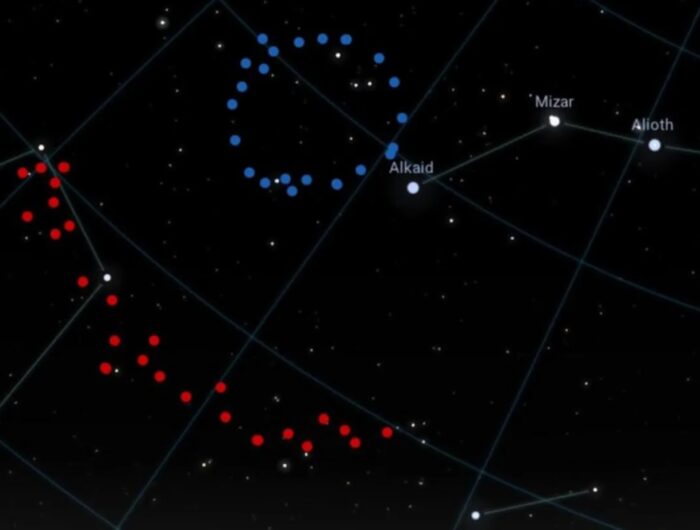

University of Central Lancashire (UCLan) PhD student Alexia Lopez, who two years ago discovered a giant arc of galaxy clusters in the distant universe, has now discovered a Big Ring. This (if real) is one of the largest structures in the observable universe at 1.3 billion light years in diameter. The problem is – such a large structure should not be possible based on current cosmological theory. It violates what is known as the Cosmological Principle (CP), the notion that at the largest scales the universe is uniform with evenly distributed matter.

The CP actually has two components. One is called isotropy, which means that if you look in any direction in the universe, the distribution of matter should be the same. The other component is homogeneity, which means that wherever you are in the universe, the distribution of matter should be smooth. Of course, this is only true beyond a certain scale. At small scale, like within a galaxy or even galaxy cluster, matter is not evenly distributed, and it does matter which direction you look. But at some point in scale, isotropy and homogeneity are the rule. Another way to look at this is – there is an upper limit to the size of any structure in the universe. The Giant Arc and Big Ring are both too big. If the CP is correct, they should not exist. There are also a handful of other giant structures in the universe, so these are not the first to violate the CP.

The Big Ring is just that, a two-dimensional structure in the shape of a near-perfect ring facing Earth (likely not a coincidence but rather the reason it was discoverable from Earth). Alexia Lopez later discovered that the ring is actually a corkscrew shape. The Giant Arc is just that, the arc of a circle. Interestingly, it is in the same region of space and the same distance as the Big Ring, so the two structures exist at the same time and place. This suggests they may be part of an even bigger structure.

How certain are we that these structures are real, and not just a coincidence? Professor Don Pollacco, of the department of physics at the University of Warwick, said the probability of this being a statistical fluke is “vanishingly small”. But still, it seems premature to hang our hat on these observations just yet. I would like to see some replications and attempts at poking holes in Lopez’s conclusions. That is the normal process of science, and it takes time to play out. But so far, it seems like solid work.

Continue Reading »

Jan 04, 2024

This is one of the biggest thought experiments in science today – as we look for life elsewhere in the universe, what should we be looking for, exactly? Other stellar systems are too far away to examine directly, and even our most powerful telescopes can only resolve points of light. So how do we tell if there is life on a distant exoplanet? Also, how could we detect a distant technological civilization?

Here is where the thought experiment comes in. We know what life on Earth is like, and we know what human technology is like, so obviously we can search for other examples of what we already know. But the question is – how might life different from life on Earth be detected? What are the possible signatures of a planet covered in living things that perhaps look nothing like life on Earth? Similarly, what alien technologies might theoretically exist, and how could we detect them?

A recent paper explores this question from one particular angle – are there conditions on a planet that are necessary for the development of technology? The authors hypothesize that there is an “oxygen bottleneck”, a minimum concentration of oxygen in the atmosphere of a planet that is necessary for the development of advanced technology. Specifically, they argue that open-air combustion, which requires an oxygen partial pressure (PO2) of ≥ 18% (it’s about 21% on Earth), is necessary for fire and metallurgy, and that these are necessary stepping stones on the path to advanced technology.

Continue Reading »

Dec 19, 2023

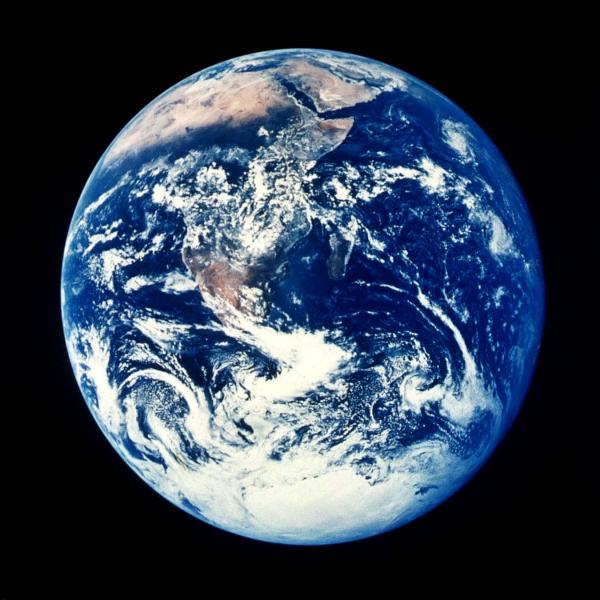

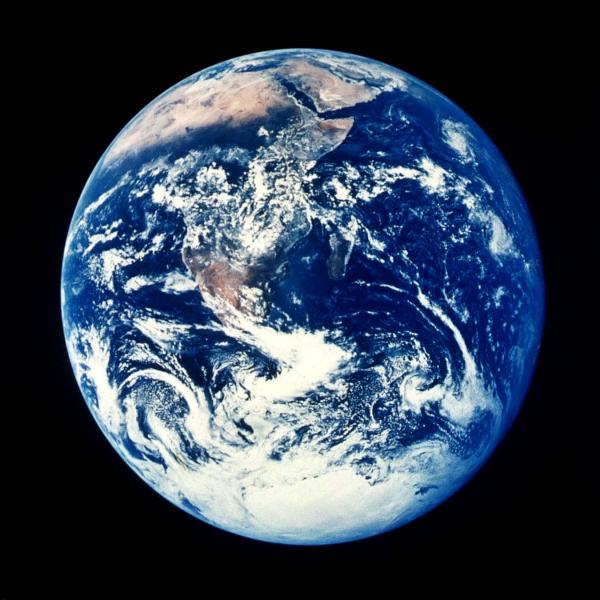

One of the biggest questions of exoplanet astronomy is how many potentially habitable planets are out there in the galaxy. By one estimate the answer is 6 billion Earth-like planets in the Milky Way. But of course we have to set parameters and make estimates, so this number can vary significantly depending on details.

And yet – how many exoplanets have we discovered so far that are “Earth-like”, meaning they are a rocky world orbiting a sun-like star in the habitable zone, not tidally locked to their parent star, with the potential for liquid water on the surface? Zero. Not a single one, out of the over 5,500 exoplanets confirmed so far. This is not a random survey, however, because it is biased by the techniques we use to discover exoplanets, which favor larger worlds and worlds closer to their stars. But still, zero is a pretty disappointing number.

I am old enough to remember when the number of confirmed exoplanets was also zero, and when the first one was discovered in 1995. Basically since then I have been waiting for the first confirmed Earth-like exoplanet. I’m still waiting.

A recent simulation, if correct, may mean there are even fewer Earth-like exoplanets than we think. The study looks at the transition from a planet like Earth to one like Venus, where a runaway greenhouse effect leads to a dry and sterile planet with a surface temperature of hundreds of degrees. The question being explored by this simulation is this – how delicate is the equilibrium we have on Earth? What would it take to tip the Earth into a similar climate as Venus? The answer is – not much.

Continue Reading »

Oct 24, 2023

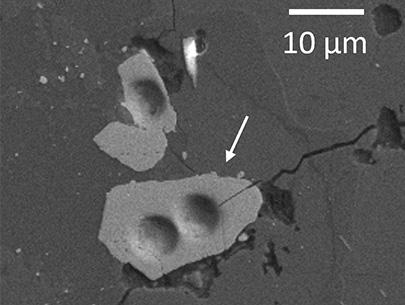

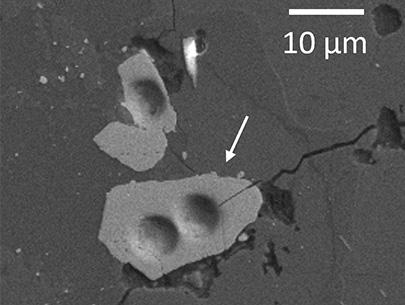

There are a few interesting stories lurking in this news item, but let’s start with the top level – a new study revises the minimum age of the Moon to 4.46 billion years, 40 million years older than the previous estimate. That in itself is interesting, but not game-changing. It’s really a tweak, an incremental increase in precision. How scientists made this calculation, however, is more interesting.

The researchers studied zircon crystals brought back from Apollo 17. Zircon is a crystal silicate that often contains some uranium. These crystals would have formed when the magma surface of the Moon cooled. The current dominant theory is that a Mars-sized planet slammed into the proto-Earth about four and a half billion years ago, creating the Earth as we know it. The collision also threw up a tremendous amount of material, with the bulk of it coalescing into our Moon. The surface of both worlds would have been molten from the heat of the collision, but it is easier to date the Moon because the surface is better preserved. The surface of the Earth undergoes constant turnover of one type or another, while the lunar surface is ancient. So dating the Moon tells us something about the age of the Earth also.

The method of dating employed in this latest study is called atom probe tomography. First they use an ion beam microscope to carve the tip of a crystal to a sharp point. Then they use UV lasers to evaporate atoms off the tip of the crystal. These atoms pass through a mass spectrometer, which uses the time it takes to pass through as a measure of mass, which identifies the element. The researchers are interested in the proportion of uranium to lead. Uranium is a common element found in zircon, and it also undergoes radioactive decay into lead at a known rate. In any sample you can therefore use the ratio of uranium to lead to calculate the age of that sample. Doing so yielded an age of 4.46 billion years old – the new minimum age of the Moon. It’s possible the Moon could be older than this, but it can’t be any younger.
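The ratio-to-age step can be sketched with the basic radioactive decay law. This is a simplified illustration, not the study's actual procedure: I am assuming a single decay chain (uranium-238 to lead-206), no lead in the crystal when it formed, and no loss or gain of either element since. The function names are mine. Under those assumptions the lead-to-uranium ratio is e^(λt) − 1, so t = ln(1 + Pb/U) / λ.

```python
import math

# Half-life of uranium-238 in years (well-established reference value).
U238_HALF_LIFE_YEARS = 4.468e9
DECAY_CONSTANT = math.log(2) / U238_HALF_LIFE_YEARS  # lambda, per year


def age_from_ratio(pb206_per_u238: float) -> float:
    """Age in years implied by the measured Pb-206 / U-238 atom ratio.

    Assumes all the lead came from decay of the uranium in the sample.
    """
    return math.log1p(pb206_per_u238) / DECAY_CONSTANT


def ratio_from_age(age_years: float) -> float:
    """Inverse relation: the Pb-206 / U-238 ratio expected after a given time."""
    return math.expm1(DECAY_CONSTANT * age_years)


# What ratio would a 4.46-billion-year-old crystal show under these assumptions?
expected_ratio = ratio_from_age(4.46e9)
print(f"Expected Pb-206/U-238 ratio: {expected_ratio:.3f}")
print(f"Age recovered from that ratio: {age_from_ratio(expected_ratio) / 1e9:.2f} billion years")
```

An age of 4.46 billion years is almost exactly one uranium-238 half-life. In this idealized picture a crystal that old would therefore hold nearly equal numbers of uranium-238 and lead-206 atoms.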

Continue Reading »

Sep 18, 2023

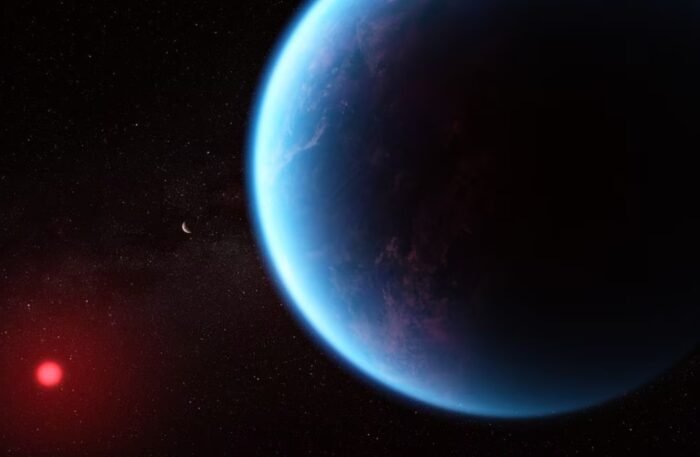

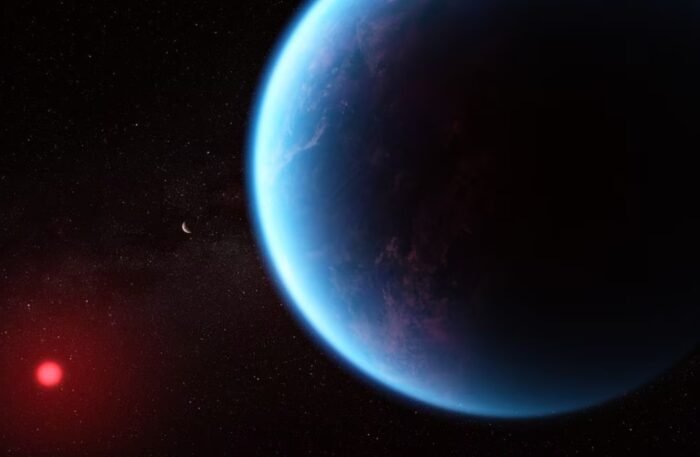

The James Webb Space Telescope spectroscopic analysis of K2-18b, an exoplanet 124 light years from Earth, shows signs that the atmosphere may contain dimethyl sulphide (DMS). This finding is more impressive when you know that DMS on Earth is only produced by living organisms, not by any geological process. The atmosphere of K2-18b also contains methane and CO2, which could be compatible with liquid water on the surface. Methane is also a possible signature of life, but it can also be produced by geological processes. This is pretty exciting, but the astronomers caution that this is a preliminary result. It must be confirmed by more detailed analysis and observation, which will likely take a year.

According to NASA:

K2-18 b is a super Earth exoplanet that orbits an M-type star. Its mass is 8.92 Earths, it takes 32.9 days to complete one orbit of its star, and is 0.1429 AU from its star. Its discovery was announced in 2015.
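The orbital figures in that summary are enough for a quick sanity check using Kepler's third law. In solar units (distance in AU, period in years, mass in solar masses) it reduces to M ≈ a^3 / P^2 when the planet's mass is negligible next to the star's. This is only a back-of-the-envelope sketch, and the function name is mine.

```python
DAYS_PER_YEAR = 365.25


def star_mass_in_suns(semi_major_axis_au: float, period_days: float) -> float:
    """Stellar mass implied by Kepler's third law, in solar masses.

    Uses M = a^3 / P^2 with a in AU and P in years, neglecting the
    planet's own mass.
    """
    period_years = period_days / DAYS_PER_YEAR
    return semi_major_axis_au ** 3 / period_years ** 2


# Values quoted by NASA for K2-18 b: 0.1429 AU and 32.9 days.
mass = star_mass_in_suns(0.1429, 32.9)
print(f"Implied stellar mass: {mass:.2f} solar masses")
```

The result comes out at roughly a third of the Sun's mass, which is what you would expect for an M-type star.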

This planet was discovered with the transit method, so we have some idea of its radius and therefore density. Its surface gravity is likely about 12 m/s^2 (Earth’s is 9.8). It has a hydrogen-rich atmosphere, which would explain the methane without the need for life. It orbits a red dwarf, and is likely tidally locked, or in a tidal resonance orbit. It receives an amount of radiation from its star similar to Earth. The big question – is K2-18 b potentially habitable?

Continue Reading »

Aug 25, 2023

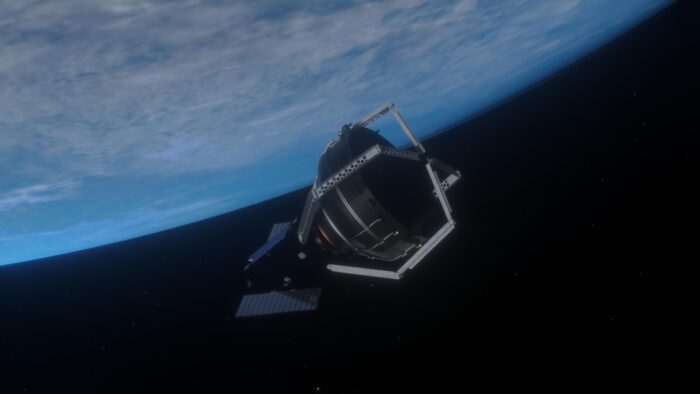

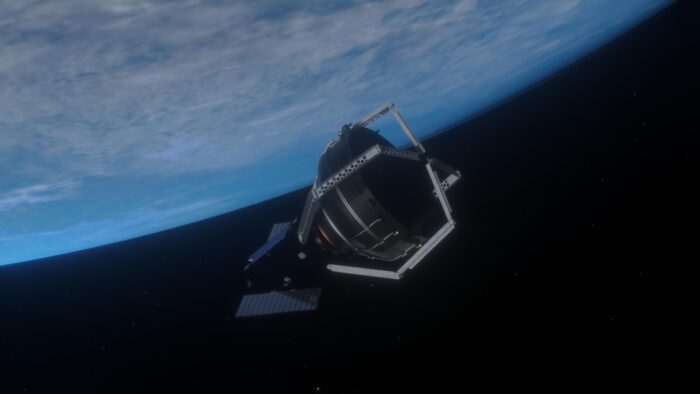

I know you don’t need one more thing to worry about, but I have already written about the growing problem of space debris. At least this update is about a mission to help clear some of that debris – ClearSpace-1. This is an ESA mission contracted out to a Swiss company, Clearspace SA, which is building a satellite whose purpose is to grab large pieces of space junk and de-orbit them.

The problem this mission is trying to solve is the fact that we have put millions of pieces of debris into various orbits around the Earth. While space is big, usable near-Earth orbits are finite, and if you put millions of pieces of debris there zipping around at fast speeds, there will be collisions. The worst-case scenario is what’s called a Kessler cascade, in which a collision causes more debris which then increases the probability of further collisions which increases the amount of debris, and the cycle continues. This won’t be an event so much as a process that unfolds over a long period of time. But it has the potential of rendering Earth orbit increasingly dangerous to the point of being unusable. It also makes the task of cleaning up orbit exponentially more difficult.

The Clearspace craft looks like a mechanical squid with four arms that can grab tightly onto a large piece of space debris. The planned first mission will target the upper stage of Vespa, part of the ESA Vega launcher. This is a 112 kg target. Once it grabs the debris, it will then undergo a controlled deorbit. The mission is planned for 2026, and if successful will be the first mission of its kind. This is a proof-of-concept mission, because removing one piece of large debris is insignificant compared to how much debris is already up there. But we need to demonstrate that the whole system works, and we also need to confirm how much each such mission will cost.

Continue Reading »

Jul 20, 2023

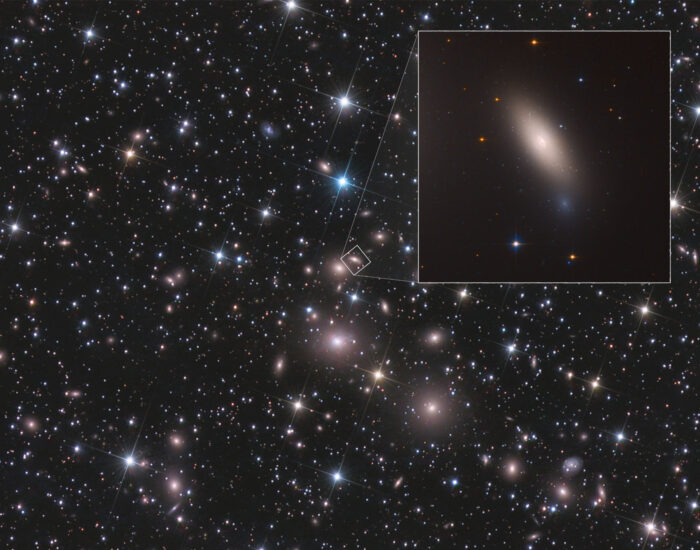

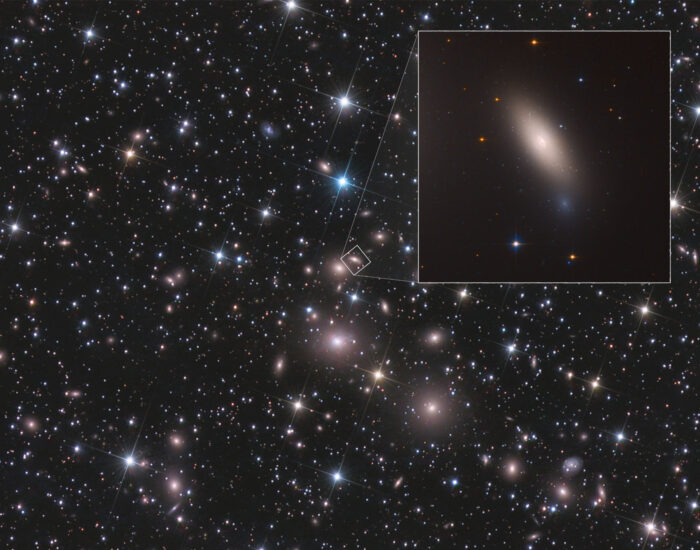

Dark matter is one of the greatest current scientific mysteries. It’s a fascinating story playing out in real time, although over years, so you have to be patient. Future generations might be able to binge the dark matter show, but not us. We have to wait for each episode to drop. Another episode did just drop, in the form of an analysis of the massive relic galaxy NGC 1277, but let’s get caught up before we watch this episode.

The term “dark matter” was coined by astronomer Fritz Zwicky in 1933 as one possible explanation for the rotation of the Coma Galaxy Cluster. The galaxies were essentially moving too quickly, implying that there was more gravity (and hence more matter) present in the cluster than was observed. This matter could not be seen, therefore it was dark. The notion was a mere footnote, however, until the 1970s when astronomer Vera Rubin analyzed the rotation curves of many individual galaxies. She found that galaxies were rotating too quickly. The stars should be flying apart because there was insufficient gravity to hold them together (or alternatively they should be rotating more slowly). There must be more gravity than can be seen. The notion of dark matter was therefore solidified, and has been a matter of debate ever since.

Half a century after Rubin confirmed the existence of dark matter, we still don’t know what it is. It must be some kind of particle that does not interact much with other stuff in the universe, does not give off or reflect radiation, but possesses significant mass and therefore gravity. There are candidate particles, such as WIMPs (weakly interacting massive particles), MACHOs (massive astrophysical compact halo objects), axions (particles with a tiny amount of mass that could be very common) or perhaps even several particles currently not accounted for in the standard model of particle physics.

This is one of the exciting things about dark matter – when we figure out what dark matter is, it could break the standard model, pointing the way to a new and deeper understanding of physics. But how certain are we that dark matter exists? To a degree the existence of dark matter is an argument from ignorance – it is a placeholder filling in a gap in our knowledge. We can only infer its existence because we cannot explain with our current models of gravity how stuff is moving in the universe. Perhaps our current models of gravity are wrong?

Continue Reading »

Jun 29, 2023

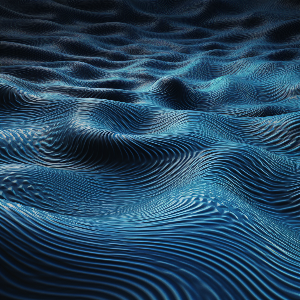

It’s always exciting when a scientific institution announces that they are going to make an announcement. Earlier this week we were told that there was going to be a major announcement today (June 29th) regarding a gravitational wave discovery. The goal of the pre-announcement is to generate buzz and media attention, although I almost always find the reveal to be disappointing. I guess we are too programmed by movie plotlines where such reveals are truly earthshattering. So I have learned to moderate my expectations (a generally good strategy to avoid disappointment).

The North American Nanohertz Observatory for Gravitational Waves (NANOGrav) team released five papers late last night in the Astrophysical Journal Letters – I think my moderated expectations were pretty much on target. This is awesomely cool science, but didn’t shatter my world. I can see why the scientists were so excited, however. This was the culmination of 15 years of investigation. The bottom line discovery is that spacetime is constantly rippling, in line with Einstein’s predictions of General Relativity. Let’s get into the details.

Gravitational wave astronomy is a new window onto the universe. Most of the recent news has been made by the Laser Interferometer Gravitational-wave Observatory (LIGO). This is a large instrument in which a powerful laser beam is split and sent down two arms at right angles to each other, each about 4 km long, through a vacuum pipeline. Where the beams recombine they create an interference pattern. The slightest change in the relative length of the arms shifts that interference pattern, which makes it a very sensitive detector. The primary challenge is isolating LIGO from background noise and filtering it out. What is left is a signal produced by gravitational waves, ripples in spacetime created by massive gravitational events. LIGO is able to detect high frequency gravitational waves formed by the collision of black holes and/or neutron stars with each other.
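To put a number on "very sensitive", here is a rough sketch. A gravitational wave is usually characterized by its strain h, the fractional change in length it causes, so the change in a 4 km arm is approximately h × L. The strain of 1e-21 is my assumption, chosen as a typical order of magnitude for the strongest detected signals, and the proton diameter is an approximate figure included only for scale.

```python
ARM_LENGTH_M = 4_000.0        # "about 4 km" per the text
PROTON_DIAMETER_M = 1.7e-15   # approximate value, for scale only


def arm_length_change(strain: float, arm_length_m: float = ARM_LENGTH_M) -> float:
    """Approximate change in arm length (metres) for a given strain."""
    return strain * arm_length_m


assumed_strain = 1e-21  # assumption: order of magnitude of a strong signal
delta = arm_length_change(assumed_strain)
print(f"Arm length change: {delta:.1e} m")
print(f"That is about 1/{PROTON_DIAMETER_M / delta:.0f} of a proton's diameter")
```

With these assumed numbers the arms change length by a small fraction of the width of a single proton, which is why isolating the instrument from background noise is the primary challenge.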

Continue Reading »

Jun 02, 2023

What will be the ultimate fate of our universe? There are a number of theories and possibilities, but at present the most likely scenario seems to be that the universe will continue to expand, most mass will eventually find its way into a black hole, and those black holes will slowly evaporate into Hawking Radiation, resulting in what is called the “heat death” of the universe. Don’t worry, this will likely take 1.7×10^106 years, so we got some time.

But what about objects, like stellar remnants, that are not black holes? Will the ultimate fate of the universe still contain some neutron stars and cold white dwarfs that managed to never get sucked up by a black hole? To answer this question we have to back up a bit and talk about Hawking Radiation.

Stephen Hawking famously proposed this idea in 1975 – he was asked if black holes have a temperature, and that sent him down another type of hole until Hawking Radiation popped out as the answer. But what is Hawking Radiation? The conventional answer is that the vacuum of space isn’t really nothing, it still contains the quantum fields that make up spacetime. Those quantum fields do not have to have zero energy, and so occasionally virtual particles will pop into existence, always in pairs with opposite properties (like opposite charge and spin), and then they join back together, cancelling each other out. But at the event horizon of black holes, the distance at which light can just barely escape the black hole’s gravity, a virtual pair might occur where one particle gets sucked into the black hole and the other escapes. The escaping particle is Hawking Radiation. It carries away a little mass from the black hole, causing it to glow slightly and evaporate very slowly. This evaporation gets quicker as the black hole becomes less massive, until eventually it explodes in gamma radiation.
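How slowly? A minimal sketch using the textbook estimate for the evaporation time of an idealized black hole, t = 5120 π G^2 M^3 / (ħ c^4). This assumes a non-rotating, uncharged black hole that emits only photons and absorbs nothing, not even the cosmic microwave background, so treat the output as an order-of-magnitude figure. The constants are rounded and the function name is mine.

```python
import math

# Physical constants in SI units (rounded).
G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.0546e-34      # reduced Planck constant, J s
C = 2.998e8            # speed of light, m/s
SOLAR_MASS_KG = 1.989e30
SECONDS_PER_YEAR = 3.156e7


def evaporation_time_years(mass_kg: float) -> float:
    """Idealized Hawking evaporation time for a black hole of the given mass."""
    seconds = 5120 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4)
    return seconds / SECONDS_PER_YEAR


for solar_masses in (1, 10, 1e6):
    years = evaporation_time_years(solar_masses * SOLAR_MASS_KG)
    print(f"{solar_masses:g} solar masses -> about {years:.1e} years")
```

The time scales with the cube of the mass: doubling the mass makes the wait eight times longer, and as the black hole shrinks the process speeds up dramatically. Even a black hole with the mass of the Sun comes out at something like 10^67 years in this estimate.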

Continue Reading »
