Nov 30 2021

Self-Replicating Xenobots

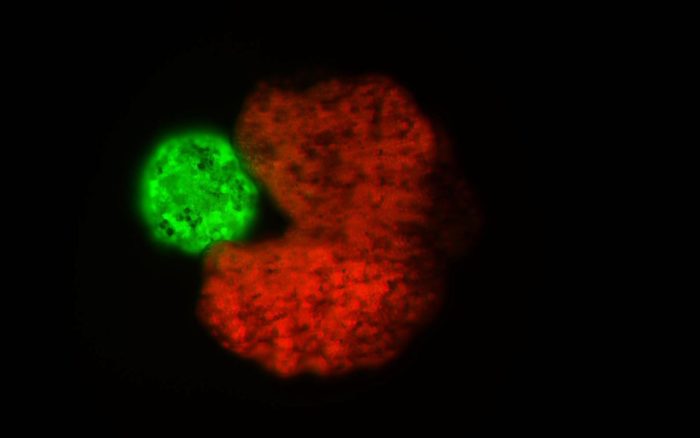

Placing “self-replicating” and any kind of “bots” in the same sentence immediately raises red flags, conjuring the image of reducing the surface of the world to gray goo. But that is not a concern here, for reasons that will become clear. There is a lot to unpack here, so let’s start with what xenobots are. They are biological machines, little “robots” assembled from living cells. In this case the source cells are embryonic pluripotent stem cells taken from the frog species Xenopus laevis. Researchers at the Allen Discovery Center at Tufts University have been experimenting with assembling these cells into functional biological machines, and have now added self-replication to their list of abilities.

Further, these xenobots replicate in a unique way, by what is known as kinematic self-replication. This is the first instance of this type of replication at the cell or organism level. The researchers point out that life has many ways of replicating itself: “fission, budding, fragmentation, spore formation, vegetative propagation, parthenogenesis, sexual reproduction, hermaphroditism, and viral propagation.” However, all these forms of self-replication have one thing in common – they happen through growth within or on the organism itself. By contrast, kinematic self-replication occurs entirely outside the organism itself, through the assemblage of external source material.

This process has been known at the molecular level, where molecules (like proteins) can guide the assemblage of identical molecules using external resources. Until now, however, it was entirely unknown at the cellular level or above.

In the case of xenobots, the researchers placed them in an environment with lots of individual stem cells. The xenobots spontaneously gathered these stem cells into copies of themselves. However, these copies were not able to replicate themselves, so the process ended after one or a very limited number of generations.

In a new study, the researchers set out to design an optimal xenobot that could sustain many generations of self-replication. They did not do this the old-fashioned way, through extensive trial and error. Rather, they used an AI simulation, which calculated for literally months, testing billions of possible configurations. It came up with a simple shape: a sphere with a mouth, looking incredibly like Pac-Man. These xenobots are composed of about 3,000 cells. The researchers assembled their xenobot Pac-Men, and when placed in an environment with available stem cells, the xenobots spontaneously herded those cells into spheres and then into copies of themselves. These copies were also able to make more copies of themselves, and so on for many generations.

Experts knew, and had been warning, that delta was not going to be the last Greek letter to sweep across the world. The World Health Organization (WHO)

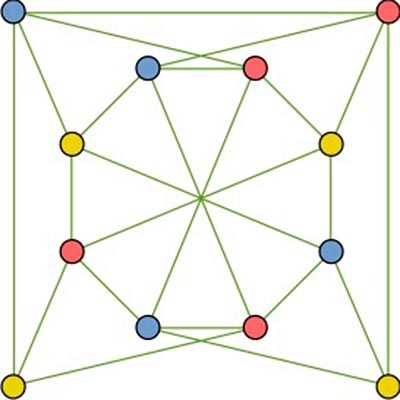

As our world becomes increasingly digital, math becomes more and more important (not that it wasn’t always important). Even in ancient times, math was a critical technology improving our ability to predict the seasons, design buildings and roads, and have a functioning economy. In recent decades our world has been becoming increasingly virtual and digital, run by mathematical algorithms, simulations, and digital representations. We are increasingly building our world using methods that are driven by computers, and the clear trend in technology is toward a greater meshing of the virtual with the physical. One possible future destination of this trend is programmable matter, in which the physical world literally becomes a manifestation of a digital creation.

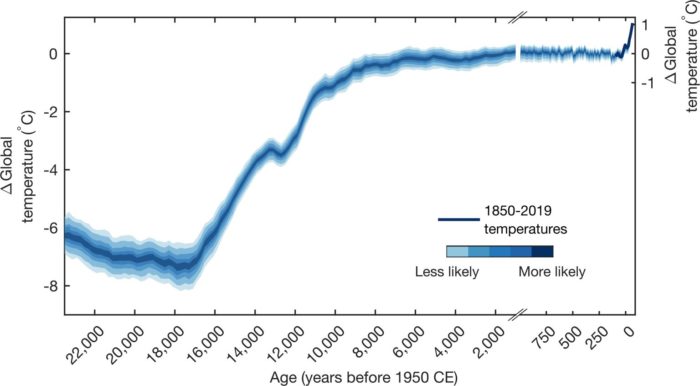

While the world debates how best to reverse the trend of anthropogenic global warming (AGW), scientists continue to refine their data on historical global temperatures.

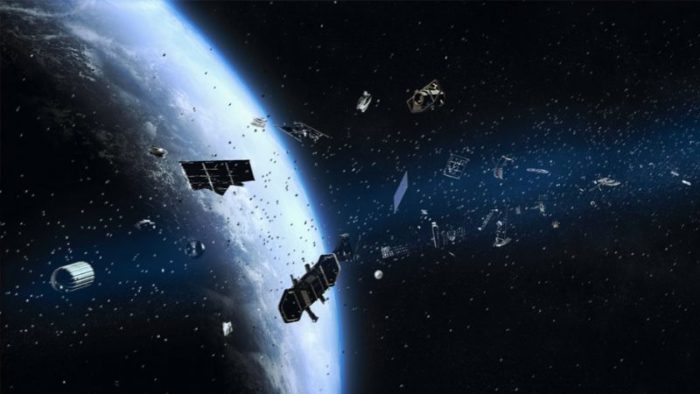

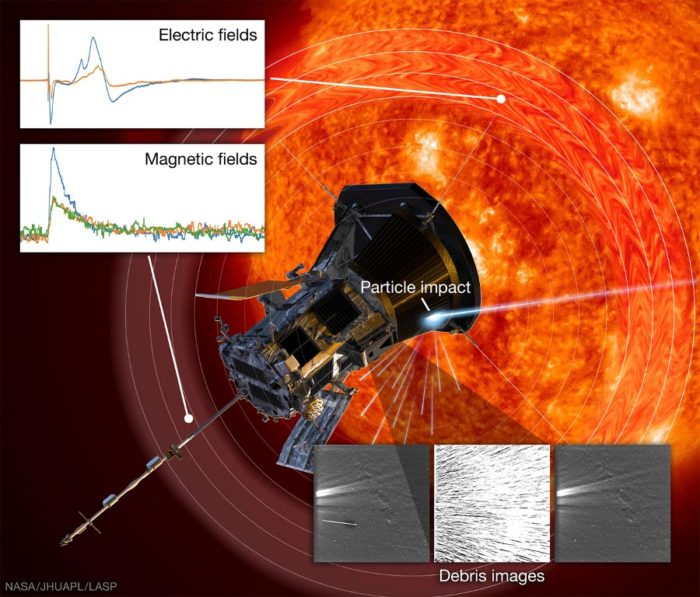

Space is an incredibly hostile environment, and we are learning more about the challenges of living and traveling in space the more we study it. Apart from the obvious near vacuum and near absolute zero temperatures, space is full of harmful radiation. We live comfortably beneath a blanket of protective atmosphere and a magnetic shield, but in space we are exposed.

Data security sounds like a boring topic. However, it is quickly becoming one of the most important technologies in our modern world. Our data, communications, and transactions are increasingly digital, and they are all vulnerable to hacking. It’s estimated that hacking costs the world about