Jan 13, 2012

Here we go again – facilitated communication (FC) rears its pseudoscientific head again, and (surprise) the HuffPo publishes a completely uncritical article about it.

Kathleen Miles discusses the case of Jacob Artson, a boy with autism who is non-verbal. The article is framed in the typical gullible pseudo-journalistic style – a brave family finds a “miracle” that allows them to communicate with their non-verbal son. Miles describes the case in gushing terms, with only the barest reference to those nasty “skeptics” who doubt such claims (and she completely botches even her short reference to the controversy, which is actually not even a controversy).

Before I discuss the case of Jacob further, here is a quick background on FC. In the late 1980s and into the 1990s, FC was proposed and rapidly adopted as a technique for communicating with clients who were not able to communicate on their own, for a variety of reasons. The technique is simple – a facilitator “supports” the arm of the client, allowing them to type on a keyboard or point to letters in order to spell out words.

Continue Reading »

Jan 12, 2012

I love the X-Prize concept, and I love the concept of a Star Trek style tricorder – so both in one is awesome squared.

At the Consumer Electronics Show in Vegas, Qualcomm announced their tricorder X-Prize – $10 million for the first group to produce a device that weighs less than 5 pounds and is capable of non-invasively sensing a variety of health metrics (like temperature and blood pressure) and diagnosing 15 diseases.

The details are not available yet, and the specific rules for this contest have not been formalized. I am particularly interested in what the requirements for the sensors will be: whether they must work remotely, whether direct contact with the subject is allowed, and whether any invasive techniques, like drawing blood or other fluid, will be permitted.

It seems that one key feature will be that all of the diagnostic modalities have to be contained in one small (5 pound limit) device, but that device can have as many components as necessary.

A few thoughts come to mind:

Continue Reading »

Jan 10, 2012

This is one of those memes that refuses to die. It’s a zombie-meme, the terminator of myths, one of those ideas of popular culture that everyone knows but is simply wrong – the idea that individuals can be categorized as either left-brain or right-brain in terms of their personality and the way they process information. Related to this is the notion that any individual can either engage their left brain or their right brain in a particular task.

The most pernicious myths tend to have a kernel of truth, but are misleading or oversimplified in a significant way. In the case of brain anatomy and function, it is true that our brains are divided into two hemispheres, left and right. Each hemisphere is capable of generating wakeful consciousness by itself. And there are many higher cognitive functions that lateralize, meaning they are largely located in one hemisphere or the other.

For example, language function lateralizes to the dominant hemisphere, which is the left hemisphere for most people. Visuospatial reasoning lateralizes to the non-dominant hemisphere (the right hemisphere for most people).

Continue Reading »

Jan 09, 2012

A “crank” is a particular variety of pseudoscientist or “true believer” – one that tries very hard to be a real scientist but is hopelessly crippled by a combination of incompetence and a tendency to interpret their own incompetence as overwhelming genius. In a recent article in Slate (republished from New Scientist) Margaret Wertheim tries, for some reason, to defend those cranks who believe they have developed an alternate theory of physics. In the article she does a good job of painting a picture of what a crank is, but it seems almost incidental as the main thrust of her article is to criticize science for being inaccessible. The result is confused and misleading.

In order to see exactly why a crank is a crank, one needs to have a clear idea of how mainstream science works and why (something that cranks often lack themselves). Science is often portrayed in popular culture in the quaint manner of the lone genius working away in their lab and developing ideas largely on their own. Further, any true advance is met by nothing but scorn from their colleagues and the scientific establishment. This view may have been somewhat relevant in the 19th century and earlier, but rarely has any relevance to modern science.

Science has progressed in most areas to the point that a large body of knowledge needs to be mastered before meaningful contributions are possible. New ideas and information are shared with the community throughout the process of research and discovery, in papers and at meetings, and ideas are criticized and picked over. Each component of a scientific theory needs to be experimentally or observationally established, and there should be good reasons to distinguish one theory from another. Any viable theory needs to at the very least account for existing evidence, and it should either be compatible with well-established theories and facts or offer a compelling explanation for why it isn’t.

Continue Reading »

Jan 06, 2012

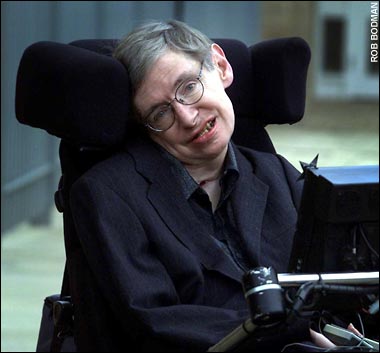

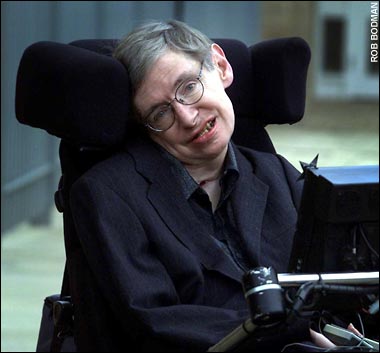

Stephen Hawking is turning 70 – a numerical milestone that is unremarkable in itself, but it does provide a reasonable excuse to look back and admire the career of the famous astrophysicist.

Hawking is a member of that rare club – the celebrity scientist. Apparently Albert Einstein was the very first member of this club – someone who is not just famous as a scientist among fellow scientists and intellectuals, but a true celebrity and household name. Hawking is one of the few who have truly followed in his footsteps.

It’s interesting to think about how Hawking became as famous as he is. Clearly he is a brilliant scientist. The major contribution that initially brought him attention was the hypothesis that black holes emit radiation due to quantum effects (called Hawking radiation). This may seem like an obscure phenomenon, but it was the first result to unite quantum effects with general relativity and thermodynamics.

Continue Reading »

Jan 05, 2012

Recently researchers published a paper in which their data show, with statistical significance, that listening to a song about old age (“When I’m Sixty-Four”) actually made people younger – it did not just make them feel younger, it rejuvenated them to a younger age. Of course, the claim lacks plausibility, and that was the point. Simmons, Nelson, and Simonsohn deliberately chose a hypothesis that was impossible in order to make a point: how easy it is to manipulate data in order to generate a false positive result.

In their paper Simmons et al. describe in detail what skeptical scientists have known and been saying for years, and what other research has also demonstrated: that researcher bias can have a profound influence on the outcome of a study. They look specifically at how data are collected and analyzed, and show that the choices researchers make can influence the outcome. They refer to these choices as “researcher degrees of freedom” – choices, for example, about which variables to include, when to stop collecting data, which comparisons to make, and which statistical analyses to use.

Each of these choices may be innocent and reasonable, and the researchers can easily justify the choices they make. But when added together these degrees of freedom allow researchers to extract statistical significance out of almost any data set. Simmons and his colleagues, in fact, found that combining four common decisions about data (using two dependent variables, adding 10 more observations, controlling for gender, or dropping a condition from the test) would produce a false positive at the p<0.05 level 60% of the time, and at the p<0.01 level 21% of the time.

This means that any paper published with a statistical significance of p<0.05 could be more likely to be a false positive than a true positive.
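To get a feel for how quickly these choices add up, here is a minimal simulation sketch. It is not the simulation from the Simmons paper: it models only two of the four decisions (a second dependent variable and adding 10 more observations per group), it uses a simple z-test that assumes a known variance of 1, and the function names (`draw`, `p_value`, `false_positive_rate`) are made up for this illustration. There is no real effect in the simulated data, so every "significant" result is a false positive.

```python
import random
from statistics import NormalDist, mean

STD_NORMAL = NormalDist()


def p_value(a, b):
    """Two-sided z-test p-value for a difference in means (assumes variance 1 in both groups)."""
    se = (1 / len(a) + 1 / len(b)) ** 0.5
    z = (mean(a) - mean(b)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def draw(rng, n):
    """Simulate n subjects, each with two dependent variables correlated at 0.5 and no true effect."""
    rows = []
    for _ in range(n):
        shared = rng.gauss(0, 1)
        dv1 = (shared + rng.gauss(0, 1)) / 2 ** 0.5
        dv2 = (shared + rng.gauss(0, 1)) / 2 ** 0.5
        rows.append((dv1, dv2))
    return rows


def any_significant(group_a, group_b, alpha):
    """True if either dependent variable shows a 'significant' group difference."""
    return any(
        p_value([row[i] for row in group_a], [row[i] for row in group_b]) < alpha
        for i in (0, 1)
    )


def false_positive_rate(flexible, trials=4000, n=20, extra=10, alpha=0.05, seed=1):
    """Fraction of null experiments that end up 'significant'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        a, b = draw(rng, n), draw(rng, n)
        if not flexible:
            # Fixed plan: one dependent variable, one sample size, one test.
            hits += p_value([r[0] for r in a], [r[0] for r in b]) < alpha
            continue
        # Flexible plan: report whichever dependent variable "works" ...
        significant = any_significant(a, b, alpha)
        if not significant:
            # ... and if nothing works, collect 10 more per group and test again.
            a += draw(rng, extra)
            b += draw(rng, extra)
            significant = any_significant(a, b, alpha)
        hits += significant
    return hits / trials


if __name__ == "__main__":
    print("fixed plan:   ", false_positive_rate(flexible=False))
    print("flexible plan:", false_positive_rate(flexible=True))
```

The fixed plan should land near the nominal 5%, while the flexible plan comes out noticeably higher even with only two of the four decisions in play. The exact figures depend on the assumptions above and will not match the percentages reported in the paper.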

Continue Reading »

Jan 03, 2012

There is an historical pattern with which skeptics and scientists should be very familiar – the dubious phenomenon that vanishes under double-blind testing. We saw this with N-rays, which now stand as a famous cautionary tale. The ephemeral rays could only be seen by those who knew what to look for, mostly French scientists. The introduction of a blinded test, however, quickly proved N-rays to be all illusion and wishful thinking. We have seen this with mesmerism, homeopathy, and a long list of other useless medical interventions, with electromagnetic sensitivity, and recently with the Power Bands. In countless cases over hundreds of years many people were utterly convinced of the reality of a phenomenon, and they could even apparently demonstrate or detect it (when they knew what they were supposed to see), but under blinded conditions the phenomenon evaporated.

It is for this reason that scientists do not (and should not) accept the reality of a new phenomenon until it has been demonstrated in such a way that bias and illusion have been ruled out. There is a general tendency to underestimate the degree to which bias and suggestibility can influence perception, no matter how many times it is experienced.

Perception is especially vulnerable to suggestion and to the influence of other senses. For example, Morrot, Brochet, and Dubourdieu performed a study in which they colored white wine red, and then had 54 tasters describe the wine. The tasters described it with the terms typically used for a red wine, rather than those used for a white.

Continue Reading »

Jan 02, 2012

One concept that is important to being a scientist or critical thinker is that terms need to be defined precisely and unambiguously. Words are ideas and ideas can be sharp or fuzzy – fuzzy ideas lead to fuzzy thinking. An obstacle to using language precisely is that words often have multiple definitions, and many words that have a specific technical definition also have a colloquial use that is different from the technical use, or at least not as precise.

Recently on the SGU we talked about randomness, a concept that could use more exploration than we had time for on the show. The term “random” has a colloquial use – it is often used to mean a non sequitur, or something that is out of context. It is also used colloquially in the mathematical sense, as a sequence or arrangement that does not have any apparent or actual pattern. However, people have a generally poor naive sense about what is random, mathematically speaking.

There are at least two specific technical definitions of the term random I want to discuss. The first is mathematical randomness. Here there is a specific operational definition: a random sequence of numbers is one in which every digit has a statistically equal chance of occurring at any position. That’s pretty straightforward. This definition can be applied to many sequences to see if they conform to a statistically random sequence. Gambling is one such application. The sequence of numbers that come up at the roulette table, for example, should be mathematically random. No one number should come up more often than any other (over a sufficiently large sample size), and there should be no pattern to the sequence. Every number should have an equal chance of appearing at any time. Otherwise players would be able to take advantage of the non-randomness to increase their odds of winning.
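The "equal chance" half of that definition can be checked with a simple frequency test. The sketch below is only an illustration: it uses a chi-square statistic on digits 0 to 9 rather than roulette numbers, the function names are hypothetical, and the cutoff of 16.919 is the standard 5% critical value for 9 degrees of freedom.

```python
import random
from collections import Counter

# Chi-square critical value for 9 degrees of freedom at the 5% level.
CRITICAL_95_DF9 = 16.919


def chi_square_uniform(digits):
    """Chi-square statistic comparing observed digit counts with equal expected counts."""
    counts = Counter(digits)
    expected = len(digits) / 10
    return sum((counts.get(d, 0) - expected) ** 2 / expected for d in range(10))


def looks_uniform(digits):
    """True if the digit frequencies are consistent with every digit being equally likely."""
    return chi_square_uniform(digits) < CRITICAL_95_DF9


rng = random.Random(42)
# A fair source: every digit equally likely at every position.
fair = [rng.randrange(10) for _ in range(10_000)]
# A biased source: 7 comes up far too often, 8 and 9 never appear.
biased = [rng.choice([0, 1, 2, 3, 4, 5, 6, 7, 7, 7]) for _ in range(10_000)]
# A perfectly patterned source: 0, 1, 2, ..., 9 repeated over and over.
cycle = list(range(10)) * 1000

if __name__ == "__main__":
    for name, seq in (("fair", fair), ("biased", biased), ("cycle", cycle)):
        print(name, round(chi_square_uniform(seq), 2), looks_uniform(seq))
```

Note that the repeating `cycle` sequence passes the frequency test with a perfect score even though it is completely predictable. Equal frequencies are necessary but not sufficient: the "no pattern" requirement has to be tested separately, for example by looking at how often each digit follows each other digit.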

Continue Reading »