Dec 23 2022

A Quick Review of Facilitated Communication

Facilitated communication (FC) is a technique that involves a facilitator supporting the hand or arm of a person with severe communication disabilities, such as autism or cerebral palsy, as they type on a keyboard or communicate through other means. The theory behind FC is that the facilitator’s physical support allows the person to overcome any motor impairments and communicate more effectively. However, FC has been the subject of considerable controversy and skepticism within the scientific community.

One major issue with FC is that there is little scientific evidence to support its effectiveness. Despite being used for decades, FC has never been rigorously tested in controlled, double-blind studies. This is problematic because it is impossible to determine whether the messages being communicated through FC are actually coming from the person with disabilities or from the facilitator. Some researchers have suggested that FC may be susceptible to the ideomotor effect, in which a person’s thoughts or beliefs produce unconscious movements or responses. This means that the facilitator’s own thoughts and beliefs could be influencing the messages that are being communicated.

Another issue with FC is that there have been numerous cases where the messages communicated through FC have been shown to be incorrect or misleading. For example, in one well-known case, a woman with severe communication disabilities was believed to have communicated through FC that she had been sexually abused as a child. However, subsequent investigations revealed that the allegations were not true and that the facilitator had likely influenced the woman’s responses.

Given these concerns, it is important to be cautious about the validity of FC as a means of communication. While it may be tempting to believe that FC can provide a way for people with severe communication disabilities to express themselves, the lack of scientific evidence and the potential for misleading or false messages make it difficult to treat FC as a reliable source of information. Instead, it may be more productive to focus on other, more established communication methods, such as augmentative and alternative communication (AAC) devices or sign language.

In conclusion, while FC may be a well-intentioned approach to helping people with severe communication disabilities communicate, the lack of scientific evidence and the potential for misleading or false messages make it difficult to rely on as a source of information. Until there is more rigorous scientific evidence to support the effectiveness of FC, it is important to approach it with skepticism and consider alternative methods for communication.

Now…

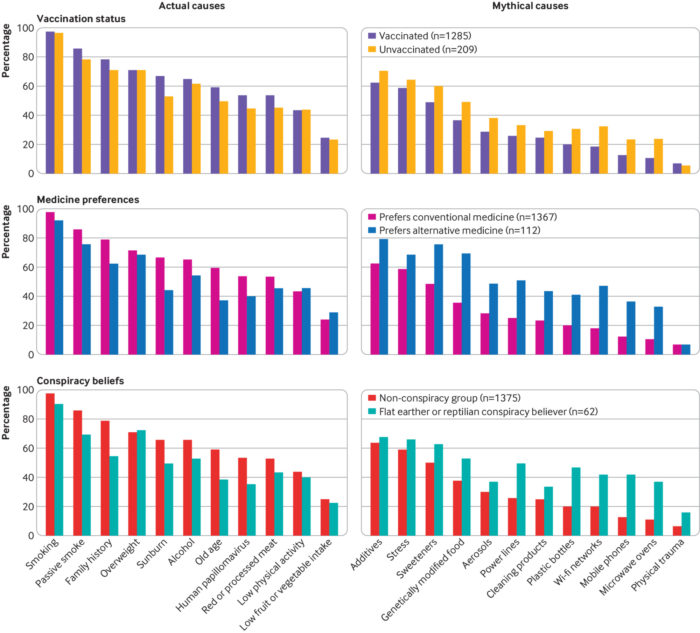

What are the known factors that increase the risk of getting cancer? Most people know about smoking, but can probably only guess at other factors, and are likely to endorse things that do not contribute to cancer risk. The

It’s always fun and interesting to look back at the science news of the previous year, mainly because of how much of it I have forgotten. What makes a science news item noteworthy? Ultimately it’s fairly subjective, and we don’t yet have enough time to really see what the long-term impact of any particular discovery or incremental advance was. So I am not going to give any ranked list, just reminisce about some of the cool science and technology news from the past year, in no particular order. I encourage you to extend the discussion to the comments – let me know what you thought had or will have the most impact from the past year.

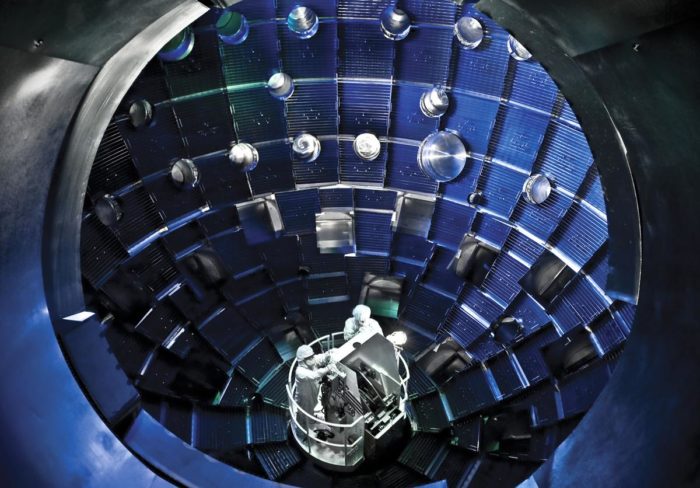

Much of the discussion about how we are going to rapidly change over our energy infrastructure to low-carbon energy involves existing technology, or at most incremental advancements. The problem is, of course, that we are up against the clock and the best solutions are ones that we can implement immediately. Even next-generation fission reactors are controversial because they are not a tried-and-true technology, even though fission technology itself is. It certainly would not be prudent to count on an entirely new technology as our solution. If some game-changing technology emerges, great, but until then we will make do with what we know works.

Our ability to detect, amplify, and sequence tiny amounts of DNA has led to a scientific revolution. We can now take a small sample of water from a lake, and by analyzing the environmental DNA in that water determine all of the things that live in the lake. This is an amazingly powerful tool. My favorite application of this technique was to demonstrate

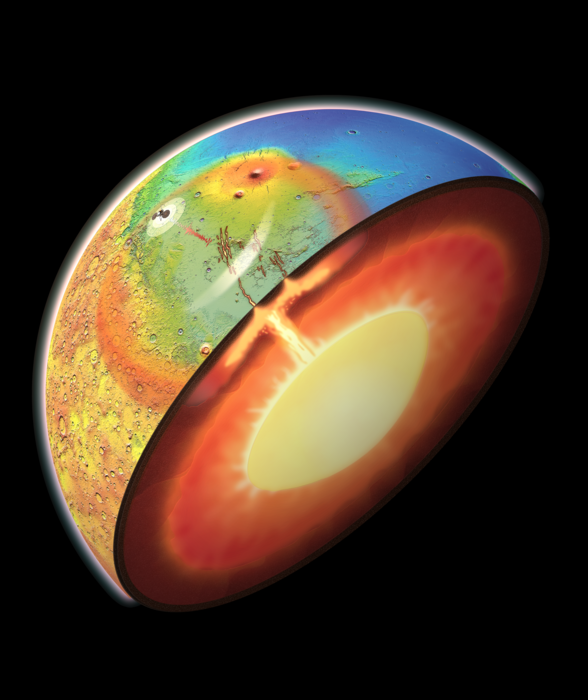

Mars is perhaps the best candidate world in our solar system for a settlement off Earth. Venus is too inhospitable. The Moon is a lot closer, but the extremely low gravity (0.166 g) is a problem for long-term habitation. Martian gravity is 0.38 g, still low by Earth standards but better than the Moon. But there are some other differences between Earth and Mars. Mars has only a very thin atmosphere, less than 1% of Earth’s. That’s just enough to cause annoying sandstorms, but not enough to avoid the need for pressure suits. Mars lost its atmosphere because it was stripped away by the solar wind – because Mars also does not have a global magnetic field to protect itself. The thin atmosphere and lack of magnetic field also expose the surface to lots of radiation.

Construction begins this week on what will be the largest radio telescope in the world –

People tend to understand the world through the development of narratives – we tell stories about the past, the present, ourselves, others, and the world. That is how we make sense of things. I always find it interesting, the many and often subtle ways in which our narratives distort reality. One common narrative is that the past was simpler and more primitive than it actually was, and that progress is linear, objective, and inevitable. I remember watching