Mar 31 2020

Decoding Speech from Brainwaves

Here is yet another incremental advance in brain-machine interface (BMI) technology – decoding what someone is saying from their brainwaves using a neural network and machine learning. We are still a distance away from using a system like this to allow someone who cannot speak to communicate, but the study nicely illustrates where the technology is. Here is the BBC’s reporting:

Here is yet another incremental advance in brain-machine interface (BMI) technology – decoding what someone is saying from their brainwaves using a neural network and machine learning. We are still a distance away from using a system like this to allow someone who cannot speak to communicate, but the study nicely illustrates where the technology is. Here is the BBC’s reporting:

Scientists have taken a step forward in their ability to decode what a person is saying just by looking at their brainwaves when they speak.

They trained algorithms to transfer the brain patterns into sentences in real-time and with word error rates as low as 3%.

Previously, these so-called “brain-machine interfaces” have had limited success in decoding neural activity.

Now here are all the caveats from the paper. First, the technology used electrocorticography (ECoG), which is an EEG with brain surface electrodes. So this requires an invasive procedure, and persistent electrodes inside the skull and on top of brain tissue. Also, in order to get the best performance, they used a lot of electrodes – resulting in 256 channels (a channel is a comparison in the electrical activity between two electrodes). They simulated what would happen with fewer electrodes by eliminating many of the channels in the data, down to 64, and found that the error rates were about four times greater. The authors argue this is “still within the usable range” but they consider usable range up to a 20-25% error rate. What this shows is that – yes, more electrodes matter. You need the very fine discrimination of brain activity in order to get good (usable) results.

Perhaps even more significant a limitation, in terms of real-world applications, is that the subjects were trained on a limited number of sentences – 30-50 sentences with a 250 word vocabulary. For background, decoder systems like this need to focus on a specific level of speech, meaning that they try to decode phonemes (sound parts of speech), or entire words, or entire phrases or sentences. The larger a chunk of speech the system decodes the more accurate it is, but the more limiting its versatility and the greater the number of possibilities it has to be trained on (there are many more possible sentences than words, and words than phonemes). The question is – what is the best path to get to decoding all human speech without limitations?

This study focuses on words, and the authors make a good case for that approach. Phonemes are too variable; they can change based on what comes before them in a word, for example. Focusing on sentences is too limiting (there are way too many possibilities). Decoding words, therefore, is just right. If you can get a system to recognize a few thousand individual words, that will cover the most used words. As an aside, the average English speaker (this does vary considerably with language) has a vocabulary of between 20,000-35,000 words. But you can communicate a lot using only the most common 1-2 thousand words. For someone who is otherwise unable to speak, even a 500 or so word vocabulary will be extremely useful.

In this study the neural network learning system they used tried to construct sentences from recognizing individual words. However – the system was trained in a limited number of sentences. The implication of this is shown by the fact that when the system did make an error, it would include entire phrases taken from one of the other trained sentences. So clearly there was some at least phrase-level learning going on.

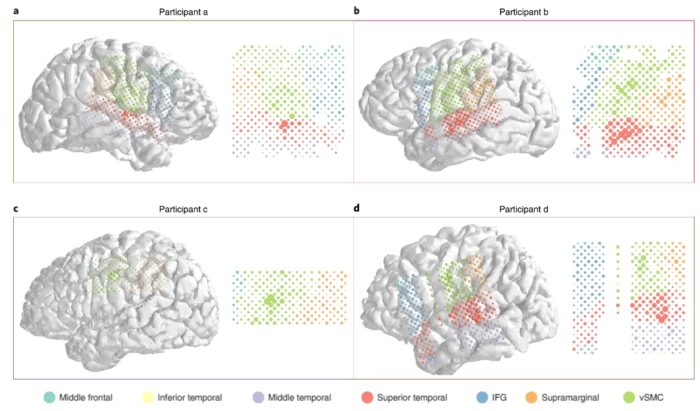

Another limitation is that the subjects read the sentences out loud. This mattered, because when they didn’t the error rate again jumped 3-4 times. They further looked at which brain regions (meaning which electrodes) were most important in predicting speech from the ECoG. They found that the two areas that were most important were the superior temporal gyrus, which is the primary speech area involved in decoding language, but also the auditory area that hears speech. So part of the success of this system was due to the subjects hearing themselves speak. Again, this points to the need to have subjects speaking out loud for the system to work well.

Given all these limitations, I don’t think this technology is at the practical application stage yet, or even close. But this study is an important proof of concept. It shows that recording the electrical activity of the brain during speech can be translated into what is being said. On the bright side, when they pretrained the system with speech outside the 30-50 target sentences, performance did improve a little. This implies some level of generalizability.

But – we still need many electrodes, requiring an invasive procedure, and high performance is limited to subjects speaking out loud and training on a very limited set of target sentences. Obviously what we want to get to is a system that works well enough to functionally communicate without the need to actually produce speech (otherwise, why bother) and with open-ended target sentences. If such a system can build up a vocabulary of 500+ words then it would be useful to those otherwise not able to communicate.

The authors also point out that this study used limited training, just two training trials minimum, for about 30 minutes. Imagine a patient with days, weeks, or months for the system to refine its dataset. We don’t know where such a system will plateau in terms of performance, but it does create the possibility of greater performance than with limited training.

As I wrote recently, other researchers are working on improving the electrode technology that is critical to BMI. If we can develop a system, for example, that uses microwires as electrodes, that can also go deep into the brain rather than remain on the surface, that could be a huge boon to a language-decoding system like this. Again – the proof of concept here is perhaps the most important thing. Brain activity does vary precisely and predictably enough with speech that decoding is possible. Put another way, with such systems there are theoretical limits to resolution and practical (technological) limits. This study suggests that the theoretical limits are not critical. Now we just need incremental technological advance.