Nov 30 2020

AI Doctor’s Assistant

I have often discussed how advances in artificial intelligence (AI) are already transforming our world, and are likely to do so much more in the future (even in the near term). I am interested in one particular application that I think does not get enough attention – using AI to support clinical decision-making. So I was happy to read that one such project will share in a grant from the UK government.

The grant of £20m will be shared among 15 UK universities working on various AI projects, and one of those projects is developing an AI doctor’s assistant. The funding is called the Turing Fellowship, after Alan Turing, who was one of the pioneers of machine intelligence. As the BBC reports:

The doctor’s assistant, or clinical colleague, is a project being led by Professor Aldo Faisal, of Imperial College London. It would be able to recommend medical interventions such as prescribing drugs or changing doses in a way that is understandable to decision makers, such as doctors.

This could help them make the best final decision on a course of action for a patient. This technology will use “reinforcement learning”, a form of machine learning that trains AI to make decisions.

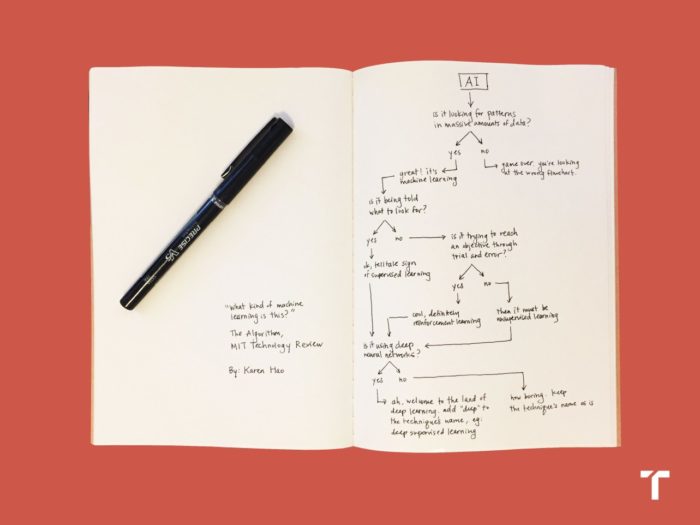

This is great to hear, and should be among the highest priority in terms of developing such AI applications. In fact, it’s a bit disappointing that similar systems are not already in widespread use. There are several types of machine learning. At its core, machine learning involves looking for patterns in large sets of data. If the computer algorithm is being told what to look for, then that is supervised learning. If not, then it is unsupervised. If it’s using lots of trial and error, that is reinforcement learning. And if it is using deep neural networks, then it is also deep learning. In this case they are focusing on reinforcement learning, so the AI will make decisions, be given feedback, and then iterate its decision-making algorithm with each piece of data.
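To make the "decide, get feedback, iterate" loop concrete, here is a minimal sketch of one of the simplest forms of reinforcement learning, an epsilon-greedy bandit. This is not the Imperial College system, whose design the report does not describe. The three options and their success rates are made-up numbers, and `run_bandit` is a hypothetical name chosen for illustration.

```python
import random

def run_bandit(success_rates, rounds=5000, epsilon=0.1, seed=0):
    """Learn by trial and error which of several options works best.

    success_rates are hypothetical, hidden from the learner, and only
    used to simulate feedback (1.0 = good outcome, 0.0 = bad outcome).
    """
    rng = random.Random(seed)
    counts = [0] * len(success_rates)    # how often each option was tried
    values = [0.0] * len(success_rates)  # running estimate of each option's success rate
    for _ in range(rounds):
        if rng.random() < epsilon:
            # Explore: occasionally try a random option.
            choice = rng.randrange(len(success_rates))
        else:
            # Exploit: otherwise pick the option that currently looks best.
            choice = max(range(len(values)), key=values.__getitem__)
        # Feedback from the simulated environment.
        reward = 1.0 if rng.random() < success_rates[choice] else 0.0
        # Iterate: nudge the estimate toward the observed outcome.
        counts[choice] += 1
        values[choice] += (reward - values[choice]) / counts[choice]
    return counts, values

counts, values = run_bandit([0.3, 0.5, 0.7])
best = max(range(len(values)), key=values.__getitem__)
print("tries per option:", counts)
print("estimated success rates:", [round(v, 2) for v in values])
print("option the learner prefers:", best)
```

The learner is never told which option is best. It only sees outcomes, and over many rounds it concentrates its choices on the option with the highest estimated success rate. A real clinical system would have to learn from recorded patient data rather than free experimentation, which is a much harder problem than this toy suggests.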

How will this integrate into clinical practice? As I have discussed before, this will not replace human doctors or what they do but rather augment it. Human clinicians do a few things well. Experience can train a practitioner to have great pattern recognition. It also helps them anticipate possible pathways of diagnosis and treatment, avoid pitfalls, and anticipate issues. An extended training period also arms them with lots of factual knowledge. Clinicians, depending on their specialty, will also develop some specific skills, such as surgical or other technical skills, and the ability to interface effectively with patients.

However, it is already recognized that human clinicians are easily overwhelmed by the sheer volume of information needed to optimize any clinical decision, and the complexity of taking into consideration all the statistical implications of the many variables involved in even apparently straightforward cases. We handle this in a few ways. Mostly we blend analytical and intuitive approaches, using that pattern recognition to guide our analytical choices and priorities. We also tend to oversimplify, boiling down all the complexity to a manageable system (which might literally be a treatment or diagnostic algorithm). Panels of experts constantly review the literature to update standards and clinical pathways, and practitioners have to constantly keep themselves updated.

What clinicians do not do well is deal with a massive amount of data in real time, and perform complex statistical analyses. In fact, we can often be misled by our own quirky experience, or fall victim to cognitive biases and flawed heuristics. For example, we tend to over-rely on the representativeness heuristic. We tend to think that a diagnosis is more likely if the presenting signs and symptoms really look like that diagnosis. However, this is only partly true, and the base rate of the diagnosis might have a much larger effect on that probability, but be given too little consideration. Clinicians also routinely make statistical errors in thinking, because statistics is not intuitive.
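The base rate point is easy to show with Bayes' theorem. The sketch below uses invented numbers, not data from any real condition: a clinical picture that shows up in 90% of patients with the disease and in 5% of patients without it. The function name `posterior` and its parameters are my own labels for illustration.

```python
def posterior(prior, sensitivity, false_positive_rate):
    """Probability of the diagnosis given the presentation (Bayes' theorem).

    prior: base rate of the diagnosis in this population
    sensitivity: P(presentation | disease)
    false_positive_rate: P(presentation | no disease)
    """
    true_pos = prior * sensitivity
    false_pos = (1 - prior) * false_positive_rate
    return true_pos / (true_pos + false_pos)

# Same "classic" presentation, two hypothetical base rates.
rare = posterior(prior=0.001, sensitivity=0.90, false_positive_rate=0.05)
common = posterior(prior=0.10, sensitivity=0.90, false_positive_rate=0.05)

print(f"rare disease (1 in 1000):  {rare:.1%}")
print(f"common disease (1 in 10):  {common:.1%}")
```

With these assumed numbers, the identical presentation implies roughly a 2% chance of the rare disease and roughly a 67% chance of the common one. How much the picture "looks like" the diagnosis did not change at all. Only the base rate did.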

Now imagine that a human clinician has at their disposal, at the point of patient care when decisions are being made, an AI expert system that can shore up those weaknesses. Such a system could crunch through large amounts of data and come to statistical conclusions that would be impossible for a human, for example – not only coming up with a list of potential diagnoses, but attaching hard statistical numbers to each possible diagnosis. The AI could then help optimize the diagnostic pathway, indicating which study would have the greatest chance of sorting through those possible diagnoses the fastest, safest, cheapest, and overall most effectively.

Such a system could look not only at getting the right diagnosis, but also at the ultimate outcome. Getting the right diagnosis might not even be the most effective pathway to the best outcome, as counterintuitive as that may seem. You have to consider which diagnoses are treatable, what the consequences are of making or missing a diagnosis, and what the risk is of the tests necessary to make a diagnosis. These are all things clinicians consider, but again having instant hard numbers on the many possible pathways, taking into consideration many personal patient details, would be hugely effective.

To give another example, if a patient presents with certain symptoms while certain variables are present, the statistically best outcome might be achieved by giving an empiric treatment, rather than doing any testing. Or perhaps a single test would determine which treatment would have the best outcome, even if that test does not seem directly related to the presenting symptoms. Statistics produces counterintuitive outcomes – but what we mostly care about is the net outcome for the patient.

An AI clinical assistant can also incorporate cost effectiveness into the decision making, by suggesting equal but less expensive treatments or diagnostic pathways. This is the “Moneyball” approach to medicine – looking at the bang for the buck of each intervention. This is also not just about money: cost-benefit can also consider the pain or inconvenience to the patient. If we could get the same outcome minus a painful procedure, most patients would appreciate that.

In many cases there is likely to be one optimal clinical pathway. However, even with a powerful AI assistant, there may be two or more acceptable clinical pathways with different trade-offs. This is similar to your GPS software offering you the option of choosing the fastest route, the shortest route, or the route that avoids highways. A clinician and their patient may need to decide among the treatments with the best outcome, with the least pain and inconvenience, the least time off from work or family duties, or the least out-of-pocket expenses. Putting hard statistical numbers on these trade-offs will at least allow patients to know what they are getting in the bargain.
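One way to think about "two or more acceptable pathways" is that an option stays on the list only if no other option is at least as good on every measure and better on at least one. The sketch below applies that filter to three invented pathways. The names, numbers, and the choice of four measures are all assumptions for illustration.

```python
from typing import NamedTuple

class Pathway(NamedTuple):
    name: str
    success: float   # chance of a good outcome (higher is better)
    pain: float      # 0-10 scale (lower is better)
    days_off: float  # time away from work or family (lower is better)
    cost: float      # out-of-pocket expense (lower is better)

def dominates(a, b):
    """True if a is at least as good as b on every measure and better on one."""
    no_worse = (a.success >= b.success and a.pain <= b.pain
                and a.days_off <= b.days_off and a.cost <= b.cost)
    better = (a.success > b.success or a.pain < b.pain
              or a.days_off < b.days_off or a.cost < b.cost)
    return no_worse and better

def acceptable(pathways):
    """Keep only pathways that no other pathway dominates."""
    return [p for p in pathways if not any(dominates(q, p) for q in pathways)]

# Hypothetical options for one patient.
pathways = [
    Pathway("surgery", success=0.92, pain=7, days_off=21, cost=5000),
    Pathway("medication", success=0.85, pain=2, days_off=2, cost=1200),
    Pathway("physiotherapy", success=0.80, pain=3, days_off=10, cost=1500),
]

for p in acceptable(pathways):
    print(p)
```

Here physiotherapy drops out because medication is better on every measure, while surgery and medication both remain: one has the better outcome, the other less pain, time, and expense. That remaining choice is the one the clinician and patient make together, like picking among the routes the GPS offers.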

These are all things we do now – but an AI assistant would allow us to do them better, more consistently, and more up to date with the latest published information.

If for no other reason, this should be a high priority because of the potentially enormous savings to the health care system. Such savings would likely be in the billions of dollars, which justifies a lot of investment in developing this technology. A one-fifteenth share of £20m is nice, but paltry by comparison. All the machine learning technology already exists – now it is just a matter of developing this specific application. Any government investment in developing this application would likely be repaid by many orders of magnitude.