Jun 20 2019

Possible Universal Memory Reported

Universal memory is one of those things you probably didn’t know you wanted (unless you are a computer nerd). However, it is the “holy grail” of computer memory, and if achievable it would revolutionize computers. Now, scientists from Lancaster University in the UK have claimed to have done just that. The practical benefit of this advance, if implemented, would be a significant reduction in the energy consumption of computers. That matters because data centers are projected to consume 20% of global electricity by 2025.

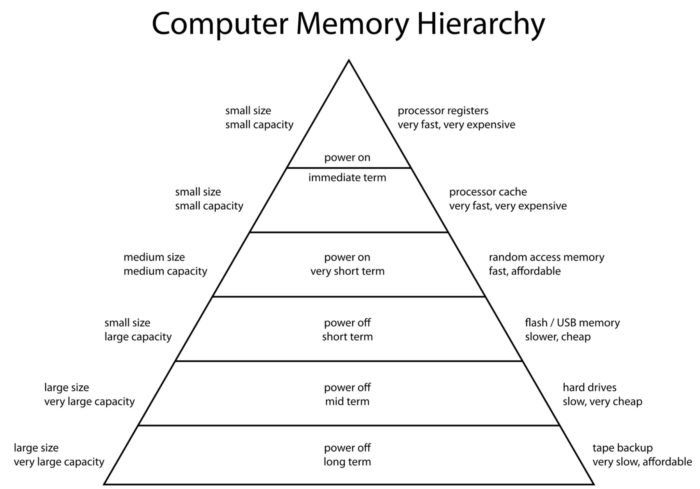

There are several properties that define the theoretically perfect computer memory. Memory exists on a spectrum from volatile to robust. Volatile memory is short-lived, needs active power, and must be refreshed. Robust memory is long-term and does not need active power. Memory should also be stable over long time periods, ideally centuries if not longer. Computer memory also needs to be fast, so data can be written to and read from it quickly. Finally, the less energy a memory system uses to do all this, the better.

The classic problem is that scientists have been unable to come up with a form of computer memory that is simultaneously robust and fast. This has led to a hierarchy of memory, with each type optimized for one specific function. A universal memory, by contrast, would have optimal features for every function.

So, there is processor memory, which is very fast but very volatile. Then there is cache, local memory that is also fast and volatile. Random access memory, or RAM, is the working memory of a computer, and it too is optimized for speed at the expense of being volatile. These types of memory all require active power and are generally used while the computer is operating. For example, when you run a program, it is loaded into RAM (as much of it as will fit, anyway).

Memory storage, by contrast, is robust but relatively slow. This includes flash drives, hard drives, and tape backup, all of which can store information long-term without power. Modern computers therefore have multiple memory types, which requires a lot of moving information around. Part of “booting up” your computer, for example, is loading the operating system from where it is stored on your flash drive or hard drive into volatile memory like RAM.

If, however, we had the perfect memory (something cheap, with large capacity, robust even with the power off, as fast as processor memory, and low in energy use), the entire design of a computer could be simplified and made much more efficient. All computer memory would be robust, so you would never have to boot up the computer. You would not have to shuttle data from your hard drive to RAM, then to cache and the processor, and back again. In theory, this would make computers more energy efficient, more stable, faster, smaller, and perhaps cheaper, since there would be fewer components (depending on how expensive the universal memory itself is).

The paper itself is highly technical, but the conclusion is a good summary:

> We have demonstrated room-temperature operation of non-volatile, charge-based memory cells with compact design, low-voltage write and erase and non-destructive read. The contradictory requirements of non-volatility and low-voltage switching, are achieved by exploiting the quantum-mechanical properties of an asymmetric triple resonant-tunnelling barrier. The compact configuration and junctionless channel with uniform doping suggest good prospects for device scaling, whilst the low-voltages, non-volatility and non-destructive read will minimise the peripheral circuitry required in a complete memory chip. These devices thus represent a promising new emerging memory concept.

In other words, they report a form of memory that is robust, fast, and low-energy. It’s still too early to know whether this exact technology will work out commercially. How will it scale, and how expensive will it be, for example? But it is, if nothing else, a proof of concept: this type of memory can exist, and we seem to be on the path to developing something viable.

While universal memory is the ultimate goal, it’s not an all-or-nothing proposition. Memory technology that unifies any of the above memory applications would help simplify and potentially improve computer design. Intel recently announced something it says is “close” to universal memory, called Optane, which uses 3D XPoint technology (Intel is vague on the details). Optane drives are essentially solid-state drives built on non-volatile memory that is intended to be fast enough to serve as RAM. That makes them excellent solid-state drives, but it seems they are not quite up to RAM speeds yet:

> Intel is providing software that will enable computers to operate Optane as memory as opposed to storage, but it will be slow. Optane drive latencies max out at 7 or 8 microseconds—way faster than flash, which takes hundreds of microseconds, but not touching DRAM’s low hundreds of nanoseconds. The Optane drives are fettered by the interface they use: They connect to the storage interface, not the memory interface.

So not there yet.
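To put the quoted latencies in proportion, the ratios can be worked out directly. This is a minimal Python sketch, not a benchmark: the quote gives ranges, so the flash and DRAM values here are assumed round numbers taken from the optimistic end of each range, and the variable names are my own. The results are order-of-magnitude only.

```python
# Rough latency comparison using the figures in the quote above.
# Assumption: flash = 100 us and DRAM = 100 ns, i.e. the low end of
# "hundreds of microseconds" and "low hundreds of nanoseconds".
NS_PER_US = 1_000

optane_ns = 8 * NS_PER_US    # "max out at 7 or 8 microseconds"
flash_ns = 100 * NS_PER_US   # assumed value for flash
dram_ns = 100                # assumed value for DRAM

optane_vs_dram = optane_ns / dram_ns    # how many times slower than DRAM
flash_vs_optane = flash_ns / optane_ns  # how many times faster than flash

print(f"Optane is about {optane_vs_dram:.0f}x slower than DRAM")
print(f"Flash is about {flash_vs_optane:.1f}x slower than Optane")
```

Even with these generous assumptions, Optane sits roughly an order of magnitude closer to flash than to DRAM in relative terms, which is the gap the quote is describing.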

Steps toward unifying different memory types, on the path to truly universal memory, are milestones in an otherwise steady, incremental advance of computer technology. One obvious benefit is the continuation of the trend toward faster, smaller, better, cheaper computers. But there is also a real practical benefit, as I mentioned above. Data processing is an increasingly vital part of our world’s infrastructure. By some projections it is approaching 20% of global electricity production, and who knows where it will level off.

I tried to source that 20% figure, but it will take more time than I have right now. From what I can tell, US data centers will use 73 billion kWh in 2020, while total US electricity production was 3.95 trillion kWh in 2018. That’s less than 2% of the total. Still, it’s a lot, and it will likely increase significantly, even without cryptocurrency. The cryptocurrency issue, however, is important, because the bigger lesson is that we cannot necessarily predict new applications that will dramatically increase demand for number crunching. I suspect that as the efficiency of computer processing increases, our applications will also increase. That doesn’t mean there is no benefit to improved efficiency; we will need to prioritize efficiency and make advances just to slow the growth in energy use by data centers.
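As a quick check on the “less than 2%” arithmetic, here is a minimal Python sketch that divides the two figures quoted in the paragraph above. The constant names are my own. Note that it divides a 2020 projection by 2018 production, so it gives a rough share rather than a like-for-like comparison.

```python
# Share of US electricity used by data centers, from the figures above.
# Caveat: the numerator is a 2020 projection, the denominator is 2018 production.
DATA_CENTER_KWH_2020 = 73e9       # projected US data-center use, kWh
US_PRODUCTION_KWH_2018 = 3.95e12  # total US electricity production, kWh

share = DATA_CENTER_KWH_2020 / US_PRODUCTION_KWH_2018
print(f"Data-center share: {share:.2%}")  # prints "Data-center share: 1.85%"
```

That works out to roughly 1.85%, consistent with the estimate above and well short of the projected 20% for global consumption.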

At this point, whatever we do, a significant portion of our carbon footprint comes from computer processing. So this industry matters to global energy consumption, and advances like universal memory could have a large impact.