Apr 22, 2024

I recently received the following question to the SGU e-mail:

“I have had several conversations with friends/colleagues lately regarding indigenous beliefs/stories. They assert that not believing these based on oral histories alone is morally wrong and ignoring a different cultures method of knowledge sharing. I do not want to be insensitive, and I would never argue this point directly with an indigenous person (my friends asserting these points are all white). But it really rubs me the wrong way to be told to believe things without what I would consider more concrete evidence. I’m really not sure how to comport myself in these situations. I would love to hear any thoughts you have on this topic, as I don’t have many skeptical friends.”

I also frequently encounter this tension, between a philosophical dedication to scientific methods and respect for indigenous cultures. Similar tensions come up in other contexts, such as indigenous cultures that hunt endangered species. These tensions are sometimes framed in terms of “decolonization”, defined as “the process of freeing an institution, sphere of activity, etc. from the cultural or social effects of colonization.” Here is a more detailed description:

“Decolonization is about “cultural, psychological, and economic freedom” for Indigenous people with the goal of achieving Indigenous sovereignty — the right and ability of Indigenous people to practice self-determination over their land, cultures, and political and economic systems.”

I completely understand this concept and think the project is legitimate. To “decolonize” an indigenous culture you have to do more than just physically remove foreign settlers. Psychological and cultural colonization is harder to remove. And often cultural colonization was very deliberate, such as missionaries spreading the “correct” religion to “primitive” people.

Continue Reading »

Apr 01, 2024

I have not written before about Havana Syndrome, mostly because I have not been able to come to any strong conclusions about it. In 2016 there was a cluster of strange neurological symptoms among people working at the US Embassy in Havana, Cuba. They would suddenly experience headaches, ringing in the ears, vertigo, blurry vision, nausea, and cognitive symptoms. Some reported loud whistles, buzzing or grinding noise, usually at night while they were in bed. Perhaps most significantly, some people who reported these symptoms claim that there was a specific location sensitivity – the symptoms would stop if they left the room they were in and resume if they returned to that room.

These reports led to what is popularly called “Havana Syndrome”, and what the US government calls “anomalous health incidents” (AHIs). Eventually diplomats in other countries also reported similar AHIs. Havana Syndrome, however, remains a mystery. In trying to understand the phenomenon I see two reasonable narratives or hypotheses that can be invoked to make sense of all the data we have. I don’t think we have enough information to definitively reject either narrative, and each has its advocates.

One narrative is that Havana Syndrome is caused by a weapon, thought to be a directed pulsed electromagnetic or acoustic device, used by our adversaries to disrupt American and Canadian diplomats and military personnel. The other is that Havana Syndrome is nothing more than preexisting conditions or subjective symptoms caused by stress or perhaps environmental factors. All it would take is a cluster of diplomats with new onset migraines, for example, to create the belief in Havana Syndrome, which then takes on a life of its own.

Both hypotheses are at least plausible. Neither can be rejected based on basic science as impossible, and I would be cautious about rejecting either based on our preexisting biases or which narrative feels more satisfying. For a skeptic, the notion that this is all some kind of mass delusion is a very compelling explanation, and it may be true. If this turns out to be the case it would definitely be satisfying. We can add Havana Syndrome to the list of historical mass delusions, and those of us who lecture on skeptical topics can all add a slide to our PowerPoint presentations detailing this incident.

Continue Reading »

Mar 29, 2024

I don’t think I know anyone personally who doesn’t have strong opinions about music – which genres they like, and how the quality of music may have changed over time. My own sense is that music as a cultural phenomenon is incredibly complex, no one (in my social group) really understands it, and our opinions are overwhelmed by subjectivity. But I am fascinated by it, and often intrigued by scientific studies that try to quantify our collective cultural experience. And I know there are true experts in this topic, musicologists and even ethnomusicologists, but I haven’t found good resources for science communication in this area (please leave any recommendations in the comments).

In any case, here are some random bits of music culture science that I find interesting. A recent study analyzing 12,000 English language songs over the last 40 years has found that songs have been getting simpler and more repetitive over time. They are using fewer words with greater repetition. Further, the structure of the lyrics is getting simpler, and they are more readable and easier to understand. Also, the use of emotional words has increased, and it has become overall more negative and more personal. I have to note this is a single study and there are some concerns about the software used in the analysis, but while this is being investigated the authors state that it is unlikely any glitch will alter their basic findings.

But taken at face value, it’s interesting that these findings generally fit with my subjective experience. This doesn’t necessarily make me more confident in the findings, and I do worry that I am just viewing these results through my confirmation bias filter. Still, it not only fits what I have perceived in music but in culture in general, especially with social media. We should be wary of simplistic explanations, but I wonder if this is mainly due to a general competition for attention. Over time there is a selective pressure for media that is more immediate, more emotional, and easier to consume. The authors also speculate that it may reflect our changing habits in terms of consuming media. There is a greater tendency to listen to music, for example, in the background, while doing other things (perhaps several other things).

Continue Reading »

Feb 12, 2024

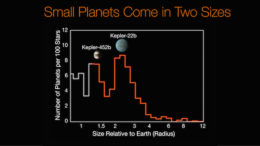

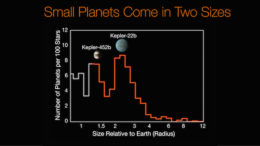

As of this writing, there are 5,573 confirmed exoplanets in 4,146 planetary systems. That is enough exoplanets, planets around stars other than our own sun, that we can do some statistics to describe what’s out there. One curious pattern that has emerged is a relative gap in the radii of exoplanets between 1.5 and 2.0 Earth radii. What is the significance, if any, of this gap?

First we have to consider if this is an artifact of our detection methods. The most common method astronomers use to detect exoplanets is the transit method – carefully observe a star over time, precisely measuring its brightness. If a planet moves in front of the star, the brightness will dip, remain low while the planet transits, and then return to its baseline brightness. This produces a classic light curve that astronomers recognize as a planet orbiting that star in the plane of observation from the Earth. The first time such a dip is observed that is a suspected exoplanet, and if the same dip is seen again that confirms it. This also gives us the orbital period. This method is biased toward exoplanets with short periods, because they are easier to confirm. If an exoplanet has a period of 60 years, that would take 60 years to confirm, so we haven’t confirmed a lot of those.
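To make the transit logic concrete, here is a minimal sketch in Python. It is not how any real survey pipeline works. The light curve is synthetic, and every number in it (period, depth, duration, noise level, threshold) as well as the function name `find_dip_starts` are assumptions made up for illustration. It simply flags each time the brightness falls below a threshold and uses the spacing between dips as the period estimate.

```python
import random


def find_dip_starts(flux, threshold):
    """Return the sample indices where brightness first falls below threshold."""
    starts = []
    for i in range(1, len(flux)):
        # A dip begins where this sample is below threshold and the previous one was not.
        if flux[i] < threshold and flux[i - 1] >= threshold:
            starts.append(i)
    return starts


# Hypothetical values chosen only to build a toy light curve.
N_SAMPLES = 1000     # number of brightness measurements
PERIOD = 200         # samples between transits
DURATION = 10        # samples each transit lasts
DEPTH = 0.01         # fractional drop in brightness during a transit
FIRST_TRANSIT = 50   # sample index of the first transit

# Baseline brightness of 1.0 with a little measurement noise (fixed seed).
rng = random.Random(0)
flux = [1.0 + rng.gauss(0.0, 0.0005) for _ in range(N_SAMPLES)]

# Subtract a box-shaped dip each time the planet crosses the star.
for start in range(FIRST_TRANSIT, N_SAMPLES, PERIOD):
    for i in range(start, min(start + DURATION, N_SAMPLES)):
        flux[i] -= DEPTH

# One dip is only a candidate; repeated dips give the orbital period.
starts = find_dip_starts(flux, threshold=1.0 - DEPTH / 2)
gaps = [later - earlier for earlier, later in zip(starts, starts[1:])]
estimated_period = sum(gaps) / len(gaps) if gaps else None

print("dip starts:", starts)
print("estimated period (samples):", estimated_period)
```

Note that with only one dip in the data the period estimate is `None`, which is the toy version of the bias described above: a long-period planet has to be watched for at least one full orbit before it can be confirmed.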

There is also the wobble method. We can observe the path that a star takes through the sky. If that path wobbles in a regular pattern that is likely due to the gravitational tug from a large planet or other dark companion that is orbiting it. This method favors more massive planets closer to their parent star. Sometimes we can also directly observe exoplanets by blocking out their parent star and seeing the tiny bit of reflected light from the planet. This method favors large planets distant from their parent star. There are also a small number of exoplanets discovered through gravitational microlensing, an effect of general relativity.

None of these methods, however, explain the 1.5 to 2.0 radii gap. It’s also likely not a statistical fluke given the number of exoplanets we have discovered. Therefore it may be telling us something about planetary evolution. But there are lots of variables that determine the size of an exoplanet, so it can be difficult to pin down a single explanation.

Continue Reading »

Feb 06, 2024

Have you ever been in a discussion where the person with whom you disagree dismisses your position because you got some tiny detail wrong or didn’t know the tiny detail? This is a common debating technique. For example, opponents of gun safety regulations will often use the relative ignorance of proponents regarding gun culture and technical details about guns to argue that they therefore don’t know what they are talking about and their position is invalid. But, at the same time, GMO opponents will often base their arguments on a misunderstanding of the science of genetics and genetic engineering.

Dismissing an argument because of an irrelevant detail is a form of informal logical fallacy. Someone can be mistaken about a detail while still being correct about a more general conclusion. You don’t have to understand the physics of the photoelectric effect to conclude that solar power is a useful form of green energy.

There are also some details that are not irrelevant, but may not change an ultimate conclusion. If someone thinks that industrial release of CO2 is driving climate change, but does not understand the scientific literature on climate sensitivity, that doesn’t make them wrong. But understanding climate sensitivity is important to the climate change debate; it just happens to align with what proponents of anthropogenic global warming are concluding. In this case you need to understand what climate sensitivity is, and what the science says about it, in order to understand and counter some common arguments deniers use to argue against the science of climate change.

What these few examples show is a general feature of the informal logical fallacies – they are context dependent. Just because you can frame someone’s position as a logical fallacy does not make their argument wrong (thinking this is the case is the fallacy fallacy). What logical fallacy is using details to dismiss the bigger picture? I have heard this referred to as a “Reverse Gish Gallop”. I don’t use this term because I don’t think it captures the essence of the fallacy. I have used the term “weaponized pedantry” before and I think that is better.

Continue Reading »

Jan 29, 2024

There is an ongoing battle in our society to control the narrative, to influence the flow of information, and thereby move the needle on what people think and how they behave. This is nothing new, but the mechanisms for controlling the narrative are evolving as our communication technology evolves. The latest addition to this technology is the large language model AIs.

“The media”, of course, has been a large focus of this competition. On the right there are constant complaints of the “liberal bias” in the media, and on the left there are complaints of the rise of right-wing media which they feel is biased and radicalizing. The culture wars focus mainly on schools, because those schools teach not only facts and knowledge but convey the values of our society. The left views DEI (diversity, equity, and inclusion) initiatives as promoting social justice while the right views them as brainwashing the next generation with liberal propaganda. This is an oversimplification, but it is the basic dynamic. Even industry has been targeted by the culture wars – which narratives are specific companies supporting? Is Disney pro-gay? Which companies fly BLM or LGBTQ flags?

But increasingly “the narrative” (the overall cultural conversation) is not being controlled by the media, educational system, or marketing campaigns. It’s being controlled by social media. This is why, when the power of social media started to become apparent, many people panicked. Suddenly it seemed we had ceded control of the narrative to a few tech companies, who had apparently decided that destroying democracy was a price they were prepared to pay for maximizing their clicks. We now live in a world where YouTube algorithms can destroy lives and relationships.

Continue Reading »

Jan 12, 2024

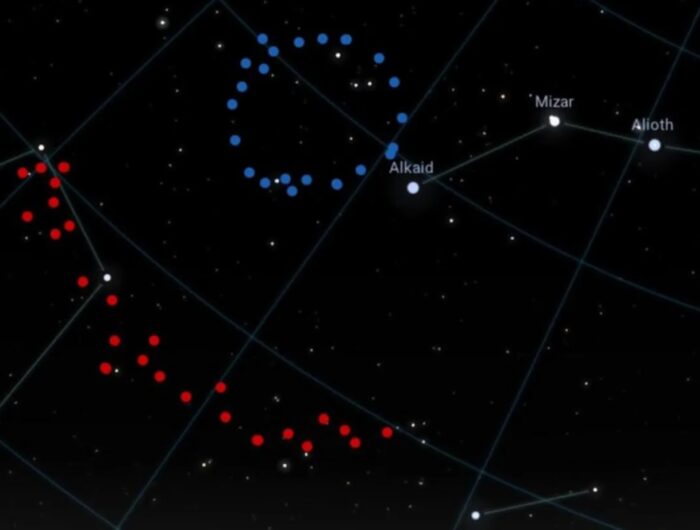

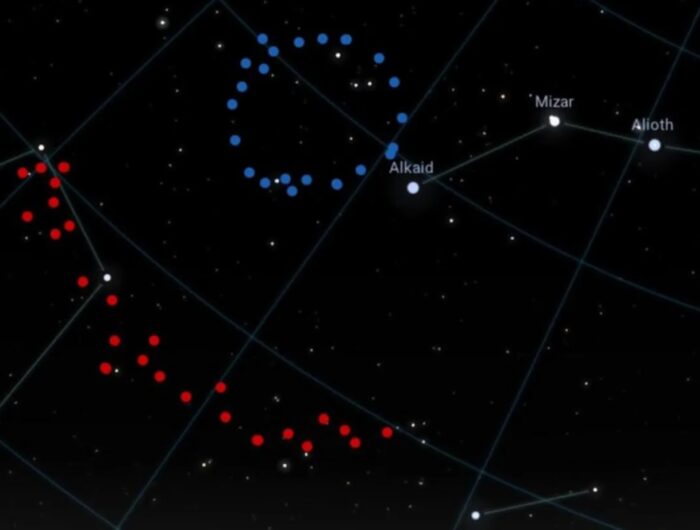

University of Central Lancashire (UCLan) PhD student Alexia Lopez, who two years ago discovered a giant arc of galaxy clusters in the distant universe, has now discovered a Big Ring. This (if real) is one of the largest structures in the observable universe at 1.3 billion light years in diameter. The problem is – such a large structure should not be possible based on current cosmological theory. It violates what is known as the Cosmological Principle (CP), the notion that at the largest scales the universe is uniform with evenly distributed matter.

The CP actually has two components. One is called isotropy, which means that if you look in any direction in the universe, the distribution of matter should be the same. The other component is homogeneity, which means that wherever you are in the universe, the distribution of matter should be smooth. Of course, this is only true beyond a certain scale. At small scale, like within a galaxy or even galaxy cluster, matter is not evenly distributed, and it does matter which direction you look. But at some point in scale, isotropy and homogeneity are the rule. Another way to look at this is – there is an upper limit to the size of any structure in the universe. The Giant Arc and Big Ring are both too big. If the CP is correct, they should not exist. There are also a handful of other giant structures in the universe, so these are not the first to violate the CP.

The Big Ring is just that, a two-dimensional structure in the shape of a near-perfect ring facing Earth (likely not a coincidence but rather the reason it was discoverable from Earth). Alexia Lopez later discovered that the ring is actually a corkscrew shape. The Giant Arc is just that, the arc of a circle. Interestingly, it is in the same region of space and the same distance as the Big Ring, so the two structures exist at the same time and place. This suggests they may be part of an even bigger structure.

How certain are we that these structures are real, and not just a coincidence? Professor Don Pollacco, of the department of physics at the University of Warwick, said the probability of this being a statistical fluke is “vanishingly small”. But still, it seems premature to hang our hat on these observations just yet. I would like to see some replications and attempts at poking holes in Lopez’s conclusions. That is the normal process of science, and it takes time to play out. But so far, it seems like solid work.

Continue Reading »

Jan 08, 2024

Categorization is critical in science, but it is also very tricky, often deceptively so. We need to categorize things to help us organize our knowledge, to understand how things work and relate to each other, and to communicate efficiently and precisely. But categorization can also be a hindrance – if we get it wrong, it can bias or constrain our thinking. The problem is that nature rarely cleaves in straight clean lines. Nature is messy and complicated, almost as if it is trying to defy our arrogant attempts at labeling it. Let’s talk a bit about how we categorize things, how it can go wrong, and why it matters.

We can start with an example that might seem like a simple category – what is a planet? Of course, any science nerd knows how contentious the definition of a planet can be, which is why it is a good example. Astronomers first defined them as wandering stars – the points of light that were not fixed but seemed to wander throughout the sky. There was something different about them. This is often how categories begin – we observe a phenomenon we cannot explain and so the phenomenon is the category. This is very common in medicine. We observe a set of signs and symptoms that seem to cluster together, and we give it a label. But once we had a more evolved idea about the structure of the universe, and we knew that there are stars and stars have lots of stuff orbiting around them, we needed a clean way to divide all that stuff into different categories. One of those categories is “planet”. But how do we define planet in an objective, intuitive, and scientifically useful way?

This is where the concept of “defining characteristic” comes in. A defining characteristic is, “A property held by all members of a class of object that is so distinctive that it is sufficient to determine membership in that class. A property that defines that which possesses it.” But not all categories have a clear defining characteristic, and for many categories a single characteristic will never suffice. Scientists can and do argue about which characteristics to include as defining, which are more important, and how to police the boundaries of that characteristic.

Continue Reading »

Dec 21, 2023

This is not exactly a “best of” because I don’t know how that applies to science news, but here are what I consider to be the most impactful science news stories of 2023 (or at least the ones that caught my biased attention).

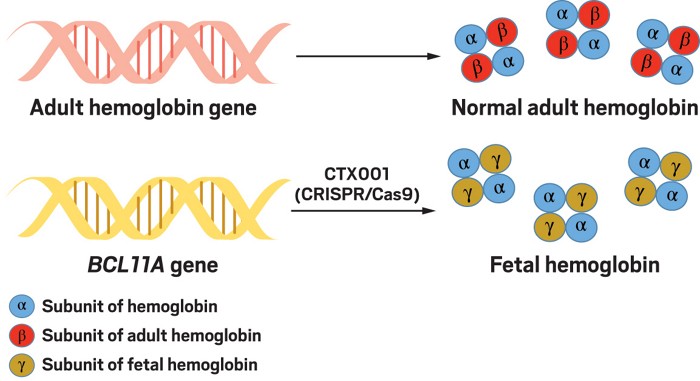

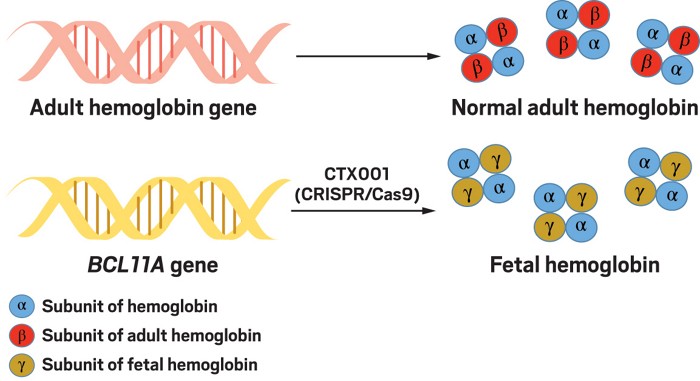

This was a big year for medical breakthroughs. We are seeing technologies that have been in the works for decades come to fruition with specific applications. The FDA recently approved a CRISPR treatment for sickle cell anemia. The UK already approved this treatment for sickle cell and beta thalassemia. This is the first CRISPR-based treatment approval. The technology itself is fascinating – I have been writing about CRISPR since it was developed. It’s a technology for making specific alterations to DNA at a specific target site. It can be used to permanently inactivate a gene, insert a new gene, or reversibly turn a gene off and then on again. Importantly, the technology is faster and cheaper than prior technologies. It is a powerful genetics research tool, and is a boon to genetic engineering. But since the beginning we have also speculated about its potential as a medical intervention, and now we have proof of concept.

The procedure is to take bone marrow from the patient, then use CRISPR to silence a specific gene that turns off the production of fetal hemoglobin. The altered blood stem cells are then transplanted back into the patient. Both of these diseases, sickle cell and thalassemia, are caused by genetic mutations affecting adult hemoglobin. The fetal hemoglobin is unaffected. By turning back on the production of fetal hemoglobin, this effectively reduces or even eliminates the negative effects of the mutations. Sickle cell patients do not go into crisis and thalassemia patients do not need constant blood transfusions.

This is an important milestone – we can control the CRISPR technique sufficiently that it is a safe and effective tool for treating genetically based diseases. This does not mean we can now cure all genetic diseases. There is still the challenge of getting the CRISPR to the right cells (using some vector). Bone marrow-based disease is low-hanging fruit because we can take the cells to the CRISPR. But still – that is a lot of potential disease targets – anything blood or bone marrow based. Also, any place in the body where we can inject CRISPR into a contained space, like the eye, is an easy target. Other targets will not be as easy, but that technology is advancing as well. This all opens up a new type of medical intervention, through precise genetic alteration. Every future story about this technology will likely refer back to 2023 as the year of the first approved CRISPR treatment.

Continue Reading »

Dec 19, 2023

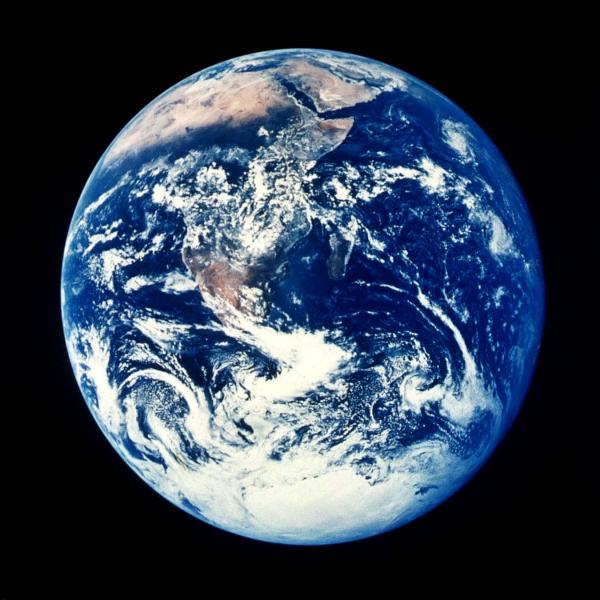

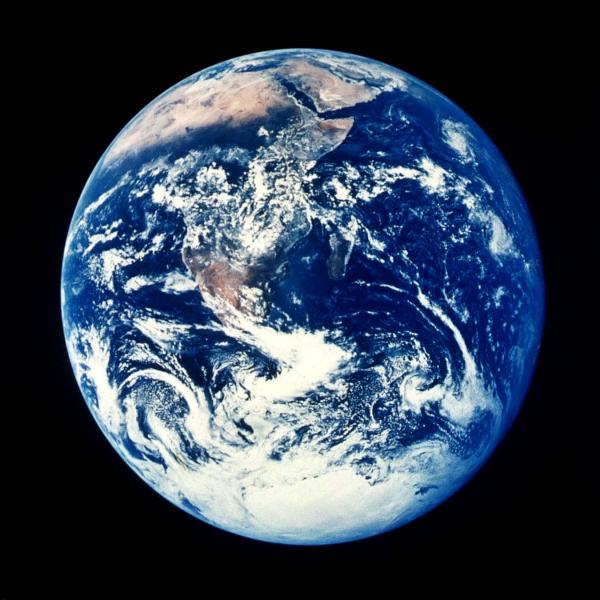

One of the biggest questions of exoplanet astronomy is how many potentially habitable planets are out there in the galaxy. By one estimate the answer is 6 billion Earth-like planets in the Milky Way. But of course we have to set parameters and make estimates, so this number can vary significantly depending on details.

And yet – how many exoplanets have we discovered so far that are “Earth-like”, meaning they are a rocky world orbiting a sun-like star in the habitable zone, not tidally locked to their parent star, with the potential for liquid water on the surface? Zero. Not a single one, out of the over 5,500 exoplanets confirmed so far. This is not a random survey, however, because it is biased by the techniques we use to discover exoplanets, which favor larger worlds and worlds closer to their stars. But still, zero is a pretty disappointing number.

I am old enough to remember when the number of confirmed exoplanets was also zero, and when the first one was discovered in 1995. Basically since then I have been waiting for the first confirmed Earth-like exoplanet. I’m still waiting.

A recent simulation, if correct, may mean there are even fewer Earth-like exoplanets than we think. The study looks at the transition from a planet like Earth to one like Venus, where a runaway greenhouse effect leads to a dry and sterile planet with a surface temperature of hundreds of degrees. The question being explored by this simulation is this – how delicate is the equilibrium we have on Earth? What would it take to tip the Earth into a climate similar to that of Venus? The answer is – not much.

Continue Reading »
