Jan 22 2018

False Alarm

On January 13 a state-wide alarm was sent out in Hawaii warning of an incoming missile. “BALLISTIC MISSILE THREAT INBOUND TO HAWAII. SEEK IMMEDIATE SHELTER. THIS IS NOT A DRILL,” the emergency alert read. For the next 38 minutes the citizens of Hawaii had the reasonable belief that they were about to die, especially given the recent political face-off with North Korea over its nuclear missiles.

However, within minutes the Governor and the Hawaiian government knew that this was a false alarm, resulting from a technician hitting the wrong button. So, there are two massive failures here – sending out the alarm in the first place, and taking 38 minutes to officially send out the correction. (They did tweet that it was a false alarm, but the retraction was not generally known and it wasn’t certain that it was official.)

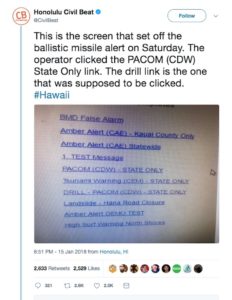

Then the media was shown a screen capture of the menu options the operator would have seen (image above). However, this is not a capture of the actual menu, but something similar to what the operator would have seen. So some headlines read that the screen capture was fake, but it does represent what the operator would have seen. You are probably now as confused as that operator.

The Hawaiian state government did review the situation and “fixed” it by adding a second operator who has to confirm any real missile warnings. They also added a menu option to issue a false alarm message – because there previously wasn’t one, and that is why it took 38 minutes to issue the false alarm.

While these steps are good, they are a long way away from addressing the real problem. What I think this represents is the general reality that our technological civilization is more complex than we can optimally manage. We simply don’t have the culture of competency necessary to keep things like this from happening.

It seems to me that in general people have a child-like assumption of competency for any large institution. This assumption is reinforced by dramatic movies showing a level of competency and control that is really a fantasy. Institutions are just made of people, who are incredibly flawed and limited.

Atul Gawande wrote about this in The Checklist Manifesto. Essentially we have historically dealt with complexity through training, creating professionals with incredible knowledge and technical skill able to handle complex technology or situations. This, of course, is often necessary. But, Gawande argues, we have passed the point where training alone can deal with the complexity we face, resulting in error.

Further, in many situations minor errors can have catastrophic results – crashing a plane, operating on the wrong body part, or sending out a false alarm. In such situations we need error minimization orders of magnitude beyond what a mere mortal can accomplish. So how do we do this?

Gawande recommends the humble checklist – a list of procedures to be carried out in order, and checked off to ensure that nothing is missed. The checklist works, and is increasingly used in medicine and elsewhere – but perhaps not everywhere it should.

Error minimization is also accomplished through redundancy, which I have heard referred to as the Swiss cheese model. Every person is like a slice of Swiss cheese, with holes. If you line up several slices, however, any one hole is less likely to go all the way through. In other words, it is less likely that two people will make the same uncommon mistake at the same time. The probabilities of failure multiply, reducing the error rate by orders of magnitude.
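The arithmetic behind the Swiss cheese model can be sketched in a few lines of Python. The 1-in-100 miss rate below is a made-up number for illustration, and the calculation assumes each layer fails independently of the others, which real systems only approximate.

```python
from math import prod


def combined_miss_rate(miss_rates):
    """Chance that an error slips past every layer.

    Assumes the layers fail independently, so the individual
    miss rates simply multiply.
    """
    return prod(miss_rates)


# Hypothetical figure: each checker misses 1 error in 100.
one_layer = combined_miss_rate([0.01])
two_layers = combined_miss_rate([0.01, 0.01])
three_layers = combined_miss_rate([0.01, 0.01, 0.01])

for name, rate in [("one", one_layer), ("two", two_layers), ("three", three_layers)]:
    print(f"{name} layer(s): roughly 1 error in {round(1 / rate):,} gets through")
```

Under those assumptions each added layer cuts the miss rate by another factor of 100. If the layers share a blind spot (the same confusing menu, for example) the failures are no longer independent and the benefit is smaller.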

What the missile false alarm event shows is that there are other layers to error minimization, and increasingly that means having a computer user interface that is designed to be user friendly, intuitive, and to minimize errors.

I think anyone looking at that screen capture will have sympathy for the poor operator. Sure, he screwed up. But in a way he was set up for failure by the system. The system had holes, and he was just the unlucky chump to fall through one of them in a very public way.

Anyone who works for a large institution will be familiar with such situations. Your workplace is likely not uniquely incompetent, but is probably fairly typical of every workplace out there, which can be a scary thought. Errors like hitting the wrong choice on that menu must happen all the time. This error, however, happened to affect an entire state in a dramatic way. The error itself was not dramatic, only the result.

Creating an intuitive and optimized user interface is an art form and a science unto itself, and it is amazing at this point in computer technology that it is so often neglected.

I use the Epic electronic medical system, which is used by many large medical institutions. It is huge in the industry – and the user interface is terrible. The arrangement of information and options on the screen is not intuitive or optimized, there is often too much information that is unnecessary, and processes are unnecessarily complex.

Further, user warnings are terrible. You get warnings when they are unnecessary, and you don’t get them when they can be helpful. So you get warning fatigue, and tend to just click through warnings that are unhelpful, making it more likely to miss an actually useful warning.

So I totally sympathize with this poor operator who faced that terrible menu that was not organized in any way, did not have unambiguously descriptive labels, and did not include a real warning to let them know unequivocally what they were about to do. Apparently they just got an “are you sure” button, like we get many times a day just for doing common computer operations – completely worthless.
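To make the idea of a real warning concrete, here is a minimal Python sketch of one common alternative to a generic “are you sure” prompt: the warning spells out the consequence, and the operator has to type a phrase specific to the action. Everything in it (the `AlertAction` class, the labels, the confirmation phrases) is hypothetical and invented for illustration. It is not how the Hawaii system worked or how it was fixed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertAction:
    """A hypothetical menu entry with an unambiguous label."""
    label: str
    is_live: bool
    confirm_phrase: str  # what the operator must type to proceed


# Hypothetical entries: the drill and the live alert look nothing alike.
DRILL = AlertAction("DRILL - internal test, nothing sent to the public", False, "DRILL")
LIVE = AlertAction("LIVE - missile warning sent to every phone in the state", True, "SEND LIVE ALERT")


def warning_text(action: AlertAction) -> str:
    """Spell out the consequence instead of asking 'are you sure?'."""
    kind = "a REAL public alert" if action.is_live else "an internal drill"
    return (
        f"You are about to trigger {kind}: {action.label}\n"
        f"Type '{action.confirm_phrase}' to continue."
    )


def confirm(action: AlertAction, typed: str) -> bool:
    """Proceed only if the operator typed this action's exact phrase."""
    return typed.strip().upper() == action.confirm_phrase


print(warning_text(LIVE))
print(confirm(LIVE, "yes"))              # a reflexive answer is rejected
print(confirm(LIVE, "send live alert"))  # the specific phrase is accepted
```

The point of the design is that a reflexive click or a habitual “yes” cannot confirm the dangerous action, and the phrase for a drill will not confirm a live alert.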

It is obvious that the software being used had no serious user interface design, no failure analysis, risk assessment, or error mitigation. If reports in the wake of this incident are accurate, this is typical of government applications, which seems plausible.

And all of this is just one tiny slice of our complex technological civilization. The entire field of risk assessment needs to be taken more seriously, and should be ubiquitous in any large organization.

And please, software developers – hire dedicated user interface experts. Your regular software engineers cannot do this. Really. The difference is between an amateur B-movie and a professional film made by industry experts. There is a lot of knowledge, skill, and nuance that goes into communicating with people, whether it is through film or a user interface.

Any software that is used to prescribe medication, or send a missile launch warning, should be tweaked as hell, and frequently reviewed and updated, with feedback from the actual people using the software. You also can’t fix a bad user interface through training alone.

The missile episode is also an example of how the stakes are getting higher. Our systems are not only complex, they are increasingly interdependent and run critical aspects of our lives.

In short we need to have thoughtful systems in place that allow flawed humans to operate with minimal error, and those systems need systems to make sure they are optimized. This should be standard procedure, taken for granted as part of any process. That is the culture we need.

By coincidence I am in Hawaii this week on vacation. I will be seeing the Pearl Harbor memorial later today – that is another case history in the consequences of tiny errors in a system set up to fail.