Mar 21, 2023

Are you familiar with the “lumper vs splitter” debate? This refers to any situation in which there is some controversy over exactly how to categorize complex phenomena, specifically whether to favor the fewest categories based on similarities, or the greatest number of categories based on every difference. For example, in medicine we need to divide the world of diseases into specific entities. Some diseases are very specific, but many are highly variable. For the variable diseases, do we lump every type into a single disease category, or do we split them into different disease types? Lumping is clean but can gloss over important details. Splitting endeavors to capture all detail, but can create a categorical mess.

As is often the case, an optimal approach likely combines both strategies, trying to leverage the advantages of each. Therefore we often have disease headers with subtypes below to capture more detail. But even there the debate does not end – how far do we go splitting out subtypes of subtypes?

The debate also happens when we try to categorize ideas, not just things. Logical fallacies are a great example. You may hear of very specific logical fallacies, such as the “argument ad Hitlerum”, which is an attempt to refute an argument by tying it somehow to something Hitler did, said, or believed. But really this is just a specific instance of a “poisoning the well” logical fallacy. Does it really deserve its own name? But it’s so common it may be worth pointing out as a specific case. In my opinion, whatever system is most useful is the one we should use, and in many cases that’s the one that facilitates understanding. Knowing how different logical fallacies are related helps us truly understand them, rather than just memorize them.

A recent paper enters the “lumper vs splitter” fray with respect to cognitive biases. The authors do not frame their proposal in these terms, but it’s the same idea. The paper is “Toward Parsimony in Bias Research: A Proposed Common Framework of Belief-Consistent Information Processing for a Set of Biases.” Parsimony means being economical or frugal; the word is often applied to money, but it also applies to ideas and labels. The authors argue that we should lump specific cognitive biases into categories that represent the underlying cognitive processes that unify them.

Mar 9, 2023

Psychiatry, psychology, and all aspects of mental health are a challenging area because the clinical entities we are dealing with are complex and mostly subjective. Diagnoses are perhaps best understood as clinical constructs – a way of identifying and understanding a mental health issue, but not necessarily a core neurological phenomenon. In other words, things like bipolar disorder are identified, categorized, and diagnosed based upon a list of clinical signs and symptoms. But this is a descriptive approach, and may not correlate with specific circuitry in the brain. Researchers are making progress finding the “neuroanatomical correlates” of known clinical entities, but such correlates are mostly partial and statistical. Further, there are culture, personality, and environment to deal with, which significantly influence how underlying brain circuitry manifests clinically. Also, not all mental health diagnoses are equal – some are likely to be a lot closer to discrete brain circuitry than others.

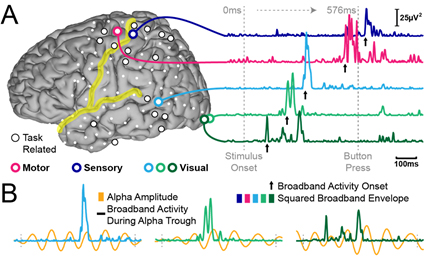

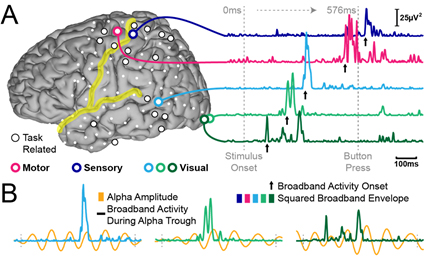

Despite all of these challenges, researchers are still trying to move mental health from a purely descriptive endeavor to a more biological approach, where appropriate. There are a number of ways to do this. The most obvious is to image the brain itself. Such imaging can be anatomical (taking a picture of the physical anatomy of the brain, such as a CT scan or MRI scan) or functional (looking at some functional aspect of the brain, like EEG or functional MRI). This kind of research is producing a steady stream of information, finding correlations with mental health disorder states, but few of these findings have progressed to the point of being clinically useful. To be useful for research, all we need is sufficient statistical significance. But to be useful clinically, to actually determine how to treat an individual person, a test needs sufficient accuracy (sensitivity and specificity) to guide treatment decisions. That is a much higher bar than basic research requires.
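
To make the gap between research significance and clinical usefulness concrete, here is a minimal Python sketch using made-up numbers (not from any study): a hypothetical biomarker with 80% sensitivity and 80% specificity, applied to a population in which 5% of the people tested have the disorder. The function name `screening_summary` is purely illustrative.

```python
def screening_summary(prevalence, sensitivity, specificity, population=10_000):
    """Expected test outcomes and positive predictive value (PPV).

    All inputs are hypothetical; this only illustrates the arithmetic.
    """
    sick = prevalence * population
    healthy = population - sick
    true_pos = sensitivity * sick            # sick people the test flags
    false_neg = sick - true_pos              # sick people the test misses
    true_neg = specificity * healthy         # healthy people the test clears
    false_pos = healthy - true_neg           # healthy people wrongly flagged
    ppv = true_pos / (true_pos + false_pos)  # chance a positive result is real
    return {
        "true_pos": true_pos,
        "false_pos": false_pos,
        "false_neg": false_neg,
        "true_neg": true_neg,
        "ppv": ppv,
    }


summary = screening_summary(prevalence=0.05, sensitivity=0.80, specificity=0.80)
print(round(summary["ppv"], 3))  # prints 0.174
```

With these hypothetical numbers there are 400 true positives against 1,900 false positives, so only about 17% of positive results are real. This illustrates one reason a marker can show a clear group-level difference and still be a poor guide for treating an individual patient.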

There is also another biological way of evaluating mental health states – molecular biomarkers. This approach stems from the fact that different types of cells activate different sets of genes – so brain cells activate brain genes, while liver cells activate liver genes. Also, one type of cell will activate genes at different intensities during different functions. So when the pancreas needs to create a lot of insulin, the insulin genes become more active. We can detect the RNA that is produced when specific genes are activated, or patterns of RNA when suites of genes are activated. These patterns can serve as a biomarker signature of specific functional states.

Feb 16, 2023

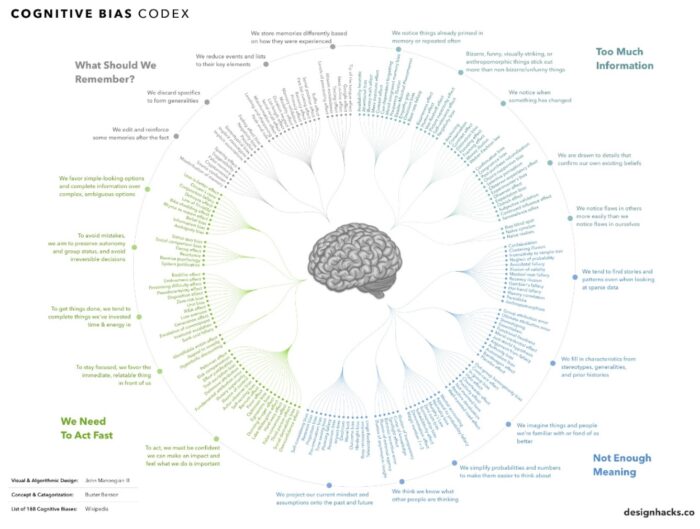

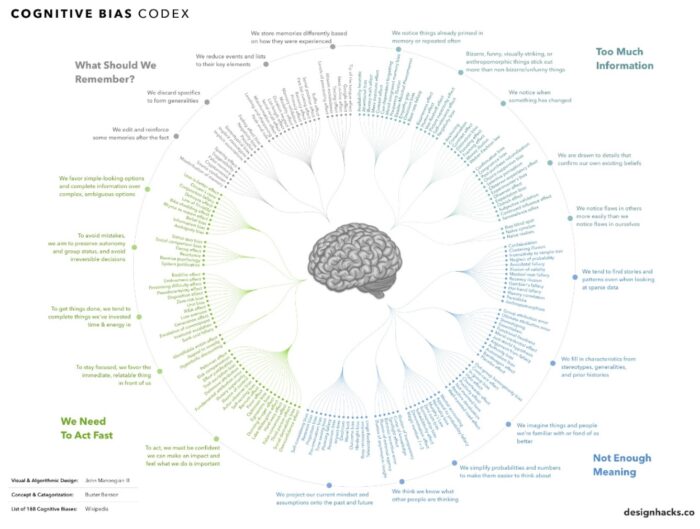

As I have discussed numerous times on this blog, our brains did not evolve to be optimally precise perceivers and processors of information. Here is an infographic showing 188 documented cognitive biases. These biases are not all bad – they are tradeoffs. Evolutionary forces care only about survival, and so the idea is that many of these biases are more adaptive than accurate. We may, for example, overcall risk because avoiding risk has an adaptive benefit. Not all of the biases have to be adaptive. Some may be epiphenomena, or themselves tradeoffs – a side effect of another adaptation. Our visual perception is rife with such tradeoffs, emphasizing movement, edges, and change at the expense of some accuracy and at the cost of the occasional optical illusion.

One interesting perceptual bias is called serial dependence bias – what we see is influenced by what we recently saw (or heard). It’s as if one perception primes us and influences the next. It’s easy to see how this could be adaptive. If you see a wolf in the distance, your perception is now primed to see wolves. This bias may also aid pattern recognition, making patterns easier to detect. Of course, pattern recognition is itself one of the biggest perceptual biases in humans. Our brains are biased towards detecting potential patterns, vastly overcalling possible patterns and then filtering out the false positives at the back end. Perhaps serial dependence bias is also part of this hyper-pattern-recognition system.

Psychologists have an important question about serial dependence bias, however. Does this bias occur at the perceptual level (such as visual processing) or at a higher cognitive level? A recently published study attempted to address this question. The researchers exposed subjects to an image of coins for half a second (the study is Japanese, so both the subjects and the coins were Japanese). They then asked subjects to estimate the number of coins they had just seen and their total monetary value. The researchers wanted to know which had a greater effect on the subjects – the number of coins they had just viewed or their most recent guess. The idea is that if serial dependence bias is primarily perceptual, then the coins previously seen will be what affects subsequent guesses. If the bias is primarily a higher cognitive phenomenon, then previous guesses will have a greater effect than the actual amount seen. To help separate the two (because higher guesses would tend to align with greater amounts), they had subjects estimate the number and value of coins on only every other image. Therefore the subjects’ most recent guess would refer to a different image than the one they had most recently seen.

Jan 6, 2023

The mammalian brain is an amazing information processor. Millions of years of evolutionary tinkering have produced network structures that are fast, efficient, and capable of extreme complexity. Neuroscientists are trying to understand that structure as fully as possible, which is understandably a complicated task. But progress is steady.

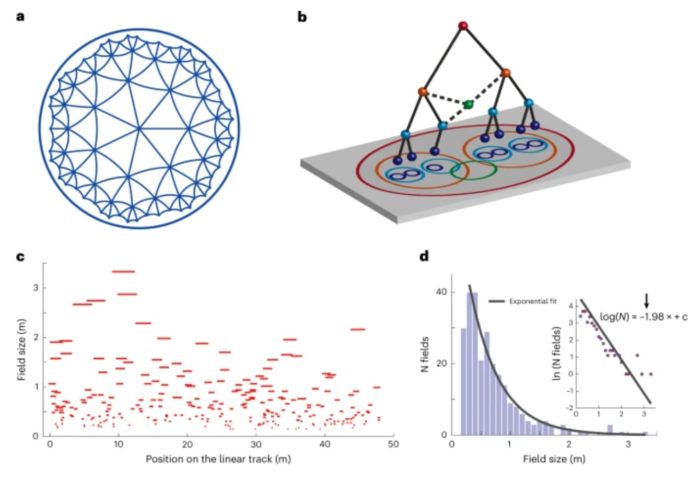

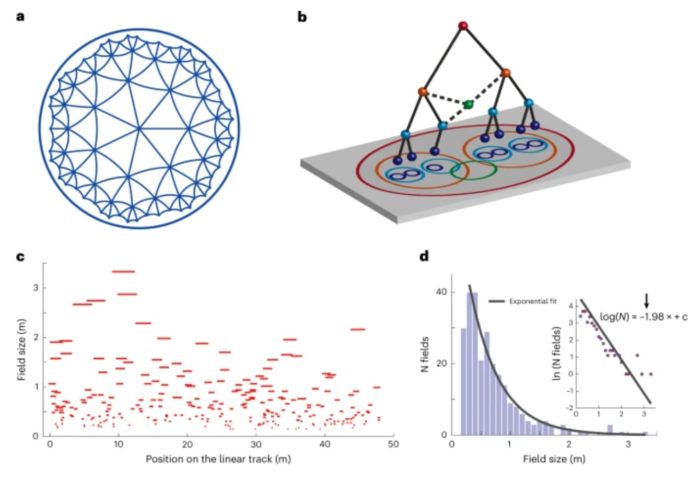

A recent study illustrates how complex this research can get. The researchers were looking at the geometry of neuron activation in the part of the brain that remembers spatial information – the CA1 region of the hippocampus. This is the part of the brain that has place neurons – those that are activated when the animal is in a specific location. They wanted to know how networks of overlapping place neurons grow as rats explore their environment. What they found was not surprising given prior research, but is extremely interesting.

Psychologically we tend to have a linear bias in how we think of information. This extends to distances as well. It seems that we don’t deal easily (at least not intuitively) with geometric or logarithmic scales. But often information is geometric. When it comes to the brain, information and physical space are related because neural information is stored in the physical connection of neurons to each other. This allows neuroscientists to look at how brain networks “map” physically to their function.

In the present study the neuroscientists looked at the activity in place neurons as rats explored their environment. They found that rats had to spend a minimum amount of time in a location before a place neuron would become “assigned” to that location (become activated by that location). As rats spent more time in a location, gathering more information, the number of place neurons increased. However, this increase was not linear; it was hyperbolic. Hyperbolic refers to negatively curved space, like an hourglass with the starting point at the center.
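
To give a feel for what “negatively curved” means, the sketch below uses a standard result from geometry: on a hyperbolic plane with curvature -1, a circle of radius r has circumference 2π·sinh(r), compared with 2πr on a flat plane. The amount of “room” at a given distance from the center therefore grows exponentially rather than linearly. This only illustrates the term; it is not the analysis the researchers performed, and the radii chosen are arbitrary.

```python
import math


def circumference_flat(r):
    """Circumference of a circle of radius r on a flat (Euclidean) plane."""
    return 2 * math.pi * r


def circumference_hyperbolic(r):
    """Circumference on a hyperbolic plane with curvature -1: 2*pi*sinh(r)."""
    return 2 * math.pi * math.sinh(r)


for r in (0.5, 1, 2, 4, 8):
    ratio = circumference_hyperbolic(r) / circumference_flat(r)
    print(f"r={r}: hyperbolic/flat circumference ratio = {ratio:.2f}")
```

Near the center the two geometries are almost identical (the ratio is close to 1), but the gap widens rapidly with distance from the starting point.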

Nov 10, 2022

A recent study asked subjects to give their overall impression of other people based entirely on a photograph of their face. In one group the political ideology of the person in the photograph was disclosed (and was sometimes true and sometimes not), and in another group the political ideology was not disclosed. The question the researchers were asking is whether thinking you know the political ideology of someone in a photo affects your subjective impression of them. Unsurprisingly, it did. Photos labeled with the same political ideology as the subject’s own (conservative vs liberal) were rated more likable, and this effect was stronger for subjects who had a higher sense of threat from those of the other political ideology.

This question is part of a broader question about the relationship between facial characteristics and personality and our perception of them. We all experience first impressions – we meet someone new and form an overall impression of them. Are they nice, mean, threatening? But if you get to actually know the person you may find that your initial impression had no bearing on reality. The underlying question is interesting. Are there actual facial differences that correlate with any aspect of personality? First, how plausible is this notion, and what could cause such a link, if anything?

The most straightforward assumption is that there is a genetic predisposition for some basic behavior, like aggression, and that these same genes (or very nearby genes that are likely to sort together) also determine facial development. This notion is based on a certain amount of biological determinism, which itself is not a popular idea among biologists. The idea is not impossible. There are genetic syndromes that include both personality types and facial features, but these are extreme outliers. For most people the signal to noise ratio is likely too small to be significant. The research bears this out – attempts at linking facial features with personality or criminality have largely failed, despite their popularity in the late 19th and early 20th centuries.

Nov 7, 2022

The notion of near death experiences (NDEs) has fascinated people for a long time. The idea is that some people report profound experiences after waking up from a cardiac arrest – their heart stopped, they received CPR, they were eventually resuscitated, and they lived to tell the tale. About 20% of people in this situation will report some unusual experience. Initial reporting on NDEs followed a journalistic methodology more than a scientific one – collecting reports from people and weaving them into a narrative. Of course the NDE narrative took on a life of its own, but eventually researchers started at least collecting some empirical, quantifiable data. The details of the reported NDEs are actually quite variable, and often culture-specific. There are some common elements, however, notably the sense of being out of one’s body or floating.

The most rigorous attempt so far to study NDEs was the AWARE study, which I reported on in 2014. Lead researcher Sam Parnia wanted to be the first to document that NDEs are a real-world experience, and not some “trick of the brain.” He failed to do this, however. The study looked at people who had a cardiac arrest, underwent CPR, and survived long enough to be interviewed. The study also included a novel element – cards placed on top of shelves in ERs around the country. These could only be seen from the vantage point of someone floating near the ceiling, and were meant to document that during the CPR itself an NDE experiencer was actually there and could see the physical card in their environment. The study also tried to match the details of the remembered experience with actual events that took place in the ER during their CPR.

You can read my original report for details, but the study was basically a bust. There were some methodological problems with the study, which was not well-controlled. They had trouble getting data from locations that had the cards in place, and ultimately had not a single example of a subject who saw a card. And out of 140 cases they were only able to match reported details with events in the ER during CPR in one case. Especially given that the details were fairly non-specific, and they only had 1 case out of 140, this sounds like random noise in the data.

Oct 14, 2022

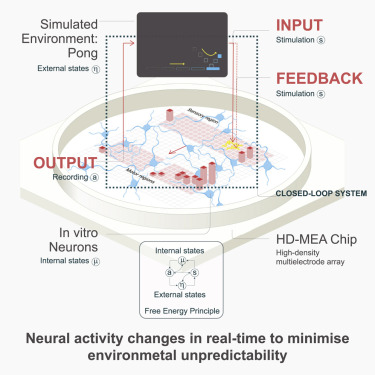

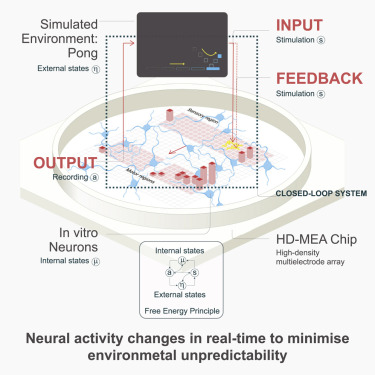

This is definitely the neuroscience news of the week. It shows how you can take an incremental scientific advance and hype it into a “new science” and a breakthrough, and the media will generally just eat it up. Did scientists teach a clump of brain cells to play the video game Pong? Well, yes and no. The actual science here is fascinating, but I fear it is generally getting lost in the hype.

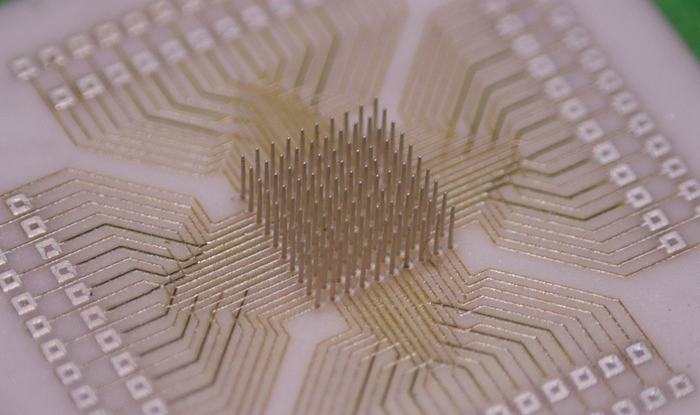

This is what the researchers actually did – they cultured mouse or human neurons derived from stem cells onto a multi-electrode array (MEA). The MEA can both read and stimulate the neurons. Neurons spontaneously network together, so that’s what these neurons did. The researchers then stimulated the two-dimensional network of neurons either on the left or the right and at different frequencies, and recorded the network’s response. If the network responded in a way the scientists deemed correct, it was “rewarded” with predictable further stimulation. If its response was deemed incorrect, it was “punished” with random stimulation. Over time the network learned to produce the desired response, and its learning accelerated. Further, human neurons learned faster than mouse neurons.

Why did this happen? That is what researchers are trying to figure out, but the authors speculate that predictable stimulation allows the neurons to make more stable connections, while random stimulation is disruptive. Therefore predictable feedback tends to reinforce whatever network pattern results in predictable feedback. In this way the network is behaving like a simple AI algorithm.
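
The authors’ speculation can be caricatured with a toy model. This is not the researchers’ model; it is a hypothetical sketch in which the “network” is just a lookup from ball position to one of a few responses. After a correct response the feedback is predictable and the mapping is left alone; after an incorrect response, random stimulation scrambles it to a randomly chosen response. This is essentially a “win-stay, lose-shift” rule. The response names, number of rallies, and seeds are all assumptions.

```python
import random

PATTERNS = ("left", "right", "stay")  # hypothetical paddle responses


def run(correct_for, predictable_on_hit=True, n_rallies=200, seed=0):
    """Toy 'network' that is just a lookup from ball position to response.

    After a correct response the feedback is predictable and the mapping is
    left alone; otherwise random stimulation replaces it with a random pattern.
    Setting predictable_on_hit=False makes every trial disruptive (a control).
    Returns the fraction of correct responses in the second half of play.
    """
    rng = random.Random(seed)
    positions = list(correct_for)
    mapping = {pos: rng.choice(PATTERNS) for pos in positions}
    hits = []
    for _ in range(n_rallies):
        pos = rng.choice(positions)
        hit = mapping[pos] == correct_for[pos]
        hits.append(hit)
        if not hit or not predictable_on_hit:
            # Random stimulation disrupts whatever produced this response.
            mapping[pos] = rng.choice(PATTERNS)
    late = hits[n_rallies // 2:]
    return sum(late) / len(late)


target = {"ball_left": "left", "ball_right": "right"}
print("predictable feedback after hits:", run(target))
print("random feedback after every trial:", run(target, predictable_on_hit=False))
```

Nothing in this toy explicitly rewards the right answer; the correct mapping simply becomes the only stable state because it is the only one that is never disrupted. When every trial gets random feedback, accuracy stays near chance (about one in three).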

Oct 6, 2022

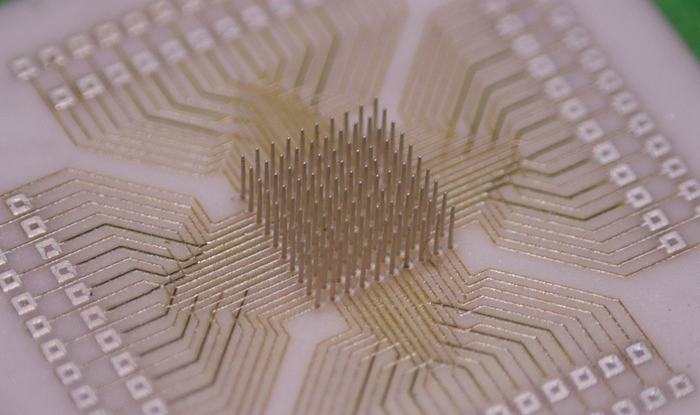

This is definitely a “you got chocolate in my peanut butter” type of advance, because it combines two emerging technologies to create a potentially significant advance. I have been writing about brain-machine interface (or brain-computer interface, BCI) for years. My take is that the important proofs of concept have already been established, and now all we need is steady incremental advances in the technology. Well – here is one of those advances.

Carnegie Mellon University researchers have developed a computer chip for BCI, called a microelectrode array (MEA), using advanced 3D printing technology. The MEA looks like a regular computer chip, except that it has thin pins that are electrodes which can read electrical signals from brain tissue. MEAs are inserted into the brain with the pins stuck into brain tissue. They are thin enough to cause minimal damage. The MEA can then read the brain activity where it is placed, either for diagnostic purposes or to allow for control of a computer that is connected to the chip (yes, you need wires coming out of the skull). You can also stimulate the brain through the electrodes. MEAs are mostly used for research in animals and humans. They can generally be left in the brain for about one year.

One MEA in common use is called the Utah array, because it was developed at the University of Utah; it was patented in 1993, so these arrays have been in use for decades. How much of an advance is the new MEA design? Its advantages mostly stem from the fact that these MEAs can be printed using an advanced 3D printing technology called Aerosol Jet 3D Printing. This allows for printing at the nano-scale using a variety of materials, including those needed to make MEAs. Using this technology provides three advantages.

Sep 26, 2022

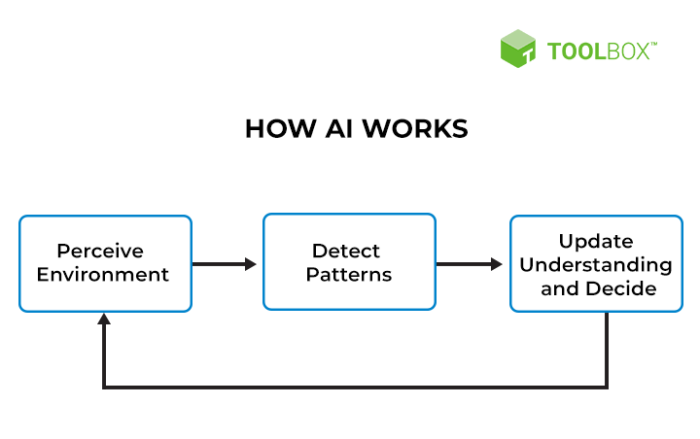

We appear to be in the middle of an explosion of AI (artificial intelligence) applications and ability. I had the opportunity to chat with an AI expert, Mark Ho, about the driving forces behind this rapid increase in AI power. Mark is a cognitive scientist who studies how AIs work out and solve problems, and compares that to how humans solve problems. I was interviewing him for an SGU episode that will drop in December. The conversation was far-ranging, but I did want to discuss this one question – why are AIs getting so much more powerful in recent years?

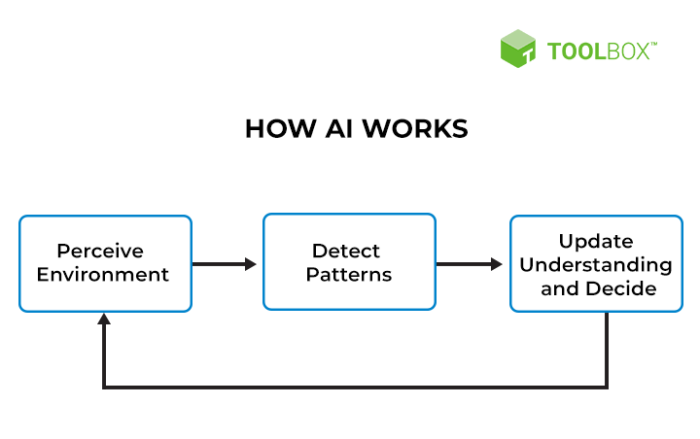

First let me define what we mean by AI – this is not self-aware, conscious computer code. I am referring to what may be called “narrow” AI, such as deep learning neural networks that can do specific things really well, like mimic a human conversation, create artistic images from natural-language prompts, drive a car, or beat the world champion in Go. The HAL-9000 version of a sentient computer can be referred to as Artificial General Intelligence, or AGI. But narrow AI does not really think; it does not understand in a human sense. For the rest of this article, when I refer to “AI” I am referring to the narrow type.

In order to understand why AI is getting more powerful we have to understand how current AI works. A full description would take a book, but let me just describe one basic way that AI algorithms can work. Neural nets, for example, are networks of nodes that also act as gates in a feed-forward design (they pass information in one direction). Each gate receives information, assigns a weight to it, and, if the weighted input exceeds a set threshold, passes it along to the next layer of nodes in the network. These parameters (weights and thresholds) can be tuned to affect how the network processes information. These networks can be used for deep machine learning, which “trains” the network on specific data. To do this there needs to be an output that is either right or wrong, and that result is fed back into the network, which then tweaks the parameters. The goal is for the network to “learn” how it needs to process information by essentially doing millions of trials, tweaking the parameters each time and evolving the network in the direction of more and more accurate output.
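
A minimal sketch of the weights-and-threshold idea, trained by feeding right or wrong results back into the parameters, is a single artificial neuron (a classic perceptron) learning logical AND. This textbook example is chosen only for brevity; modern deep networks have many layers and are trained with gradient-based updates (backpropagation) rather than this simple rule. The function names and learning settings are illustrative.

```python
def step(x, weights, threshold):
    """Pass the signal on (1) only if the weighted input exceeds the threshold."""
    total = sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > threshold else 0


def train_perceptron(examples, n_inputs, lr=1.0, epochs=20):
    """Classic perceptron rule: after each wrong answer, nudge the weights
    and threshold in the direction that would have given the right answer."""
    weights = [0.0] * n_inputs
    threshold = 0.0
    for _ in range(epochs):
        for x, target in examples:
            error = target - step(x, weights, threshold)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            threshold -= lr * error  # firing too little lowers the threshold
    return weights, threshold


# Training data for logical AND: output 1 only when both inputs are 1.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, threshold = train_perceptron(AND, n_inputs=2)
print([step(x, weights, threshold) for x, _ in AND])  # prints [0, 0, 0, 1]
```

Each pass over the data is one round of trials; only wrong answers change the parameters, so once the outputs are all correct the network stops changing.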

So what is it about this system that is getting better? What others have told me, and what Mark confirmed, is that the underlying math and basic principles are essentially the same as 50 years ago. The math is also not that complicated. The basic tools are the same. One critical component of AI that is improving is the underlying hardware, which is getting much faster and more powerful. There is just a lot more raw power to run lots of training trials. One interesting side point is that computer scientists figured out that graphics cards (graphics processing units, or GPUs), the hardware used to process the images that go to your computer screen, happen to work really well for AI algorithms. GPUs have become incredibly powerful, mainly because of the gaming industry. This, by the way, is why graphics cards have become so expensive recently. Cryptocurrency miners are using GPUs to run their algorithms. (Although I recently read they are moving in the direction of application-specific integrated circuits.)

Sep 13, 2022

There is ongoing debate as to the extent to which a skeptical outlook is natural vs learned in humans. There is no simple answer to this question; human psychology is complex and multifaceted. People do demonstrate natural skepticism toward many claims, and yet seem to accept other claims with abject gullibility. For adults it can also be difficult to tease out how much skepticism is learned vs innate.

This is where developmental psychology comes in. We can examine children of various ages to see how they behave, and this may provide a window into natural human behavior. Of course, even young children are not free from cultural influences, but studying them can at least provide some interesting information. A recent study looked at two related questions – do children (ages 4-7) accept surprising claims from adults, and how do they react to those claims? A surprising claim is one that contradicts common knowledge that even a 4-year-old should know.

In one experiment, for example, an adult showed the children a rock and a sponge and asked them if the rock was soft or hard. The children all believed the rock was hard. The adult then either told them that the rock was hard, or that the rock was soft (or in one iteration that the rock was softer than the sponge). When the adult confirmed the children’s beliefs, they continued in their belief. When the adult contradicted their belief, many children modified their belief. The adult then left the room under a pretext, and the children were observed through video. Unsurprisingly, they generally tested the adult’s surprising claims through direct exploration.

This is not surprising – children generally like to explore and to touch things. However, the 6-7 year-olds engaged in (or, in online versions of the testing, proposed) more appropriate and efficient methods of testing surprising claims than the 4-5 year-olds did. For example, they wanted to directly compare the hardness of the sponge vs the rock.

Continue Reading »

Are you familiar with the “lumper vs splitter” debate? This refers to any situation in which there is some controversy over exactly how to categorize complex phenomena, specifically whether or not to favor the fewest categories based on similarities, or the greatest number of categories based on every difference. For example, in medicine we need to divide the world of diseases into specific entities. Some diseases are very specific, but many are highly variable. For the variable diseases do we lump every type into a single disease category, or do we split them into different disease types? Lumping is clean but can gloss-over important details. Splitting endeavors to capture all detail, but can create a categorical mess.

Are you familiar with the “lumper vs splitter” debate? This refers to any situation in which there is some controversy over exactly how to categorize complex phenomena, specifically whether or not to favor the fewest categories based on similarities, or the greatest number of categories based on every difference. For example, in medicine we need to divide the world of diseases into specific entities. Some diseases are very specific, but many are highly variable. For the variable diseases do we lump every type into a single disease category, or do we split them into different disease types? Lumping is clean but can gloss-over important details. Splitting endeavors to capture all detail, but can create a categorical mess.

Psychiatry, psychology, and all aspects of mental health are a challenging area because the clinical entities we are dealing with are complex and mostly subjective. Diagnoses are perhaps best understood as clinical constructs – a way of identifying and understanding a mental health issue, but not necessary a core neurological phenomenon. In other words, things like bipolar disorder are identified, categorized, and diagnosed based upon a list of clinical signs and symptoms. But this is a descriptive approach, and may not correlate to specific circuitry in the brain. Researchers are making progress finding the “neuroanatomical correlates” of known clinical entities, but such correlates are mostly partial and statistical. Further, there is culture, personality, and environment to deal with, which significantly influences how underlying brain circuitry manifests clinically. Also, not all mental health diagnoses are equal – some are likely to be a lot closer to discrete brain circuitry than others.

As I have discussed numerous times on this blog, our brains did not evolve to be optimally precise perceivers and processors of information.

The mammalian brain is an amazing information processor. Millions of years of evolutionary tinkering have produced network structures that are fast, efficient, and capable of extreme complexity. Neuroscientists are trying to understand that structure as fully as possible, an understandably complicated task. But progress is steady.

The notion of near-death experiences (NDEs) has fascinated people for a long time. The idea is that some people report profound experiences after waking up from a cardiac arrest: their heart stopped, they received CPR, and they eventually recovered and lived to tell the tale. About 20% of people in this situation will report some unusual experience. Initial reporting on NDEs followed a journalistic methodology more than a scientific one, collecting reports from people and weaving those into a narrative. Of course the NDE narrative took on a life of its own, but eventually researchers started at least collecting some empirical, quantifiable data. The details of the reported NDEs are actually quite variable, and often culture-specific. There are some common elements, however, notably the sense of being out of one’s body or floating.

This is definitely the neuroscience news of the week. It shows how you can take an incremental scientific advance, hype it as a “new science” and a breakthrough, and the media will generally just eat it up. Did scientists teach a clump of brain cells…

This is definitely a “you got chocolate in my peanut butter” type of development, because it combines two emerging technologies into a potentially significant advance.

We appear to be in the middle of an explosion of AI (artificial intelligence) applications and abilities. I had the opportunity to chat with an AI expert, Mark Ho, about the driving forces behind this rapid increase in AI power.

There is ongoing debate over the extent to which a skeptical outlook is natural vs learned in humans. There is no simple answer to this question, as human psychology is complex and multifaceted. People do demonstrate natural skepticism toward many claims, and yet seem to accept other claims with abject gullibility. For adults it can also be difficult to tease out how much skepticism is learned vs innate.