Jun 10 2014

Turing Test 2014

The press is abuzz with the claim that a computer has passed the famous Turing Test for the first time. The University of Reading organized a Turing Test competition, held at the Royal Society in London on Saturday, June 7th. They have now announced that a chatbot named Eugene Goostman passed the test by convincing 33% of the judges that it was human.

The Turing Test, devised by Alan Turing, was proposed as one method for determining whether artificial intelligence has been achieved. The idea is that if a computer can convince a human through normal conversation that it is also human, then it will have achieved some measure of artificial intelligence (AI).

The test, while interesting, is really more of a gimmick. It cannot discern whether any particular type of AI has been achieved. The current alleged winner is a good example: a chatbot is simply a software program designed to imitate human conversation. There is no actual intelligence behind the algorithm.

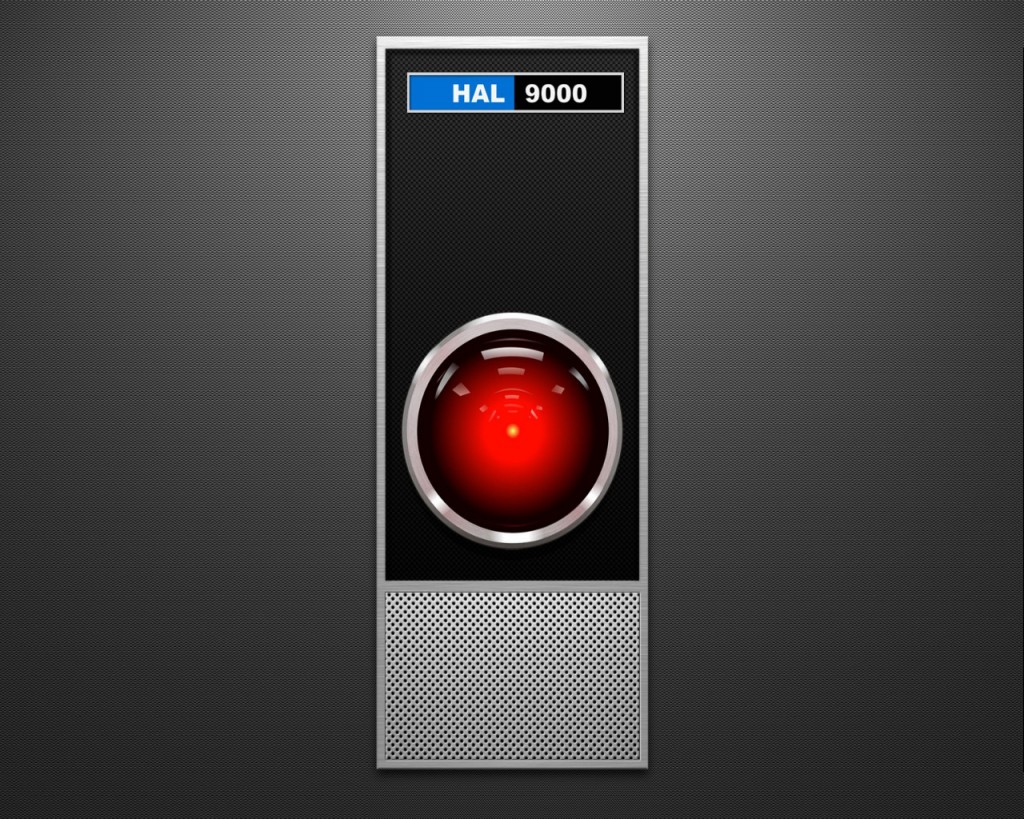

Of course, we have to ask what we mean by AI. I think most non-experts think of AI as a self-aware computer, like HAL from 2001: A Space Odyssey. However, programmers use the term AI to refer to a variety of expert systems, and potentially to any software that uses a knowledge base and a sophisticated algorithm to interact adaptively with its user.

Such systems make no attempt to produce computer awareness or even anything that could be considered thinking. They may simulate conversation, even very well, but they are not designed to think.

For this reason, Turing’s test has never been considered a true test of machine self-awareness, or of true AI. It really is just a test of how well a computer can simulate human conversation.

Let’s take a look at the current claim. The test itself seems to have been reasonably administered. Thirty judges were used for each entry; the judges had five-minute conversations with both a real person and an AI, and then had to decide which was which. The overall process was refereed to make sure it was carried out properly. The threshold for considering the test “passed” is convincing more than 30% of the judges that the AI is a person, and in this case Eugene achieved 33%.

Already, however, there is criticism that the test was not fair. Eugene was meant to simulate a 13-year-old Ukrainian boy. This means that his knowledge would be limited, and odd answers could be excused by his being foreign, with English perhaps not his first language. This lowers the bar for fooling the judges and may explain how Eugene squeaked past the 30% threshold.

The press release, and many derivative news reports, are calling this a “historic milestone.” I don’t think so. At best this is an incremental advance in chatbot software, and even that claim is dubious, given that the test was essentially gamed by lowering the bar.

The Turing Test has become a cultural icon, which means that we will forever be explaining what it actually is and what it isn’t. I don’t think it should be considered a test for AI, as most people understand it. It should be considered a test for simulating human conversation. This is still one very useful aspect of AI, but it is not AI itself.

To give another example, if someone engineered a robot that could convince most people it was a human by the way it looked and moved, that would not make it artificial life. It would make it a convincing simulation.

I’m all for celebrating advances in computer and software technology. Like it or not, the Turing Test is a popular idea, and it has the potential to open discussions of what we mean by AI and to track progress in both hardware and software in AI applications. It should be used, however, as an opportunity to educate the public a bit more about this exciting and increasingly important technology, not to confuse them with hyperbole.