Sep 26 2022

The AI Renaissance

We appear to be in the middle of an explosion of AI (artificial intelligence) applications and ability. I had the opportunity to chat with an AI expert, Mark Ho, about the driving forces behind this rapid increase in AI power. Mark is a cognitive scientist who studies how AIs work out and solve problems, and compares that to how humans solve problems. I was interviewing him for an SGU episode that will drop in December. The conversation was far-ranging, but I did want to discuss this one question – why are AIs getting so much more powerful in recent years?

First, let me define what I mean by AI – this is not self-aware, conscious computer code. I am referring to what may be called “narrow” AI, such as deep learning neural networks that can do specific things really well, like mimic a human conversation, create artwork from natural-language prompts, drive a car, or beat the world champion at Go. The HAL 9000 version of a sentient computer would be referred to as artificial general intelligence, or AGI. But narrow AI does not really think; it does not understand in a human sense. For the rest of this article, when I refer to “AI” I mean the narrow type.

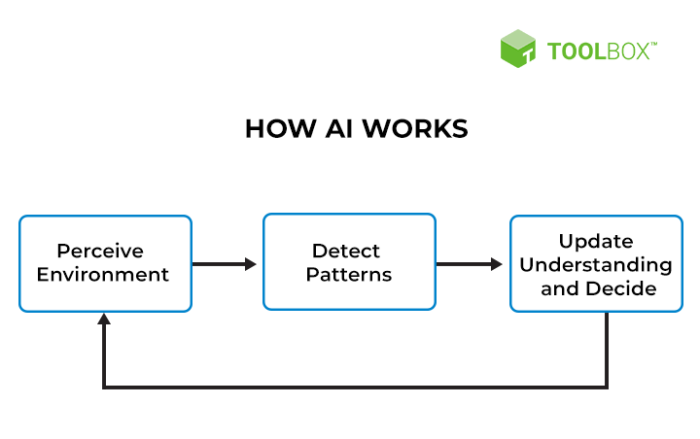

In order to understand why AI is getting more powerful, we have to understand how current AI works. A full description would take a book, but let me describe one basic way that AI algorithms can work. Neural nets, for example, are networks of nodes that act as gates, often arranged in a feed-forward design (information passes in one direction). Each node receives information, gives each input a weight, and, if the weighted total exceeds a set threshold, passes a signal along to the next layer of nodes in the network. These parameters (weights and thresholds) can be tuned to change how the network processes information. Such networks can be used for deep machine learning, which “trains” the network on specific data. To do this, the network's output has to be scored as right or wrong, and that result is fed back into the network, which then tweaks its parameters. The goal is for the network to “learn” how it needs to process information by essentially running millions of trials, tweaking the parameters each time and evolving the network toward more and more accurate output.
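To make the gate-and-threshold idea concrete, here is a minimal Python sketch of a single node (a classic perceptron) that weights its inputs, fires only if the total exceeds a threshold, and is trained by feeding back whether each answer was right or wrong. It is a toy under stated assumptions: the names `fire` and `train`, the learning rate, and the logical-AND training data are illustrative choices, and modern deep networks use many layers trained by gradient descent (backpropagation) rather than this one-node update rule.

```python
import random

def fire(weights, threshold, inputs):
    """One node: weight each input, add them up, and pass a 1 along only if the total exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

def train(examples, n_inputs, epochs=1000, rate=0.1, seed=0):
    """Perceptron learning rule: after each wrong answer, nudge the weights and threshold toward the right one."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1) for _ in range(n_inputs)]
    threshold = rng.uniform(-1, 1)
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - fire(weights, threshold, inputs)  # 0 if right, +1 or -1 if wrong
            if error:
                mistakes += 1
                # strengthen (or weaken) the weights on inputs that were active
                weights = [w + rate * error * x for w, x in zip(weights, inputs)]
                # lower the threshold if the node should have fired, raise it if it should not have
                threshold -= rate * error
        if mistakes == 0:  # every example answered correctly: training is done
            break
    return weights, threshold

# Training data: the logical AND of two inputs (a hypothetical toy task)
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, threshold = train(AND, n_inputs=2)
print([fire(weights, threshold, x) for x, _ in AND])  # → [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron rule is guaranteed to stop making mistakes after a finite number of updates. A single node like this cannot learn something like XOR, which is one reason real networks stack many layers.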

So what is it about this system that is getting better? What others have told me, and what Mark confirmed, is that the underlying math and basic principles are essentially the same as they were 50 years ago, and the math is not that complicated. If the basic tools are the same, what is getting better? One critical component of AI that is improving is the underlying hardware, which keeps getting faster and more powerful. There is simply a lot more raw power to run lots of training trials. An interesting side point is that computer scientists figured out that graphics cards (graphics processing units, or GPUs), the hardware used to process the images that go to your computer screen, happen to work really well for AI algorithms. GPUs have become incredibly powerful, mainly because of the gaming industry. Demand from outside gaming is also one reason graphics cards have become so expensive recently: cryptocurrency miners use GPUs to run their algorithms too (although I recently read that they are moving toward application-specific integrated circuits).

Raw power is a major reason why AIs are getting more powerful. But a second reason is the availability of training data, and this is largely due to the internet. Fifty years ago, if you wanted to train your AI on pictures of animals, how would you feed it millions of such images? Today we have the internet, which conveniently supplies billions (750 billion by one estimate) of images that can be “scraped” to supply training data (for AI that is based on images). For other applications, like playing Go, it comes back mostly to raw power – the AI can essentially play millions of games against itself and gradually work out optimal play.
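The self-play idea can be sketched with a game far smaller than Go. The following is a minimal Python sketch, assuming a simple Nim variant (players alternately remove 1 to 3 stones, and whoever takes the last stone wins) and using tabular Q-learning, a technique swapped in here for illustration; real Go programs combine deep neural networks with tree search. One table plays both sides, and after each move it nudges its estimate toward the outcome implied by the opponent's best reply. The names `self_play_train` and `best_move` and all parameter values are hypothetical.

```python
import random

MAX_TAKE = 3  # each turn a player removes 1, 2 or 3 stones; whoever takes the last stone wins

def legal_moves(stones):
    return range(1, min(MAX_TAKE, stones) + 1)

def self_play_train(max_stones=12, games=20000, rate=0.5, explore=0.3, seed=0):
    rng = random.Random(seed)
    # q[(stones, take)] = estimated outcome (+1 win, -1 loss) for the player about to move
    q = {(s, a): 0.0 for s in range(1, max_stones + 1) for a in legal_moves(s)}
    for _ in range(games):
        stones = rng.randint(1, max_stones)
        while stones > 0:
            moves = list(legal_moves(stones))
            if rng.random() < explore:
                take = rng.choice(moves)  # occasionally try a random move
            else:
                take = max(moves, key=lambda a: q[(stones, a)])  # otherwise play the best-known move
            left = stones - take
            if left == 0:
                target = 1.0  # taking the last stone wins
            else:
                # the opponent moves next, so their best outcome is our worst
                target = -max(q[(left, a)] for a in legal_moves(left))
            q[(stones, take)] += rate * (target - q[(stones, take)])
            stones = left  # the same table now plays the other side
    return q

def best_move(q, stones):
    return max(legal_moves(stones), key=lambda a: q[(stones, a)])

q = self_play_train()
print([best_move(q, s) for s in (5, 6, 7, 9, 10, 11)])  # → [1, 2, 3, 1, 2, 3]
```

No strategy is programmed in, yet the learned moves match the known optimal play for this game: always leave the opponent a multiple of 4 stones.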

Mark also pointed out a third factor – that AI computer scientists have been really clever in figuring out how to leverage the basic AI learning process to achieve specific outcomes. This is where you get into the weeds, and I suspect you have to be an AI expert to understand the precise details. But essentially they are getting better at using the basic AI tools for specific applications. So while the AI tools themselves have not changed fundamentally in decades, AI now runs on more powerful computers, is fed much more data, and is being used in more clever ways.

I am interested in where AIs may go next. One idea I have is that we could network together multiple narrow AI systems, each with different abilities (which may require yet another AI system just to integrate them). For example, let’s say we have an AI chatbot that is really good at simulating conversation, another that can mimic human emotional reactions, yet another that can read and interpret human facial expressions, and another that is optimized for writing poetry. If we combine these into one system, could that provide a much more convincing interaction, one that feels like you are interacting with an actual person?

Here is where things get interesting, because that is basically how the human brain works. The brain is a series of circuits (functional networks) that each do specific things – all the things that people do. Many of these functions run in the background without your awareness, creating your sense of self and reality. Also, neuroscientists have been unable to locate any network functioning as a central conductor (which they called the global workspace). Eventually they had to abandon the idea; there doesn’t appear to be one. Essentially, the human brain is a bunch of narrow AIs massively networked together. You see where I am going with this. So what if we do the same thing, and network a bunch of narrow AI systems together to do all the things we think are necessary to create actual sentience? At some critical point, would that network of narrow AIs become a general AI, a sentient computer?

This is a science fiction trope about which I have long been skeptical. Skynet or V’ger, I thought, would not just “wake up” and become conscious. But maybe I was wrong – maybe it could. I still don’t think this would necessarily happen by accident, because based on our current understanding of neuroscience, there would have to be some deliberate functionality that contributes to human-like consciousness.

But then again, what about consciousness that is not human-like? Insects are conscious, at least a bit. So are fish, and rabbits. It is an interesting question whether consciousness was selected for by evolution, or if consciousness is merely an epiphenomenon, something that can happen when enough neural activity is present. Maybe the same epiphenomenon will happen when we start networking narrow AIs together to increase their functionality. The result will not be human, but it may be conscious – a machine consciousness. From a practical point of view what this might mean is that we observe emergent behavior from these systems that cannot be explained by any of the narrow AI components. At that point we will likely debate whether or not the system is expressing consciousness, and there may be no real way to objectively resolve that debate.

We will also run into this problem (and actually already have) when AI systems are designed to mimic human behavior. That can create the powerful illusion that there is a human-like intelligence behind them. When the system is complex enough that an emergent consciousness is actually plausible, again, we will have a tough time resolving the debate.

Meanwhile, narrow AI systems continue to get more and more powerful. They are changing our world, and will continue to do so without ever getting close to anything like a general intelligence.