Apr 29 2014

Neuromorphic Computing

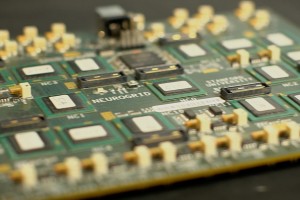

Kwabena Boahen and other engineers at Stanford University have announced that they have developed a computer chip modeled after the human brain. They call the technology “neuromorphic” and their current device the neurogrid. They say it can simulate 1 million neurons in real time, a feat that would otherwise require a supercomputer.

The idea is that the human brain is much more powerful and energy efficient than our current computers. A mouse brain can process information 9,000 times faster than a computer simulation of its functions, while using much less energy.

The article does not explicitly state it, but I suspect these numbers are for virtual simulations. In other words, we are not building mouse brain circuits in silicon; we are using a standard digital computer to simulate the mouse circuits in software. Virtual simulations are much less efficient (by about an order of magnitude) than dedicated circuits.

Even so, the neurogrid looks like a huge advance, even over dedicated brain circuits built with standard digital chip technology.

The engineers explain that the neurogrid is built using analog rather than digital connections. Each “neuron” on the chip makes many connections to other neurons, and these connections can vary in intensity (similar to the variable strength of connections among brain neurons). More or less voltage going through the circuit translates to a stronger or weaker connection.
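To make the idea of variable-strength connections concrete, here is a minimal software sketch. It is not how the neurogrid itself works (that is analog hardware, and the article gives no circuit details); the function name, the weights, and the firing threshold are all my own illustrative assumptions. It only shows how the same inputs can produce a different result when the connection strengths change.

```python
def neuron_fires(inputs, weights, threshold=1.0):
    """Toy neuron: fire if the weighted sum of inputs reaches the threshold.

    `inputs`, `weights`, and `threshold` are hypothetical values chosen for
    illustration; they are not taken from the Stanford design.
    """
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one connection weight")
    # Each connection scales its input by a graded (non-binary) strength.
    total = sum(x * w for x, w in zip(inputs, weights))
    return total >= threshold


inputs = [1, 1, 0]                 # two upstream neurons active, one silent
weak_links = [0.25, 0.25, 0.5]     # weaker connections
strong_links = [0.75, 0.5, 0.5]    # stronger connections

print(neuron_fires(inputs, weak_links))    # weighted sum 0.5, below threshold
print(neuron_fires(inputs, strong_links))  # weighted sum 1.25, above threshold
```

In an analog chip the "weight" would be a physical quantity such as a voltage rather than a number stored in memory, which is the distinction the engineers are drawing.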

Their prototype board, which costs $40,000 to make, simulates 1 million neurons (divided among 16 neurocore chips). For comparison, the human brain has about 100 billion neurons, 100,000 times more than the neurogrid. So we still have a ways to go. The researchers believe that with modern mass production the cost can come down to $400 per board.
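For a rough sense of scale, the figures above can be run through some back-of-the-envelope arithmetic. This assumes, naively, that you could simply line up enough boards to match the neuron count and that cost scales linearly; it ignores the connections between boards, which would likely be the hard part. The variable names are mine.

```python
# Figures quoted in the article.
NEURONS_PER_BOARD = 1_000_000
HUMAN_BRAIN_NEURONS = 100_000_000_000
PROTOTYPE_COST_USD = 40_000
PROJECTED_COST_USD = 400

# Naive assumption: neuron count is the only thing that has to scale.
boards_needed = HUMAN_BRAIN_NEURONS // NEURONS_PER_BOARD
cost_at_prototype_price = boards_needed * PROTOTYPE_COST_USD
cost_at_projected_price = boards_needed * PROJECTED_COST_USD

print(f"Boards needed: {boards_needed:,}")
print(f"Cost at prototype price: ${cost_at_prototype_price:,}")
print(f"Cost at projected price: ${cost_at_projected_price:,}")
```

That works out to 100,000 boards, or about $4 billion at the prototype price versus $40 million at the projected mass-production price.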

There are other projects looking to reverse engineer the brain. This works both ways – using what we learn about the brain to make better computers, and using computers to model the brain, improving our understanding of how it works. Such research programs feed off each other.

There is no reason why we will not eventually arrive at a piece of hardware that can perform human brain processing in real time. And of course, once we get to that point we will surpass it, creating computers increasingly more powerful than the human brain.

Boahen summarizes other research projects working in this field. The European Union’s brain project is attempting to simulate a human brain on a supercomputer. The US’s brain project, by contrast, is working toward neuromorphic computing, modeling a computer after the human brain.

IBM has their own project, called SyNAPSE, which also seeks to emulate the human brain in a computer chip. Unlike the Stanford project, their chip is digital, with 256 digital neurons each making 1024 connections.

Heidelberg University has a similar project (HICANN), which is analog, like the Stanford chip. Their chip currently has 512 neurons each with 224 circuits.

Conclusion

The Stanford chip sounds like a significant advance, or at least a new approach to the effort to merge our understanding of the brain with computer technology. I find it interesting that the neurogrid is analog, which superficially sounds like a step backward from digital technology. But analog circuits do simulate brain function better.

The potential benefits of this technology are computer circuits that are much more powerful for certain kinds of processing, and that use less energy. Neuroprosthetics are an obvious application. The lower energy requirement would be especially useful. Implantable devices would benefit from needing only small amounts of energy, which would also reduce their heat production, another potential limiting factor.

Advances in materials science may also be of benefit, in addition to improved chip design. I am especially interested in carbon nanotubes, which are highly efficient conductors. That is another technology that is potentially converging with the neuromorphic and neuromodeling technologies.

I am also very interested in how all this will feed back onto our understanding of neuroscience. Being able to model circuits in the brain is likely to be a useful research paradigm for understanding how the brain works.

It’s definitely worth keeping an eye on all this development.