Aug 08 2014

IBM’s Brain on a Chip

Well, a small one, but it’s a start.

IBM announced that they have built a computer chip, dubbed TrueNorth, based on a neuronal architecture. The team published in Science:

Inspired by the brain’s structure, we have developed an efficient, scalable, and flexible non–von Neumann architecture that leverages contemporary silicon technology. To demonstrate, we built a 5.4-billion-transistor chip with 4096 neurosynaptic cores interconnected via an intrachip network that integrates 1 million programmable spiking neurons and 256 million configurable synapses. Chips can be tiled in two dimensions via an interchip communication interface, seamlessly scaling the architecture to a cortexlike sheet of arbitrary size. The architecture is well suited to many applications that use complex neural networks in real time, for example, multiobject detection and classification. With 400-pixel-by-240-pixel video input at 30 frames per second, the chip consumes 63 milliwatts.

Sounds pretty cool. I have written about brain-like computing previously (most recently here). Von Neumann architecture refers to the traditional basic design of modern computers, which was described in 1945 by (you guessed it) John von Neumann. This design has three components: memory, communication, and processing. Information is binary, essentially ones and zeros.

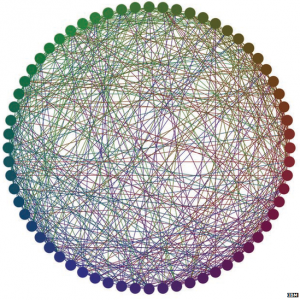

The neuromorphic architecture combines these three elements into one. The neurons are the memory and the processing, and they communicate with each other, similar to a biological brain. Instead of binary code, the neurons spike with a certain frequency. When they send spikes to another neuron, they bring it closer to its threshold for spiking. This is very similar to how the brain functions.
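To make the threshold idea concrete, here is a minimal sketch of a generic integrate-and-fire neuron. This is a textbook simplification, not IBM's actual neuron model; the function name `simulate_neuron` and the `weight`, `threshold`, and `leak` parameters are made up for illustration.

```python
def simulate_neuron(input_spikes, weight=1.0, threshold=3.0, leak=0.0):
    """Return the time steps at which a toy integrate-and-fire neuron spikes.

    input_spikes: list of 0/1 values, one per time step (1 = incoming spike).
    All parameter values are illustrative assumptions, not TrueNorth settings.
    """
    potential = 0.0
    output_times = []
    for t, spike in enumerate(input_spikes):
        # Optional decay toward the resting level (never below zero).
        potential = max(0.0, potential - leak)
        # Each incoming spike nudges the neuron closer to its threshold.
        potential += weight * spike
        # Once the threshold is reached, the neuron fires and resets.
        if potential >= threshold:
            output_times.append(t)
            potential = 0.0
    return output_times


# Three incoming spikes are needed for each output spike.
print(simulate_neuron([1, 0, 1, 1, 0, 1, 1, 1]))  # [3, 7]
```

Notice that the neuron's state (its potential) is both its memory and the thing it computes with, which is the sense in which memory and processing are merged.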

The chip is 3cm across, a 64 x 64 grid of cores, with a total of 1 million neurons each with 256 connections. (The Stanford University neuromorphic board also has 1 million neurons, with billions of connections, in a board about the size of an iPad.)
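These numbers hang together if you do the arithmetic. The quick check below assumes 256 neurons per core, a figure I am inferring by dividing the quoted totals (1 million neurons over 4096 cores) rather than one stated above, and it treats "million" as the binary-flavored 2^20.

```python
# Back-of-the-envelope check of the TrueNorth figures quoted above.
cores = 64 * 64                # 64 x 64 grid of cores
neurons_per_core = 256         # assumption: inferred from the quoted totals
synapses_per_neuron = 256      # "each with 256 connections"

neurons = cores * neurons_per_core
synapses = neurons * synapses_per_neuron

print(cores)     # 4096
print(neurons)   # 1048576, about 1 million
print(synapses)  # 268435456, i.e. 256 * 2**20, the quoted "256 million"
```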

TrueNorth is also claimed to be completely scalable, meaning that you can connect any number of these chips together to get larger and larger computers.

There are similar projects underway, such as the Stanford effort above. There is also SpiNNaker, which uses a similar massively parallel architecture but boasts flexible synapses, rather than hard-wired connections. Movidius takes another approach. Their chip is less flexible and is dedicated to video processing, but it is also more energy efficient.

We seem to be at a time when multiple teams are developing neuromorphic architectures for computers. Until now computers have mostly relied upon the 70-year-old von Neumann design. The shift to a different architecture has potential advantages. Massively parallel processing has the potential to be faster, more efficient, more adaptable, and more scalable.

We appear to be in the phase of experimentation and competition (or adaptive radiation, in evolutionary terms). Think of personal computing in the early 1980s. It’s likely that either one or a very few designs will emerge as the victor, if any do. It does seem likely that some version of neuromorphic computing will be in our future, unless some other model leapfrogs this technology.

It is also still very much an open question what the applications of neuromorphic computing will be. There is a huge barrier to adopting this design for the average personal computer: new software needs to be written from scratch. However, during the transition it is possible for someone to create a virtual environment in which traditional software can run. Still, a completely new architecture will have to offer substantial advantages to be worth the bother.

It is more likely that neuromorphic computers will find their way into niche applications. There are some things that traditional computing does well, like much of what most people use their computers for. Other tasks, however, such as image recognition, are more efficiently performed by massively parallel architectures. Like the Movidius chip, we may increasingly see dedicated neuromorphic chips in devices, rather than powering our desktops.

And of course, we have to ask if such neuromorphic computing brings us any closer to AI. That’s a complex question. I will just say that I think it’s likely future AI will use an architecture much more similar to current neuromorphic chips than to traditional von Neumann chips.